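We have finally started translating a book about the Spark framework.

Today we would like to draw your attention to a translation of an overview article on what Spark can do.

I first heard of Spark in late 2013, when I became interested in Scala, the language Spark is written in. A little later, for fun, I started a data science project devoted to predicting the survival of Titanic passengers. It turned out to be a great way to get to grips with Spark programming and its concepts. I strongly recommend that every aspiring Spark developer take a look at it.

Today Spark is used by many major companies, including Amazon, eBay, and Yahoo!. Many organizations run Spark on clusters of thousands of nodes. According to the Spark FAQ, the largest of these clusters has more than 8,000 nodes. Spark is clearly a technology worth noticing and exploring.

This article gives an overview of Spark, along with use cases and code samples.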

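What Is Apache Spark? An Introduction

Spark is an Apache project positioned as a tool for "lightning-fast cluster computing". It is developed by a thriving open-source community and, at the time of writing, is the most active of the Apache projects.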

Spark provides a fast, general-purpose data processing platform. Compared with Hadoop, it is claimed to run programs up to 100 times faster in memory and up to 10 times faster on disk.

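Spark code is also quicker to write, because more than 80 high-level operators are at your disposal. To see this, consider the "Hello World!" of the Big Data world: the word count example. A program written in Java for MapReduce takes about 50 lines of code, whereas in Spark (with Scala) this is all you need: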

    sparkContext.textFile("hdfs://...")
      .flatMap(line => line.split(" "))
      .map(word => (word, 1))
      .reduceByKey(_ + _)
      .saveAsTextFile("hdfs://...")

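Another important aspect of learning Apache Spark is the interactive shell (REPL) that it provides out of the box. With the REPL you can test the result of each line of code without first having to write and run the entire job. Working code can therefore be written much faster, and ad hoc data analysis becomes possible.

Spark also has the following key features:

- Currently provides APIs in Scala, Java, and Python, with support for other languages (such as R) on the way

- Integrates well with the Hadoop ecosystem and data sources (HDFS, Amazon S3, Hive, HBase, Cassandra, and so on)

- Runs on clusters managed by Hadoop YARN or Apache Mesos, and can also run standalone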

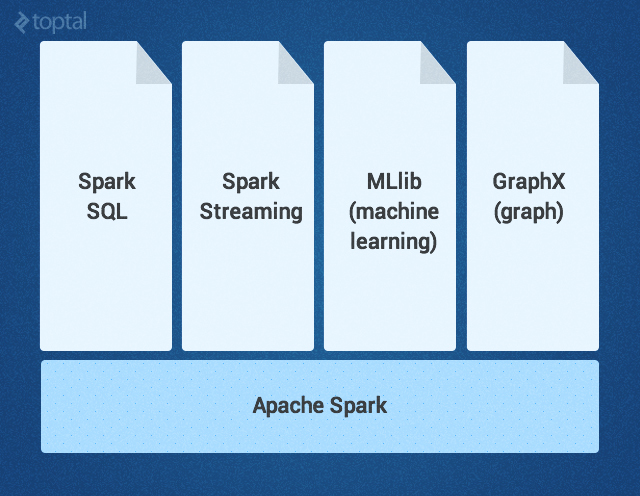

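The Spark core is complemented by a set of powerful, higher-level libraries that can be combined seamlessly within the same application. These libraries currently include SparkSQL, Spark Streaming, MLlib (for machine learning), and GraphX, each of which is described in more detail in this article. Additional Spark libraries and extensions are under development as well.

Spark Core

Spark Core is the base engine for large-scale parallel and distributed data processing. It is responsible for:

- Memory management and fault recovery

- Scheduling, distributing, and monitoring jobs on a cluster

- Interacting with storage systems

Spark introduces the concept of an RDD (Resilient Distributed Dataset): an immutable, fault-tolerant, distributed collection of objects that can be processed in parallel. An RDD can contain objects of any type. It is created either by loading an external dataset or by distributing a collection from the driver program. RDDs support two types of operations:

- Transformations are operations performed on an RDD (such as map, filter, join, and union) that yield a new RDD containing the result.

- Actions are operations (such as reduce and count) that return a value obtained by running a computation on an RDD.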
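The difference between the two kinds of operations can be sketched as follows. This is a minimal illustration, assuming Spark is on the classpath and a local master is acceptable for experimenting; the object name `RddOperationsSketch`, the helper `evenSquareStats`, and the sample numbers are made up for this example and do not come from the original article.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object RddOperationsSketch {
  /** Returns (how many squares of 1..n are even, their sum). Assumes n >= 2. */
  def evenSquareStats(sc: SparkContext, n: Int): (Long, Int) = {
    val numbers = sc.parallelize(1 to n)      // RDD created from a driver-side collection
    val squares = numbers.map(x => x * x)     // transformation: yields a new RDD
    val evens   = squares.filter(_ % 2 == 0)  // transformation: yields a new RDD
    // Actions: each one runs a computation and returns a plain value to the driver.
    (evens.count(), evens.reduce(_ + _))
  }

  def main(args: Array[String]): Unit = {
    // "local[*]" is an assumption for local experiments, not a production setting.
    val conf = new SparkConf().setAppName("rdd-operations-sketch").setMaster("local[*]")
    val sc = new SparkContext(conf)
    try println(evenSquareStats(sc, 10))
    finally sc.stop()
  }
}
```

Here `map` and `filter` only describe new RDDs, while `count` and `reduce` are the calls that hand values back to the driver program.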

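Transformations in Spark are "lazy", meaning that the result is not computed right after the transformation is declared. Instead, Spark simply "remembers" the operation to be performed and the dataset (for example, a file) it is to be performed on. Transformations are computed only when an action is called, and the result is returned to the driver program. This design makes Spark more efficient. For example, if a large file is transformed in various ways and then passed to a first action, Spark processes only the first line and returns the result, rather than doing the work for the entire file.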
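The idea of laziness can be tried out without a cluster. The sketch below is a plain-Scala analogy built on the standard library's lazy `Iterator`, not Spark code: it declares a transformation, pulls a single element, and reports how many lines were actually transformed. In Spark the corresponding calls would be `textFile`, `map`, and `first`. The names `LazyPipelineSketch` and `firstTransformed` are hypothetical, and the input is assumed to be non-empty.

```scala
object LazyPipelineSketch {
  /** Returns the first transformed line and how many lines were actually transformed. */
  def firstTransformed(lines: Seq[String]): (String, Int) = {
    var transformed = 0
    // Declaring the pipeline does no work yet: Iterator.map is lazy.
    val pipeline = lines.iterator.map { line =>
      transformed += 1
      line.trim.toUpperCase
    }
    // Pulling a single element plays the role of an action such as first().
    val head = pipeline.next()
    (head, transformed)
  }

  def main(args: Array[String]): Unit = {
    val (head, transformed) = firstTransformed(Seq("first line", "second line", "third line"))
    println(s"$head (lines transformed: $transformed)") // prints "FIRST LINE (lines transformed: 1)"
  }
}
```

Only one of the three lines is ever transformed, which mirrors the behaviour described above for a large file followed by a first action.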

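By default, each transformed RDD may be recomputed every time you run a new action on it. However, an RDD can also be kept in memory by calling the persist or cache method. In that case Spark keeps the elements around on the cluster, so subsequent queries against them are much faster.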
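A minimal sketch of persisting an RDD that is used by more than one action is shown below. It assumes Spark is on the classpath; the object name `CachingSketch`, the helper `errorStats`, and the "ERROR" log-line convention are assumptions made for illustration only.

```scala
import org.apache.spark.SparkContext
import org.apache.spark.storage.StorageLevel

object CachingSketch {
  /** Returns (number of error lines, number of distinct error lines). */
  def errorStats(sc: SparkContext, lines: Seq[String]): (Long, Long) = {
    val errors = sc.parallelize(lines).filter(_.contains("ERROR"))
    // Ask Spark to keep the filtered elements in memory once they are computed.
    // errors.cache() is shorthand for this storage level.
    errors.persist(StorageLevel.MEMORY_ONLY)
    val total    = errors.count()            // first action: computes and stores the RDD
    val distinct = errors.distinct().count() // second action: can reuse the stored elements
    errors.unpersist()                       // release the memory when done
    (total, distinct)
  }
}
```

Without the `persist` call, the filter would typically be re-run for the second action; with it, the second action can read the elements already held on the cluster.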

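SparkSQL

SparkSQL is a Spark component that supports querying data with either SQL or the Hive Query Language. It originated as a port of Apache Hive to run on top of Spark (in place of MapReduce) and is now integrated with the Spark stack. In addition to supporting a variety of data sources, it lets you weave SQL queries together with code transformations, which makes it a very powerful tool. Below is an example of a Hive-compatible query: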

    // sc is an existing SparkContext.
    val sqlContext = new org.apache.spark.sql.hive.HiveContext(sc)

    sqlContext.sql("CREATE TABLE IF NOT EXISTS src (key INT, value STRING)")
    sqlContext.sql("LOAD DATA LOCAL INPATH 'examples/src/main/resources/kv1.txt' INTO TABLE src")

    // Queries are expressed in HiveQL.
    sqlContext.sql("FROM src SELECT key, value").collect().foreach(println)

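Spark Streaming

Spark Streaming supports real-time processing of streaming data. Such data includes log files from production web servers (for example, via Apache Flume and HDFS/S3), information from social networks such as Twitter, and various message queues such as Kafka. Under the hood, Spark Streaming receives the input data streams and divides the data into batches. These are then processed by the Spark engine, which generates the final stream of results, also in batches.

The Spark Streaming API closely matches the Spark Core API, which makes it easy for programmers to work with both batch and streaming data.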
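One way to see how close the two APIs are is to write the logic once against RDDs and reuse it on a stream. The sketch below assumes the `spark-streaming` module is on the classpath and a reasonably recent Spark version; the object and method names are hypothetical. It relies on `DStream.transform`, which applies an RDD-to-RDD function to every batch of the stream.

```scala
import org.apache.spark.rdd.RDD
import org.apache.spark.streaming.dstream.DStream

object SharedWordCountSketch {
  /** Batch logic: counts the words in an RDD of text lines. */
  def countWords(lines: RDD[String]): RDD[(String, Int)] =
    lines.flatMap(_.split(" "))
      .filter(_.nonEmpty)
      .map(word => (word, 1))
      .reduceByKey(_ + _)

  /** Streaming logic: applies exactly the same function to each batch of the stream. */
  def countWordsPerBatch(lines: DStream[String]): DStream[(String, Int)] =
    lines.transform((rdd: RDD[String]) => countWords(rdd))
}
```

In a batch job `countWords` could be fed the result of `sc.textFile`, while in a streaming job `countWordsPerBatch` could be fed a stream such as the one returned by `StreamingContext.socketTextStream`; the counts in the streaming case are per batch, not cumulative.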

MLlib

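MLlib is a machine learning library that provides a variety of algorithms designed to scale out on a cluster, covering classification, regression, clustering, collaborative filtering, and so on. Some of these algorithms also work with streaming data, for example linear regression using ordinary least squares and k-means clustering (with more to come). Apache Mahout (a machine learning library for Hadoop) has already moved away from MapReduce and is now being developed in cooperation with Spark MLlib.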
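As a small taste of the library, here is a hedged sketch of batch k-means clustering with the RDD-based `spark.mllib` API. It assumes the `spark-mllib` module is on the classpath; the object name `KMeansSketch`, the helper `clusterIds`, and the choice of 20 iterations are illustrative assumptions.

```scala
import org.apache.spark.SparkContext
import org.apache.spark.mllib.clustering.KMeans
import org.apache.spark.mllib.linalg.Vectors

object KMeansSketch {
  /** Clusters 2-D points into k groups and returns the cluster index of each input point. */
  def clusterIds(sc: SparkContext, points: Seq[(Double, Double)], k: Int): Seq[Int] = {
    // MLlib expects an RDD of feature vectors; cache it because training is iterative.
    val vectors = sc.parallelize(points.map { case (x, y) => Vectors.dense(x, y) }).cache()
    val maxIterations = 20 // arbitrary value chosen for this sketch
    val model = KMeans.train(vectors, k, maxIterations)
    // Ask the trained model which cluster each original point belongs to.
    points.map { case (x, y) => model.predict(Vectors.dense(x, y)) }
  }
}
```

The cluster indices themselves are arbitrary labels; what matters is which points end up sharing the same index.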

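GraphX

GraphX is a library for manipulating graphs and performing graph-parallel operations. It provides a uniform tool for ETL, exploratory analysis, and iterative graph computations. Apart from built-in operations for graph manipulation, it also provides a library of common graph algorithms such as PageRank.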
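A minimal sketch of running the built-in PageRank on a tiny graph might look as follows. It assumes the `spark-graphx` module is on the classpath; the object name `PageRankSketch`, the helper `ranks`, the dummy edge attribute, and the tolerance value are assumptions made for this example.

```scala
import org.apache.spark.SparkContext
import org.apache.spark.graphx.{Edge, Graph}

object PageRankSketch {
  /** Builds a directed graph from (source, destination) pairs and returns a PageRank score per vertex id. */
  def ranks(sc: SparkContext, links: Seq[(Long, Long)]): Map[Long, Double] = {
    // Each link becomes an edge; the attribute 1 is a placeholder that PageRank does not use.
    val edges = sc.parallelize(links.map { case (src, dst) => Edge(src, dst, 1) })
    // Vertices are created implicitly from the edge endpoints, with 0 as a dummy attribute.
    val graph = Graph.fromEdges(edges, defaultValue = 0)
    // Iterate until the ranks change by less than the given tolerance.
    graph.pageRank(tol = 0.0001).vertices.collect().toMap
  }
}
```

For the links `(1,2), (2,3), (3,1), (4,1)`, vertex 4 has no incoming links, so it should receive a lower score than vertex 1, which is linked to by two other vertices.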

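How to Use Apache Spark: An Event Detection Example

Now that we know what Apache Spark is, let's think about which tasks and problems it solves most effectively.

I recently came across an article about an experiment in detecting earthquakes by analyzing the Twitter stream. Incidentally, the article showed that this approach can tell you about an earthquake sooner than the reports of the Japan Meteorological Agency. The technology described in that article is not Spark, but the example is interesting precisely in the context of Spark: it shows how one can work with simplified code snippets and without glue code.

First, we need to filter the tweets that seem relevant to us, for example those that mention "earthquake" or "shaking". This is easy to do with Spark Streaming, as follows: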

    TwitterUtils.createStream(...)
      .filter(status => status.getText.contains("earthquake") || status.getText.contains("shaking"))

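Next, we need to run some semantic analysis on the tweets to determine whether the tremors they talk about are happening right now. Tweets such as "Earthquake!" or "Now it is shaking" would be considered positive matches, whereas "Attending an earthquake conference" or "The earthquake yesterday was scary" would be considered negative. The authors of the article used a support vector machine (SVM) for this purpose. We will do the same, and a streaming version could be tried as well. The resulting sample code with MLlib would look like this: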

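    import org.apache.spark.mllib.classification.SVMWithSGD
    import org.apache.spark.mllib.evaluation.BinaryClassificationMetrics
    import org.apache.spark.mllib.util.MLUtils

    // Prepare some earthquake tweet data and load it in LIBSVM format.
    val data = MLUtils.loadLibSVMFile(sc, "sample_earthquate_tweets.txt")

    // Split the data into training (60%) and test (40%) sets.
    val splits = data.randomSplit(Array(0.6, 0.4), seed = 11L)
    val training = splits(0).cache()
    val test = splits(1)

    // Run the training algorithm to build the model.
    val numIterations = 100
    val model = SVMWithSGD.train(training, numIterations)

    // Clear the default threshold so that predict returns raw scores.
    model.clearThreshold()

    // Compute raw scores on the test set.
    val scoreAndLabels = test.map { point =>
      val score = model.predict(point.features)
      (score, point.label)
    }

    // Get the evaluation metrics.
    val metrics = new BinaryClassificationMetrics(scoreAndLabels)
    val auROC = metrics.areaUnderROC()

    println("Area under ROC = " + auROC)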

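If we are happy with the model's prediction rate, we can move on to the next stage: reacting to a detected earthquake. To detect one, we need a certain number (that is, density) of positive tweets received within a defined time window (see the article). Note that if the tweets carry geolocation information, we can also determine the location of the earthquake. Armed with this knowledge, we can use SparkSQL to query an existing Hive table (which stores data about users who want to receive earthquake notifications), retrieve their email addresses, and send them personalized alerts.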
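The density rule itself is easy to prototype before wiring it into a streaming job. The following is a plain-Scala sketch, not taken from the article being discussed: the object name `TweetDensitySketch`, the function `quakeSuspected`, and any concrete window length or threshold are hypothetical and would need tuning on real data. In a Spark Streaming job, a windowed operation such as `window` on a DStream could play the same role.

```scala
object TweetDensitySketch {
  /**
   * True when at least `minTweets` positive tweets fall inside some time window
   * of length `windowMillis`. Timestamps are epoch milliseconds, in any order.
   */
  def quakeSuspected(timestamps: Seq[Long], windowMillis: Long, minTweets: Int): Boolean = {
    require(windowMillis > 0 && minTweets > 0, "window length and threshold must be positive")
    val sorted = timestamps.sorted.toVector
    // Look at every run of `minTweets` consecutive tweets: if the first and the last
    // of a run are less than one window apart, the whole run fits into a single window.
    sorted.sliding(minTweets).exists { group =>
      group.size == minTweets && group.last - group.head < windowMillis
    }
  }

  def main(args: Array[String]): Unit = {
    // Three positive tweets within 2 seconds, checked against a 10-second window.
    println(quakeSuspected(Seq(0L, 1000L, 2000L, 60000L), windowMillis = 10000L, minTweets = 3)) // prints "true"
  }
}
```

Checking consecutive runs of the sorted timestamps is sufficient, because any window that holds `minTweets` tweets also holds `minTweets` consecutive ones.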

    // sc is an existing SparkContext.
    val sqlContext = new org.apache.spark.sql.hive.HiveContext(sc)

    // sendEmail is a user-defined function (not shown here).
    sqlContext.sql("FROM earthquake_warning_users SELECT firstName, lastName, city, email")
      .collect().foreach(sendEmail)

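Other Apache Spark Use Cases

Of course, the potential uses of Spark are by no means limited to seismology.

Below is an indicative (that is, far from exhaustive) selection of other practical situations that call for fast, varied, and high-volume processing of Big Data, for which Spark is very well suited.

In the game industry: processing and discovering patterns in game events that arrive in real time as a steady stream. Being able to respond to them immediately can be a lucrative capability, for purposes such as player retention, targeted advertising, and automatic adjustment of the difficulty level.

In e-commerce, real-time transaction information can be passed to a streaming clustering algorithm such as k-means, or to a collaborative filtering algorithm such as ALS. The results can then be combined with information from other unstructured data sources (for example, customer comments or product reviews). Over time, this information can be used to improve recommendations in line with new trends.

In the finance sector or for security purposes, the Spark stack can be used to detect fraud or intrusions, or to perform risk-based authentication. The best results can be achieved by collecting huge volumes of archived logs and combining them with external data sources, such as information about data breaches and compromised accounts (see https://haveibeenpwned.com/), and with information about the connection or request, such as IP geolocation or time data.

Conclusion

To sum up, Spark helps simplify demanding, computationally intensive tasks that involve processing large volumes of data, both real-time and archived, both structured and unstructured. Spark provides seamless integration of complex capabilities such as machine learning and graph algorithms. Spark brings Big Data processing to the masses. Give it a try: you will not regret it!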