Getting a detailed understanding of this topic usually means reading and piecing together several articles, which takes quite a lot of time. This material puts it all in one place, which should save time, and in my opinion the analysis turned out to be fairly detailed.

What this article covers: how single-byte encodings work (ASCII, Windows-1251, etc.), why Unicode appeared, what Unicode is, the Unicode encodings UTF-8 and UTF-16 and how they differ, their fundamental features, compatibility and incompatibility between encodings, the principles of character encoding, and a hands-on walkthrough of encoding and decoding.

The encoding problem has certainly become less pressing today, but I don't think it is superfluous to know how encodings work now and how they worked before.

Unicode Prerequisites

I think it's worth starting from the time when computers were not yet widespread and were only gaining momentum. Back then, developers and standards bodies did not expect computers and the Internet to become so hugely popular. That is when text encoding became necessary: letters had to be stored somehow in a computer, which understands only ones and zeros. This is how the one-byte ASCII encoding came about (it was probably not the first encoding, but it is the most common and representative one, so we will use it as our reference). How does it work? Each character is stored in one byte, that is, 8 bits. A byte can hold 2^8 = 256 different combinations of zeros and ones, so it has room for 256 characters. Strictly speaking, standard ASCII itself defines only the first 128 of them and uses 7 bits.

The first 128 values (2^7 = 128, everything that fits in 7 bits) were given to Latin letters, control characters (such as line breaks and tabs) and punctuation. The rest were left for national languages. So the first 128 characters are always the same, and if you want to encode your native language, you use the remaining capacity. As a result, a huge zoo of national encodings appeared. Now imagine that I am in Russia and create a text document. By default it is saved in Windows-1251 (the Russian encoding used in Windows), and I send it to someone, say, in the USA. Even if my correspondent knows Russian, it will not help him. When he opens my document on his computer in an editor whose default encoding is, say, ASCII, he will see gibberish instead of Russian letters (Russians call this "krakozyabry"). To be precise, the parts of the document that I wrote in English will display without problems, because the first 128 characters of Windows-1251 and ASCII are the same. The Russian text, however, will turn into gibberish unless he selects the correct encoding in his editor.

I think the problem with national encodings is clear. There are a great many of them, the Internet grew enormously, and everyone on it wanted to write in their own language without that language turning into gibberish. There were two ways out: specify the encoding for every page, or create one common table of characters for the whole world. The second option won, and so the Unicode character table was created.

A small ASCII workshop

It may seem elementary, but since I decided to explain everything in detail, it is necessary.

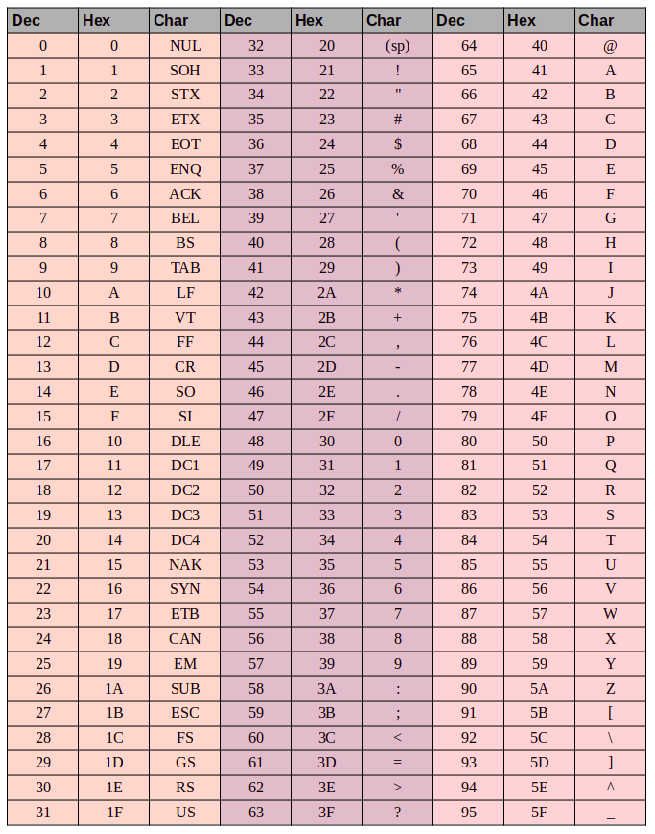

Here is the ASCII character table:

Here we have 3 columns:

- decimal character number

- character number in hexadecimal format

- the character itself.

So, let's encode the string "ok" (English) in ASCII. The character "o" is at position 111 in decimal, 6F in hexadecimal, which is 01101111 in binary. The character "k" is at position 107 in decimal, 6B in hexadecimal, which is 01101011 in binary. So the string "ok" encoded in ASCII looks like this:

01101111 01101011

Decoding is the reverse process. Take 8 bits and convert them to a decimal number to get the character's position, then look up which character sits at that position in the table.
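
The same round trip can be sketched in Python 3 as an illustration. The helper names `to_ascii_bits` and `from_ascii_bits` are made up for this example. `format(byte, "08b")` always prints all 8 bits, including leading zeros.

```python
def to_ascii_bits(text: str) -> str:
    """Encode text as ASCII and show each byte as 8 binary digits."""
    # str.encode("ascii") raises UnicodeEncodeError for non-ASCII characters
    return " ".join(format(byte, "08b") for byte in text.encode("ascii"))


def from_ascii_bits(bits: str) -> str:
    """Reverse: read 8-bit groups, turn each into a number, look the number up."""
    return bytes(int(group, 2) for group in bits.split()).decode("ascii")


encoded = to_ascii_bits("ok")
print(encoded)                   # 01101111 01101011
print(from_ascii_bits(encoded))  # ok
```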

Unicode

With the prerequisites for a common table of all the world's characters sorted out, let's move on to the table itself. Unicode is exactly that: a table of characters, not an encoding. It has 1,114,112 positions. Most of them have not been filled with characters yet, so it is unlikely that this space will ever need to be expanded.

This whole space is divided into 17 blocks (officially called planes) of 65,536 positions each. Each block contains its own group of characters. Block zero is the basic one: it contains the most frequently used characters of all modern alphabets. The second block contains, among other things, the characters of extinct scripts. Two blocks are reserved for private use. Most blocks are still empty.

The full range of Unicode positions runs from 0 to 10FFFF (in hexadecimal).

Positions (code points) are written in hexadecimal with the prefix "U+". For example, the first, basic block covers U+0000 to U+FFFF (0 to 65,535), and the last, seventeenth block covers U+100000 to U+10FFFF (1,048,576 to 1,114,111).

So instead of a zoo of national encodings, we now have one comprehensive table containing every character we might need. But there is a drawback. Previously each character was encoded with one byte, but now a character may need a different number of bytes. One byte is still enough for all the characters of the English alphabet. For example, the character "o" is U+006F in Unicode, the same number as in ASCII (6F in hexadecimal, 111 in decimal). But the character U+103D5 (the Old Persian number one hundred) has the number 103D5 in hexadecimal, or 66,517 in decimal, and storing that number takes three bytes.
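
To see the numbers behind this paragraph, here is a small Python 3 sketch. The helper `raw_byte_count` is hypothetical. It only measures how many bytes the *number* of a code point needs when stored as a plain integer. That is not an encoding yet, and UTF-8 and UTF-16 fill exactly that gap.

```python
def raw_byte_count(ch: str) -> int:
    """Bytes needed to store a character's Unicode number as a plain integer."""
    code_point = ord(ch)  # position in the Unicode table
    return (code_point.bit_length() + 7) // 8


for ch in ("o", "\U000103D5"):  # Latin "o" and the Old Persian number one hundred
    cp = ord(ch)
    print(f"U+{cp:04X} = {cp} -> {raw_byte_count(ch)} byte(s)")
# U+006F = 111 -> 1 byte(s)
# U+103D5 = 66517 -> 3 byte(s)
```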

Unicode encodings such as UTF-8 and UTF-16 solve this problem. We will talk about them next.

UTF-8

UTF-8 is a variable length Unicode encoding that can be used to represent any Unicode character.

Let's look more closely at what variable length means. First, the structural (atomic) unit of this encoding is the byte. Variable length means that a single character can be encoded with a different number of these units, that is, with a different number of bytes. For example, Latin letters are encoded in one byte and Cyrillic letters in two.
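
A quick way to observe variable length is to ask Python 3's built-in UTF-8 codec how many bytes each character takes. The helper `utf8_length` and the choice of sample characters are just for illustration. The four samples print 1, 2, 3 and 4 bytes respectively.

```python
def utf8_length(ch: str) -> int:
    """Number of bytes UTF-8 uses for a single character."""
    return len(ch.encode("utf-8"))


samples = [
    ("o", "Latin"),
    ("\u043c", "Cyrillic"),                 # "м"
    ("\u20ac", "euro sign"),                # "€"
    ("\U000103D5", "Old Persian hundred"),
]
for ch, label in samples:
    print(f"{label:20} U+{ord(ch):04X}: {utf8_length(ch)} byte(s), {ch.encode('utf-8').hex(' ')}")
```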

A short digression about the compatibility of ASCII and UTF-8.

Because Latin characters and the basic control characters (line breaks, tabs, etc.) are encoded with one byte, UTF-8 is compatible with ASCII. The Latin letters and control characters sit at the same positions in both ASCII and Unicode. Both ASCII and UTF-8 also encode them with a single byte, so the bytes are identical.

Take the character "o" from the ASCII example above. In the ASCII table it is at position 111, which in bit form is 01101111. In the Unicode table this character is U+006F, which is also 01101111 in bit form. Since UTF-8 is a variable-length encoding, this character takes exactly one byte in it, so its representation is the same in both encodings. The same holds for the entire range of characters from 0 to 127. If your document consists of English text, you will not notice any difference between opening it as UTF-8 or as ASCII, until you start working with a national alphabet. UTF-16 is different: as described below, it uses at least two bytes per character, so even English text in UTF-16 is not byte-for-byte compatible with ASCII.
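
The compatibility claim can be checked with a short Python 3 sketch. The sample text is arbitrary. `utf-16-be` is Python's big-endian UTF-16 codec without a byte order mark.

```python
text = "Hello, world!\n"

ascii_bytes = text.encode("ascii")
utf8_bytes = text.encode("utf-8")
utf16_bytes = text.encode("utf-16-be")  # big-endian, no byte order mark

print(ascii_bytes == utf8_bytes)   # True: byte-for-byte identical
print(utf16_bytes[:4].hex(" "))    # 00 48 00 65: two bytes per character
print(len(text), len(utf8_bytes), len(utf16_bytes))  # 14 14 28
```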

Let's compare in practice what the phrase "Hello мир" ("мир" is Russian for "world") looks like in three encodings. They are Windows-1251 (the Russian encoding), ISO-8859-1 (the encoding for Western European languages) and UTF-8 (a Unicode encoding). The point of this example is that the phrase mixes two languages.

ISO-8859-1 simply has no characters "м", "и" and "р".

Now let's work with the encodings and see how to convert a string from one encoding to another. We will also see what happens if the conversion is done incorrectly, or is impossible because the encodings differ.

Assume the phrase was originally encoded in Windows-1251. Using the Windows-1251 code table, we write the phrase in binary form. All we need to do is convert each character's position from decimal or hexadecimal to binary.

01001000 01100101 01101100 01101100 01101111 00100000 11101100 11101000 11110000

So this is the phrase "Hello мир" encoded in Windows-1251.
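
Python 3 ships a `cp1251` codec (its name for Windows-1251), so the bit string above can be reproduced directly. The phrase is written with `\u` escapes so that the Cyrillic letters cannot be confused with look-alike Latin ones.

```python
phrase = "Hello \u043c\u0438\u0440"  # "Hello мир"

# Python's "cp1251" codec is Windows-1251
cp1251_bytes = phrase.encode("cp1251")
bits = " ".join(format(byte, "08b") for byte in cp1251_bytes)
print(bits)
# 01001000 01100101 01101100 01101100 01101111 00100000 11101100 11101000 11110000
print(list(cp1251_bytes[-3:]))  # [236, 232, 240]
```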

Now imagine that you have a file with this text but don't know its encoding. You assume it is ISO-8859-1 and open it in your editor with that encoding. As mentioned above, some of the characters are fine: they exist in this encoding and even sit at the same positions. The characters of the word "мир" are a different story. They do not exist in ISO-8859-1, and completely different characters occupy their positions. Specifically, in Windows-1251 "м" is at position 236, "и" at 232 and "р" at 240. In ISO-8859-1 those positions hold "ì" (236), "è" (232) and "ð" (240).

So the phrase "Hello мир", encoded in Windows-1251 and opened as ISO-8859-1, looks like this: "Hello ìèð". These two encodings are only partially compatible. A string cannot be correctly converted from one to the other, because the target encoding simply has no such characters.
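
Both failure modes can be sketched in Python 3, where `latin-1` is the codec name for ISO-8859-1. Decoding the Windows-1251 bytes as Latin-1 never raises an error; it just picks the wrong letters. Encoding the Cyrillic text into Latin-1 fails outright.

```python
cp1251_bytes = "Hello \u043c\u0438\u0440".encode("cp1251")  # "Hello мир"

# Every byte maps to *some* Latin-1 character, just not the intended one
misread = cp1251_bytes.decode("latin-1")
print(misread)  # Hello ìèð

# The reverse direction fails: ISO-8859-1 has no positions for these letters
try:
    "Hello \u043c\u0438\u0440".encode("latin-1")
    convertible = True
except UnicodeEncodeError:
    convertible = False
print(convertible)  # False
```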

This is where Unicode encodings come in, and in this case we will consider UTF-8. We already know that characters in it take a varying number of bytes, from 1 to 4. It is worth adding that UTF-8 can encode not just 256 characters, like the previous two encodings, but every Unicode character.

It works as follows. The leading bits of each byte do not carry the character itself. They are control bits that describe the byte. For example, if the leading (first) bit is zero, only one byte is used to encode the character, and this is what provides compatibility with ASCII. Look carefully at the ASCII table and convert the first 128 characters (English alphabet, control characters and punctuation) to binary: all of them begin with a zero bit. Be careful if you use, for example, an online converter, because it may drop the leading zero bit, which can be confusing.

- 01001000: the first bit is zero, so 1 byte encodes 1 character -> "H"

- 01100101: the first bit is zero, so 1 byte encodes 1 character -> "e"

If the first bit is not zero, then the character is encoded in several bytes.

For two-byte characters, the first byte starts with the three bits 110:

110 10000 10 111100

The first byte begins with 110, so 2 bytes encode 1 character. The second byte always begins with 10. Discard these control bits and join all the remaining ones: 10000111100. In hexadecimal this is 43C, that is, U+043C in Unicode, the character "м".
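
The same two-byte decoding can be done with bit masks. This is a minimal Python 3 sketch for this one example, not a general decoder. Python's own UTF-8 decoder is used only as a cross-check.

```python
first, second = 0b11010000, 0b10111100  # the two bytes from the example

# Check the control bits: 110xxxxx for the lead byte, 10xxxxxx for the continuation byte
if first >> 5 != 0b110 or second >> 6 != 0b10:
    raise ValueError("not a two-byte UTF-8 sequence")

# Keep 5 payload bits from the first byte and 6 from the second, then join them
code_point = ((first & 0b00011111) << 6) | (second & 0b00111111)
print(f"U+{code_point:04X}")                                      # U+043C
print(bytes([first, second]).decode("utf-8") == chr(code_point))  # True
```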

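For three-byte characters, the first byte starts with the bits 1110:

1110 0010 10 000010 10 101100

Dropping the control bits leaves 0010000010101100, which is 20AC in hexadecimal: U+20AC, the euro sign "€". Three bytes carry at most 4 + 6 + 6 = 16 payload bits, which is exactly enough for the basic block U+0000 to U+FFFF.

For four-byte characters, the first byte starts with the bits 11110:

11110 100 10 001111 10 111111 10 111111

Without the control bits this is 100001111111111111111, that is, U+10FFFF, the last valid position in the Unicode table. Four bytes carry 3 + 6 + 6 + 6 = 21 payload bits, enough for the whole table. This is also where the Old Persian number one hundred belongs. U+103D5 has 17 significant bits (10000001111010101), more than a three-byte sequence can hold, so in UTF-8 it takes four bytes:

11110 000 10 010000 10 001111 10 010101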
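
The byte patterns above (0xxxxxxx, 110xxxxx 10xxxxxx, 1110xxxx 10xxxxxx 10xxxxxx, 11110xxx 10xxxxxx 10xxxxxx 10xxxxxx) can be turned into a small encoder. This is a minimal Python 3 sketch for learning purposes. The function name `utf8_encode_char` is hypothetical, and in real code you would simply call `str.encode("utf-8")`.

```python
def utf8_encode_char(cp: int) -> bytes:
    """Encode one code point using the UTF-8 bit patterns."""
    if cp < 0x80:                      # 0xxxxxxx
        return bytes([cp])
    if cp < 0x800:                     # 110xxxxx 10xxxxxx
        return bytes([0b11000000 | (cp >> 6),
                      0b10000000 | (cp & 0x3F)])
    if cp < 0x10000:                   # 1110xxxx 10xxxxxx 10xxxxxx
        # (a strict encoder would also reject the surrogate range D800-DFFF here)
        return bytes([0b11100000 | (cp >> 12),
                      0b10000000 | ((cp >> 6) & 0x3F),
                      0b10000000 | (cp & 0x3F)])
    if cp <= 0x10FFFF:                 # 11110xxx 10xxxxxx 10xxxxxx 10xxxxxx
        return bytes([0b11110000 | (cp >> 18),
                      0b10000000 | ((cp >> 12) & 0x3F),
                      0b10000000 | ((cp >> 6) & 0x3F),
                      0b10000000 | (cp & 0x3F)])
    raise ValueError("beyond U+10FFFF, the end of the Unicode table")


for cp in (0x48, 0x43C, 0x20AC, 0x103D5, 0x10FFFF):
    encoded = utf8_encode_char(cp)
    # Compare with Python's built-in encoder
    print(f"U+{cp:04X}:", " ".join(format(b, "08b") for b in encoded),
          encoded == chr(cp).encode("utf-8"))
```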

Now, if we like, we can write our phrase in UTF-8.
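
Here is one way to do that in Python 3, using the built-in UTF-8 codec. The Latin part keeps its ASCII bytes, and each Cyrillic letter becomes a two-byte sequence starting with 110.

```python
phrase = "Hello \u043c\u0438\u0440"  # "Hello мир"
utf8_bytes = phrase.encode("utf-8")

print(" ".join(format(byte, "08b") for byte in utf8_bytes))
print(utf8_bytes.hex(" "))  # 48 65 6c 6c 6f 20 d0 bc d0 b8 d1 80
print(len(phrase), "characters,", len(utf8_bytes), "bytes")  # 9 characters, 12 bytes
```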

UTF-16

UTF-16 is also a variable-length encoding. Its main difference from UTF-8 is that its structural unit is not one byte but two. So in UTF-16 any Unicode character is encoded with either two or four bytes. From here on I will call such a pair of bytes a code pair (the standard term is "code unit"). Any Unicode character encoded in UTF-16 therefore takes either one code pair or two.

Let's start with the characters that are encoded by one code pair. It is easy to calculate that there can be at most 65,536 (2^16) of them, which exactly matches the basic Unicode block. Every character of this block is encoded in UTF-16 with one code pair (two bytes), so everything is simple here.

- the character "o" (Latin, U+006F): 00000000 01101111

- the character "М" (Cyrillic, U+041C): 00000100 00011100
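
These bit strings can be reproduced in Python 3 with the `utf-16-be` codec. The bits in the text are written big-endian, with the high byte first. Python's plain `utf-16` codec would instead prepend a byte order mark and use the machine's native byte order.

```python
for ch in ("o", "\u041c"):  # Latin "o" and Cyrillic capital "М"
    # big-endian: high byte first, no byte order mark
    code_pair = ch.encode("utf-16-be")
    print(f"U+{ord(ch):04X}:", " ".join(format(byte, "08b") for byte in code_pair))
# U+006F: 00000000 01101111
# U+041C: 00000100 00011100
```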

Now consider characters outside the basic Unicode block. Encoding them requires two code pairs (4 bytes), and the mechanism is a bit more complicated, so let's take it step by step.

First, let's introduce the concept of a surrogate pair: two code pairs used together to encode one character (4 bytes in total). The Unicode table reserves a special range for them, from D800 to DFFF. So if a code pair converted from bytes to hexadecimal gives a number in this range, it is not a character on its own but half of a surrogate pair.

To encode a character from the range 10000 to 10FFFF (that is, a character that needs more than one code pair), you need to:

- subtract 10000 (hexadecimal) from the character code (10000 is the smallest number in the range 10000 to 10FFFF)

- the first step yields a number no greater than FFFFF, occupying up to 20 bits

- add the leading 10 bits of this number to D800 (the start of the surrogate range in Unicode)

- add the trailing 10 bits to DC00 (also a number from the surrogate range)

- the result is two 16-bit surrogates, and the first 6 bits of each one identify it as a surrogate

- the last of those 6 bits (bit 10, counting from zero on the right) gives the order: 110110 (bit is 0) means the first surrogate, and 110111 (bit is 1) means the second
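
The steps above can be sketched in Python 3 as a pair of functions. The names `to_surrogates` and `from_surrogates` are hypothetical. The arithmetic follows the list: subtract 10000, then split the result into two 10-bit halves offset by D800 and DC00.

```python
from typing import Tuple


def to_surrogates(cp: int) -> Tuple[int, int]:
    """Split a code point from 10000-10FFFF into (first, second) surrogates."""
    if not 0x10000 <= cp <= 0x10FFFF:
        raise ValueError("only code points 10000-10FFFF need a surrogate pair")
    v = cp - 0x10000                 # at most 0xFFFFF, i.e. 20 bits
    first = 0xD800 + (v >> 10)       # leading 10 bits
    second = 0xDC00 + (v & 0x3FF)    # trailing 10 bits
    return first, second


def from_surrogates(first: int, second: int) -> int:
    """Inverse of to_surrogates."""
    if not (0xD800 <= first <= 0xDBFF and 0xDC00 <= second <= 0xDFFF):
        raise ValueError("not a valid first/second surrogate pair")
    return 0x10000 + ((first - 0xD800) << 10) + (second - 0xDC00)


first_unit, second_unit = to_surrogates(0x103D5)
print(f"{first_unit:04X} {second_unit:04X}")          # D800 DFD5
print(f"{from_surrogates(first_unit, second_unit):X}")  # 103D5
print((first_unit >> 10) & 1, (second_unit >> 10) & 1)  # 0 1 (the order bit)
```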

We will analyze this in practice, I think it will become clearer.

As an example, let's encode a character and then decode one. Take the Old Persian number one hundred (U+103D5):

- 103D5 - 10000 = 3D5

- 3D5 in 20 bits is 0000000000 1111010101: the leading 10 bits are zero (0 in hexadecimal) and the trailing 10 bits are 3D5

- 0 + D800 = D800 (110110 0000000000): the first 6 bits, 110110, mark a surrogate, and their last bit is 0, so this is the first surrogate

- 3D5 + DC00 = DFD5 (110111 1111010101): the first 6 bits, 110111, mark a surrogate, and their last bit is 1, so this is the second surrogate

- in total, this character in UTF-16 is

1101100000000000 1101111111010101
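
Python 3's built-in `utf-16-be` codec can confirm this result. The sketch below also reads the order bit, bit 10 counting from zero on the right, from each surrogate.

```python
encoded = "\U000103D5".encode("utf-16-be")  # big-endian, no byte order mark
print(encoded.hex(" "))  # d8 00 df d5

first_unit = int.from_bytes(encoded[:2], "big")
second_unit = int.from_bytes(encoded[2:], "big")
print(format(first_unit, "016b"), format(second_unit, "016b"))
# 1101100000000000 1101111111010101

# Order bit: the 6th bit from the left, i.e. bit 10 counting from zero on the right
print((first_unit >> 10) & 1, (second_unit >> 10) & 1)  # 0 1
```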

Now let's decode in the opposite direction. Suppose we have the code 1101100000100010 1101111010001000:

- convert it to hexadecimal: D822 DE88 (both values are in the surrogate range, so we have a surrogate pair)

- 110110 0000100010: the prefix ends in 0, so this is the first surrogate

- 110111 1010001000: the prefix ends in 1, so this is the second surrogate

- discard the 6 prefix bits of each surrogate to get 0000100010 1010001000, which is 8A88 in hexadecimal

- add 10000 (hexadecimal, the start of the range that needs surrogates): 8A88 + 10000 = 18A88

- look up U+18A88 in the Unicode table: it is Tangut Component-649, from the block of components of the Tangut script
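
The decoding steps map onto a few lines of Python 3. The code point is computed by hand from the two surrogates and then cross-checked against the built-in `utf-16-be` decoder. The character name comes from the `unicodedata` module, whose contents depend on the Unicode version bundled with your Python, so the name lookup has a fallback.

```python
import unicodedata

data = bytes.fromhex("d822de88")  # 1101100000100010 1101111010001000

first_unit = int.from_bytes(data[:2], "big")
second_unit = int.from_bytes(data[2:], "big")
code_point = 0x10000 + ((first_unit - 0xD800) << 10) + (second_unit - 0xDC00)
print(f"U+{code_point:X}")  # U+18A88

# Python's own UTF-16 decoder agrees
ch = data.decode("utf-16-be")
print(ord(ch) == code_point)  # True
print(unicodedata.name(ch, "<unknown in this Python's Unicode database>"))
```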

Thanks to those who were able to read to the end, I hope it was useful and not very boring.

Here are some interesting links on this topic:

habr.com/en/post/158895 - useful general information on encodings

habr.com/en/post/312642 - about Unicode

unicode-table.com/ru - the Unicode character table itself

And, of course, where would we be without Wikipedia:

en.wikipedia.org/wiki/%D0%AE%D0%BD%D0%B8%D0%BA%D0%BE%D0%B4 - Unicode

en.wikipedia.org/wiki/ASCII - ASCII

en.wikipedia.org/wiki/UTF-8 - UTF-8

en.wikipedia.org/wiki/UTF-16 - UTF-16