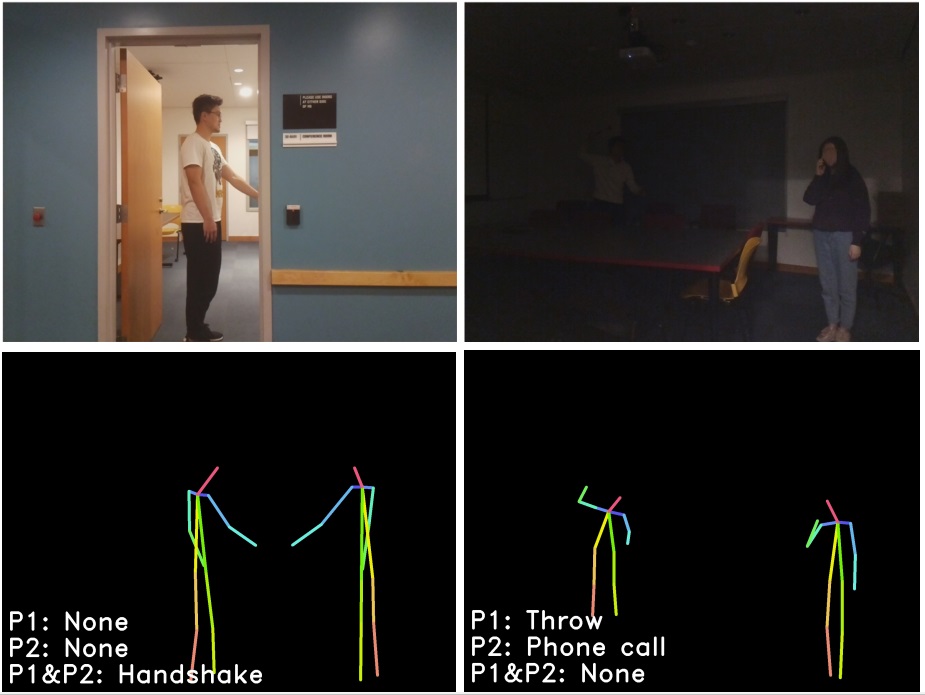

On the left, two people shake hands; one of them is hidden from the camera behind a wall. On the right, a person in the dark throws an object to someone who is calling out to him. Below are the generated skeletal models and the predicted actions.

The radio-vision work of the CSAIL (Computer Science and Artificial Intelligence Lab) team has already been covered on Habr (once and twice); today there are some fresh details.

The algorithm uses radio waves rather than visible light to determine what people are doing, without revealing what they look like.

Machine vision has an impressive track record. It can recognize people, faces and objects with superhuman accuracy. It can even recognize various kinds of actions, although not yet as well as humans.

But its performance has limits. Machine vision struggles when people, faces or objects are partially occluded. And when the light level drops to zero, these systems, like people, are practically blind.

But another part of the electromagnetic spectrum is not so limited. Radio waves fill our world, night and day. They pass easily through walls and are both transmitted and reflected by human bodies. Indeed, researchers have developed various ways to use Wi-Fi signals to see behind closed doors.

But these radio-vision systems have drawbacks. Their resolution is low, and the images are noisy and full of distracting reflections, which makes it hard to tell what is going on.

In this sense, radio images and visible-light images have complementary strengths and weaknesses. That raises the possibility of using the strengths of one to overcome the shortcomings of the other.

Meet Tianhong Li and his colleagues at MIT, who have found a way to teach a radio system to recognize people's actions by training it on visible-light images. The new radio-vision system can see what people are doing in a wide range of situations where visible-light imaging is impossible. “We introduce a neural network model that can detect human actions through walls and occlusions, as well as in poor lighting conditions,” say Li and co.

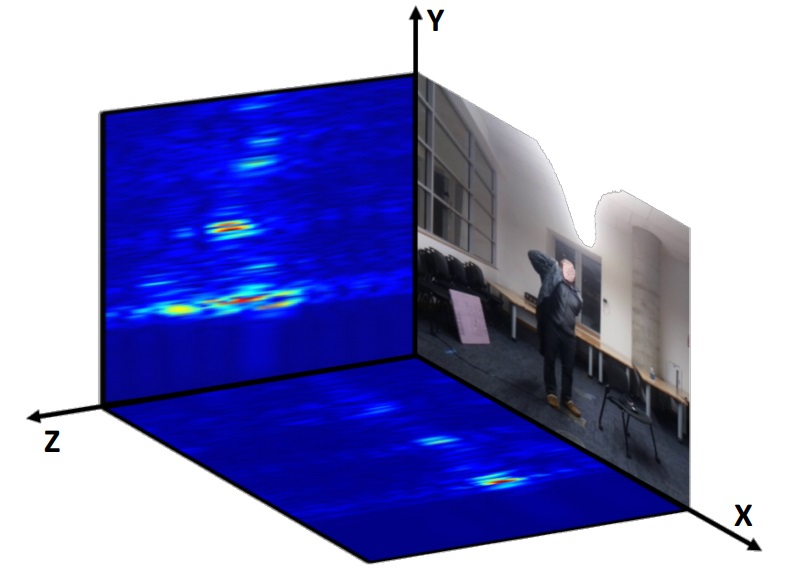

A radio-frequency heatmap and an RGB image recorded simultaneously.

The team uses a clever trick. The key idea is to record the same scene with both visible light and radio waves. Machine vision systems can already recognize human actions from visible-light images, so the next step is to correlate those images with the radio images of the same scene.
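One way to picture this camera-to-radio transfer is as cross-modal supervision: a vision model acts as a "teacher" whose output on each camera frame becomes the training target for a radio "student" on the synchronized RF frame. The Python sketch below shows only two bookkeeping pieces, pairing frames by timestamp and scoring the student against the teacher. The function names, the nearest-timestamp matching and the squared-error loss are illustrative assumptions, not the MIT team's code.

```python
from typing import List, Sequence, Tuple

Joint = Tuple[float, float, float]  # (x, y, z) position of one body joint


def pair_by_timestamp(
    rgb_times: Sequence[float], rf_times: Sequence[float], tolerance: float
) -> List[Tuple[int, int]]:
    """Match each RF frame to the nearest RGB frame captured within `tolerance` seconds.

    Returns (rf_index, rgb_index) pairs; RF frames with no close RGB frame are skipped.
    """
    if not rgb_times:
        return []
    pairs = []
    for i, t_rf in enumerate(rf_times):
        # Index of the camera frame closest in time to this radio frame.
        j = min(range(len(rgb_times)), key=lambda k: abs(rgb_times[k] - t_rf))
        if abs(rgb_times[j] - t_rf) <= tolerance:
            pairs.append((i, j))
    return pairs


def keypoint_loss(student: Sequence[Joint], teacher: Sequence[Joint]) -> float:
    """Mean squared distance between joints predicted from RF (student)
    and joints estimated from the synchronized camera frame (teacher)."""
    if len(student) != len(teacher) or not student:
        raise ValueError("student and teacher must list the same non-zero number of joints")
    total = 0.0
    for (sx, sy, sz), (tx, ty, tz) in zip(student, teacher):
        total += (sx - tx) ** 2 + (sy - ty) ** 2 + (sz - tz) ** 2
    return total / len(student)
```

Only frames that were actually captured close together in time are paired, so the teacher never labels a radio frame with a pose from a different moment.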

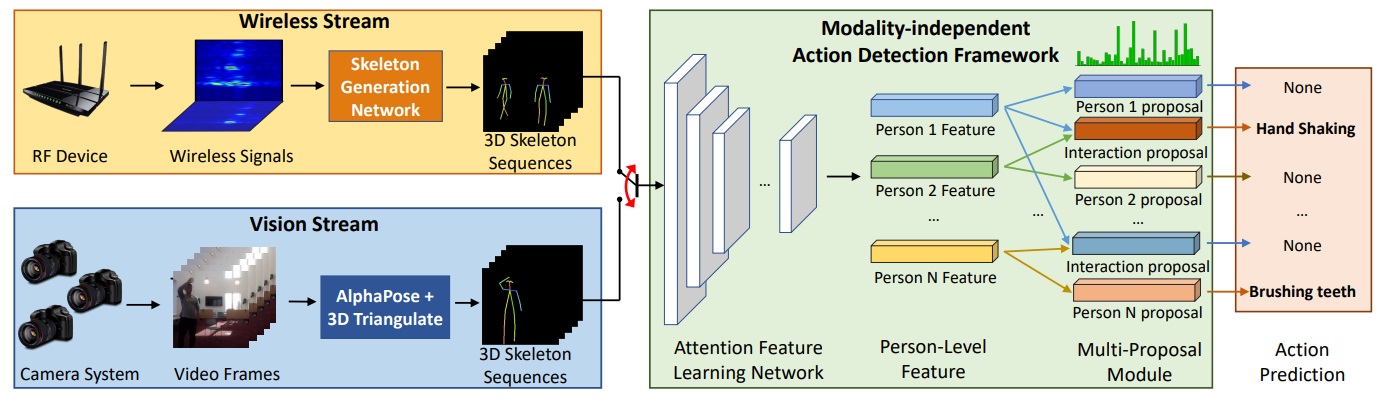

RF-Action architecture. RF-Action recognizes human activities from wireless signals. It first extracts a 3D skeleton for each person from the raw wireless signal stream (yellow box). It then detects and recognizes actions from the extracted skeleton sequences (green box). The action detection framework can also take 3D skeletons generated from visual data as input (blue box), which lets it train both on RF-generated skeletons and on existing datasets labeled with actions.
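The two-stage pipeline in the caption can be sketched in Python. The point it illustrates is that the action-detection stage consumes only skeleton sequences, so it does not care whether they came from radio or a camera. This is a hypothetical simplification: `detect_actions`, the fixed sliding window and the pluggable `classify` function are assumptions for illustration, whereas RF-Action itself uses a learned neural network for detection.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple

Joint = Tuple[float, float, float]
Skeleton = List[Joint]  # one person's joints in a single frame


@dataclass
class Detection:
    start: int   # first frame index (inclusive)
    end: int     # last frame index (exclusive)
    label: str


def detect_actions(
    frames: Sequence[Skeleton],
    classify: Callable[[Sequence[Skeleton]], Optional[str]],
    window: int,
    stride: int,
) -> List[Detection]:
    """Slide a fixed window over a skeleton sequence and label each window.

    A labeled window that overlaps or touches the previous detection and shares
    its label extends that detection. The source of `frames` (radio or camera)
    is irrelevant to this stage.
    """
    detections: List[Detection] = []
    for start in range(0, len(frames) - window + 1, stride):
        label = classify(frames[start:start + window])
        if label is None:
            continue  # no action recognized in this window
        end = start + window
        last = detections[-1] if detections else None
        if last is not None and last.label == label and start <= last.end:
            last.end = end
        else:
            detections.append(Detection(start, end, label))
    return detections
```

For example, with a toy `classify` that returns "hand raised" whenever a window contains a frame whose first joint is higher than 1.5, a ten-frame sequence in which only frames 3 to 5 are raised yields a single merged detection `Detection(2, 7, "hand raised")` for `window=2, stride=1`.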

The difficulty, however, is making sure the learning process focuses on human movement rather than on other things, such as the background. So Li and the team introduce an intermediate stage in which the machine generates 3D stick figures that reproduce people's movements.

“By translating the data into an intermediate skeleton-based representation, our model can learn from both vision-based and RF-based datasets, and allows the two tasks to help each other,” say Li and the team.
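Because both sources reduce to the same skeleton format, their samples can be pooled into one training set. Here is a minimal Python sketch, assuming a hypothetical sample layout of (skeleton sequence, action label) and a root-centering step that strips away where the person stands. Neither detail is taken from the paper; both stand in for "learn from movement, not from the scene".

```python
import random
from typing import List, Sequence, Tuple

Joint = Tuple[float, float, float]
Skeleton = List[Joint]               # one person's joints in one frame
Sample = Tuple[List[Skeleton], str]  # (skeleton sequence, action label)


def center_on_root(seq: Sequence[Skeleton], root: int = 0) -> List[Skeleton]:
    """Express every joint relative to the root joint of its own frame,
    so only body motion remains, not the person's absolute position."""
    centered = []
    for skel in seq:
        rx, ry, rz = skel[root]
        centered.append([(x - rx, y - ry, z - rz) for (x, y, z) in skel])
    return centered


def build_training_set(
    rf_samples: Sequence[Sample], vision_samples: Sequence[Sample], seed: int = 0
) -> List[Tuple[List[Skeleton], str, str]]:
    """Pool RF-derived and camera-derived samples into one shuffled list.

    Each entry is (centered skeleton sequence, action label, source tag).
    """
    pooled = [(center_on_root(seq), label, "rf") for seq, label in rf_samples]
    pooled += [(center_on_root(seq), label, "vision") for seq, label in vision_samples]
    random.Random(seed).shuffle(pooled)  # deterministic shuffle for a given seed
    return pooled
```

Keeping the source tag lets one, for instance, report accuracy separately for radio and camera data; how the real system balances the two sources is not described in this article.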

In this way, the system learns to recognize actions in visible light and then to recognize the same actions happening in the dark or behind walls using radio waves. “We show that our model achieves accuracy comparable to vision-based action recognition systems in visible scenarios, and continues to work accurately when people are not visible,” the researchers say.

This is interesting work with significant potential. The obvious applications are scenarios where visible-light imaging is impossible: in low light and behind closed doors.

But there are other uses too. One problem with visible-light images is that the people in them are recognizable, which raises privacy concerns.

A radio system, by contrast, cannot recognize faces, and identifying actions without recognizing faces does not raise the same privacy concerns. “This can bring the technology into people's homes and allow it to be integrated into smart home systems,” say Li and co. It could be used, for example, to monitor an elderly person’s home and alert the relevant services in case of a fall, without much risk to privacy.

This goes beyond what today's vision-based systems can do.

Results

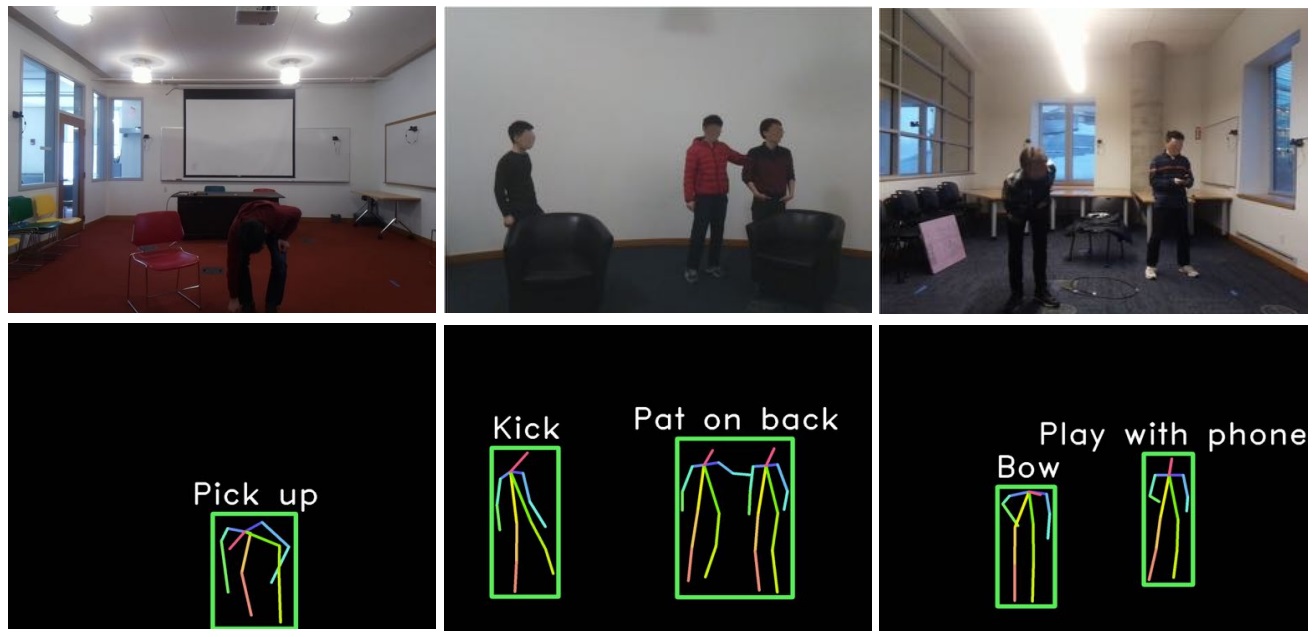

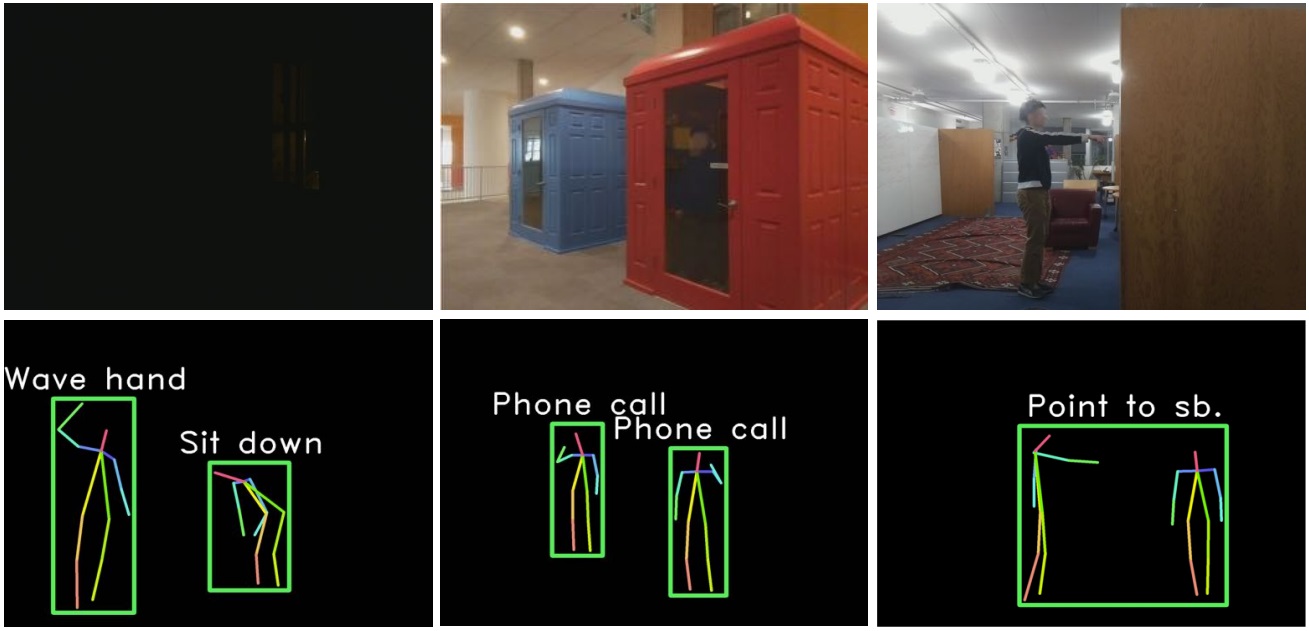

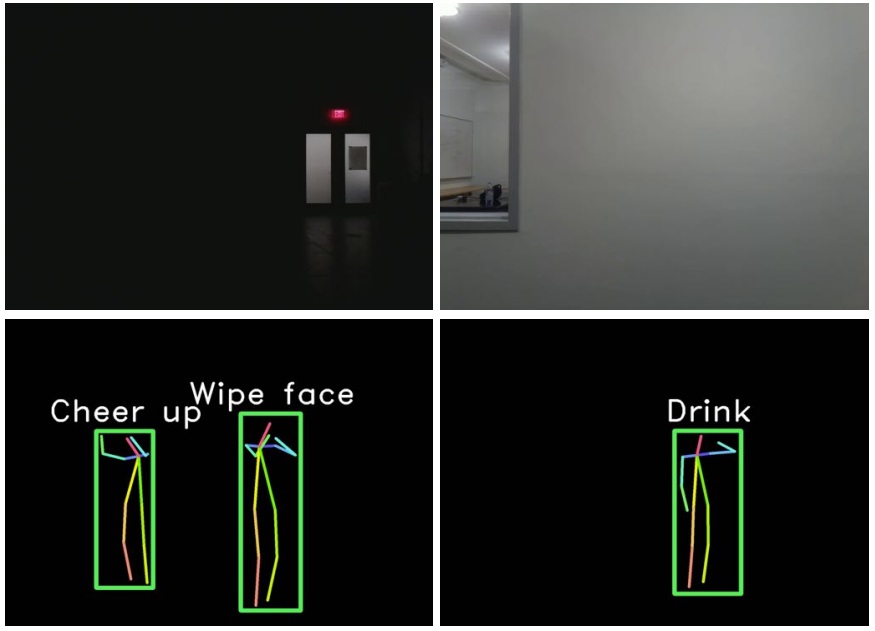

Results in various scenarios. Visible scenes:

Scenes with partial or full occlusion and poor lighting. Skeletons are shown as 2D projections of the generated 3D models:

- Paper on arXiv.org: Making the Invisible Visible: Action Recognition Through Walls and Occlusions

About ITELMA

We are a large automotive components company. We employ about 2,500 people, including 650 engineers.

We are perhaps the strongest competence center for automotive electronics development in Russia. We are growing actively and have many open vacancies (about 30, including in the regions), such as software engineer, design engineer, lead development engineer (DSP programmer), and others.

We work on many interesting challenges from automakers and the groups driving the industry. If you want to grow as a specialist and learn from the best, we will be glad to see you on our team. We are also ready to share our expertise on the most important developments in automotive. Ask us any questions; we will answer and discuss them.

