If you're interested, read on.

Details about the robot project can be found here. For now, we will stream video directly from it (or rather, from the Android smartphone mounted on it) to a personal computer.

What do we need for this?

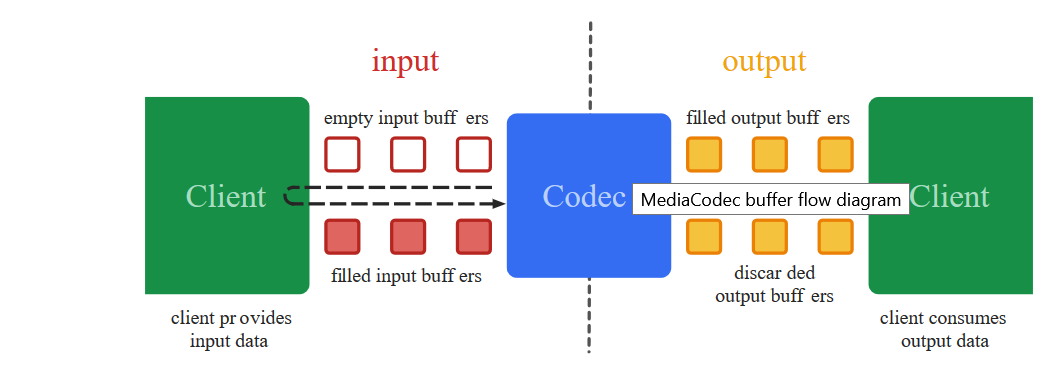

To transfer a video stream from the smartphone's camera somewhere else, it must, as you know, first be compressed into a suitable format (raw video is far too bulky to send frame by frame), stamped with timestamps and sent in binary form to the recipient. The recipient then performs the inverse operation: decoding.
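As a rough illustration of the timestamp step: MediaCodec expresses presentation time in microseconds (`MediaCodec.BufferInfo.presentationTimeUs`). With Surface input the encoder normally takes the timestamps from the incoming camera frames, so you do not compute them yourself. The hypothetical helper below only shows the arithmetic for a constant frame rate, as plain Java that runs off-device.

```java
// Sketch: presentation timestamps for a constant frame rate.
// MediaCodec.BufferInfo.presentationTimeUs uses microseconds; this class only
// illustrates the arithmetic and does not touch any Android API.
final class FrameTimestamps {
    private FrameTimestamps() {}

    /** Presentation time of frame number frameIndex at the given fps, in microseconds. */
    static long presentationTimeUs(long frameIndex, int fps) {
        if (fps <= 0) throw new IllegalArgumentException("fps must be positive");
        return frameIndex * 1_000_000L / fps;
    }

    public static void main(String[] args) {
        // At 20 FPS consecutive frames are 50 000 us apart.
        for (int i = 0; i < 4; i++) {
            System.out.println("frame " + i + " -> " + presentationTimeUs(i, 20) + " us");
        }
    }
}
```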

This low-level dirty work is exactly what the MediaCodec class handles. It first appeared in Android 4.1, and since Android 4.3 (2013) it can also take its input from a Surface, which is what we will rely on here.

Back then, though, video encoding was far less approachable than it is today. Getting a picture from the camera took piles of arcane code in which, as in the spells of Yakut shamans, a single mistake could crash the whole application. Add the old Camera API, where instead of ready-made callbacks you had to write your own synchronized handlers, and the task was, let's say, not for the faint of heart.

Worst of all, when you look at working code from a distance, everything seems clear in general terms. You start moving it piece by piece into your own project, and it falls apart for no obvious reason. Fixing it is nearly impossible, because the details are so hard to figure out.


Fortunately, for slow-witted people like me, Google's engineers introduced the magical concept of a Surface, which lets you avoid the low-level details. As a layman, I find it hard to judge what the developer gives up in exchange, but now we can say almost literally: "Android, take this Surface that shows the video from the camera and, without changing anything, encode it as it is and send it on." And the most amazing thing is that it works. With the new Camera2 API the program itself also knows when to send data: new callbacks have appeared!

So encoding video is now a piece of cake, and that is exactly what we are going to do.

We take the code from the first article and, as usual, throw everything out of it except the buttons and camera initialization.

Let's start with the Layout for the app.

```xml
<?xml version="1.0" encoding="utf-8"?>
<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    tools:context=".MainActivity">

    <TextureView
        android:id="@+id/textureView"
        android:layout_width="356dp"
        android:layout_height="410dp"
        android:layout_marginTop="32dp"
        app:layout_constraintEnd_toEndOf="parent"
        app:layout_constraintHorizontal_bias="0.49"
        app:layout_constraintStart_toStartOf="parent"
        app:layout_constraintTop_toTopOf="parent" />

    <LinearLayout
        android:layout_width="292dp"
        android:layout_height="145dp"
        android:layout_marginStart="16dp"
        android:orientation="vertical"
        app:layout_constraintBottom_toBottomOf="parent"
        app:layout_constraintEnd_toEndOf="parent"
        app:layout_constraintStart_toStartOf="parent"
        app:layout_constraintTop_toBottomOf="@+id/textureView"
        app:layout_constraintVertical_bias="0.537">

        <Button
            android:id="@+id/button1"
            android:layout_width="match_parent"
            android:layout_height="wrap_content"
            android:text="Turn on the camera and stream" />

        <Button
            android:id="@+id/button2"
            android:layout_width="match_parent"
            android:layout_height="wrap_content"
            android:text="Stream video" />

        <Button
            android:id="@+id/button3"
            android:layout_width="match_parent"
            android:layout_height="wrap_content"
            android:text="Stop streaming" />
    </LinearLayout>
</androidx.constraintlayout.widget.ConstraintLayout>
```

And finish by hooking up MediaCodec.

In the last post, we displayed the camera image on a Surface and recorded video from it with MediaRecorder. To do this, we simply listed both surfaces in the Surface list:

```java
Arrays.asList(surface, mMediaRecorder.getSurface())
```

Here we do the same, except that instead of the MediaRecorder surface we pass:

```java
Arrays.asList(surface, mEncoderSurface)
```

The result looks something like this:

```java
private void startCameraPreviewSession() {
    SurfaceTexture texture = mImageView.getSurfaceTexture();
    texture.setDefaultBufferSize(320, 240);
    surface = new Surface(texture);
    try {
        mPreviewBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);
        mPreviewBuilder.addTarget(surface);         // on-screen preview
        mPreviewBuilder.addTarget(mEncoderSurface); // encoder input
        mCameraDevice.createCaptureSession(Arrays.asList(surface, mEncoderSurface),
                new CameraCaptureSession.StateCallback() {
                    @Override
                    public void onConfigured(CameraCaptureSession session) {
                        mSession = session;
                        try {
                            mSession.setRepeatingRequest(mPreviewBuilder.build(), null, mBackgroundHandler);
                        } catch (CameraAccessException e) {
                            e.printStackTrace();
                        }
                    }

                    @Override
                    public void onConfigureFailed(CameraCaptureSession session) {
                    }
                }, mBackgroundHandler);
    } catch (CameraAccessException e) {
        e.printStackTrace();
    }
}
```

What is mEncoderSurface? It is the Surface that MediaCodec takes its input from. To begin with, both the codec and this Surface have to be initialized, roughly like this:

```java
private void setUpMediaCodec() {
    try {
        mCodec = MediaCodec.createEncoderByType("video/avc"); // H264 encoder
    } catch (Exception e) {
        Log.i(LOG_TAG, "H264 encoder not available");
    }

    int width = 320;              // frame width, px
    int height = 240;             // frame height, px
    int colorFormat = MediaCodecInfo.CodecCapabilities.COLOR_FormatSurface; // input comes from a Surface
    int videoBitrate = 500000;    // bps (bits per second)
    int videoFramePerSecond = 20; // FPS
    int iframeInterval = 2;       // I-frame interval, seconds

    MediaFormat format = MediaFormat.createVideoFormat("video/avc", width, height);
    format.setInteger(MediaFormat.KEY_COLOR_FORMAT, colorFormat);
    format.setInteger(MediaFormat.KEY_BIT_RATE, videoBitrate);
    format.setInteger(MediaFormat.KEY_FRAME_RATE, videoFramePerSecond);
    format.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, iframeInterval);

    mCodec.setCallback(new EncoderCallback()); // asynchronous mode: set the callback before configure()
    mCodec.configure(format, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE); // configure as an encoder
    mEncoderSurface = mCodec.createInputSurface(); // Surface the camera will draw into
    mCodec.start(); // start encoding
    Log.i(LOG_TAG, "encoder started");
}
```
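To get a feel for these settings, here is the arithmetic behind them as a small stand-alone sketch (the class `EncoderBudget` is made up for illustration). At 500 000 bit/s and 20 FPS one frame gets about 3 125 bytes on average, while a single raw YUV 4:2:0 frame of 320×240 takes 115 200 bytes, roughly 37 times more. That is why frames cannot be sent uncompressed. Real frame sizes vary around the average: I-frames are much larger, predicted frames smaller.

```java
// Back-of-the-envelope numbers for the encoder settings in setUpMediaCodec():
// 320x240, 500000 bit/s, 20 frames/s, one I-frame every 2 seconds.
final class EncoderBudget {
    private EncoderBudget() {}

    /** Average encoded size of one frame, in bytes. */
    static long averageBytesPerFrame(long bitrateBps, int fps) {
        return bitrateBps / 8 / fps;
    }

    /** Number of frames from one I-frame to the next. */
    static int framesPerKeyframeInterval(int fps, int iFrameIntervalSeconds) {
        return fps * iFrameIntervalSeconds;
    }

    /** Size of one uncompressed YUV 4:2:0 frame: 1.5 bytes per pixel. */
    static long rawYuv420FrameBytes(int width, int height) {
        return (long) width * height * 3 / 2;
    }

    public static void main(String[] args) {
        System.out.println(averageBytesPerFrame(500_000, 20)); // 3125
        System.out.println(framesPerKeyframeInterval(20, 2)); // 40
        System.out.println(rawYuv420FrameBytes(320, 240));    // 115200
    }
}
```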

All that remains is a single callback. Whenever MediaCodec has the next portion of encoded data ready for transmission, it notifies us through this callback:

```java
private class EncoderCallback extends MediaCodec.Callback {
    @Override
    public void onInputBufferAvailable(MediaCodec codec, int index) {
        // not used: the input comes from mEncoderSurface
    }

    @Override
    public void onOutputBufferAvailable(MediaCodec codec, int index, MediaCodec.BufferInfo info) {
        outPutByteBuffer = mCodec.getOutputBuffer(index);
        byte[] outDate = new byte[info.size];
        outPutByteBuffer.get(outDate); // copy the encoded data out of the codec's buffer
        Log.i(LOG_TAG, " outDate.length : " + outDate.length);
        mCodec.releaseOutputBuffer(index, false); // hand the buffer back to the codec
    }

    @Override
    public void onError(MediaCodec codec, MediaCodec.CodecException e) {
        Log.i(LOG_TAG, "Error: " + e);
    }

    @Override
    public void onOutputFormatChanged(MediaCodec codec, MediaFormat format) {
        Log.i(LOG_TAG, "encoder output format changed: " + format);
    }
}
```

The outDate byte array is a real treasure. It contains ready-made pieces of an encoded H264 video stream with which we can now do whatever we want.

Here they are…
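A quick way to check that these pieces really are H264 is to look for Annex B start codes (`00 00 01` or `00 00 00 01`) and read the NAL unit type that follows each one. The sketch assumes the encoder emits an Annex B stream, which Android's `video/avc` encoders typically do. The first output buffer usually carries the SPS (type 7) and PPS (type 8), followed by IDR frames (type 5) and ordinary slices (type 1). `NalScanner` is a hypothetical helper, not part of any library.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch: list the NAL unit types in an H.264 Annex B byte stream.
// Emulation-prevention bytes guarantee that 00 00 01 never occurs inside a NAL
// payload, so every match is a real start code.
final class NalScanner {
    private NalScanner() {}

    /** Returns nal_unit_type (lower 5 bits of the NAL header byte) for each NAL unit found. */
    static List<Integer> nalUnitTypes(byte[] data) {
        List<Integer> types = new ArrayList<>();
        int i = 0;
        while (i + 3 < data.length) {
            // A 3-byte start code also matches the tail of a 4-byte one (00 00 00 01).
            if (data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 1) {
                types.add(data[i + 3] & 0x1F);
                i += 3;
            } else {
                i++;
            }
        }
        return types;
    }

    static String describe(int type) {
        switch (type) {
            case 1: return "non-IDR slice";
            case 5: return "IDR slice (key frame)";
            case 6: return "SEI";
            case 7: return "SPS";
            case 8: return "PPS";
            default: return "type " + type;
        }
    }
}
```

On the phone, one could log `NalScanner.nalUnitTypes(outDate)` inside `onOutputBufferAvailable` to watch key frames arrive at the configured interval.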

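Some pieces may be too large to send over the network in one go, but that's fine: if necessary, the system splits them up by itself and delivers them to the recipient. (The IP layer fragments datagrams larger than the network MTU, as long as they stay under the UDP limit of about 64 KB; if any single fragment is lost, the whole datagram is lost.)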

But if that makes you nervous, you can split the data yourself by inserting a fragment like this:

```java
int count = 0;
int temp = outDate.length;
do { // cut off the next piece of at most 1024 bytes
    byte[] ds;
    temp = temp - 1024;
    if (temp >= 0) {
        ds = new byte[1024];
    } else {
        ds = new byte[temp + 1024]; // last, shorter piece
    }
    for (int i = 0; i < ds.length; i++) {
        ds[i] = outDate[i + 1024 * count];
    }
    count = count + 1;
    try {
        // Log.i(LOG_TAG, " outDate.length : " + ds.length);
        DatagramPacket packet = new DatagramPacket(ds, ds.length, address, port);
        udpSocket.send(packet);
    } catch (IOException e) {
        Log.i(LOG_TAG, "UDP send failed");
    }
} while (temp > 0); // "> 0" rather than ">= 0": no empty packet when the length is a multiple of 1024
```
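The same splitting can also be written as a small helper that is easy to unit-test without a phone. This is a sketch: `Chunker` and `split` are hypothetical names, and 1024 bytes is simply the chunk size used in the loop. Keep in mind that plain UDP gives the receiver no way to tell which piece belongs where if packets are lost or reordered.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Sketch: split one encoded buffer into datagram-sized pieces.
// Every piece except possibly the last is exactly chunkSize bytes long,
// and an empty input produces no pieces at all.
final class Chunker {
    private Chunker() {}

    static List<byte[]> split(byte[] data, int chunkSize) {
        if (chunkSize <= 0) throw new IllegalArgumentException("chunkSize must be positive");
        List<byte[]> chunks = new ArrayList<>();
        for (int offset = 0; offset < data.length; offset += chunkSize) {
            int end = Math.min(offset + chunkSize, data.length);
            chunks.add(Arrays.copyOfRange(data, offset, end));
        }
        return chunks;
    }
}
```

In the encoder callback, each element of `Chunker.split(outDate, 1024)` would then go into its own `DatagramPacket`.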

For now, though, we want to see for ourselves that the data in the buffer really is an H264 video stream, so let's write it to a file.

In the setup method we add:

```java
private void setUpMediaCodec() {
    File mFile = new File(Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_DCIM), "test3.h264");
    try {
        outputStream = new BufferedOutputStream(new FileOutputStream(mFile));
        Log.i("Encoder", "outputStream initialized");
    } catch (Exception e) {
        e.printStackTrace();
    }
    // ... the encoder setup follows here as before
}
```

And in the callback, where the buffer is available:

```java
try {
    outputStream.write(outDate, 0, outDate.length); // append the encoded data to the file
} catch (IOException e) {
    e.printStackTrace();
}
```

Open the application, press the button: “TURN ON THE CAMERA AND STREAM”. Recording starts automatically. We wait a little and press the stop button.

A regular player will not play the saved file properly, since it is a raw H264 stream rather than MP4. But if you open it in VLC or convert it online with ONLINE CONVERT, you can confirm that we are on the right track. True, the picture is rotated on its side, but that is fixable.

In general, for each task (recording, taking photos or streaming) it is better to open a new session every time and close the old one. That is, first we turn on the camera and start a bare preview. Then, if you need to take a picture, close the preview session and open a new preview session with an ImageReader attached. If we switch to video recording, we close the current session and start one with the preview and a MediaRecorder attached. I didn't do this here so as not to clutter the code, but it's up to you to decide what is more convenient.

And here is the whole code.

BasicMediaCodec

```java
package com.example.basicmediacodec;

import androidx.annotation.RequiresApi;
import androidx.appcompat.app.AppCompatActivity;
import androidx.core.content.ContextCompat;

import android.Manifest;
import android.content.Context;
import android.content.pm.ActivityInfo;
import android.content.pm.PackageManager;
import android.graphics.SurfaceTexture;
import android.hardware.camera2.CameraAccessException;
import android.hardware.camera2.CameraCaptureSession;
import android.hardware.camera2.CameraDevice;
import android.hardware.camera2.CameraManager;
import android.hardware.camera2.CaptureRequest;
import android.media.MediaCodec;
import android.media.MediaCodecInfo;
import android.media.MediaFormat;
import android.os.Build;
import android.os.Bundle;
import android.os.Environment;
import android.os.Handler;
import android.os.HandlerThread;
import android.os.StrictMode;
import android.util.Log;
import android.view.Surface;
import android.view.TextureView;
import android.view.View;
import android.widget.Button;
import android.widget.Toast;

import java.io.BufferedOutputStream;
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.nio.ByteBuffer;
import java.util.Arrays;

public class MainActivity extends AppCompatActivity {

    public static final String LOG_TAG = "myLogs";
    public static Surface surface = null;

    CameraService[] myCameras = null;
    private CameraManager mCameraManager = null;
    private final int CAMERA1 = 0;

    private Button mButtonOpenCamera1 = null;
    private Button mButtonStreamVideo = null;
    private Button mButtonTStopStreamVideo = null;
    public static TextureView mImageView = null;

    private HandlerThread mBackgroundThread;
    private Handler mBackgroundHandler = null;

    private MediaCodec mCodec = null; // encoder
    Surface mEncoderSurface;          // input Surface of the encoder
    BufferedOutputStream outputStream;
    ByteBuffer outPutByteBuffer;

    private void startBackgroundThread() {
        mBackgroundThread = new HandlerThread("CameraBackground");
        mBackgroundThread.start();
        mBackgroundHandler = new Handler(mBackgroundThread.getLooper());
    }

    private void stopBackgroundThread() {
        mBackgroundThread.quitSafely();
        try {
            mBackgroundThread.join();
            mBackgroundThread = null;
            mBackgroundHandler = null;
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
    }

    @RequiresApi(api = Build.VERSION_CODES.M)
    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setRequestedOrientation(ActivityInfo.SCREEN_ORIENTATION_PORTRAIT);
        setContentView(R.layout.activity_main);

        Log.d(LOG_TAG, "Requesting permissions");
        if (checkSelfPermission(Manifest.permission.CAMERA) != PackageManager.PERMISSION_GRANTED
                || (ContextCompat.checkSelfPermission(MainActivity.this, Manifest.permission.WRITE_EXTERNAL_STORAGE)
                        != PackageManager.PERMISSION_GRANTED)) {
            requestPermissions(new String[]{Manifest.permission.CAMERA, Manifest.permission.WRITE_EXTERNAL_STORAGE}, 1);
        }

        mButtonOpenCamera1 = findViewById(R.id.button1);
        mButtonStreamVideo = findViewById(R.id.button2);
        mButtonTStopStreamVideo = findViewById(R.id.button3);
        mImageView = findViewById(R.id.textureView);

        mButtonOpenCamera1.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View v) {
                setUpMediaCodec(); // create the encoder and its input Surface
                if (myCameras[CAMERA1] != null) { // open the camera; the session starts in onOpened()
                    if (!myCameras[CAMERA1].isOpen()) myCameras[CAMERA1].openCamera();
                }
            }
        });

        mButtonStreamVideo.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View v) {
                // not used in this version
            }
        });

        mButtonTStopStreamVideo.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View v) {
                if (mCodec != null) {
                    Toast.makeText(MainActivity.this, "Streaming stopped", Toast.LENGTH_SHORT).show();
                    myCameras[CAMERA1].stopStreamingVideo();
                }
            }
        });

        mCameraManager = (CameraManager) getSystemService(Context.CAMERA_SERVICE);
        try {
            // one CameraService per camera on the device
            myCameras = new CameraService[mCameraManager.getCameraIdList().length];
            for (String cameraID : mCameraManager.getCameraIdList()) {
                Log.i(LOG_TAG, "cameraID: " + cameraID);
                int id = Integer.parseInt(cameraID);
                myCameras[id] = new CameraService(mCameraManager, cameraID);
            }
        } catch (CameraAccessException e) {
            Log.e(LOG_TAG, e.getMessage());
            e.printStackTrace();
        }
    }

    public class CameraService {
        private String mCameraID;
        private CameraDevice mCameraDevice = null;
        private CameraCaptureSession mSession;
        private CaptureRequest.Builder mPreviewBuilder;

        public CameraService(CameraManager cameraManager, String cameraID) {
            mCameraManager = cameraManager;
            mCameraID = cameraID;
        }

        private CameraDevice.StateCallback mCameraCallback = new CameraDevice.StateCallback() {
            @Override
            public void onOpened(CameraDevice camera) {
                mCameraDevice = camera;
                Log.i(LOG_TAG, "Open camera with id:" + mCameraDevice.getId());
                startCameraPreviewSession();
            }

            @Override
            public void onDisconnected(CameraDevice camera) {
                mCameraDevice.close();
                Log.i(LOG_TAG, "disconnect camera with id:" + mCameraDevice.getId());
                mCameraDevice = null;
            }

            @Override
            public void onError(CameraDevice camera, int error) {
                Log.i(LOG_TAG, "error! camera id:" + camera.getId() + " error:" + error);
            }
        };

        private void startCameraPreviewSession() {
            SurfaceTexture texture = mImageView.getSurfaceTexture();
            texture.setDefaultBufferSize(320, 240);
            surface = new Surface(texture);
            try {
                mPreviewBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);
                mPreviewBuilder.addTarget(surface);         // on-screen preview
                mPreviewBuilder.addTarget(mEncoderSurface); // encoder input
                mCameraDevice.createCaptureSession(Arrays.asList(surface, mEncoderSurface),
                        new CameraCaptureSession.StateCallback() {
                            @Override
                            public void onConfigured(CameraCaptureSession session) {
                                mSession = session;
                                try {
                                    mSession.setRepeatingRequest(mPreviewBuilder.build(), null, mBackgroundHandler);
                                } catch (CameraAccessException e) {
                                    e.printStackTrace();
                                }
                            }

                            @Override
                            public void onConfigureFailed(CameraCaptureSession session) {
                            }
                        }, mBackgroundHandler);
            } catch (CameraAccessException e) {
                e.printStackTrace();
            }
        }

        public boolean isOpen() {
            return mCameraDevice != null;
        }

        public void openCamera() {
            try {
                if (checkSelfPermission(Manifest.permission.CAMERA) == PackageManager.PERMISSION_GRANTED) {
                    mCameraManager.openCamera(mCameraID, mCameraCallback, mBackgroundHandler);
                }
            } catch (CameraAccessException e) {
                Log.i(LOG_TAG, e.getMessage());
            }
        }

        public void closeCamera() {
            if (mCameraDevice != null) {
                mCameraDevice.close();
                mCameraDevice = null;
            }
        }

        public void stopStreamingVideo() {
            if (mCameraDevice != null && mCodec != null) {
                try {
                    mSession.stopRepeating();
                    mSession.abortCaptures();
                } catch (CameraAccessException e) {
                    e.printStackTrace();
                }
                mCodec.stop();
                mCodec.release();
                mEncoderSurface.release();
                closeCamera();
                // close (and thereby flush) the buffered stream so the end of the file is not lost
                try {
                    if (outputStream != null) outputStream.close();
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
        }
    }

    private void setUpMediaCodec() {
        File mFile = new File(Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_DCIM), "test3.h264");
        try {
            outputStream = new BufferedOutputStream(new FileOutputStream(mFile));
            Log.i("Encoder", "outputStream initialized");
        } catch (Exception e) {
            e.printStackTrace();
        }

        try {
            mCodec = MediaCodec.createEncoderByType("video/avc"); // H264 encoder
        } catch (Exception e) {
            Log.i(LOG_TAG, "H264 encoder not available");
        }

        int width = 320;              // frame width, px
        int height = 240;             // frame height, px
        int colorFormat = MediaCodecInfo.CodecCapabilities.COLOR_FormatSurface; // input comes from a Surface
        int videoBitrate = 500000;    // bps (bits per second)
        int videoFramePerSecond = 20; // FPS
        int iframeInterval = 3;       // I-frame interval, seconds

        MediaFormat format = MediaFormat.createVideoFormat("video/avc", width, height);
        format.setInteger(MediaFormat.KEY_COLOR_FORMAT, colorFormat);
        format.setInteger(MediaFormat.KEY_BIT_RATE, videoBitrate);
        format.setInteger(MediaFormat.KEY_FRAME_RATE, videoFramePerSecond);
        format.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, iframeInterval);

        mCodec.setCallback(new EncoderCallback()); // asynchronous mode: set the callback before configure()
        mCodec.configure(format, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE); // configure as an encoder
        mEncoderSurface = mCodec.createInputSurface(); // Surface the camera will draw into
        mCodec.start(); // start encoding
        Log.i(LOG_TAG, "encoder started");
    }

    private class EncoderCallback extends MediaCodec.Callback {
        @Override
        public void onInputBufferAvailable(MediaCodec codec, int index) {
            // not used: the input comes from mEncoderSurface
        }

        @Override
        public void onOutputBufferAvailable(MediaCodec codec, int index, MediaCodec.BufferInfo info) {
            outPutByteBuffer = mCodec.getOutputBuffer(index);
            byte[] outDate = new byte[info.size];
            outPutByteBuffer.get(outDate);
            try {
                Log.i(LOG_TAG, " outDate.length : " + outDate.length);
                outputStream.write(outDate, 0, outDate.length); // append the encoded data to the file
            } catch (IOException e) {
                e.printStackTrace();
            }
            mCodec.releaseOutputBuffer(index, false);
        }

        @Override
        public void onError(MediaCodec codec, MediaCodec.CodecException e) {
            Log.i(LOG_TAG, "Error: " + e);
        }

        @Override
        public void onOutputFormatChanged(MediaCodec codec, MediaFormat format) {
            Log.i(LOG_TAG, "encoder output format changed: " + format);
        }
    }

    @Override
    public void onPause() {
        if (myCameras[CAMERA1].isOpen()) {
            myCameras[CAMERA1].closeCamera();
        }
        stopBackgroundThread();
        super.onPause();
    }

    @Override
    public void onResume() {
        super.onResume();
        startBackgroundThread();
    }
}
```

And don't forget about permissions in the manifest.

```xml
<uses-permission android:name="android.permission.CAMERA" />
<uses-permission android:name="android.permission.INTERNET" />
<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />
```

So we have made sure that MediaCodec works. But using it just to write video to a file is rather dull: MediaRecorder does that job much better, and it records sound as well. So we throw out the file part again and add code for streaming the video over the network via UDP. This is also very simple.

First, we initialize the UDP socket.

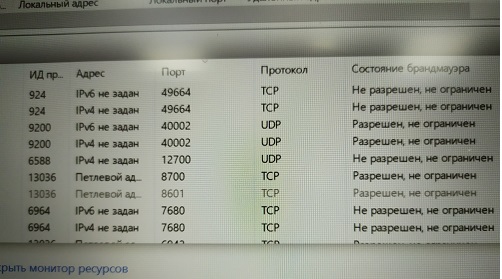

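```java
DatagramSocket udpSocket;
String ip_address = "192.168.1.84"; // address of the computer that receives the stream
InetAddress address;
int port = 40002;                   // port the receiver listens on

// ……

try {
    udpSocket = new DatagramSocket();
    Log.i(LOG_TAG, "udp socket created");
} catch (SocketException e) {
    Log.i(LOG_TAG, "udp socket not created");
}
try {
    address = InetAddress.getByName(ip_address);
    Log.i(LOG_TAG, "address resolved");
} catch (Exception e) {
    Log.i(LOG_TAG, "address not resolved: " + e);
}
```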

And in the same callback where we previously wrote the finished data to the file stream, we now send it as datagrams over our home network (I hope everyone has one?):

```java
try {
    DatagramPacket packet = new DatagramPacket(outDate, outDate.length, address, port);
    udpSocket.send(packet);
} catch (IOException e) {
    Log.i(LOG_TAG, "UDP send failed");
}
```

Is that all?

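It would seem so, but no. The application crashes as soon as streaming starts. The system does not like us sending datagram packets on the main thread: network calls made there make Android throw a NetworkOnMainThreadException. And our encoder callback does run on the main thread, because the codec was created and its callback set there without a separate Handler. But there is no reason to panic. First, although we are on the main thread, we still work asynchronously: our code only runs when the callback fires. Second, sending a UDP packet is fire-and-forget: we simply hand the packet to the operating system and rely on it entirely, with no acknowledgement to wait for, so the call normally returns quickly. So, to keep Android from rebelling, add two lines at the beginning of the program (they switch the check off for the whole thread, which is acceptable for an experiment):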

```java
StrictMode.ThreadPolicy policy = new StrictMode.ThreadPolicy.Builder().permitAll().build();
StrictMode.setThreadPolicy(policy);
```
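A gentler alternative to switching the StrictMode check off is to keep network calls off the main thread altogether. The sketch below queues packets to one background thread; `BackgroundUdpSender` is a hypothetical name, not an Android class. On Android you could also pass a Handler from your own HandlerThread to `MediaCodec.setCallback(callback, handler)` (API 23+), so that the whole encoder callback runs off the main thread.

```java
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Sketch: hand datagrams to one background thread instead of sending them from
// the thread that runs the encoder callback. A single-thread executor sends the
// packets in the order in which they were submitted.
final class BackgroundUdpSender implements AutoCloseable {
    private final DatagramSocket socket;
    private final InetAddress address;
    private final int port;
    private final ExecutorService executor = Executors.newSingleThreadExecutor();

    BackgroundUdpSender(InetAddress address, int port) throws IOException {
        this.socket = new DatagramSocket(); // bound to any free local port
        this.address = address;
        this.port = port;
    }

    /** Copies the data (so the caller may reuse its array) and queues it; returns immediately. */
    void send(byte[] data) {
        final byte[] copy = data.clone();
        executor.execute(() -> {
            try {
                socket.send(new DatagramPacket(copy, copy.length, address, port));
            } catch (IOException e) {
                System.err.println("UDP send failed: " + e);
            }
        });
    }

    /** Lets queued packets go out (waiting at most one second), then closes the socket. */
    @Override
    public void close() throws InterruptedException {
        executor.shutdown();
        executor.awaitTermination(1, TimeUnit.SECONDS);
        socket.close();
    }
}
```

In `onOutputBufferAvailable`, `udpSocket.send(packet)` would then become `sender.send(outDate)`.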

Altogether, we end up with the following:

```java
package com.example.basicmediacodec;

import androidx.annotation.RequiresApi;
import androidx.appcompat.app.AppCompatActivity;
import androidx.core.content.ContextCompat;

import android.Manifest;
import android.content.Context;
import android.content.pm.ActivityInfo;
import android.content.pm.PackageManager;
import android.graphics.SurfaceTexture;
import android.hardware.camera2.CameraAccessException;
import android.hardware.camera2.CameraCaptureSession;
import android.hardware.camera2.CameraDevice;
import android.hardware.camera2.CameraManager;
import android.hardware.camera2.CaptureRequest;
import android.media.MediaCodec;
import android.media.MediaCodecInfo;
import android.media.MediaFormat;
import android.os.Build;
import android.os.Bundle;
import android.os.Handler;
import android.os.HandlerThread;
import android.os.StrictMode;
import android.util.Log;
import android.view.Surface;
import android.view.TextureView;
import android.view.View;
import android.widget.Button;
import android.widget.Toast;

import java.io.BufferedOutputStream;
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.net.SocketException;
import java.nio.ByteBuffer;
import java.util.Arrays;

public class MainActivity extends AppCompatActivity {

    public static final String LOG_TAG = "myLogs";
    public static Surface surface = null;

    CameraService[] myCameras = null;
    private CameraManager mCameraManager = null;
    private final int CAMERA1 = 0;

    private Button mButtonOpenCamera1 = null;
    private Button mButtonStreamVideo = null;
    private Button mButtonTStopStreamVideo = null;
    public static TextureView mImageView = null;

    private HandlerThread mBackgroundThread;
    private Handler mBackgroundHandler = null;

    private MediaCodec mCodec = null; // encoder
    Surface mEncoderSurface;          // input Surface of the encoder
    BufferedOutputStream outputStream;
    ByteBuffer outPutByteBuffer;

    DatagramSocket udpSocket;
    String ip_address = "192.168.1.84"; // address of the computer that receives the stream
    InetAddress address;
    int port = 40002;                   // port the receiver listens on

    private void startBackgroundThread() {
        mBackgroundThread = new HandlerThread("CameraBackground");
        mBackgroundThread.start();
        mBackgroundHandler = new Handler(mBackgroundThread.getLooper());
    }

    private void stopBackgroundThread() {
        mBackgroundThread.quitSafely();
        try {
            mBackgroundThread.join();
            mBackgroundThread = null;
            mBackgroundHandler = null;
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
    }

    @RequiresApi(api = Build.VERSION_CODES.M)
    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        // allow network calls on the main thread (see the explanation above)
        StrictMode.ThreadPolicy policy = new StrictMode.ThreadPolicy.Builder().permitAll().build();
        StrictMode.setThreadPolicy(policy);
        setRequestedOrientation(ActivityInfo.SCREEN_ORIENTATION_PORTRAIT);
        setContentView(R.layout.activity_main);

        Log.d(LOG_TAG, "Requesting permissions");
        if (checkSelfPermission(Manifest.permission.CAMERA) != PackageManager.PERMISSION_GRANTED
                || (ContextCompat.checkSelfPermission(MainActivity.this, Manifest.permission.WRITE_EXTERNAL_STORAGE)
                        != PackageManager.PERMISSION_GRANTED)) {
            requestPermissions(new String[]{Manifest.permission.CAMERA, Manifest.permission.WRITE_EXTERNAL_STORAGE}, 1);
        }

        mButtonOpenCamera1 = findViewById(R.id.button1);
        mButtonStreamVideo = findViewById(R.id.button2);
        mButtonTStopStreamVideo = findViewById(R.id.button3);
        mImageView = findViewById(R.id.textureView);

        mButtonOpenCamera1.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View v) {
                setUpMediaCodec(); // create the encoder and its input Surface
                if (myCameras[CAMERA1] != null) { // open the camera; the session starts in onOpened()
                    if (!myCameras[CAMERA1].isOpen()) myCameras[CAMERA1].openCamera();
                }
            }
        });

        mButtonStreamVideo.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View v) {
                // not used in this version
            }
        });

        mButtonTStopStreamVideo.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View v) {
                if (mCodec != null) {
                    Toast.makeText(MainActivity.this, "Streaming stopped", Toast.LENGTH_SHORT).show();
                    myCameras[CAMERA1].stopStreamingVideo();
                }
            }
        });

        try {
            udpSocket = new DatagramSocket();
            Log.i(LOG_TAG, "udp socket created");
        } catch (SocketException e) {
            Log.i(LOG_TAG, "udp socket not created");
        }
        try {
            address = InetAddress.getByName(ip_address);
            Log.i(LOG_TAG, "address resolved");
        } catch (Exception e) {
            Log.i(LOG_TAG, "address not resolved: " + e);
        }

        mCameraManager = (CameraManager) getSystemService(Context.CAMERA_SERVICE);
        try {
            // one CameraService per camera on the device
            myCameras = new CameraService[mCameraManager.getCameraIdList().length];
            for (String cameraID : mCameraManager.getCameraIdList()) {
                Log.i(LOG_TAG, "cameraID: " + cameraID);
                int id = Integer.parseInt(cameraID);
                myCameras[id] = new CameraService(mCameraManager, cameraID);
            }
        } catch (CameraAccessException e) {
            Log.e(LOG_TAG, e.getMessage());
            e.printStackTrace();
        }
    }

    public class CameraService {
        private String mCameraID;
        private CameraDevice mCameraDevice = null;
        private CameraCaptureSession mSession;
        private CaptureRequest.Builder mPreviewBuilder;

        public CameraService(CameraManager cameraManager, String cameraID) {
            mCameraManager = cameraManager;
            mCameraID = cameraID;
        }

        private CameraDevice.StateCallback mCameraCallback = new CameraDevice.StateCallback() {
            @Override
            public void onOpened(CameraDevice camera) {
                mCameraDevice = camera;
                Log.i(LOG_TAG, "Open camera with id:" + mCameraDevice.getId());
                startCameraPreviewSession();
            }

            @Override
            public void onDisconnected(CameraDevice camera) {
                mCameraDevice.close();
                Log.i(LOG_TAG, "disconnect camera with id:" + mCameraDevice.getId());
                mCameraDevice = null;
            }

            @Override
            public void onError(CameraDevice camera, int error) {
                Log.i(LOG_TAG, "error! camera id:" + camera.getId() + " error:" + error);
            }
        };

        private void startCameraPreviewSession() {
            SurfaceTexture texture = mImageView.getSurfaceTexture();
            texture.setDefaultBufferSize(320, 240);
            surface = new Surface(texture);
            try {
                mPreviewBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);
                mPreviewBuilder.addTarget(surface);         // on-screen preview
                mPreviewBuilder.addTarget(mEncoderSurface); // encoder input
                mCameraDevice.createCaptureSession(Arrays.asList(surface, mEncoderSurface),
                        new CameraCaptureSession.StateCallback() {
                            @Override
                            public void onConfigured(CameraCaptureSession session) {
                                mSession = session;
                                try {
                                    mSession.setRepeatingRequest(mPreviewBuilder.build(), null, mBackgroundHandler);
                                } catch (CameraAccessException e) {
                                    e.printStackTrace();
                                }
                            }

                            @Override
                            public void onConfigureFailed(CameraCaptureSession session) {
                            }
                        }, mBackgroundHandler);
            } catch (CameraAccessException e) {
                e.printStackTrace();
            }
        }

        public boolean isOpen() {
            return mCameraDevice != null;
        }

        public void openCamera() {
            try {
                if (checkSelfPermission(Manifest.permission.CAMERA) == PackageManager.PERMISSION_GRANTED) {
                    mCameraManager.openCamera(mCameraID, mCameraCallback, mBackgroundHandler);
                }
            } catch (CameraAccessException e) {
                Log.i(LOG_TAG, e.getMessage());
            }
        }

        public void closeCamera() {
            if (mCameraDevice != null) {
                mCameraDevice.close();
                mCameraDevice = null;
            }
        }

        public void stopStreamingVideo() {
            if (mCameraDevice != null && mCodec != null) {
                try {
                    mSession.stopRepeating();
                    mSession.abortCaptures();
                } catch (CameraAccessException e) {
                    e.printStackTrace();
                }
                mCodec.stop();
                mCodec.release();
                mEncoderSurface.release();
                closeCamera();
            }
        }
    }

    private void setUpMediaCodec() {
        try {
            mCodec = MediaCodec.createEncoderByType("video/avc"); // H264 encoder
        } catch (Exception e) {
            Log.i(LOG_TAG, "H264 encoder not available");
        }

        int width = 320;              // frame width, px
        int height = 240;             // frame height, px
        int colorFormat = MediaCodecInfo.CodecCapabilities.COLOR_FormatSurface; // input comes from a Surface
        int videoBitrate = 500000;    // bps (bits per second)
        int videoFramePerSecond = 20; // FPS
        int iframeInterval = 3;       // I-frame interval, seconds

        MediaFormat format = MediaFormat.createVideoFormat("video/avc", width, height);
        format.setInteger(MediaFormat.KEY_COLOR_FORMAT, colorFormat);
        format.setInteger(MediaFormat.KEY_BIT_RATE, videoBitrate);
        format.setInteger(MediaFormat.KEY_FRAME_RATE, videoFramePerSecond);
        format.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, iframeInterval);

        mCodec.setCallback(new EncoderCallback()); // asynchronous mode: set the callback before configure()
        mCodec.configure(format, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE); // configure as an encoder
        mEncoderSurface = mCodec.createInputSurface(); // Surface the camera will draw into
        mCodec.start(); // start encoding
        Log.i(LOG_TAG, "encoder started");
    }

    private class EncoderCallback extends MediaCodec.Callback {
        @Override
        public void onInputBufferAvailable(MediaCodec codec, int index) {
            // not used: the input comes from mEncoderSurface
        }

        @Override
        public void onOutputBufferAvailable(MediaCodec codec, int index, MediaCodec.BufferInfo info) {
            outPutByteBuffer = mCodec.getOutputBuffer(index);
            byte[] outDate = new byte[info.size];
            outPutByteBuffer.get(outDate);
            try {
                DatagramPacket packet = new DatagramPacket(outDate, outDate.length, address, port);
                udpSocket.send(packet); // one encoded buffer per datagram
            } catch (IOException e) {
                Log.i(LOG_TAG, "UDP send failed");
            }
            mCodec.releaseOutputBuffer(index, false);
        }

        @Override
        public void onError(MediaCodec codec, MediaCodec.CodecException e) {
            Log.i(LOG_TAG, "Error: " + e);
        }

        @Override
        public void onOutputFormatChanged(MediaCodec codec, MediaFormat format) {
            Log.i(LOG_TAG, "encoder output format changed: " + format);
        }
    }

    @Override
    public void onPause() {
        if (myCameras[CAMERA1].isOpen()) {
            myCameras[CAMERA1].closeCamera();
        }
        stopBackgroundThread();
        super.onPause();
    }

    @Override
    public void onResume() {
        super.onResume();
        startBackgroundThread();
    }
}
```

I don't know about other phones, but on my Redmi Note 7 you can even watch the kilobytes flowing to the target address.

And you can open as many such UDP sockets as your network bandwidth allows. The main thing is to have addresses to send to. You have your own broadcast.
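Sending to several receivers needs nothing special: one socket can send the same datagram to any number of addresses, and every extra receiver costs another copy of the upload bandwidth. A minimal sketch, with `FanOutSender` and `sendToAll` as hypothetical names and the destination list supplied by the caller:

```java
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetSocketAddress;
import java.util.List;

// Sketch: send every encoded buffer to several receivers at once.
// One socket is enough; each destination simply gets its own copy of the packet.
final class FanOutSender {
    private FanOutSender() {}

    /** Sends data to every destination and returns how many sends succeeded. */
    static int sendToAll(DatagramSocket socket, byte[] data, List<InetSocketAddress> destinations) {
        int sent = 0;
        for (InetSocketAddress destination : destinations) {
            try {
                socket.send(new DatagramPacket(data, data.length, destination));
                sent++;
            } catch (IOException e) {
                // one unreachable receiver should not stop the others
                System.err.println("send to " + destination + " failed: " + e);
            }
        }
        return sent;
    }
}
```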

Now let's go to the computer at that address and look for our stream.

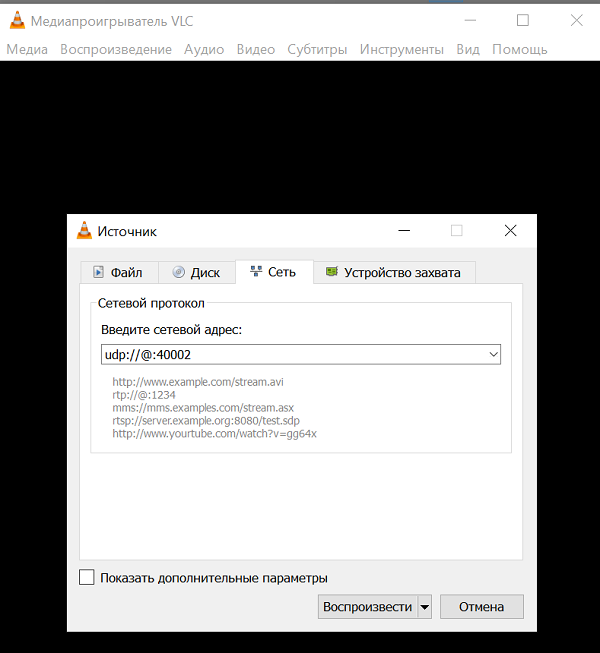

I must say that not every program on a computer can take in and digest an H264 video stream arriving over a single UDP channel without any additional information. But some can. One example is the hugely popular VLC media player. It is such a powerful tool that describing all its capabilities would turn this article into a whole book. You surely have it already; if not, install it.

And judging by its command-line help, this player can handle UDP packets.

```
URL syntax:
  file:///path/file          Plain media file
  http://host[:port]/file    HTTP URL
  ftp://host[:port]/file     FTP URL
  mms://host[:port]/file     MMS URL
  screen://                  Screen capture
  dvd://[device]             DVD device
  vcd://[device]             VCD device
  cdda://[device]            Audio CD device
  udp://[[<source address>]@[<bind address>][:<bind port>]]
```

In theory, the source address and bind address are not needed at all; only the listening port is.
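For a check that does not depend on VLC at all, the receiving side can also be sketched in plain Java: bind a socket to the listening port only (the equivalent of `udp://@:40002`) and append every payload to a file, which can then be opened just like the test3.h264 file from earlier. `UdpDump` and its command-line arguments are hypothetical, and the five-second timeout is an arbitrary way to stop once the phone goes quiet. Appending payloads in arrival order reproduces the H264 stream only as long as no datagram is lost or reordered.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.SocketTimeoutException;

// Sketch of a receiver: listen on one UDP port and append each datagram payload
// to an output stream, e.g. a FileOutputStream for a raw .h264 file.
final class UdpDump {
    private UdpDump() {}

    /** Receives until maxPackets datagrams arrived or the socket times out; returns the packet count. */
    static int dump(DatagramSocket socket, OutputStream out, int maxPackets) throws IOException {
        byte[] buffer = new byte[65_535]; // large enough for any UDP payload
        int received = 0;
        while (received < maxPackets) {
            DatagramPacket packet = new DatagramPacket(buffer, buffer.length);
            try {
                socket.receive(packet);
            } catch (SocketTimeoutException e) {
                break; // the sender went quiet
            }
            out.write(packet.getData(), packet.getOffset(), packet.getLength());
            received++;
        }
        out.flush();
        return received;
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical usage: java UdpDump 40002 capture.h264
        try (DatagramSocket socket = new DatagramSocket(Integer.parseInt(args[0]));
             OutputStream out = new java.io.FileOutputStream(args[1])) {
            socket.setSoTimeout(5000);
            System.out.println(dump(socket, out, Integer.MAX_VALUE) + " packets written");
        }
    }
}
```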

And, of course, don't forget to allow incoming traffic on this port in the firewall.

Did you know that Windows does not let you take a screenshot of the Resource Monitor?

Or you can disable the firewall altogether (I do not recommend it).

So, having made it through these thorns, we launch VLC with our address and enjoy... a blank screen. No video.

How so?

Like this. You probably have the latest version, VLC 3.0.8 Vetinari? That's exactly the problem: in this version, raw UDP input is declared deprecated and, on top of that, it is badly broken.

The developers' logic is understandable. Few people need a bare UDP channel nowadays, because:

- It works well only on an uncongested home network. As soon as you go out into the wider world, the unnumbered datagrams start getting lost and arriving in a different order from the one in which they were sent, which is very unpleasant for a video decoder.

- It is unencrypted and easily compromised.

That is why people normally use higher-level protocols such as RTP. Put simply, you write a server that still uses UDP underneath (for speed), but also exchanges control information with the client it streams video to: what the client's bandwidth is, whether the data buffer should grow or shrink, what level of image detail is optimal right now, and so on. Audio is often needed too, and it, you know, has to be synchronized with the video.
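To make "higher-level protocol" a little more concrete: every RTP packet (RFC 3550) starts with a 12-byte header. It carries a sequence number, which lets the receiver detect lost and reordered datagrams, and a timestamp, which lets it pace playback and synchronize it with audio. The sketch below only builds that header; it is not an RTP server, and `RtpPacket` is a hypothetical helper. Payload type 96 (a dynamic type) and the 90 kHz clock are the values commonly used for H264 video, taken here as assumptions. A real H264-over-RTP stream (RFC 6184) additionally needs NAL-unit packetization such as FU-A fragmentation, which is omitted.

```java
import java.nio.ByteBuffer;

// Sketch: prepend the 12-byte fixed RTP header (RFC 3550) to a payload.
final class RtpPacket {
    static final int HEADER_SIZE = 12;

    private RtpPacket() {}

    static byte[] build(int sequenceNumber, long timestamp90kHz, int ssrc,
                        int payloadType, boolean marker, byte[] payload) {
        ByteBuffer buf = ByteBuffer.allocate(HEADER_SIZE + payload.length); // big-endian by default
        buf.put((byte) 0x80);                                            // V=2, P=0, X=0, CC=0
        buf.put((byte) ((marker ? 0x80 : 0) | (payloadType & 0x7F)));    // M bit + payload type
        buf.putShort((short) sequenceNumber);                            // 16 bits, wraps at 65536
        buf.putInt((int) timestamp90kHz);                                // 32 bits, wraps at 2^32
        buf.putInt(ssrc);                                                // identifies the stream source
        buf.put(payload);
        return buf.array();
    }

    /** Converts microseconds (as in MediaCodec.BufferInfo) to the 90 kHz RTP video clock. */
    static long toRtpTimestamp(long presentationTimeUs) {
        return presentationTimeUs * 90 / 1000;
    }

    /** Reads the sequence number back from a packet built above. */
    static int sequenceNumber(byte[] packet) {
        return ((packet[2] & 0xFF) << 8) | (packet[3] & 0xFF);
    }
}
```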

The Odnoklassniki team even had to build their own streaming protocol. But their task is, of course, far more important: delivering cat videos to tens of millions of housewives around the world. A single UDP channel won't do there.

But writing our own RTP server for Android is a rather depressing prospect. You can probably find a ready-made one, maybe even a free one, but let's not multiply entities for now. Let's simply take a version of VLC in which UDP streaming still worked.

So, download VLC 2.2.6 Umbrella from here.

Install it instead of, or next to, the old one (that is, the new VLC), as you prefer.

We launch it and again see a blank screen.

This is because we have not explicitly told VLC to use the H264 codec. When VLC deals with a file, it can pick the codec automatically (automatic selection is the default in the settings). But here it just receives a bare byte stream over a single channel, and VLC supports dozens of codecs. How is it supposed to figure out which one to use?

So we set the codec by force in the settings.

And now we enjoy a "live" video broadcast. The only catch is that the picture lies on its side, most likely because the phone camera's sensor is mounted in landscape orientation and we do not compensate for that. This is easy to fix in the player settings.

Alternatively, you can simply start the player from the command line with these options:

```
"C:\Program Files\VideoLAN\VLC\vlc.exe" udp://@:40002 --demux h264 --video-filter=transform --transform-type=90
```

VLC will then decode and rotate the video by itself.

So streaming works. All that remains is to integrate it into the Java window of the robot control application, which we will do very soon in the final part.