Hello!

It so happened that our small development team, not exactly recently and certainly not overnight, matured to the point of migrating some (and, in the long run, all) of its products to Kubernetes.

There were many reasons for this, but this story is not about holy wars.

On the infrastructure side we had little choice: vCloud Director or vCloud Director. We picked the newer version and got started.

Leafing through “Kubernetes The Hard Way” once more, I quickly concluded that a tool for automating at least the basic processes, such as deployment and sizing, was needed yesterday. Some deep digging in Google turned up VMware Container Service Extension (CSE), an open-source product that automates the creation and sizing of k8s clusters for those of us in vCloud.

Disclaimer: CSE has its limitations, but for our purposes it fit perfectly. Also, the solution must be supported by the cloud provider, but since the server part is open source as well, ask your nearest manager for it :)

CSE Client Installation

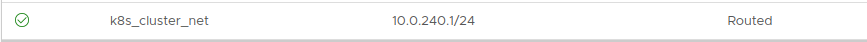

- To get started, you need an administrator account in the vCloud organization and a routed network for the cluster, created in advance. Important: the deployment process needs Internet access from this network, so do not forget to configure Firewall/NAT.

Addressing does not matter. This example uses 10.0.240.0/24.

Since you will need to manage the cluster somehow once it is created, it is a good idea to have a VPN with routing to this network. We use the standard SSL VPN configured on our organization’s Edge Gateway.

- Next, install the CSE client on the machine from which the k8s clusters will be managed. In my case, this is a work laptop and a couple of well-hidden containers that drive the automation.

The client requires Python 3.7.3 or later and the vcd-cli module, so we install vcd-cli along with the client itself.

    pip3 install vcd-cli
    pip3 install container-service-extension
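Before running pip, it can be worth confirming that the interpreter actually meets the 3.7.3 minimum. Below is a minimal sketch, assuming `sort -V` (version sort from GNU coreutils) is available. The helper name `version_ge` is made up for this illustration and is not part of vcd-cli or CSE.

```shell
#!/bin/sh
# Hypothetical helper (not part of vcd-cli/CSE): succeeds when $1 >= $2.
# Assumes `sort -V` (GNU coreutils version sort) is available.
version_ge() {
    # After a version sort, the smaller version comes first; if that is
    # the required one, the current version is at least as new.
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

required="3.7.3"
# Ask the interpreter for its own version; empty if python3 is missing.
current="$(python3 -c 'import platform; print(platform.python_version())' 2>/dev/null || true)"

if [ -n "$current" ] && version_ge "$current" "$required"; then
    echo "Python $current is new enough for the CSE client"
else
    echo "Python >= $required is required, found: ${current:-none}" >&2
fi
```

A plain string comparison would get versions such as 3.10 wrong, which is why the sketch leans on version sort.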

- After installation, we check the CSE version and get the following:

    # vcd cse version
    Error: No such command "cse".

Unexpected, but fixable.

- As it turned out, CSE has to be attached to vcd-cli as an extension module.

To do this, first log in to our organization with vcd-cli:

    # vcd login MyCloud.provider.com org-dev admin
    Password:
    admin logged in, org: 'org-dev', vdc: 'org-dev_vDC01'

- After that, vcd-cli creates the configuration file ~/.vcd-cli/profiles.yaml

Add the following at the end of it:

    extensions:
    - container_service_extension.client.cse
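If the client is installed on several machines (or in containers), this edit is easy to script. Below is a minimal sketch of an idempotent append. The function name `enable_cse_extension` is hypothetical, and the sketch assumes the profile file does not already contain another top-level `extensions:` key (if it does, edit the file by hand instead).

```shell
#!/bin/sh
# Hypothetical helper: append the CSE extension entry to a vcd-cli profile
# file unless it is already listed there.
# Assumption: the file has no other top-level "extensions:" key.
enable_cse_extension() {
    profile="$1"
    entry="container_service_extension.client.cse"
    # -F treats the entry as a fixed string, so the dots are not wildcards.
    if grep -qF -- "$entry" "$profile" 2>/dev/null; then
        echo "already enabled in $profile"
        return 0
    fi
    # Make sure the appended block starts on its own line.
    if [ -s "$profile" ] && [ -n "$(tail -c 1 "$profile")" ]; then
        echo >> "$profile"
    fi
    printf 'extensions:\n- %s\n' "$entry" >> "$profile"
    echo "enabled in $profile"
}

# Usage (path taken from the step above):
# enable_cse_extension ~/.vcd-cli/profiles.yaml
```

Running it twice leaves a single entry, so it is safe to keep in a provisioning script.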

- Then we check again:

    # vcd cse version
    CSE, Container Service Extension for VMware vCloud Director, version 2.5.0

The client installation phase is complete. Let's try to deploy the first cluster.

Cluster Deployment

CSE has several sets of usage parameters, all of which are listed in the CSE documentation.

- First, create the keys for passwordless access to the future cluster. This matters because password login on the nodes is disabled by default, and if you do not supply keys you are in for a lot of work through the virtual machine console, which is hardly convenient.

    # ssh-keygen
    Generating public/private rsa key pair.
    Enter file in which to save the key (/root/.ssh/id_rsa):
    Created directory '/root/.ssh'.
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /root/.ssh/id_rsa.
    Your public key has been saved in /root/.ssh/id_rsa.pub.

- We try to start creating a cluster:

    vcd cse cluster create MyCluster --network k8s_cluster_net --ssh-key ~/.ssh/id_rsa.pub --nodes 3 --enable-nfs

- If we get "Error: Session has expired or user not logged in. Please re-login.", we log in to vCloud with vcd-cli again, as described above, and retry.
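For automation, the "log in again and retry" step can be wrapped in a small function. This is only a sketch: `run_with_relogin` and the `LOGIN_CMD` variable are names invented here, and the only thing taken from CSE is the error text quoted above. Note that `vcd login` asks for the password interactively, so a fully unattended setup would need its own way of supplying credentials.

```shell
#!/bin/sh
# Hypothetical wrapper: run a command; if its output contains the
# "Session has expired" message, run $LOGIN_CMD and retry once.
# LOGIN_CMD is an assumption of this sketch, e.g.:
#   LOGIN_CMD="vcd login MyCloud.provider.com org-dev admin"
run_with_relogin() {
    output="$("$@" 2>&1)" && rc=0 || rc=$?
    if printf '%s\n' "$output" | grep -q "Session has expired"; then
        # Re-authenticate, then repeat the original command once.
        $LOGIN_CMD || return 1
        output="$("$@" 2>&1)" && rc=0 || rc=$?
    fi
    printf '%s\n' "$output"
    return "$rc"
}

# Usage:
# run_with_relogin vcd cse cluster info MyCluster
```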

This time, everything is fine, and the task of creating a cluster has started.

    cluster operation: Creating cluster vApp 'MyCluster' (38959587-54f4-4a49-8f2e-61c3a3e879e0) from template 'photon-v2_k8-1.12_weave-2.3.0' (revision 1)

- The task takes about 20 minutes to complete. In the meantime, let's go over the main launch parameters.

- --network - the network we created earlier.

- --ssh-key - the public key we created, which will be written to the cluster nodes.

- --nodes n - the number of worker nodes in the cluster. There is always exactly one master; this is a CSE limitation.

- --enable-nfs - create an additional node for NFS shares to back persistent volumes. It is a somewhat hands-on option; we will come back to fine-tuning what it does a little later.
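Since a failed run costs a good twenty minutes, a quick pre-flight check of the inputs does not hurt. The sketch below uses only the flags described above. The function `cse_create_cmd` is hypothetical, and it prints the command for review rather than executing it.

```shell
#!/bin/sh
# Hypothetical helper: validate the inputs, then print (not run) the
# create command with the flags discussed above.
# Arguments: cluster name, network name, public key path, worker count.
cse_create_cmd() {
    name="$1"; network="$2"; key="$3"; nodes="$4"
    # Without a readable public key the nodes end up with no usable login.
    [ -r "$key" ] || { echo "public key not readable: $key" >&2; return 1; }
    # Accept only a non-empty string of digits as the worker count.
    case "$nodes" in
        ''|*[!0-9]*) echo "node count must be a number: $nodes" >&2; return 1 ;;
    esac
    echo "vcd cse cluster create $name --network $network --ssh-key $key --nodes $nodes --enable-nfs"
}

# Usage:
# cse_create_cmd MyCluster k8s_cluster_net ~/.ssh/id_rsa.pub 3
```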

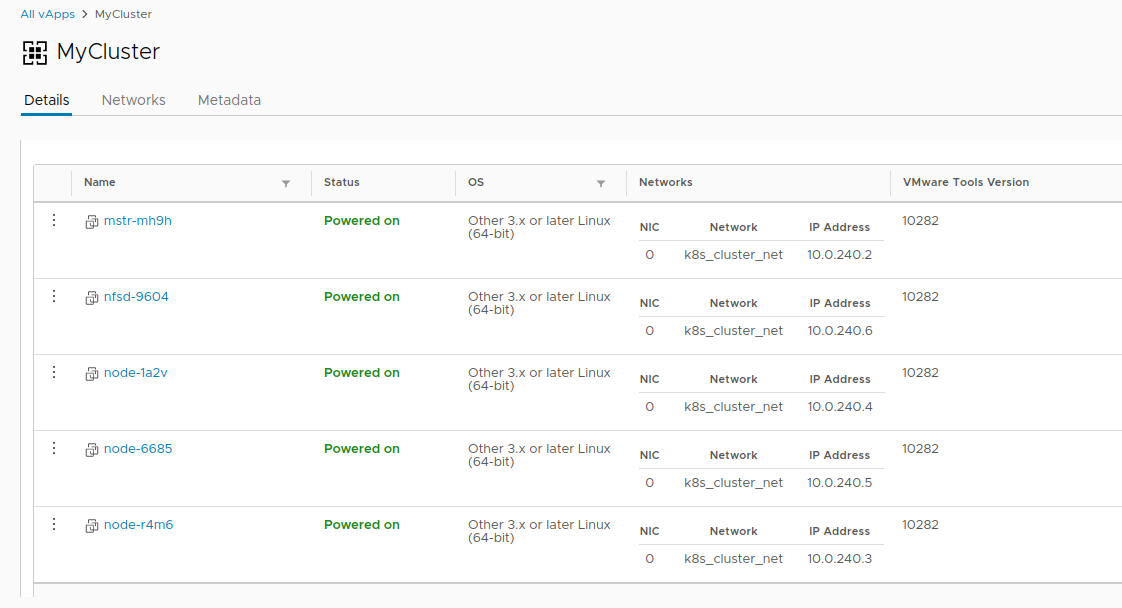

- Meanwhile, in vCloud, you can watch the cluster being created.

- Once the creation task completes, the cluster is ready to use.

- Verify the deployment with the vcd cse cluster info MyCluster command.

- Next, we need to get the cluster configuration in order to use kubectl.

    # vcd cse cluster config MyCluster > ~/.kube/config
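A plain redirect fails if the .kube directory does not exist yet, and silently overwrites any configuration that is already there. A slightly more careful variant might look like the sketch below. The function `install_kubeconfig` is a name made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: read a kubeconfig on stdin and install it at the
# path given as $1, keeping a backup of any existing file.
install_kubeconfig() {
    target="$1"
    # Create ~/.kube (or whatever the parent directory is) if missing.
    mkdir -p "$(dirname "$target")"
    if [ -f "$target" ]; then
        cp "$target" "$target.bak"
    fi
    cat > "$target"
    # The file holds cluster credentials, so keep it private.
    chmod 600 "$target"
}

# Usage, with the command from the step above:
# vcd cse cluster config MyCluster | install_kubeconfig ~/.kube/config
```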

- With that configuration in place, you can check the status of the cluster using kubectl.
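One common way to do that check is to list the nodes and make sure all of them report Ready. The sketch below assumes the usual column layout of `kubectl get nodes` (name first, status second). The helper `count_not_ready` is hypothetical, and it deliberately counts cordoned nodes ("Ready,SchedulingDisabled") as not ready.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get nodes --no-headers` output on
# stdin and print how many nodes are not exactly in the "Ready" state.
# Assumption: the status is the second whitespace-separated column.
count_not_ready() {
    awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Usage (requires the kubeconfig from the previous step):
# kubectl get nodes --no-headers | count_not_ready
```

A result of 0 means every node, including the NFS one, has joined and is ready.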

Not so simple

At this point the cluster could be considered operational, were it not for persistent volumes. Since we are in vCloud, the vSphere provider will not work. The --enable-nfs option is meant to smooth over this problem, but it does not quite finish the job, so some manual tuning is required.

- To start, we create a separate Independent Disk in vCloud for our NFS node. This ensures that our data does not disappear along with the cluster if it is deleted. We also attach the disk to the NFS node.

    # vcd disk create nfs-shares-1 100g --description 'Kubernetes NFS shares'
    # vcd vapp attach MyCluster nfsd-9604 nfs-shares-1

- After that, ssh (you did create the keys, right?) to our NFS node and finally set up the disk:

    root@nfsd-9604:~# parted /dev/sdb
    (parted) mklabel gpt
    Warning: The existing disk label on /dev/sdb will be destroyed and all data on this disk will be lost. Do you want to continue?
    Yes/No? yes
    (parted) unit GB
    (parted) mkpart primary 0 100
    (parted) print
    Model: VMware Virtual disk (scsi)
    Disk /dev/sdb: 100GB
    Sector size (logical/physical): 512B/512B
    Partition Table: gpt
    Disk Flags:
    Number  Start   End    Size   File system  Name     Flags
     1      0.00GB  100GB  100GB               primary
    (parted) quit
    root@nfsd-9604:~# mkfs -t ext4 /dev/sdb1
    Creating filesystem with 24413696 4k blocks and 6111232 inodes
    Filesystem UUID: 8622c0f5-4044-4ebf-95a5-0372256b34f0
    Superblock backups stored on blocks:
        32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,
        4096000, 7962624, 11239424, 20480000, 23887872
    Allocating group tables: done
    Writing inode tables: done
    Creating journal (32768 blocks): done
    Writing superblocks and filesystem accounting information: done

- Create a directory for the data and mount the new partition there:

    mkdir /export
    echo '/dev/sdb1 /export ext4 defaults 0 0' >> /etc/fstab
    mount -a

- We create five test directories and export them to the cluster:

    > cd /export
    > mkdir vol1 vol2 vol3 vol4 vol5
    > vi /etc/exports
    # add the following lines to the file
    /export/vol1 *(rw,sync,no_root_squash,no_subtree_check)
    /export/vol2 *(rw,sync,no_root_squash,no_subtree_check)
    /export/vol3 *(rw,sync,no_root_squash,no_subtree_check)
    /export/vol4 *(rw,sync,no_root_squash,no_subtree_check)
    /export/vol5 *(rw,sync,no_root_squash,no_subtree_check)
    # :wq! ;)
    # then export the shares
    > exportfs -r
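With more than a handful of volumes, typing these lines in vi gets tedious. The export entries follow a simple pattern, so they can be generated. This is a sketch only: `gen_exports` is a hypothetical helper, and it assumes the vol1..volN naming used in this example.

```shell
#!/bin/sh
# Hypothetical helper: print one /etc/exports line per volume directory
# (vol1..volN under a base path), using the export options shown above.
# Arguments: base path, number of volumes.
gen_exports() {
    base="$1"; count="$2"
    i=1
    while [ "$i" -le "$count" ]; do
        printf '%s/vol%d *(rw,sync,no_root_squash,no_subtree_check)\n' "$base" "$i"
        i=$((i + 1))
    done
}

# Usage on the NFS node (appends the entries, then re-exports):
# gen_exports /export 5 >> /etc/exports && exportfs -r
```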

- After all this magic, you can create PV and PVC in our cluster like this:

PV

    cat <<EOF | kubectl apply -f -
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-vol1
    spec:
      capacity:
        storage: 10Gi
      accessModes:
        - ReadWriteMany
      nfs:
        # Same IP as the NFS host we ssh'ed to earlier.
        server: 10.150.200.22
        path: "/export/vol1"
    EOF

PVC

    cat <<EOF | kubectl apply -f -
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nfs-pvc
    spec:
      accessModes:
        - ReadWriteMany
      storageClassName: ""
      resources:
        requests:
          storage: 10Gi
    EOF
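The PV above covers only the first export. To register all five test shares, the same manifest can be stamped out in a loop. Below is a rough sketch, with the hypothetical helper `gen_nfs_pv` and the nfs-volN naming assumed for illustration. The server address is whatever IP your NFS node actually has.

```shell
#!/bin/sh
# Hypothetical helper: print a PersistentVolume manifest for one NFS export.
# Arguments: PV name, NFS server address, exported path, capacity.
gen_nfs_pv() {
    cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: $1
spec:
  capacity:
    storage: $4
  accessModes:
    - ReadWriteMany
  nfs:
    server: $2
    path: "$3"
EOF
}

# Usage: one PV per test export, each as its own YAML document,
# applied in a single kubectl call.
# for i in 1 2 3 4 5; do
#     echo "---"
#     gen_nfs_pv "nfs-vol$i" 10.150.200.22 "/export/vol$i" 10Gi
# done | kubectl apply -f -
```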

This ends the story of creating one cluster and begins the story of its life cycle. As a bonus, here are two more useful CSE commands for scaling the cluster up or down, which can save resources from time to time:

    # grow the cluster to 8 worker nodes
    > vcd cse cluster resize MyCluster --network k8s_cluster_net --nodes 8
    # remove unneeded nodes from the cluster
    > vcd cse node delete MyCluster node-1a2v node-6685 --yes

Thank you all for your time. If you have questions, ask in the comments.