Preparing the application for Istio

Istio is a convenient tool for connecting, securing, and monitoring distributed applications. Istio relies on several technologies to run and manage software at scale, including containers, which package application code and dependencies for deployment, and Kubernetes, which manages those containers. To work with Istio, you therefore need to understand how an application made up of several services runs on these technologies without Istio. If you already know these tools and concepts, feel free to skip this guide and go directly to Installing Istio on Google Kubernetes Engine (GKE) or to installing the Istio on GKE add-on.

This step-by-step guide walks through the whole process, from source code to a container running on GKE, so that you get a basic understanding of these technologies from a working example. You will also see how Istio builds on them. The guide assumes no prior knowledge of containers, Kubernetes, service meshes, or Istio.

Tasks

In this guide, you will complete the following tasks:

- Explore a simple hello world application with multiple services.

- Run the application from source code.

- Package the application in containers.

- Create a Kubernetes cluster.

- Deploy the containers to the cluster.

Before you start

Follow the instructions to enable the Kubernetes Engine API:

- Go to the Kubernetes Engine page in the Google Cloud Platform console.

- Create or select a project.

- Wait for the API and related services to be enabled. This may take several minutes.

- Make sure billing is configured for the Google Cloud Platform project. Learn how to enable billing.

For this guide, you can use either Cloud Shell, which provisions a g1-small Google Compute Engine virtual machine running Debian-based Linux, or your own Linux or macOS computer.

Option A: Using Cloud Shell

Benefits of using Cloud Shell:

- The Python 2 and Python 3 development environments (including virtualenv) are already set up.

- The gcloud, docker, git, and kubectl command-line tools that we will use are already installed.

- You have several text editors to choose from:

  - The code editor, which opens when you click the edit icon at the top of the Cloud Shell window.

  - Emacs, Vim, or Nano, which you open from the command line in Cloud Shell.

To use Cloud Shell:

- Go to the GCP console.

- Click the Activate Cloud Shell button at the top of the GCP console window.

A Cloud Shell session with a command-line prompt opens in a new frame at the bottom of the GCP console.

Option B: Using command-line tools locally

If you work on a Linux or macOS computer, set up and install the following components:

- Set up your Python 3 and Python 2 development environment.

- Install the Cloud SDK with the gcloud command-line tool.

- Install kubectl, a command-line tool for working with Kubernetes:

gcloud components install kubectl

- Install Docker Community Edition (CE). You will use the docker command-line tool to create container images for the sample application.

- Install the Git version control tool to get the sample application from GitHub.

Download sample code

Download the helloserver source code:

git clone https://github.com/GoogleCloudPlatform/istio-samples

Go to the sample code directory:

cd istio-samples/sample-apps/helloserver

Exploring a multi-service application

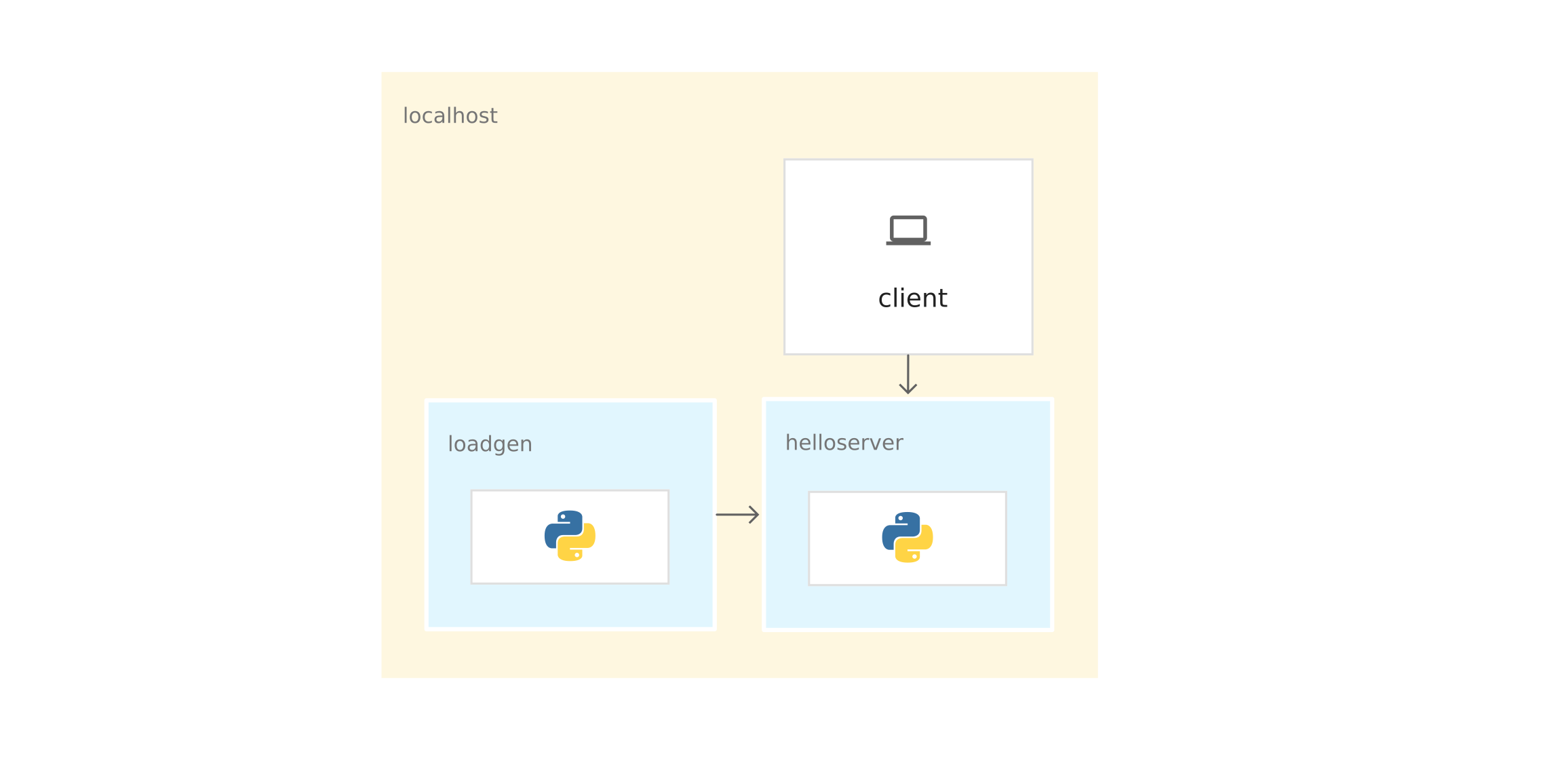

The sample application is written in Python and consists of two components that communicate over REST:

- server: a simple server with one GET endpoint, /, which prints "hello world" to the console.

- loadgen: a script that sends traffic to server, with a configurable number of requests per second.
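To make the server component concrete, here is a minimal sketch of a server with a single GET endpoint, built on Python's standard library. It is not the repository's server.py; the class name HelloHandler and the helper fetch_once are illustrative assumptions, and the helper binds to a free local port rather than 8080 so that it can run anywhere.

```python
# Sketch only: a stand-in for the sample's server, not the repository's server.py.
import http.client
import logging
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET with a plain-text greeting."""

    def do_GET(self):
        logging.info("GET request, Path: %s", self.path)
        body = b"Hello World!\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        # Suppress the default access-log line so the demo output stays short.
        pass


def fetch_once() -> str:
    """Start the server on a free local port, send one GET, return the body."""
    server = HTTPServer(("127.0.0.1", 0), HelloHandler)  # port 0: let the OS choose
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
        conn.request("GET", "/")
        body = conn.getresponse().read().decode().strip()
        conn.close()
        return body
    finally:
        server.shutdown()
        server.server_close()


print(fetch_once())
```

The helper starts and stops the server around a single request, which keeps the sketch self-contained; a real server would simply call serve_forever on a fixed port.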

Running an application from source

To get familiar with the sample application, run it in Cloud Shell or on your computer.

1) In the istio-samples/sample-apps/helloserver directory, start server:

python3 server/server.py

When server starts, the following is displayed:

INFO:root:Starting server...

2) Open another terminal window to send requests to server. If you are using Cloud Shell, click the add icon to open another session.

3) Send a request to server:

curl http://localhost:8080

server responds:

Hello World!

4) From the directory where you downloaded the sample code, go to the directory that contains loadgen :

cd YOUR_WORKING_DIRECTORY/istio-samples/sample-apps/helloserver/loadgen

5) Create the following environment variables:

    export SERVER_ADDR=http://localhost:8080
    export REQUESTS_PER_SECOND=5

6) Create a virtual environment with virtualenv:

virtualenv --python python3 env

7) Activate the virtual environment:

source env/bin/activate

8) Install the requirements for loadgen:

pip3 install -r requirements.txt

9) Run loadgen :

python3 loadgen.py

When started, loadgen displays the following message:

    Starting loadgen: 2019-05-20 10:44:12.448415
    5 request(s) complete to http://localhost:8080

In the other terminal window, server displays the following messages on the console:

    127.0.0.1 - - [21/Jun/2019 14:22:01] "GET / HTTP/1.1" 200 -
    INFO:root:GET request,
    Path: /
    Headers:
    Host: localhost:8080
    User-Agent: python-requests/2.22.0
    Accept-Encoding: gzip, deflate
    Accept: */*

From a network perspective, the entire application runs on the same host (local computer or Cloud Shell virtual machine). Therefore, you can use localhost to send requests to the server .

10) To stop loadgen and server, press Ctrl-C in each terminal window.

11) In the loadgen terminal window, deactivate the virtual environment:

deactivate
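The steps above configure loadgen through two environment variables. The following sketch shows one way a load generator could read those variables and pace its requests. It is not the repository's loadgen.py: the function names load_config and run_for are hypothetical, and the send and sleep callbacks are injected so the pacing logic can be exercised without a network.

```python
# Sketch only: illustrates env-driven configuration, not the actual loadgen.py.
import os
import time
from typing import Callable, Mapping, Tuple


def load_config(env: Mapping[str, str] = os.environ) -> Tuple[str, float]:
    """Read the two variables the guide exports; fail early if one is missing."""
    addr = env["SERVER_ADDR"]
    rps = float(env["REQUESTS_PER_SECOND"])
    if rps <= 0:
        raise ValueError("REQUESTS_PER_SECOND must be positive")
    return addr, rps


def run_for(seconds: float, rps: float, send: Callable[[], None],
            sleep: Callable[[float], None] = time.sleep) -> int:
    """Call send() roughly rps times per second for the given duration."""
    interval = 1.0 / rps
    total = round(seconds * rps)
    for _ in range(total):
        send()
        sleep(interval)
    return total


# Usage with a fake sender and a no-op sleep, so the example finishes instantly.
sent = []
addr, rps = load_config({"SERVER_ADDR": "http://localhost:8080",
                         "REQUESTS_PER_SECOND": "5"})
count = run_for(2, rps, send=lambda: sent.append(addr), sleep=lambda _: None)
print(f"{count} request(s) planned for {addr}")
```

Passing the environment as a mapping keeps the function easy to test; in a real script you would call load_config() with no arguments so that it reads os.environ.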

Packing the application in containers

To run the application on GKE, you need to package both parts of the sample application, server and loadgen, into containers. A container is a way to package an application so that it is isolated from its environment.

To package an application into a container, you need a Dockerfile. A Dockerfile is a text file that defines the commands for building the application's source code and its dependencies into a Docker image. After the build, you push the image to a container registry, such as Docker Hub or Container Registry.

The example already includes a Dockerfile for server and one for loadgen, with all the commands needed to build the images. Below is the Dockerfile for server:

    FROM python:3-slim as base
    FROM base as builder
    RUN apt-get -qq update \
        && apt-get install -y --no-install-recommends \
            g++ \
        && rm -rf /var/lib/apt/lists/*

    # Enable unbuffered logging
    FROM base as final
    ENV PYTHONUNBUFFERED=1

    RUN apt-get -qq update \
        && apt-get install -y --no-install-recommends \
            wget

    WORKDIR /helloserver

    # Grab packages from builder
    COPY --from=builder /usr/local/lib/python3.7/ /usr/local/lib/python3.7/

    # Add the application
    COPY . .

    EXPOSE 8080
    ENTRYPOINT [ "python", "server.py" ]

- The FROM python:3-slim as base command tells Docker to use the latest Python 3 image as the base image.

- The COPY . . command copies the source files from the current working directory (in our case, only server.py) into the container's file system.

- ENTRYPOINT defines the command that is used to start the container. In our case, this command is almost the same as the one you used to run server.py from the source code.

- The EXPOSE command indicates that server listens on port 8080. This command does not publish the port. It serves as documentation that port 8080 needs to be opened when the container starts.

Preparing for application containerization

1) Set the following environment variables. Replace PROJECT_ID with your GCP project identifier.

export PROJECT_ID="PROJECT_ID"

export GCR_REPO="preparing-istio"

You use the PROJECT_ID and GCR_REPO values to tag the Docker image when you build it and push it to your private Container Registry.

2) Set the default GCP project for the gcloud command-line tool.

gcloud config set project $PROJECT_ID

3) Set the default zone for the gcloud command-line tool.

gcloud config set compute/zone us-central1-b

4) Make sure that the Container Registry service is enabled in the GCP project.

gcloud services enable containerregistry.googleapis.com

Containerizing server

1) Go to the directory where the server example is located:

cd YOUR_WORKING_DIRECTORY/istio-samples/sample-apps/helloserver/server/

2) Build the image using the Dockerfile and the environment variables that you defined earlier:

docker build -t gcr.io/$PROJECT_ID/$GCR_REPO/helloserver:v0.0.1 .

The -t option sets the Docker tag. This is the name of the image that you use when you deploy the container.

3) Push the image to Container Registry:

docker push gcr.io/$PROJECT_ID/$GCR_REPO/helloserver:v0.0.1

Containerizing loadgen

1) Go to the directory where the loadgen example is located:

cd ../loadgen

2) Build the image:

docker build -t gcr.io/$PROJECT_ID/$GCR_REPO/loadgen:v0.0.1 .

3) Push the image to Container Registry:

docker push gcr.io/$PROJECT_ID/$GCR_REPO/loadgen:v0.0.1
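The image names used in the build and push commands all follow the same pattern. As a small illustration, the sketch below assembles such a name from its parts and rejects empty values, which is what you would get if PROJECT_ID or GCR_REPO were not exported in the current shell. The function name image_ref and the project ID my-project are hypothetical.

```python
# Sketch only: mirrors the gcr.io/$PROJECT_ID/$GCR_REPO/NAME:TAG pattern used above.
def image_ref(project_id: str, repo: str, name: str, tag: str) -> str:
    """Build a Container Registry image reference, refusing empty parts."""
    parts = {"project_id": project_id, "repo": repo, "name": name, "tag": tag}
    empty = [key for key, value in parts.items() if not value]
    if empty:
        raise ValueError(f"empty value for: {', '.join(empty)}")
    return f"gcr.io/{project_id}/{repo}/{name}:{tag}"


# "my-project" is a placeholder project ID.
print(image_ref("my-project", "preparing-istio", "helloserver", "v0.0.1"))
print(image_ref("my-project", "preparing-istio", "loadgen", "v0.0.1"))
```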

View a list of images

List the images in the repository and make sure that both images were pushed:

gcloud container images list --repository gcr.io/$PROJECT_ID/preparing-istio

The command displays the names of the images that you just pushed:

    NAME
    gcr.io/PROJECT_ID/preparing-istio/helloserver
    gcr.io/PROJECT_ID/preparing-istio/loadgen

Creating a GKE Cluster

You could run these containers on the Cloud Shell virtual machine or on your computer with the docker run command. In a production environment, however, you need a way to orchestrate containers centrally. For example, you need a system that makes sure containers keep running, and a way to scale up and start additional container instances when traffic increases.

You can use GKE to run containerized applications. GKE is a container orchestration platform that groups virtual machines into a cluster. Each virtual machine is called a node. GKE clusters are based on Kubernetes, an open source cluster management system. Kubernetes provides the mechanisms for interacting with the cluster.

To create a GKE cluster:

1) Create a cluster:

    gcloud container clusters create istioready \
        --cluster-version latest \
        --machine-type=n1-standard-2 \
        --num-nodes 4

The gcloud command creates a cluster named istioready in the GCP project and default zone that you specified. To run Istio, we recommend at least 4 nodes and the n1-standard-2 machine type.

The command takes several minutes to create the cluster. When the cluster is ready, the command reports this in its output.

2) Provide credentials to the kubectl command-line tool so that you can use it to manage the cluster:

gcloud container clusters get-credentials istioready

3) Now you can communicate with Kubernetes through kubectl. For example, the following command shows the status of the nodes:

kubectl get nodes

The command displays a list of nodes:

    NAME                                        STATUS   ROLES    AGE    VERSION
    gke-istioready-default-pool-dbeb23dc-1vg0   Ready    <none>   99s    v1.13.6-gke.13
    gke-istioready-default-pool-dbeb23dc-36z5   Ready    <none>   100s   v1.13.6-gke.13
    gke-istioready-default-pool-dbeb23dc-fj7s   Ready    <none>   99s    v1.13.6-gke.13
    gke-istioready-default-pool-dbeb23dc-wbjw   Ready    <none>   99s    v1.13.6-gke.13

Key Concepts of Kubernetes

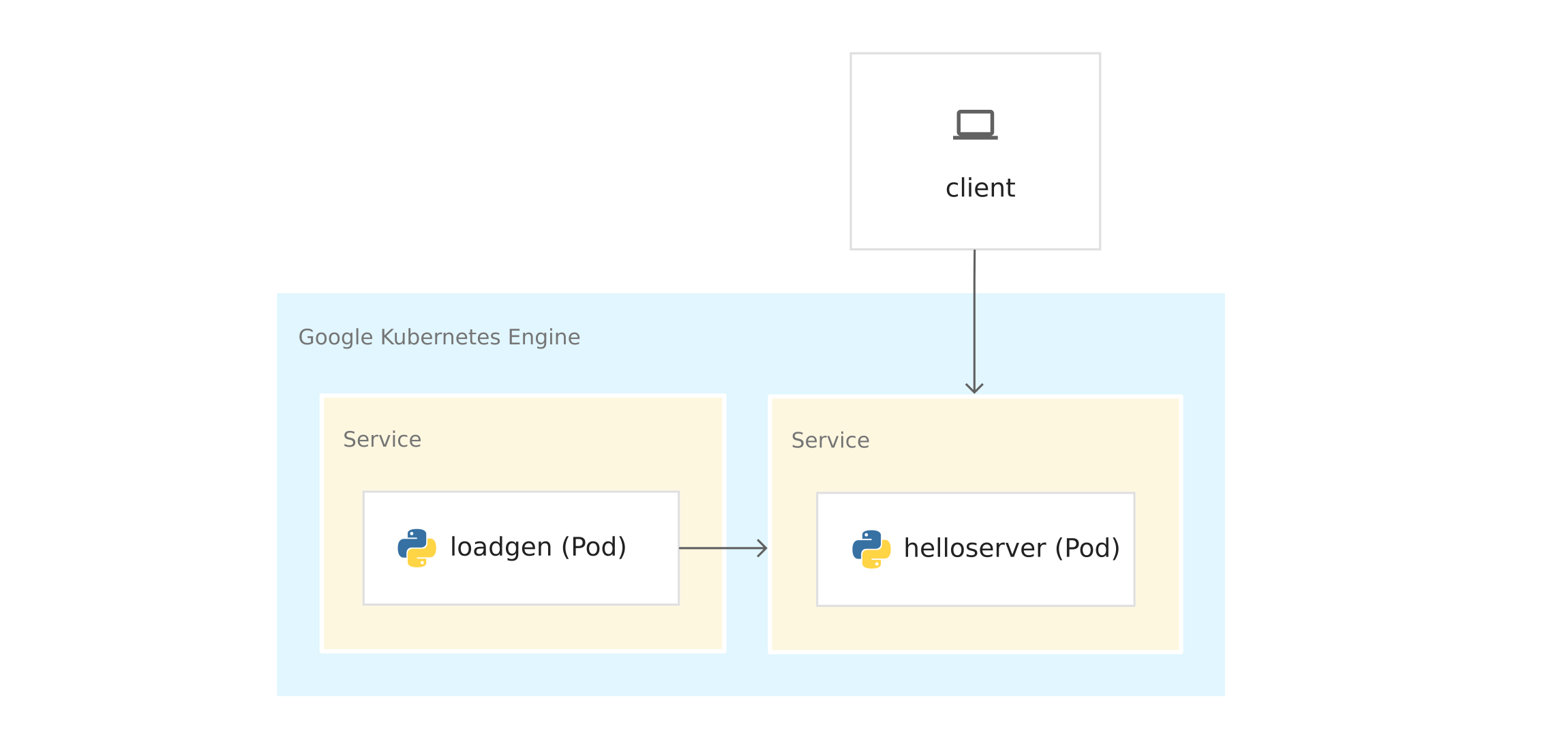

The diagram shows the application on GKE:

Before you deploy containers to GKE, look at the key concepts of Kubernetes. There are links at the very end if you want to know more.

- Nodes and clusters. In GKE, a node is a virtual machine. On other Kubernetes platforms, a node can be a physical computer or a virtual machine. A cluster is a set of nodes that can be treated as a single unit and to which you deploy a containerized application.

- Pods. In Kubernetes, containers run in pods. A pod is the smallest deployable unit in Kubernetes and holds one or more containers. You deploy the server and loadgen containers in separate pods. When a pod has several containers (for example, an application server and a proxy server), the containers are managed as a single object and share the pod's resources.

- Deployment. In Kubernetes, a deployment is an object that represents a set of identical pods. A deployment runs several replicas of a pod, distributed across the nodes of the cluster, and automatically replaces pods that fail or stop responding.

- Kubernetes service. When you run application code in GKE, the connection between loadgen and server changes. When you ran the services on the Cloud Shell virtual machine or on your computer, you sent requests to server at localhost:8080. After deployment to GKE, pods run on whichever nodes are available. By default, you cannot control which node a pod runs on, so pods do not have stable IP addresses.

To get a stable IP address for server, you need to define a network abstraction on top of the pods. This abstraction is the Kubernetes service. A Kubernetes service provides a stable endpoint for a set of pods. There are several types of services. server uses the LoadBalancer type, which provides an external IP address for reaching server from outside the cluster.

Kubernetes also has a built-in DNS system that assigns DNS names (for example, hellosvc.default.svc.cluster.local) to services. Thanks to this, pods within the cluster can reach other pods in the cluster at a fixed address. The DNS name cannot be used outside the cluster, such as from Cloud Shell or from your computer.
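As a quick illustration of how cluster DNS names are put together, the sketch below builds a service's fully qualified name. It assumes the conventional SERVICE.NAMESPACE.svc.CLUSTER_DOMAIN layout and the default cluster domain cluster.local; the function name service_dns_name is hypothetical.

```python
# Sketch only: assumes the conventional <service>.<namespace>.svc.<domain> layout.
def service_dns_name(service: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Return the fully qualified in-cluster DNS name of a service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


print(service_dns_name("hellosvc"))
print(service_dns_name("loadgensvc"))
```

Pods in the same namespace can usually use the short name (hellosvc) instead of the full name, which is what the loadgen manifest in this guide does.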

Kubernetes Manifests

When you ran the application from source code, you used an imperative command:

python3 server.py

Imperative implies a verb: "do this."

Kubernetes uses a declarative model. This means that you do not tell Kubernetes exactly what to do. Instead, you describe the desired state, and Kubernetes starts and stops pods as necessary so that the actual state of the system matches the desired state.
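The idea behind the declarative model can be sketched as a comparison between desired and actual state. The toy function below is not how Kubernetes controllers are implemented; it only shows, under simplified assumptions, how a desired replica count and a list of running pods lead to a set of actions. The function name reconcile and the pod names are hypothetical.

```python
# Sketch only: a toy reconciliation step, not the Kubernetes controller logic.
from typing import List


def reconcile(desired: int, running: List[str]) -> List[str]:
    """Return the actions that move the actual state toward the desired state."""
    missing = desired - len(running)
    if missing > 0:
        # Too few pods: start as many as are missing.
        return ["start"] * missing
    # Enough or too many pods: stop everything beyond the first `desired`.
    return [f"stop {name}" for name in running[desired:]]


print(reconcile(3, ["pod-a"]))                    # two pods are missing
print(reconcile(1, ["pod-a", "pod-b", "pod-c"]))  # two pods are surplus
print(reconcile(1, ["pod-a"]))                    # nothing to do
```

You never call "start" or "stop" yourself in this model. You change the desired number, and the system derives the actions.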

You specify the desired state in manifests, which are YAML files. A YAML file contains the specifications for one or more Kubernetes objects.

The example contains one YAML file for server and one for loadgen. Each YAML file specifies the desired state of a deployment object and a Kubernetes service.

server.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: helloserver
    spec:
      selector:
        matchLabels:
          app: helloserver
      replicas: 1
      template:
        metadata:
          labels:
            app: helloserver
        spec:
          terminationGracePeriodSeconds: 5
          restartPolicy: Always
          containers:
          - name: main
            image: gcr.io/google-samples/istio/helloserver:v0.0.1
            imagePullPolicy: Always

- kind indicates the type of object.

- metadata.name indicates the name of the deployment.

- The first spec field contains a description of the desired state.

- spec.replicas indicates the desired number of pods.

- The spec.template section defines the pod template. The pod specification has an image field, which names the image to pull from Container Registry.
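To see where these fields sit in the structure, the sketch below models the server deployment as a plain Python dictionary, which is roughly what a YAML parser would produce from the manifest, and swaps the image for your own. The helper name with_image and the project ID my-project are hypothetical.

```python
# Sketch only: the manifest as a nested dict, trimmed to the fields discussed above.
import copy

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "helloserver"},
    "spec": {
        "replicas": 1,
        "template": {
            "spec": {
                "containers": [
                    {"name": "main",
                     "image": "gcr.io/google-samples/istio/helloserver:v0.0.1"}
                ]
            }
        },
    },
}


def with_image(manifest: dict, image: str) -> dict:
    """Return a copy of the manifest whose first container uses the given image."""
    updated = copy.deepcopy(manifest)
    updated["spec"]["template"]["spec"]["containers"][0]["image"] = image
    return updated


mine = with_image(deployment, "gcr.io/my-project/preparing-istio/helloserver:v0.0.1")
print(mine["kind"], mine["metadata"]["name"], mine["spec"]["replicas"])
print(mine["spec"]["template"]["spec"]["containers"][0]["image"])
```

The copy leaves the original dictionary untouched, so the sample image reference is still available for comparison.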

The service is defined as follows:

    apiVersion: v1
    kind: Service
    metadata:
      name: hellosvc
    spec:
      type: LoadBalancer
      selector:
        app: helloserver
      ports:
      - name: http
        port: 80
        targetPort: 8080

- LoadBalancer: clients send requests to the IP address of the load balancer, which is stable and reachable from outside the cluster.

- targetPort: as you recall, the EXPOSE 8080 command in the Dockerfile did not actually publish the port. You specify port 8080 here so that the server container can be reached from outside the cluster. In our case, hellosvc.default.svc.cluster.local:80 (short name: hellosvc) maps to port 8080 on the IP address of the helloserver pod.

- port: the port number to which other services in the cluster send requests.
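The two mechanisms in the service definition, label selection and port mapping, can be sketched in a few lines. This is an illustration of the idea rather than Kubernetes code; the function names selects and target_port are hypothetical, and the sketch assumes that a missing targetPort falls back to the value of port.

```python
# Sketch only: models how a service picks pods and maps its port to the container port.
from typing import Dict, List


def selects(selector: Dict[str, str], pod_labels: Dict[str, str]) -> bool:
    """A pod is selected when it carries every label listed in the selector."""
    return bool(selector) and all(pod_labels.get(k) == v for k, v in selector.items())


def target_port(ports: List[dict], port: int) -> int:
    """Return the container port that receives traffic sent to the service port."""
    for entry in ports:
        if entry["port"] == port:
            return entry.get("targetPort", entry["port"])
    raise KeyError(f"service does not listen on port {port}")


hellosvc_selector = {"app": "helloserver"}
hellosvc_ports = [{"name": "http", "port": 80, "targetPort": 8080}]

print(selects(hellosvc_selector, {"app": "helloserver"}))    # server pod
print(selects(hellosvc_selector, {"app": "loadgenerator"}))  # loadgen pod
print(target_port(hellosvc_ports, 80))
```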

loadgen.yaml

The deployment object in loadgen.yaml is similar to the one in server.yaml. The difference is that it contains an env section, which defines the environment variables that loadgen needs and that you set by hand when you ran the application from source code.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: loadgenerator
    spec:
      selector:
        matchLabels:
          app: loadgenerator
      replicas: 1
      template:
        metadata:
          labels:
            app: loadgenerator
        spec:
          terminationGracePeriodSeconds: 5
          restartPolicy: Always
          containers:
          - name: main
            image: gcr.io/google-samples/istio/loadgen:v0.0.1
            imagePullPolicy: Always
            env:
            - name: SERVER_ADDR
              value: "http://hellosvc:80/"
            - name: REQUESTS_PER_SECOND
              value: "10"
            resources:
              requests:
                cpu: 300m
                memory: 256Mi
              limits:
                cpu: 500m
                memory: 512Mi

Since loadgen does not accept incoming requests, ClusterIP is specified for the type field. This type provides a fixed IP address that services in the cluster can use, but this IP address is not provided to external clients.

    apiVersion: v1
    kind: Service
    metadata:
      name: loadgensvc
    spec:
      type: ClusterIP
      selector:
        app: loadgenerator
      ports:
      - name: http
        port: 80
        targetPort: 8080

Deploying containers to GKE

1) Go to the directory where the server example is located:

cd YOUR_WORKING_DIRECTORY/istio-samples/sample-apps/helloserver/server/

2) Open server.yaml in a text editor.

3) Replace the name in the image field with the name of your Docker image.

image: gcr.io/PROJECT_ID/preparing-istio/helloserver:v0.0.1

Replace PROJECT_ID with the identifier of your GCP project.

4) Save and close server.yaml.

5) Deploy the YAML file to Kubernetes:

kubectl apply -f server.yaml

After successful completion, the command prints the following output:

    deployment.apps/helloserver created
    service/hellosvc created

6) Go to the directory where loadgen is located:

cd ../loadgen

7) Open loadgen.yaml in a text editor.

8) Replace the name in the image field with the name of your Docker image.

image: gcr.io/PROJECT_ID/preparing-istio/loadgen:v0.0.1

Replace PROJECT_ID with the identifier of your GCP project.

9) Save and close loadgen.yaml, then close the text editor.

10) Deploy the YAML file to Kubernetes:

kubectl apply -f loadgen.yaml

After successful completion, the command prints the following output:

    deployment.apps/loadgenerator created
    service/loadgensvc created

11) Check the status of the pods:

kubectl get pods

The command shows the status:

    NAME                             READY   STATUS    RESTARTS   AGE
    helloserver-69b9576d96-mwtcj     1/1     Running   0          58s
    loadgenerator-774dbc46fb-gpbrz   1/1     Running   0          57s

12) Get the application logs from the loadgen pod. Replace POD_ID with the identifier from the previous output.

kubectl logs loadgenerator-POD_ID

13) Get the external IP address of hellosvc:

kubectl get service

The output looks something like this:

    NAME         TYPE           CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
    hellosvc     LoadBalancer   10.81.15.158   192.0.2.1     80:31127/TCP   33m
    kubernetes   ClusterIP      10.81.0.1      <none>        443/TCP        93m
    loadgensvc   ClusterIP      10.81.15.155   <none>        80/TCP         4m52s

14) Send a request to hellosvc. Replace EXTERNAL_IP with the external IP address of hellosvc.

curl http://EXTERNAL_IP
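If you want to pick the external IP address out of the kubectl get service output programmatically, one simple approach is to split the table on whitespace. The sketch below does this for the sample output shown in step 13. The function name external_ip is hypothetical, and the parsing assumes that no column value contains spaces, which holds for this sample.

```python
# Sketch only: whitespace-based parsing of the sample table from step 13.
from typing import Optional

SAMPLE_OUTPUT = """\
NAME         TYPE           CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
hellosvc     LoadBalancer   10.81.15.158   192.0.2.1     80:31127/TCP   33m
kubernetes   ClusterIP      10.81.0.1      <none>        443/TCP        93m
loadgensvc   ClusterIP      10.81.15.155   <none>        80/TCP         4m52s
"""


def external_ip(kubectl_output: str, service: str) -> Optional[str]:
    """Return the EXTERNAL-IP of the named service, or None if it has none yet."""
    lines = [line for line in kubectl_output.splitlines() if line.strip()]
    column = lines[0].split().index("EXTERNAL-IP")
    for line in lines[1:]:
        fields = line.split()
        if fields[0] == service:
            value = fields[column]
            # Services without an external address show a placeholder instead.
            return None if value in ("<none>", "<pending>") else value
    return None


print(external_ip(SAMPLE_OUTPUT, "hellosvc"))
print(external_ip(SAMPLE_OUTPUT, "loadgensvc"))
```

A LoadBalancer service can show a placeholder for a short time while the load balancer is being provisioned, which is why the function returns None in that case instead of a string.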

Getting ready for Istio

You now have an application deployed to GKE. loadgen can use Kubernetes DNS (hellosvc:80) to send requests to server, and you can send requests to server at its external IP address. Although Kubernetes has many features, some information about the services is still missing:

- How do the services interact? What are the relationships between them? How does traffic flow between them? You know that loadgen sends requests to server, but imagine that you know nothing about the application. To answer these questions, you would have to look at the list of running pods in GKE.

- Metrics. How long does server take to respond to an incoming request? How many requests per second does server receive? Does it return errors?

- Security information. Is the traffic between loadgen and server plain HTTP, or is it protected with mTLS?

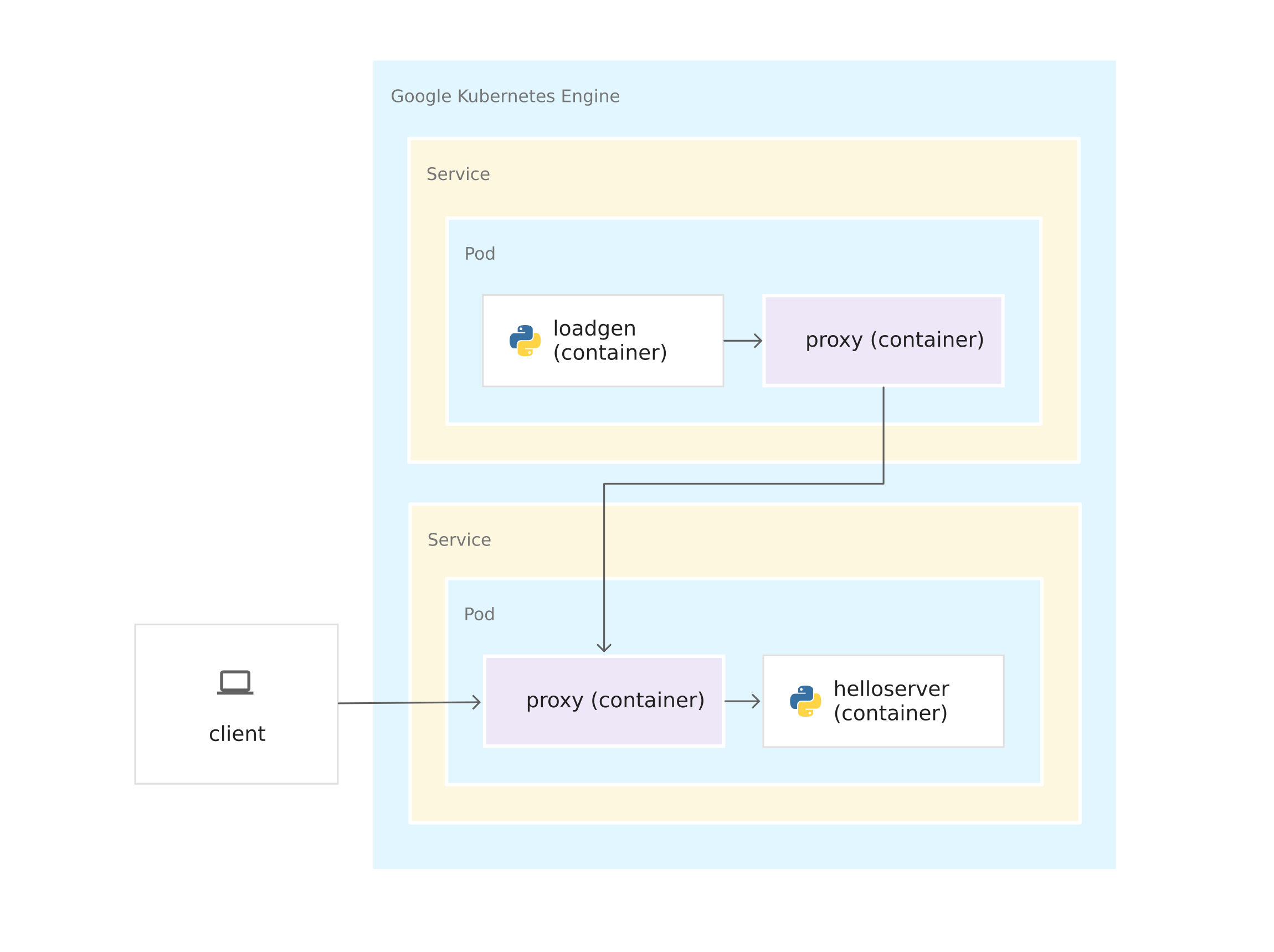

Istio answers all of these questions. To do so, Istio places an Envoy sidecar proxy in each pod. The Envoy proxy intercepts all incoming and outgoing traffic of the application containers. This means that server and loadgen each get an Envoy sidecar proxy, and all traffic from loadgen to server goes through the Envoy proxies.
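The following toy example illustrates why intercepting traffic is enough to answer the metrics questions above without touching application code. It is a conceptual sketch only and has nothing to do with how Envoy is implemented. The class ToySidecar and the function hello_app are hypothetical.

```python
# Sketch only: a wrapper that observes requests, standing in for the sidecar idea.
import time
from typing import Callable


def hello_app(path: str) -> int:
    """A stand-in application: returns an HTTP-like status code for a path."""
    return 200 if path == "/" else 500


class ToySidecar:
    """Forwards each request to the app and records simple metrics on the way."""

    def __init__(self, app: Callable[[str], int]):
        self.app = app
        self.requests = 0
        self.errors = 0
        self.total_seconds = 0.0

    def handle(self, path: str) -> int:
        started = time.perf_counter()
        status = self.app(path)  # the application itself is unchanged
        self.total_seconds += time.perf_counter() - started
        self.requests += 1
        if status >= 500:
            self.errors += 1
        return status


proxy = ToySidecar(hello_app)
proxy.handle("/")
proxy.handle("/boom")
print(proxy.requests, proxy.errors)
```

The application function knows nothing about the counters. All observation happens in the wrapper, which is the property that lets a sidecar collect request counts, error counts, and latency for any service.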

The connections between Envoy proxies form a service mesh. The service mesh architecture provides a layer of control on top of Kubernetes.

Because Envoy proxies run in their own containers, you can install Istio on top of a GKE cluster with almost no changes to the application code. However, you have already done some work to prepare the application for management with Istio:

- Services for all containers. The server and loadgen deployments each have a Kubernetes service attached. Even loadgen, which does not receive incoming requests, has a service.

- Ports in services must be named. Although GKE allows unnamed service ports, Istio requires that you name each port according to its protocol. In the YAML file, the port for server is named http because server uses the HTTP protocol. If the service used gRPC, you would name the port grpc.

- Deployments are labeled. This lets you use Istio's traffic management features, such as splitting traffic between versions of the same service.
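The port naming requirement is easy to check mechanically. The sketch below validates the port names of a service definition, assuming Istio's naming convention of a protocol name optionally followed by a hyphen and a suffix. Only the two protocols mentioned in this guide are listed; Istio recognizes more, so treat KNOWN_PROTOCOLS as an incomplete, illustrative set. The function names are hypothetical.

```python
# Sketch only: checks port names against the <protocol>[-<suffix>] convention.
from typing import List, Optional

# Incomplete on purpose: only the protocols named in this guide.
KNOWN_PROTOCOLS = {"http", "grpc"}


def port_name_ok(name: Optional[str]) -> bool:
    """True when the name starts with a known protocol, e.g. "http" or "http-web"."""
    if not name:
        return False
    protocol = name.split("-", 1)[0]
    return protocol in KNOWN_PROTOCOLS


def unnamed_or_bad_ports(ports: List[dict]) -> List[int]:
    """Return the service ports whose names are missing or do not follow the convention."""
    return [entry["port"] for entry in ports if not port_name_ok(entry.get("name"))]


print(unnamed_or_bad_ports([{"name": "http", "port": 80, "targetPort": 8080}]))
print(unnamed_or_bad_ports([{"port": 80, "targetPort": 8080}]))
```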

Install Istio

There are two ways to install Istio. You can enable the Istio on GKE add-on or install the open source version of Istio on the cluster. With Istio on GKE, you can manage the installation and upgrade of Istio as part of the GKE cluster life cycle. If you need the latest version of Istio or more control over the configuration of the Istio control plane, install the open source version instead of the Istio on GKE add-on. To decide on an approach, read the article Do I Need Istio on GKE?.

Select an option, study the corresponding guide, and follow its instructions to install Istio on the cluster. If you want to use Istio with your newly deployed application, enable sidecar injection for the default namespace.

Cleaning up

To avoid charges to your Google Cloud Platform account for the resources used in this guide, delete the container cluster once you have installed Istio and finished experimenting with the sample application. This deletes all cluster resources, such as compute instances, disks, and network resources.

What's next?

Learn the following technologies:

Learn the following tools:

Learn the concepts of Kubernetes:
