The path of artificial intelligence from a fantastic idea to a scientific field

The idea of artificial intelligence has long fascinated humanity. Automatons received a lot of attention in the myths of ancient Greece; the most famous example is the artificial woman Pandora, created on the orders of Zeus. Mystical golems appear in Jewish folklore, and remarkable karakuri dolls occupy an important place in Japanese tradition.

In the 17th century, philosophers pondered the possibility of “putting a mind” into inanimate objects, and many theories were put forward. René Descartes, for example, believed in the dualism of mind and body, a view that ruled out the mechanization of intelligence.

Leibniz took a different view. He believed that all human thought could be represented mathematically using elementary symbols. To this end, he proposed the characteristica universalis, a symbolic language of concepts for describing knowledge.

Artificial intelligence and automatons have long featured in fiction as well. Examples include Mary Shelley's "Frankenstein; or, The Modern Prometheus" and Karel Čapek's play "R.U.R. (Rossum's Universal Robots)." It was the latter that introduced the word "robot" to literature in 1921.

These were the philosophical prerequisites for the emergence of AI. Now let's talk about the specific scientists and works that turned artificial intelligence from fiction into reality. The concrete, factual part of the story began fairly recently. The famous Turing test was proposed in 1950, but it was only six years later, in 1956, that AI began to take shape as a separate discipline. Let's see how it happened.

First steps

The number of scientific papers related to artificial intelligence rose sharply in the 1950s and 60s, but earlier work had already touched on the topic. George Boole set out his laws of thought in 1854, and Bertrand Russell and Alfred North Whitehead published Principia Mathematica between 1910 and 1913. This work laid the foundations of mathematical logic.

Perhaps it all began when an unknown 15-year-old boy burst into the office of Rudolf Carnap. Carnap was by then an influential philosopher teaching at the University of Chicago, and he had published The Logical Syntax of Language. The boy came in without permission and pointed out errors in the work. Carnap was shocked. The visitor was unusual: he did not even introduce himself, and he left right away. After months of searching, Carnap finally found his visitor at a local university. It turned out to be Walter Harry Pitts.

Three years before that (yes, at 12), Walter had written a letter to Bertrand Russell, pointing out problems he had found in the aforementioned Principia Mathematica. Russell was so impressed that he invited the boy to graduate school at Cambridge University in the UK. Although he was growing up in a dysfunctional family, Walter did not dare to leave Detroit. However, when Russell came to Chicago to lecture, Pitts ran away from home to study with him. He never enrolled as a student at the University of Chicago, but he diligently attended lectures. (The life of Walter Pitts was short but remarkably vivid and interesting. I recommend reading about it on your own. Translator's note.)

In 1942, Walter Pitts met Warren McCulloch, who invited him to stay at his house. Both were convinced that Leibniz had been right about the possibility of "mechanizing" human thought, and they set out to build a model of how the human nervous system works. In 1943 they published their main paper on the subject, "A Logical Calculus of the Ideas Immanent in Nervous Activity." It made an invaluable contribution to the field of artificial intelligence. In it the authors proposed a simple model now known as the McCulloch-Pitts neuron, which is still taught in machine learning courses. Their ideas form the foundation of much of modern AI.

Warren McCulloch and Walter Pitts
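
To make the idea of a mathematical neuron more tangible, here is a minimal sketch in Python. It assumes the common textbook formulation of the McCulloch-Pitts unit (binary inputs, a fixed threshold, and inhibitory inputs that veto firing); the function names are illustrative and do not come from the 1943 paper.

```python
def mcculloch_pitts_neuron(inputs, threshold, inhibitory=()):
    """Return 1 if the unit fires, otherwise 0.

    inputs     -- 0/1 excitatory signals
    threshold  -- number of active excitatory inputs needed to fire
    inhibitory -- 0/1 signals; any active one blocks firing entirely
    """
    if any(inhibitory):
        return 0
    return 1 if sum(inputs) >= threshold else 0


# Basic logic gates built from single units (illustrative helpers).
def AND(a, b):
    return mcculloch_pitts_neuron([a, b], threshold=2)


def OR(a, b):
    return mcculloch_pitts_neuron([a, b], threshold=1)


def NOT(a):
    # A constant excitatory input that the signal `a` can inhibit.
    return mcculloch_pitts_neuron([1], threshold=1, inhibitory=[a])


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", AND(a, b), "OR:", OR(a, b))
```

Because such units can express AND, OR and NOT, networks of them can in principle compute any Boolean function, which is what made the model so appealing as a bridge between logic and neurobiology.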

Norbert Wiener's Cybernetics and Claude Shannon's information theory were both published in 1948. Cybernetics is the study of "control and communication in the animal and the machine." Information theory deals with measuring the amount of information and with storing and transmitting it. Both works had a big impact on AI.

Cybernetics offered a way to study biological and mechanical intelligence side by side, while information theory shaped the field's mathematical foundations.
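
As an illustration of what "measuring the amount of information" means, the sketch below computes Shannon entropy, the average number of bits per symbol in a message. It assumes symbol probabilities are estimated from the message itself; the function name is illustrative.

```python
import math
from collections import Counter


def shannon_entropy(message):
    """Average information per symbol, in bits, for the given sequence."""
    counts = Counter(message)
    total = len(message)
    # H = sum over symbols of p * log2(1 / p)
    return sum((n / total) * math.log2(total / n) for n in counts.values())


print(shannon_entropy("AAAA"))  # one symbol only: nothing to learn
print(shannon_entropy("ABAB"))  # two equally likely symbols
print(shannon_entropy("ABCD"))  # four equally likely symbols
```

The more unpredictable the next symbol is, the higher the entropy, and the more bits are needed on average to store or transmit the message.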

A couple of years later, in 1950, Alan Turing proposed the Turing test, a method for determining whether a machine can be considered intelligent. In simplified form, the test works as follows: a human judge converses with one computer and one person. Based on their answers to questions, the judge must determine which is the person and which is the computer program. The program's task is to mislead the judge into making the wrong choice.

Although the Turing test is too limited for evaluating modern intelligent systems, it was a real breakthrough at the time. Alan Turing's name made it into the press, which brought the field more attention.

Turing test

In 1956, a conference was held at Dartmouth College to study the idea of "mechanizing" intelligence. Its participants would later become outstanding figures in the field of AI. The best known was Marvin Minsky, who in 1951 had built the first neural network machine, SNARC. In the coming decades he would become the most famous name in the world of artificial intelligence.

Claude Shannon also attended the conference. Future Nobel laureate Herbert A. Simon and Allen Newell made their debut there with their "Logic Theorist" program, which would go on to prove 38 of the first 52 theorems in Whitehead and Russell's Principia Mathematica.

John McCarthy - also one of the pioneers of artificial intelligence - coined the name "Artificial Intelligence." Participants agreed with this term. This was the birth of AI.

Industry Development (1956–1974)

Semantic network

After the conference, interest in AI grew and many interesting developments appeared. In 1959, Newell and Simon created the “General Problem Solver,” which in theory could solve any formalized problem. James Slagle created SAINT (Symbolic Automatic INTegrator), a heuristic program that solved symbolic integration problems from calculus. These programs were impressive.

Once the Turing test had been proposed, natural language became an important area of AI. Daniel Bobrow’s STUDENT program could solve high-school algebra word problems. A little later, the concept of the semantic network appeared: a map of various concepts and the relations between them (as in the figure). Several successful programs were built on such networks. In 1966, Joseph Weizenbaum created ELIZA, a virtual conversational partner that could hold realistic conversations with people.
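
A semantic network of the kind mentioned above can be sketched as a small labeled graph. This is only an illustration under simple assumptions: the class name, the relation labels, and the rule that properties are inherited along "is_a" links are choices made for this example, not a description of any particular historical system.

```python
from collections import defaultdict


class SemanticNetwork:
    """Concepts connected by labeled relations."""

    def __init__(self):
        # (subject, relation) -> set of related objects
        self.edges = defaultdict(set)

    def add(self, subject, relation, obj):
        self.edges[(subject, relation)].add(obj)

    def query(self, subject, relation):
        """Objects related to `subject`, including those inherited via 'is_a'."""
        found, seen, stack = set(), set(), [subject]
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            found |= self.edges.get((node, relation), set())
            stack.extend(self.edges.get((node, "is_a"), set()))
        return found


net = SemanticNetwork()
net.add("canary", "is_a", "bird")
net.add("bird", "is_a", "animal")
net.add("bird", "can", "fly")
net.add("canary", "can", "sing")
net.add("animal", "has", "skin")

print(net.query("canary", "can"))   # own abilities plus those of a bird
print(net.query("canary", "has"))   # inherited from "animal"
```

The appeal of this structure is that a fact stored once at a general concept is automatically available to everything beneath it.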

With the field developing so actively, scientists made extremely bold predictions:

- Newell and Simon, 1958: “Within ten years a digital computer will be the world's chess champion,” and “within ten years a digital computer will discover and prove an important new mathematical theorem.”

- H. A. Simon, 1965: “Machines will be capable, within twenty years, of doing any work a man can do.”

- Marvin Minsky, 1967: “Within a generation ... the problem of creating ‘artificial intelligence’ will substantially be solved.”

- Marvin Minsky, 1970: “In from three to eight years we will have a machine with the general intelligence of an average human being.”

One way or another, money flowed into the field. The Advanced Research Projects Agency (ARPA, later renamed DARPA) allocated $2.2 million to a team at MIT. The agency's director at the time decided that it should “fund people, not projects,” and fostered a free research culture. This allowed researchers to pursue whatever projects they considered worthwhile.

Temporary Cooling (1974-1980)

In 1969, Marvin Minsky and Seymour Papert published their book Perceptrons. In it, they demonstrated fundamental limitations of perceptrons, highlighting the inability of a single-layer perceptron to compute the elementary XOR function. This shifted the interest of artificial intelligence researchers toward symbolic computation, the opposite approach to neural networks.
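
The XOR limitation is easy to see in a small experiment. The sketch below is an illustration, not a proof: it tries every combination of two weights and a bias from a coarse grid (an arbitrary choice for this example) and checks whether a single threshold unit reproduces a given truth table. AND and OR are found immediately; XOR is not, because no straight line separates its two classes.

```python
from itertools import product


def perceptron(w1, w2, bias, x1, x2):
    """Single threshold unit: fires when the weighted sum is positive."""
    return 1 if w1 * x1 + w2 * x2 + bias > 0 else 0


def representable(truth_table, grid):
    """True if some (w1, w2, bias) from the grid matches the whole table."""
    for w1, w2, bias in product(grid, repeat=3):
        if all(perceptron(w1, w2, bias, x1, x2) == y
               for (x1, x2), y in truth_table.items()):
            return True
    return False


# Candidate values from -4.0 to 4.0 in steps of 0.5 (assumed for the demo).
GRID = [i / 2 for i in range(-8, 9)]

AND_TABLE = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
OR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 1}
XOR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

for name, table in (("AND", AND_TABLE), ("OR", OR_TABLE), ("XOR", XOR_TABLE)):
    print(name, representable(table, GRID))
```

The grid search only shows that nothing on the grid works; the general result follows from the inequalities themselves. XOR would require bias ≤ 0, w1 + bias > 0, w2 + bias > 0 and w1 + w2 + bias ≤ 0 at the same time, and adding the two middle conditions contradicts the last one.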

The alternative approach, symbolic AI, grew explosively, but it did not deliver the significant results that had been promised. In the 1970s, it became clear that AI researchers had been overly optimistic. The goals they had promised were still unmet, and reaching them seemed to be a matter of the very distant future.

Researchers realized that they had hit a wall. AI worked on simple tasks, but real-world scenarios were too complex for these systems. The number of possibilities the algorithms had to explore turned out to be astronomical, a problem known as combinatorial explosion. On top of that came the classic question of how to give a computer common sense: the commonsense knowledge problem.
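
A little arithmetic shows why the number of possibilities becomes astronomical. The sketch below assumes a search in which every step offers the same number of choices; the figure of about 35 legal moves per chess position is a rough, commonly cited average used here purely for illustration.

```python
def search_tree_size(branching_factor, depth):
    """Number of distinct move sequences of length `depth` when every
    step offers `branching_factor` choices."""
    return branching_factor ** depth


# Assumption: roughly 35 legal moves per chess position on average.
CHESS_BRANCHING = 35

for depth in (2, 4, 8, 16):
    print(f"{depth:2d} moves ahead: {search_tree_size(CHESS_BRANCHING, depth):,} sequences")
```

Each extra step multiplies the work by the branching factor, so exhaustive search stops being feasible after only a handful of steps, no matter how fast the hardware is.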

All this left investors disappointed in the technology. Sponsors froze funding for artificial intelligence research, and work in the field slowed dramatically. DARPA, too, could no longer support its free-wheeling research culture because of changes in legislation. This is why the period from 1974 to 1980 is called the "AI winter."

Rebirth

In 1981, the Japanese government began to invest seriously in artificial intelligence research. The country allocated $850 million to its fifth-generation computer project, which was aimed at developing AI. The planned computers were supposed to hold conversations, translate languages, and recognize pictures. They were expected to become the basis for devices that could simulate thinking.

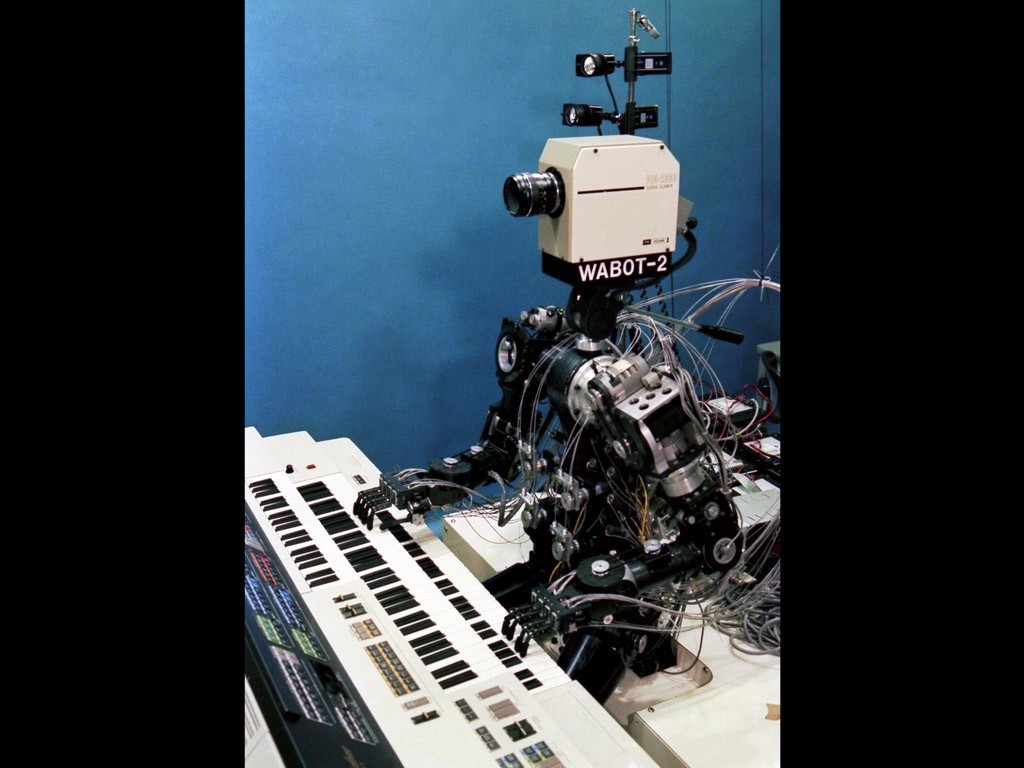

Wabot-2

Research in Japan seemed promising. Work on the Wabot-2 robot began at Waseda University in 1980, and the robot was completed in 1984. It could communicate with people, read musical scores and play the electronic organ. The success of the Japanese prompted other governments and private businesses to turn their attention to AI again.

At this point, connectionism returned to the agenda. In 1982, John Hopfield introduced a new form of neural network capable of learning and processing information. Geoffrey Hinton and David Rumelhart popularized backpropagation, a training method that was a breakthrough at the time and remains vital in machine learning to this day.

AI also achieved commercial success in the form of "expert systems," which held deep knowledge of one particular domain. In 1980, Carnegie Mellon University (CMU) completed an expert system called XCON for Digital Equipment Corporation. By 1986, it was saving the company $40 million annually.

Second winter

PC revolution has changed the course of AI development

In the late 1980s, the success of the AI industry was overshadowed by the computer revolution. Apple and IBM kept producing more and more powerful computers. Desktop machines became cheaper and more powerful than the specialized Lisp machines used for AI. An entire industry worth half a billion dollars collapsed almost overnight. Even successful expert systems such as XCON proved too expensive to maintain.

Expert systems had problems of their own. They could not learn, and they were “brittle” (that is, they could make serious mistakes when given unusual input). Their practical scope turned out to be limited. The new DARPA leadership decided that AI was not the “next wave” and redirected investment to projects that, in its view, were more likely to bring immediate results.

By 1991, the goals of Japan's fifth-generation computer project had still not been achieved. The scientists had underestimated the difficulties they would face. More than 300 AI companies had closed or gone bankrupt by the end of 1993. This was effectively the end of the first wave of commercial use of artificial intelligence.

Fresh stream

When the echoes of the computer revolution began to subside, people gained access to large amounts of computing power. As computers became ubiquitous, the number of diverse databases grew. All of this was very good news for AI development.

New technologies solved problems and removed barriers that interfered with scientists. Using increasing computing power, researchers pushed the boundaries of the possible. From the databases that became Big Data, it was possible to extract more and more knowledge. The possibilities for the practical use of AI have become more apparent.

A new concept called the “intelligent agent” became established in the 1990s. An intelligent agent (IA) is a system that independently carries out a task set by the user over long periods of time. There was hope that one day IAs could be taught to interact with one another, which would lead to more universal and “smarter” systems.

Within the AI community, opinions differed on the use of mathematics in artificial intelligence. Some thought that intelligence was too complex to be described with mathematical symbols; in their view, people are rarely guided by logic when making decisions. Their opponents countered that logical chains were the way forward.

G. Kasparov plays with Deep Blue

Soon afterward, in 1997, the IBM Deep Blue supercomputer defeated Garry Kasparov, who was the reigning world chess champion at the time. What Newell and Simon had expected to happen by 1968 finally happened in 1997.

Modern chess computers are much stronger than any human. The highest Elo rating ever achieved by a human is 2,882. Chess engines are commonly rated around 3,000 Elo, and the highest rating ever recorded was over 3,350.
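
To get a feel for what such a rating gap means, here is a small sketch using the standard Elo expected-score formula. It treats the 2,882 and 3,350 figures from the text as if they were on the same scale, which is an assumption: human and engine rating lists are maintained separately and are not strictly comparable.

```python
def expected_score(rating_a, rating_b):
    """Expected score of player A against player B under the Elo model
    (1 = win, 0.5 = draw, 0 = loss, averaged over many games)."""
    return 1 / (1 + 10 ** ((rating_b - rating_a) / 400))


human_peak = 2882    # figure quoted in the text
engine_peak = 3350   # figure quoted in the text

print(f"Expected score for the human: {expected_score(human_peak, engine_peak):.3f}")
```

Under these assumptions the human's expected score is only a few hundredths of a point per game, meaning the occasional draw and almost no wins.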

In 2005, a team at Stanford built a robot for autonomous driving. It won the DARPA Grand Challenge by driving 131 miles (211 km) along a desert trail it had never seen before.

Jeopardy

In February 2011, IBM put its Watson system to the test on the quiz show Jeopardy! The computer defeated the show's two greatest champions by a significant margin.

With the explosive growth of the Internet and social networks, the amount of information grew as well. IT companies needed to do something with the data they were collecting, and using AI became a necessity rather than entertainment.

Today Google ranks search results using machine learning. YouTube selects recommended videos using ML algorithms, and Amazon recommends products the same way. The Facebook news feed is generated algorithmically, and even Tinder matches people using ML algorithms.

Thanks to the computer revolution, AI technology has become our indispensable tool. And now humanity is looking forward, waiting for the creation of super machines. And although the effects of artificial intelligence are sometimes controversial, further development of this technology is inevitable.
