Megapacket: how the Factorio developers solved the 200-player multiplayer problem

In May of this year, I took part as a player in the KatherineOfSky MMO event. I noticed that once the number of players reached a certain level, some of them would drop out every few minutes. Fortunately for you (but not for me), I was one of the players who got disconnected every time, even with a good connection. I took this as a personal challenge and started looking for the cause. After three weeks of debugging, testing and fixing, the bug was finally fixed, but the journey was not a simple one.

Multiplayer problems are very hard to track down. They usually occur only under very specific network conditions and in very specific game states (in this case, with more than 200 players). Even when the problem can be reproduced, it cannot be debugged properly, because hitting a breakpoint stops the game, throws off the timers and usually causes the connection to be dropped due to a timeout. But thanks to stubbornness and a wonderful tool called clumsy, I managed to figure out what was going on.

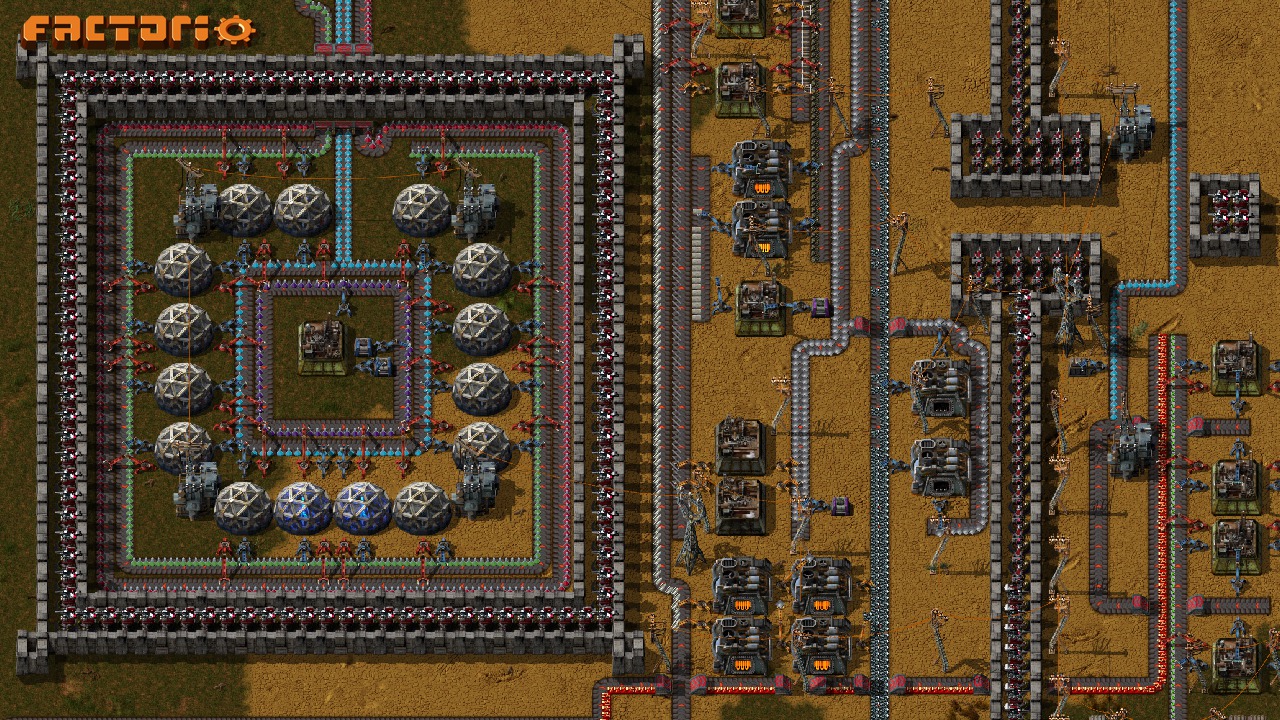

In short: because of a bug and an incomplete implementation of latency state simulation, a client sometimes ends up in a situation where it has to send, in a single tick, a network packet consisting of the player’s input actions selecting about 400 game entities (we call it a “megapacket”). The server must then not only receive all these input actions correctly, but also send them to all the other clients. With 200 clients, this quickly becomes a problem. The link to the server becomes saturated, which leads to packet loss and a cascade of re-requested packets. The postponed input actions then cause even more clients to start sending megapackets, and the avalanche grows. The lucky clients manage to recover; all the rest drop out.

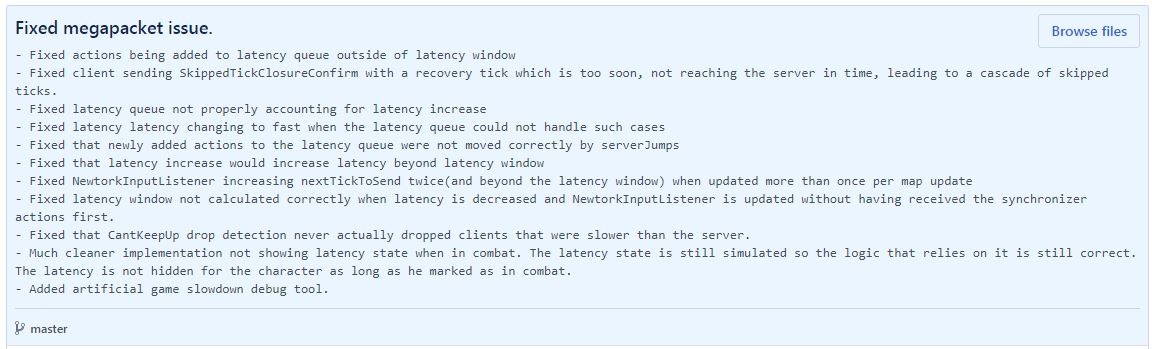

The problem was quite fundamental, and it took me 2 weeks to fix it. It is quite technical, so below I explain the juicy details. But first you should know that since version 0.17.54, released on June 4, multiplayer has become more stable in the face of temporary connection problems, and latency hiding is much less glitchy (less rubber-banding and teleporting). In addition, I changed how latency is hidden in combat, and I hope this makes it a little smoother.

Multiplayer Megapacket - Technical Details

Put simply, multiplayer in the game works as follows: all clients simulate the game state, receiving and sending only player input (called Input Actions). The server’s main job is to relay Input Actions and make sure that all clients execute the same actions in the same tick. You can read more about this in FFF-149.

Since the server has to decide which actions get executed, a player’s action travels roughly like this: player action -> game client -> network -> server -> network -> game client. This means every player action is executed only after it has made a round trip through the network. This would make the game feel terribly laggy, so latency hiding was introduced almost as soon as multiplayer was added to the game. Latency hiding simulates the player’s input without taking into account the actions of other players or the server’s decisions.

Factorio has the Game State: the complete state of the map, players, entities and everything else. It is simulated deterministically on all clients based on the actions received from the server. The Game State is sacred, and if it ever starts to differ from the server’s or any other client’s, a desync occurs.

In addition to the Game State, we have the Latency State. It contains a small subset of the main state. The Latency State is not sacred; it simply represents what the game state will look like in the future, based on the Input Actions the player has performed.

To do this, we keep a copy of the generated Input Actions in a latency queue.

So, at the end of the process, the picture on the client side looks something like this:

- Apply the Input Actions of all players to the Game State, in the form in which they were received from the server.

- Remove from the latency queue all Input Actions that, according to the server, have already been applied to the Game State.

- Discard the Latency State and reset it so that it looks exactly like the Game State.

- Apply all actions from the latency queue to the Latency State.

- Render the game to the player based on the data from the Game State and the Latency State.

All of this is repeated every tick.
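The per-tick loop above can be sketched roughly as follows. This is a toy model, not the game's code: the `InputAction` and `Client` classes, the `seq` field and the single integer standing in for the whole state are all assumptions made for illustration, and other players' actions are left out for brevity.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class InputAction:
    seq: int  # hypothetical per-client sequence number
    dx: int   # toy payload: move the player by dx tiles


@dataclass
class Client:
    game_state: int = 0     # toy Game State: the player's x position
    latency_state: int = 0  # toy Latency State: the predicted x position
    latency_queue: deque = field(default_factory=deque)

    def local_input(self, action):
        # Keep a copy of every locally created action until the server confirms it.
        self.latency_queue.append(action)

    def tick(self, confirmed):
        # 1. Apply the actions received from the server to the Game State.
        for action in confirmed:
            self.game_state += action.dx
        # 2. Remove confirmed actions from the latency queue.
        done = {action.seq for action in confirmed}
        self.latency_queue = deque(
            a for a in self.latency_queue if a.seq not in done
        )
        # 3. Reset the Latency State to match the Game State.
        self.latency_state = self.game_state
        # 4. Replay the still-unconfirmed actions on top of it.
        for action in self.latency_queue:
            self.latency_state += action.dx
        # 5. Both states would now be handed to the renderer.
        return self.game_state, self.latency_state


client = Client()
client.local_input(InputAction(seq=1, dx=1))
client.local_input(InputAction(seq=2, dx=1))
before_confirmation = client.tick([])
after_first_confirmation = client.tick([InputAction(seq=1, dx=1)])
```

In this sketch the Latency State runs ahead of the Game State by exactly the actions still waiting in the queue, which is why the queue has to be kept correct.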

Too complicated? Don’t relax yet, that is not all. To compensate for the unreliability of internet connections, we created two mechanisms:

- Skipped ticks: when the server decides which Input Actions will be executed in a given game tick, if it has not received a player’s Input Actions (for example, because of increased latency), it does not wait but tells that client “I did not include your Input Actions, I will try to add them in the next tick.” This is done so that one player’s connection (or computer) problems do not slow down the map update for everyone else. Note that the Input Actions are not ignored, only postponed.

- Round-trip latency: the server tries to estimate the round-trip latency between itself and each client. Every 5 seconds it negotiates a new latency with the client if necessary (depending on how the connection has behaved in the past), and increases or decreases the round-trip latency accordingly.

On their own, these mechanisms are fairly simple, but when they are used together (which happens often during connection problems), the code logic becomes hard to manage and full of edge cases. In addition, when these mechanisms kick in, the server and the latency queue must correctly inject a special Input Action called StopMovementInTheNextTick. Thanks to it, the character will not keep running on its own (for example, under a train) during connection problems.
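The skipped-tick mechanism might be pictured like this. It is a minimal sketch under assumptions: the function name `close_tick`, the `arrived` dictionary and the string action names are hypothetical, and the real server also has to deal with latency negotiation and StopMovementInTheNextTick, which are left out here.

```python
def close_tick(clients, arrived):
    """Decide what runs in the current tick without waiting for late clients.

    clients: names of all connected clients
    arrived: dict mapping a client name to the list of actions that reached
             the server in time for this tick (an empty list means the client
             reported in but did nothing)
    Returns (executed, skipped): the actions to relay to everyone, and the
    clients that are told "not included, will try the next tick".
    """
    executed = []
    skipped = []
    for client in clients:
        actions = arrived.pop(client, None)
        if actions is None:
            # Nothing arrived in time: do not stall the tick for everyone else.
            skipped.append(client)
        else:
            executed.extend((client, action) for action in actions)
    return executed, skipped


clients = ["a", "b"]
# Tick 1: b's packet is late, so only a's action is executed.
tick1 = close_tick(clients, {"a": ["walk"]})
# Tick 2: b's postponed actions have arrived and are executed now, in order.
tick2 = close_tick(clients, {"a": [], "b": ["mine", "build"]})
```

The point of the sketch is that a late client's actions are postponed rather than dropped, so the tick in which an action actually runs can differ from the tick the client expected.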

Now I need to explain how entity selection works. One of the Input Action types that gets transmitted is a change in entity selection state. It tells everyone which entity the player is hovering over with the mouse. As you can imagine, this is one of the most frequent input actions sent by clients, so to save bandwidth we optimized it to take up as little space as possible. It works like this: for each entity selection, instead of storing absolute, high-precision map coordinates, the game stores a low-precision offset relative to the previous selection. This works well because a mouse selection is usually very close to the previous one. This leads to two important requirements: Input Actions must never be skipped, and they must be executed in the correct order. These requirements are met for the Game State. But since the job of the Latency State is only to “look good enough” for the player, they are not met there. The Latency State does not account for many edge cases related to skipped ticks and changing round-trip latency.
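To see why relative offsets impose those two requirements, here is a small illustrative sketch. The function names and the integer tile coordinates are assumptions, and it does not model the reduced precision of the real encoding; it only shows the dependency of each selection on the previous one.

```python
def encode_selections(positions, start):
    """Turn absolute (x, y) positions into offsets from the previous selection."""
    deltas = []
    prev_x, prev_y = start
    for x, y in positions:
        deltas.append((x - prev_x, y - prev_y))
        prev_x, prev_y = x, y
    return deltas


def decode_selections(deltas, start):
    """Rebuild absolute positions by accumulating the offsets in order."""
    positions = []
    x, y = start
    for dx, dy in deltas:
        x += dx
        y += dy
        positions.append((x, y))
    return positions


start = (1000, 2000)
positions = [(1001, 2000), (1001, 2002), (998, 2002)]
deltas = encode_selections(positions, start)

# Every delta applied in order: the receiver sees the same selections.
in_order = decode_selections(deltas, start)
# One delta skipped: every later selection is shifted.
with_skip = decode_selections(deltas[1:], start)
# Two deltas swapped: the intermediate selection is wrong.
reordered = decode_selections([deltas[1], deltas[0], deltas[2]], start)
```

The offsets are small numbers that fit in far fewer bits than full map coordinates, but a receiver that misses or reorders even one of them ends up selecting the wrong position.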

You can probably guess where this is going. Finally, we start to see the causes of the megapacket problem. The root of the problem is that, when deciding whether to send a selection-change action, the entity selection logic relies on the Latency State, and that state does not always contain correct information. A megapacket is therefore generated like this:

- The player has a connection problem.

- The skipped-tick and round-trip latency adjustment mechanisms kick in.

- The latency queue does not account for these mechanisms. As a result, some actions are removed prematurely or executed in the wrong order, which produces an incorrect Latency State.

- The player’s connection recovers and, to catch up with the server, the client simulates up to 400 ticks.

- In every tick, a new action changing the entity selection is generated and prepared for sending to the server.

- The client sends the server a megapacket with more than 400 entity selection changes (other actions, such as shooting state and walking state, also suffered from this problem).

- The server receives 400 input actions. Since it is not allowed to skip a single input action, it tells all clients to execute these actions and sends them across the network.
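The chain of events above can be illustrated with a toy model. This is only one plausible reading of the bug, not the actual code: the function `catch_up`, the flag `latency_state_tracks_queue` and the action tuples are assumptions, and the fan-out figure simply multiplies the numbers mentioned in the text.

```python
def catch_up(ticks, latency_selection, actual_selection, latency_state_tracks_queue):
    """Return the selection-change actions queued while simulating `ticks` ticks.

    The decision to send is made by comparing against the Latency State.
    If the Latency State never reflects the queued action (the buggy case),
    the comparison fails again in every tick.
    """
    queued = []
    for tick in range(ticks):
        if latency_selection != actual_selection:
            queued.append(("select", tick, actual_selection))
            if latency_state_tracks_queue:
                # Healthy case: the prediction now includes the queued action.
                latency_selection = actual_selection
    return queued


healthy = catch_up(400, None, "assembler", latency_state_tracks_queue=True)
megapacket = catch_up(400, None, "assembler", latency_state_tracks_queue=False)

# Fan-out on the server: every action is relayed to every other client.
clients = 200
relayed_copies = len(megapacket) * (clients - 1)
```

With a correct Latency State a single action is enough; with a stale one the same catch-up produces 400 actions, and the server has to relay each of them to the other 199 clients, and that is for just one affected player.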

The irony is that a mechanism designed to save bandwidth ended up producing huge network packets.

We solved this problem by fixing all the edge cases in updating and maintaining the latency queue. Although it took quite a while, in the end it was worth implementing everything properly rather than relying on quick hacks.
