Cloud security monitoring

Moving data and applications to the cloud poses a new problem for corporate SOCs, which are not always ready to monitor someone else's infrastructure. According to Netskope, a medium-sized enterprise (apparently still in the USA) uses 1,246 different cloud services, which is 22% more than a year ago. 1,246 cloud services!!! Of these, 175 are HR services, 170 are marketing, 110 are communications, and 76 are finance and CRM. Cisco uses a "mere" 700 external cloud services, so I am a little confused by these numbers. In any case, the problem is not the numbers but the fact that a growing number of companies actively use the cloud and want the same monitoring capabilities for cloud infrastructure as they have in their own networks. And this trend is growing: according to the US Government Accountability Office, 1,200 data centers are going to close in the USA by 2023 (6,250 have already closed). But the transition to the cloud is not just "let's move our servers to an external provider". New IT architecture, new software, new processes, new restrictions... All of this significantly changes the work of not only IT but also information security. And while providers have more or less learned to cope with the security of the cloud itself (fortunately, there are plenty of recommendations), there are significant difficulties with cloud information security monitoring, especially on SaaS platforms, and that is what we will talk about.

Let's say your company has moved part of its infrastructure to the cloud... Stop. Not like that. If the infrastructure has already been moved and you are only now thinking about how to monitor it, then you have already lost. Unless it is Amazon, Google or Microsoft (and even then with reservations), you probably will not have many opportunities to monitor your data and applications. You are lucky if you are given access to logs at all. Sometimes security event data exists, but you will not have access to it. Take Office 365, for example. If you have the cheapest E1 license, security events are not available to you at all. If you have an E3 license, your data is stored for only 90 days, and only with E5 are the logs retained for a year (although there are nuances here as well, because a number of logging functions have to be requested separately from Microsoft support). By the way, the E3 license is much weaker in terms of monitoring functions than corporate Exchange. To reach the same level, you need an E5 license or an additional Advanced Compliance license, which may require money that was not included in your financial model for the move to cloud infrastructure. And this is just one example of how issues related to cloud information security monitoring are underestimated. In this article, without claiming to be complete, I want to draw attention to some nuances that should be considered when choosing a cloud provider from a security point of view. At the end of the article there is a checklist that should be completed before you consider the issue of cloud information security monitoring resolved.

There are several typical problems that lead to incidents in cloud environments, which information security (IS) services either fail to respond to in time or do not see at all:

Moving to the cloud is always a search for a balance between the desire to keep control over the infrastructure and handing it over to the more professional hands of a cloud provider that specializes in maintaining it. In cloud security this balance also has to be found. Moreover, the balance will differ depending on the cloud service model in use (IaaS, PaaS, SaaS). In any case, we must remember that today all cloud providers follow the so-called shared responsibility and shared security model. The cloud is responsible for some things, and the client, having placed its data, applications, virtual machines and other resources in the cloud, is responsible for others. It would be foolish to expect that by going to the cloud we hand all responsibility over to the provider. But building all the security yourself when moving to the cloud is also unwise. A balance is needed, and it will depend on many factors: the risk management strategy, the threat model, the protection mechanisms available from the cloud provider, legislation, etc.

For example, classifying the data hosted in the cloud is always the customer's responsibility. A cloud provider or an external service provider can only help with tools that mark data in the cloud, identify violations, delete data that violates the law, or mask it using one method or another. On the other hand, physical security is always the responsibility of the cloud provider, and it cannot be shared with customers. But everything that lies between the data and the physical infrastructure is precisely the subject of this article. For example, cloud availability is the provider's responsibility, while setting firewall rules or enabling encryption is the client's responsibility. In this article, we will try to look at the information security monitoring mechanisms that various popular cloud providers in Russia offer today, the specifics of using them, and when it is worth looking at external overlay solutions (for example, Cisco E-mail Security) that extend the cybersecurity capabilities of your cloud. In some cases, especially if you follow a multi-cloud strategy, you will have no choice but to use external security monitoring solutions across several cloud environments at once (for example, Cisco CloudLock or Cisco Stealthwatch Cloud). And in some cases you will find that the cloud provider you have chosen (or that was imposed on you) offers no options for information security monitoring at all. This is unpleasant, but it is also worth a lot, because it lets you adequately assess the level of risk associated with working with that cloud.

To monitor the security of the clouds you use, you have only three options:

Let's see what features each of these options has. But first, we need to understand the general scheme used in monitoring cloud platforms. I would highlight 6 main components of the information security monitoring process in the cloud:

Understanding these components will allow you to quickly determine what you can get from your provider and what you will have to do yourself or with the help of external consultants.

As I already wrote above, many cloud services today provide no possibility of information security monitoring at all. In general, they do not pay much attention to the topic of information security. Take, for example, one of the popular Russian services for sending reports to government agencies via the Internet (I will deliberately not mention its name). The entire security section of this service revolves around the use of certified cryptographic information protection tools. The information security section of another domestic cloud service, for electronic document management, is far more extensive. It talks about public key certificates, certified cryptography, eliminating web vulnerabilities, protecting against DDoS attacks, using firewalls, backups, and even regular IS audits. But there is not a word about monitoring, or about the possibility of gaining access to information security events that may be of interest to the customers of this service provider.

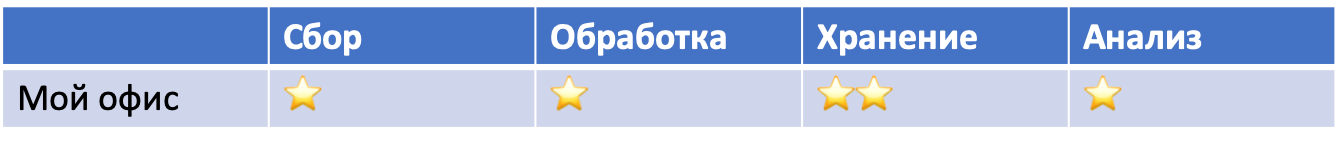

In general, from the way a cloud provider describes IS issues on its website and in its documentation, you can tell how seriously it takes the subject. For example, if you read the manuals for My Office products, there is not a word about security at all, and the documentation for the separate product "My Office.KS3", designed to protect against unauthorized access, is the usual enumeration of the clauses of FSTEC Order No. 17 that "My Office.KS3" fulfills. It does not describe how it fulfills them and, most importantly, how to integrate these mechanisms with corporate information security. Perhaps such documentation exists, but I did not find it in the public domain on the My Office site. Although maybe I just do not have access to this classified information?..

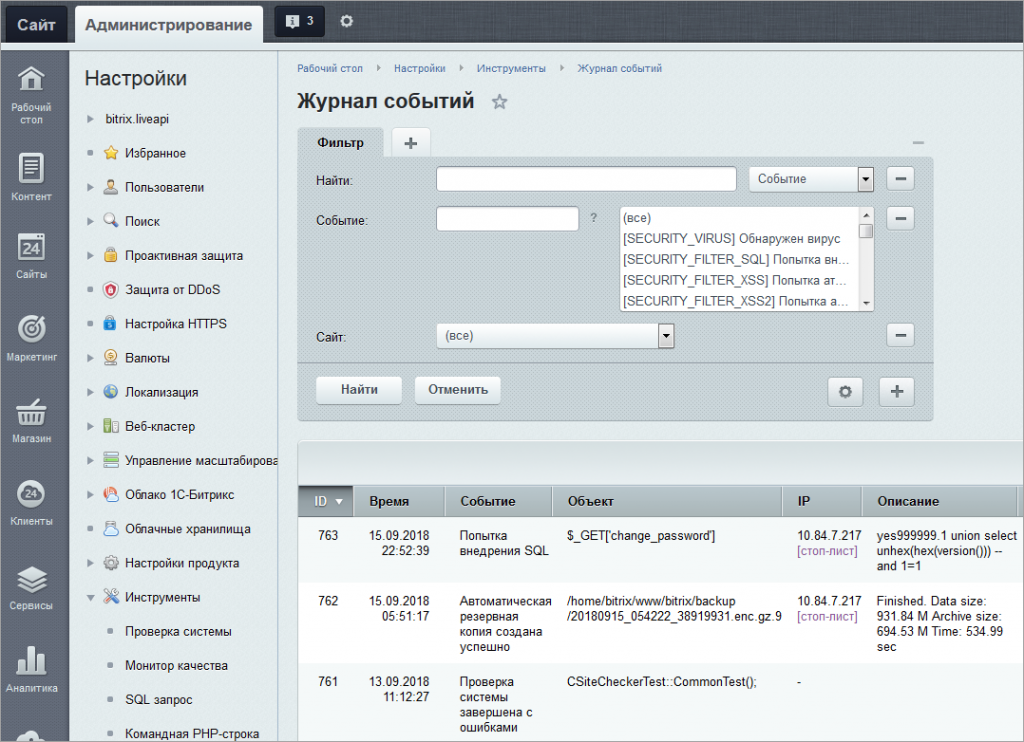

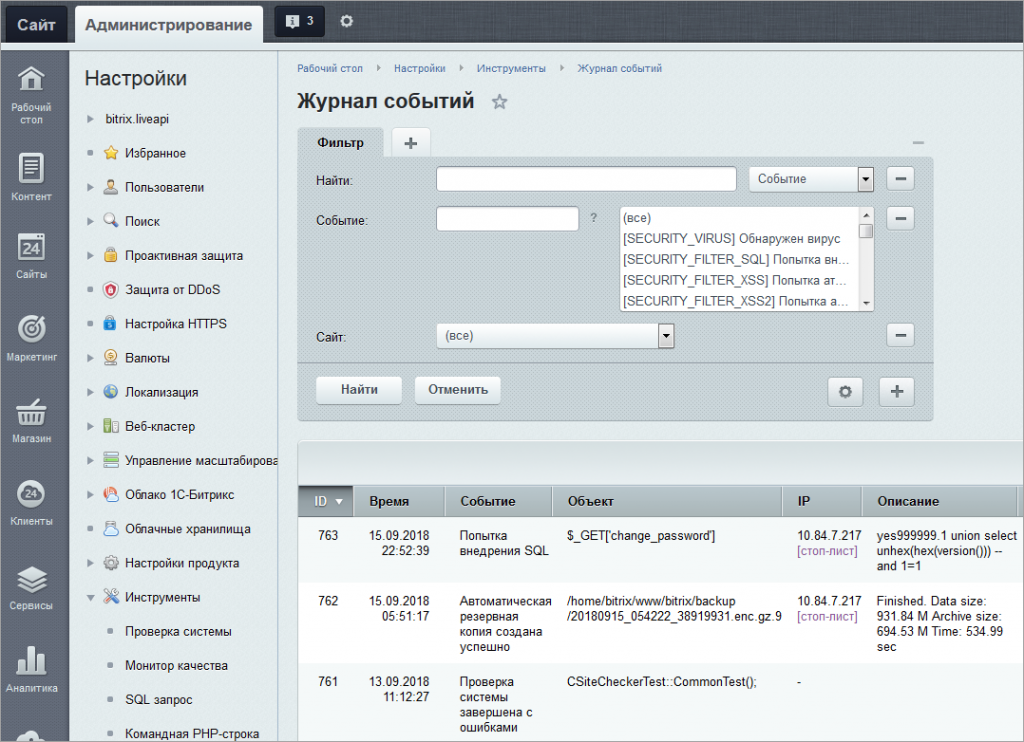

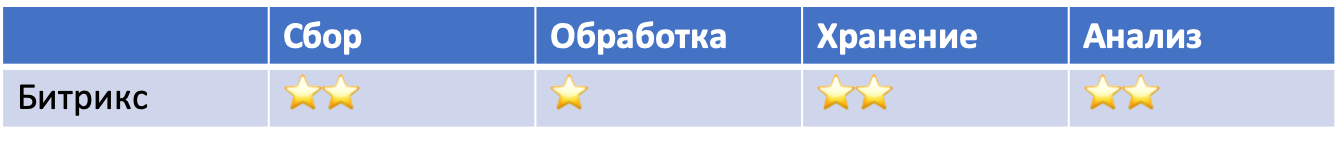

With Bitrix, the situation is an order of magnitude better. The documentation describes the formats of the event logs and, interestingly, the intrusion log, which contains events related to potential threats to the cloud platform. From it you can get the IP address, user or guest name, event source, time, User Agent, event type, etc. True, you can work with these events either from the control panel of the cloud itself or by exporting the data in MS Excel format. It is currently difficult to automate work with Bitrix logs, and you will have to do part of the work manually (export the report and load it into your SIEM). But if you recall that until relatively recently even this was not possible, it is great progress. At the same time, I want to note that many foreign cloud providers offer similar "beginner" functionality: either look through the logs by eye in the control panel, or download the data yourself (although most export data in .csv format, not Excel).
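The manual "export the report and load it into your SIEM" step can at least be scripted once the file is on disk. Below is a minimal sketch, assuming a .csv export with a header row; the column names and event types in the sample are hypothetical and do not come from any provider's documentation. It turns each row into a JSON line, a format most SIEMs can ingest.

```python
import csv
import io
import json


def csv_export_to_json_lines(csv_text):
    """Convert a CSV export (header row plus data rows) into JSON lines."""
    reader = csv.DictReader(io.StringIO(csv_text))
    lines = []
    for row in reader:
        # Drop empty cells so the SIEM does not index blank fields.
        event = {key: value for key, value in row.items() if value}
        lines.append(json.dumps(event))
    return lines


# Hypothetical export; real column names depend on the provider.
SAMPLE_EXPORT = """timestamp,ip,user,event_type,user_agent
2019-05-01T10:15:00Z,203.0.113.7,guest,SECURITY_SQL_INJECTION,curl/7.58.0
2019-05-01T10:16:30Z,198.51.100.23,ivanov,USER_AUTHORIZE,
"""

if __name__ == "__main__":
    for line in csv_export_to_json_lines(SAMPLE_EXPORT):
        print(line)
```

An Excel export would need an extra conversion step to .csv first; the rest of the pipeline stays the same.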

If we set aside the option with no logs at all, cloud providers usually offer three ways of monitoring security events: dashboards, data export, and access via an API. The first seems to solve many problems for you, but this is not entirely true: if there are several logs, you have to switch between the screens that display them and you lose the big picture. In addition, the cloud provider is unlikely to let you correlate security events or analyze them from a security point of view at all (usually you are dealing with raw data that you have to make sense of yourself). There are exceptions, and we will talk about them later. Finally, it is worth asking which events your cloud provider records, in what format, and how well they match your IS monitoring process. Take the identification and authentication of users and guests. Bitrix lets you record the date and time of the event, the name of the user or guest (if the Web Analytics module is present), the object that was accessed, and other elements typical of a web site. But corporate IS services may also need to know whether the user logged into the cloud from a trusted device (in a corporate network, for example, Cisco ISE handles this task). And what about such a simple thing as a geo-IP function, which helps determine whether a cloud service user account has been stolen? Even if the cloud provider gives it to you, it is not enough. Cisco CloudLock, for instance, does not just analyze geolocation: it uses machine learning, analyzes historical data for each user, and tracks various anomalies in identification and authentication attempts. Only MS Azure has similar functionality (with the corresponding subscription).
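To make the geo-IP idea concrete, here is a deliberately naive sketch: it flags a login from a country in which the user has not been seen before. It assumes the country has already been resolved from the IP address by some geo-IP lookup, and the event fields (`user`, `country`, `time`) are hypothetical. It is much simpler than the machine-learning approach described above and only illustrates the baseline-then-deviation logic.

```python
from collections import defaultdict


def find_new_country_logins(events):
    """Return logins from a country the user has not been seen in before.

    `events` must be sorted by time; each one is a dict with the
    hypothetical keys "user", "country" and "time".
    """
    seen = defaultdict(set)
    alerts = []
    for event in events:
        user, country = event["user"], event["country"]
        # The first login of a user only establishes a baseline.
        if seen[user] and country not in seen[user]:
            alerts.append(event)
        seen[user].add(country)
    return alerts


LOGINS = [
    {"user": "ivanov", "country": "RU", "time": "2019-05-01T09:00:00Z"},
    {"user": "ivanov", "country": "RU", "time": "2019-05-02T09:05:00Z"},
    {"user": "petrov", "country": "RU", "time": "2019-05-02T10:00:00Z"},
    {"user": "ivanov", "country": "BR", "time": "2019-05-02T11:30:00Z"},
]

if __name__ == "__main__":
    for alert in find_new_country_logins(LOGINS):
        print("Unusual login location:", alert)
```

A real implementation would also have to deal with VPNs, business travel and the time between logins, which is exactly why providers and overlay tools rely on historical profiling rather than a single rule.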

There is one more difficulty: since IS monitoring is a new topic for many cloud providers and they are only starting to deal with it, they are constantly changing something in their solutions. Today they have one version of the API, tomorrow another, the day after tomorrow a third. You have to be prepared for this. The same goes for functionality, which can change and which your information security monitoring system has to take into account. For example, Amazon initially had separate cloud event monitoring services, AWS CloudTrail and Amazon CloudWatch. Then came a separate IS event monitoring service, Amazon GuardDuty. After some time, Amazon launched a new management system, AWS Security Hub, which includes analysis of the data received from GuardDuty, Amazon Inspector, Amazon Macie and several others. Another example is AzLog, the tool for integrating Azure logs with a SIEM. Many SIEM vendors actively used it until, in 2018, Microsoft announced the end of its development and support, which left many customers who relied on this tool with a problem (we will talk later about how it was resolved).

Therefore, keep a close eye on all the monitoring functions that your cloud provider offers you. Or trust external solution providers to act as intermediaries between your SOC and the cloud you want to monitor. Yes, it will be more expensive (although not always), but on the other hand you will shift all responsibility onto someone else's shoulders. Or not all of it?.. Recall the concept of shared security and you will see that nothing can be shifted: you will have to figure out for yourself how different cloud providers support information security monitoring of your data, applications, virtual machines and other resources located in the cloud. We will start with what Amazon offers in this area.

Yes, yes, I understand that Amazon is not the best example in view of the fact that it is an American service and can be blocked as part of the fight against extremism and the dissemination of information prohibited in Russia. But in this publication, I would just like to show how different cloud platforms differ in information security capabilities and what you should pay attention to when transferring your key processes to the clouds from a security point of view. Well, if some of the Russian developers of cloud solutions get something useful for themselves, then this will be excellent.

First I must say that Amazon is not an impregnable fortress. Various incidents regularly happen to its clients. For example, the names, addresses, birth dates and telephone numbers of 198 million voters were stolen from Deep Root Analytics. The Israeli company Nice Systems had 14 million Verizon subscriber records stolen. At the same time, AWS's built-in capabilities allow you to detect a wide range of incidents. For example:

This discrepancy is due to the fact that, as we found out above, the customer is responsible for the security of customer data. If the customer did not bother to enable the protective mechanisms and did not turn on the monitoring tools, they will learn about the incident only from the media or from their own clients.

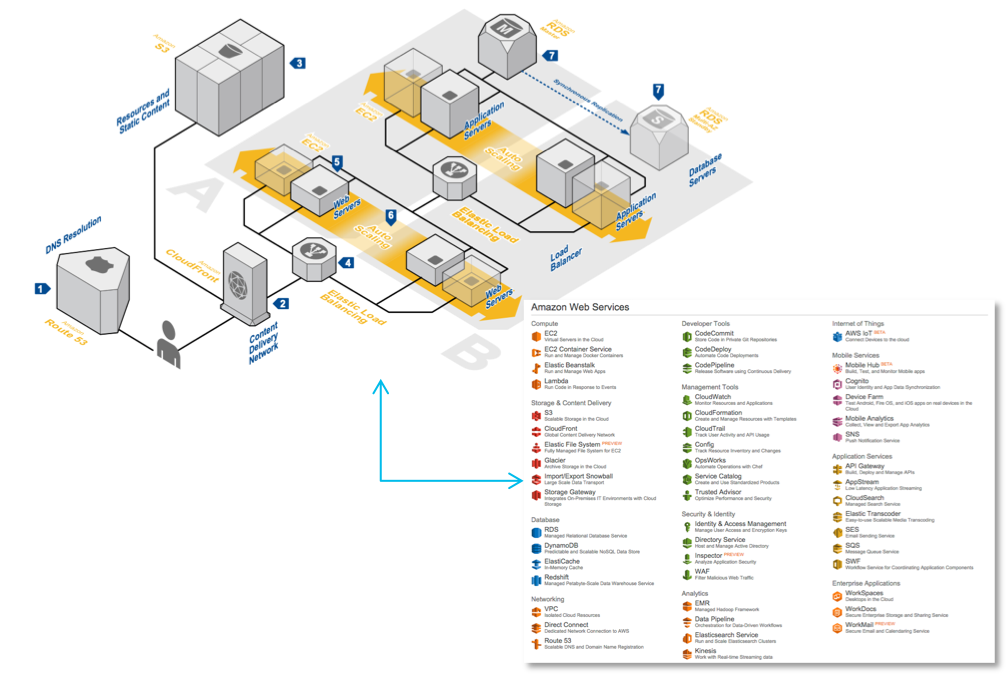

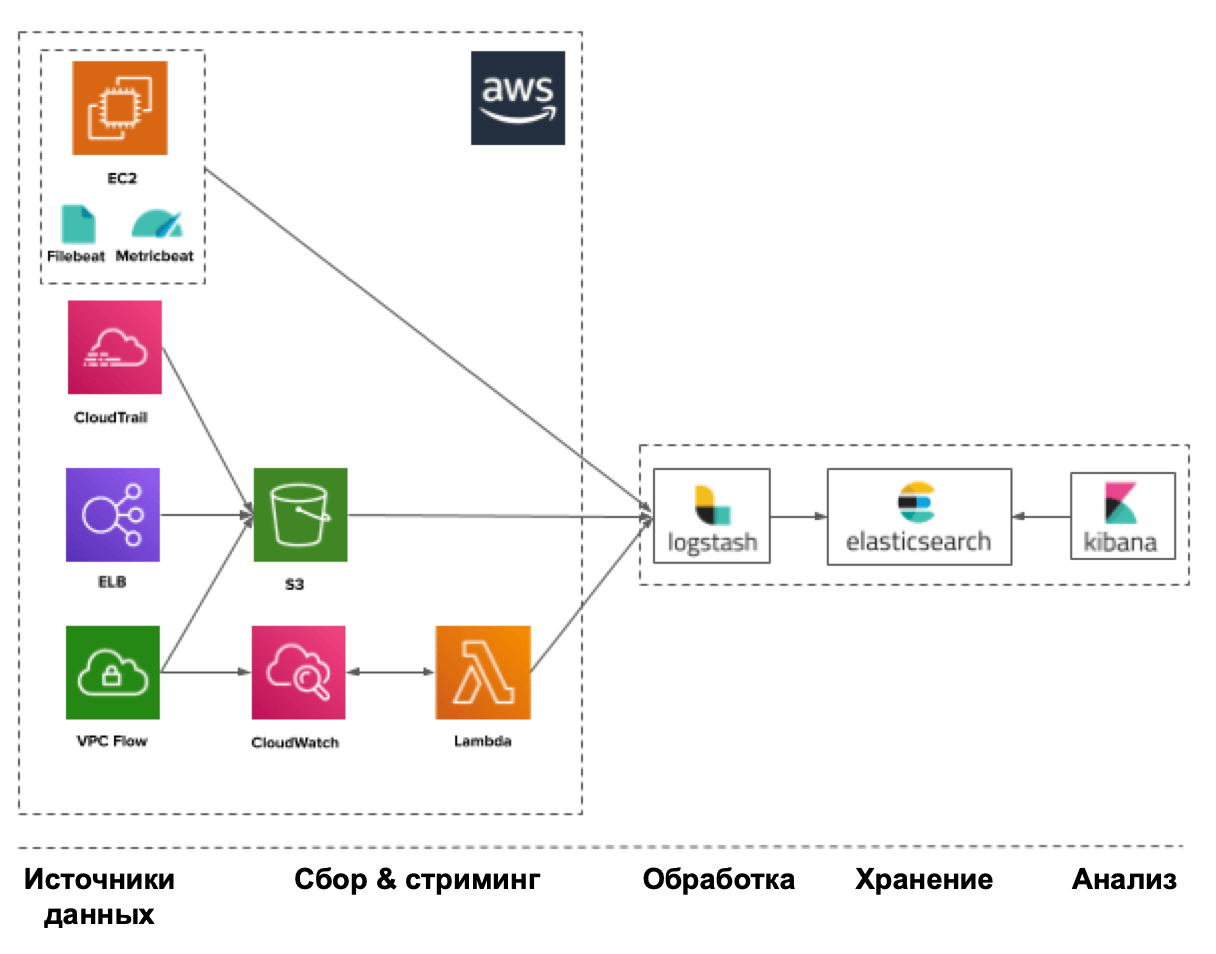

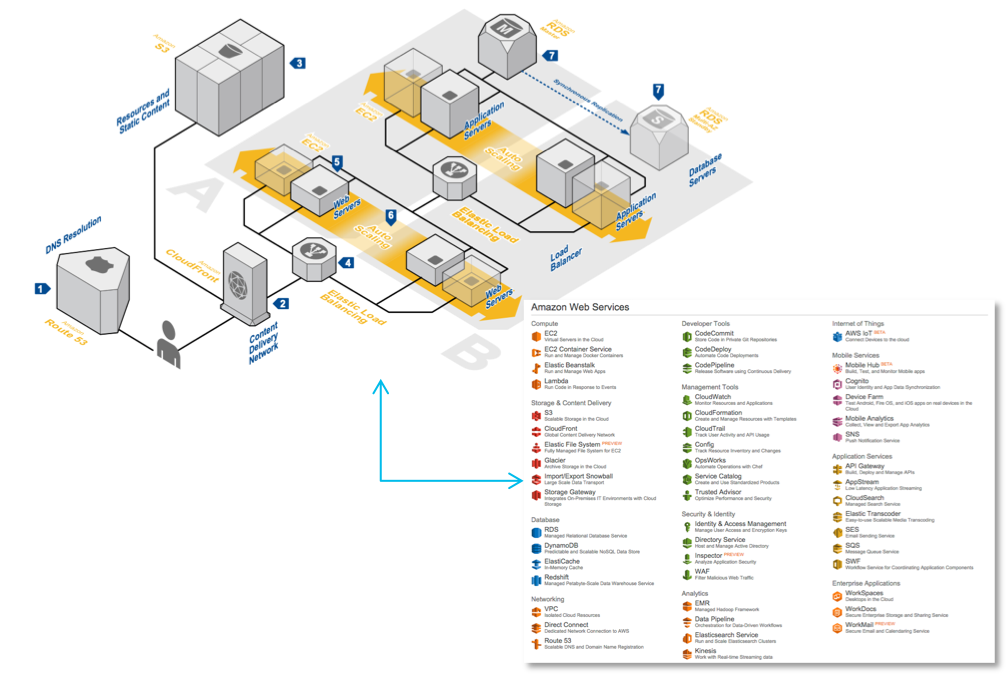

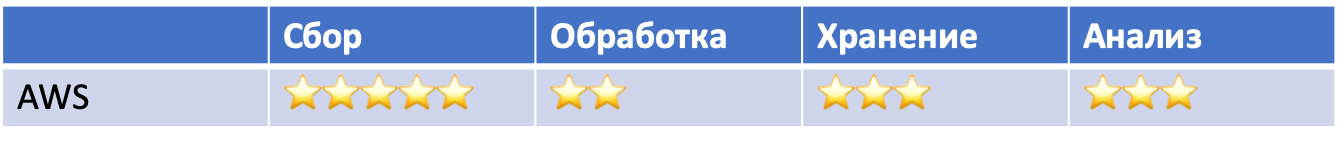

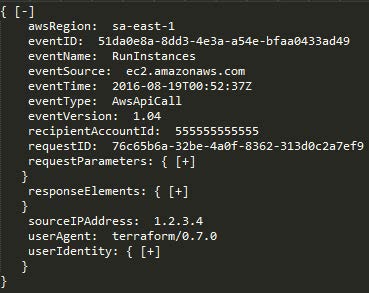

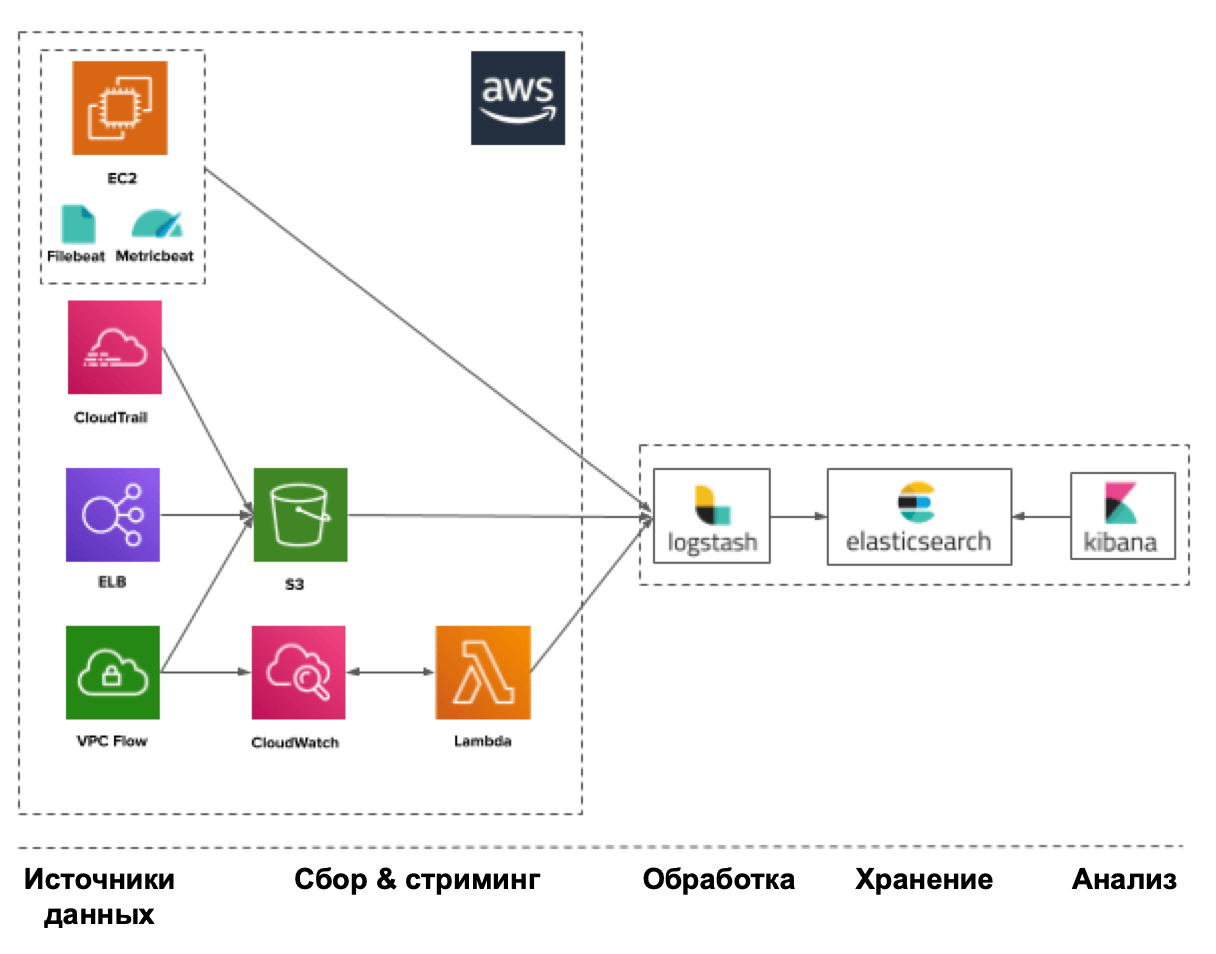

To detect incidents, you can use a wide range of monitoring services developed by Amazon (although they are often complemented by external tools, such as osquery). In AWS, all user actions are tracked, regardless of how they are carried out: through the management console, the command line, an SDK or other AWS services. All records of the actions of each AWS account (including user name, action, service, activity parameters and result) and of API usage are available through AWS CloudTrail. You can view these events (for example, logins to the AWS IAM console) in the CloudTrail console, analyze them using Amazon Athena, or hand them over to external solutions such as Splunk, AlienVault, etc. The AWS CloudTrail logs themselves are placed in your Amazon S3 bucket.
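As an illustration of what working with these records looks like once a log file has been pulled from the bucket, here is a small sketch that extracts failed console logins. It relies on the CloudTrail record fields `eventName`, `eventTime`, `sourceIPAddress`, `userIdentity` and `responseElements`; the sample records are made up, and fetching and decompressing the file from S3 is left out.

```python
import json


def failed_console_logins(cloudtrail_json):
    """Return (time, user, source IP) for each failed console login."""
    records = json.loads(cloudtrail_json).get("Records", [])
    failures = []
    for record in records:
        if record.get("eventName") != "ConsoleLogin":
            continue
        response = record.get("responseElements") or {}
        if response.get("ConsoleLogin") == "Failure":
            identity = record.get("userIdentity") or {}
            failures.append((
                record.get("eventTime"),
                identity.get("userName", "unknown"),
                record.get("sourceIPAddress"),
            ))
    return failures


# Made-up sample in the shape of a CloudTrail log file.
SAMPLE_LOG = json.dumps({"Records": [
    {"eventTime": "2019-05-01T10:00:00Z", "eventName": "ConsoleLogin",
     "sourceIPAddress": "203.0.113.7",
     "userIdentity": {"type": "IAMUser", "userName": "alice"},
     "responseElements": {"ConsoleLogin": "Failure"}},
    {"eventTime": "2019-05-01T10:01:00Z", "eventName": "ConsoleLogin",
     "sourceIPAddress": "198.51.100.23",
     "userIdentity": {"type": "IAMUser", "userName": "bob"},
     "responseElements": {"ConsoleLogin": "Success"}},
    {"eventTime": "2019-05-01T10:02:00Z", "eventName": "RunInstances",
     "sourceIPAddress": "198.51.100.23",
     "userIdentity": {"type": "IAMUser", "userName": "bob"},
     "responseElements": None},
]})

if __name__ == "__main__":
    for failure in failed_console_logins(SAMPLE_LOG):
        print(failure)
```

The same filtering could be expressed as a query in Athena or as a search in a SIEM; the point is only that the raw records already carry enough context (who, from where, with what result) for simple detections.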

Two other AWS services provide further important monitoring capabilities. First, Amazon CloudWatch is a monitoring service for AWS resources and applications that, among other things, can detect various anomalies in your cloud. All built-in AWS services, such as Amazon Elastic Compute Cloud (servers), Amazon Relational Database Service (databases), Amazon Elastic MapReduce (data analysis) and 30 other Amazon services, use Amazon CloudWatch to store their logs. Developers can use the open Amazon CloudWatch API to add log monitoring to their own applications and services, which expands the range of events that can be analyzed in the context of information security.

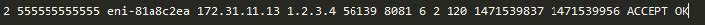

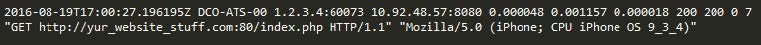

Second, the VPC Flow Logs service allows you to analyze the network traffic sent or received by your AWS servers (externally or internally), as well as traffic between microservices. When any of your AWS VPC resources interacts with the network, VPC Flow Logs records information about the network traffic, including the source and destination network interfaces, as well as the IP addresses, ports, protocol, number of bytes and number of packets observed. Those with experience in local network security will recognize this as an analogue of the NetFlow flows that can be generated by switches, routers and enterprise-level firewalls. These logs are important for information security monitoring because, unlike user and application activity events, they let you keep sight of network interactions inside the AWS virtual private cloud environment.
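A flow log record in the default format is a single space-separated line, so it is easy to process with a few lines of code. The sketch below parses such records and picks out rejected TCP connections to port 22; the field order follows the default (version 2) format, while the sample lines and the choice of "rejected SSH" as the thing to look for are just an illustration.

```python
from collections import namedtuple

# Field order of the default VPC Flow Logs record format.
FIELDS = ("version account_id interface_id srcaddr dstaddr srcport dstport "
          "protocol packets bytes start end action log_status").split()
FlowRecord = namedtuple("FlowRecord", FIELDS)


def parse_flow_record(line):
    """Parse one space-separated flow log line into a FlowRecord."""
    parts = line.split()
    if len(parts) != len(FIELDS):
        raise ValueError("unexpected number of fields: %d" % len(parts))
    return FlowRecord(*parts)


def rejected_ssh_attempts(lines):
    """Return (source, destination) pairs for rejected TCP/22 flows."""
    hits = []
    for line in lines:
        record = parse_flow_record(line)
        # NODATA / SKIPDATA records carry "-" instead of traffic values.
        if record.log_status != "OK":
            continue
        if (record.action == "REJECT" and record.dstport == "22"
                and record.protocol == "6"):  # 6 = TCP
            hits.append((record.srcaddr, record.dstaddr))
    return hits


SAMPLE_LINES = [
    "2 123456789010 eni-0a1b2c3d 172.31.16.139 172.31.16.21 20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK",
    "2 123456789010 eni-0a1b2c3d 203.0.113.12 172.31.16.21 49761 22 6 3 180 1418530010 1418530070 REJECT OK",
    "2 123456789010 eni-0a1b2c3d - - - - - - - 1431280876 1431280934 - NODATA",
]

if __name__ == "__main__":
    print(rejected_ssh_attempts(SAMPLE_LINES))
```

Custom flow log formats add or reorder fields, in which case the `FIELDS` list would have to match the configured format.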

Thus, these three AWS services — AWS CloudTrail, Amazon CloudWatch, and VPC Flow Logs — together provide a reasonably effective view of your account usage, user behavior, infrastructure management, application and service activity, and network activity. For example, they can be used to detect the following anomalies:

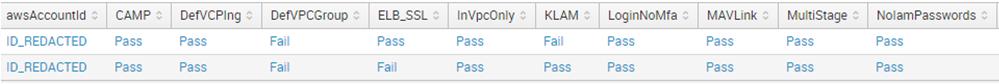

Amazon Web Services has also developed other cybersecurity services that solve many other tasks. For example, AWS has a built-in service for auditing policies and configurations, AWS Config. This service provides continuous auditing of your AWS resources and their configurations. Consider a simple example: suppose you want to make sure that user passwords are disabled on all your servers and that access is possible only with certificates. AWS Config makes it easy to verify this for all your servers. There are other policies that can be applied to your cloud servers: "No server can use port 22", "Only administrators can change the firewall rules" or "Only user Ivashko can create new user accounts, and he can do it only on Tuesdays." In the summer of 2016, AWS Config was expanded to automate the detection of violations of the policies you have defined. AWS Config Rules are, in essence, continuous configuration queries against the Amazon services you use, which generate events when the corresponding policies are violated. For example, instead of periodically running AWS Config queries to verify that all virtual server drives are encrypted, you can use AWS Config Rules to check server drives for this condition continuously. And, most importantly in the context of this publication, any violations generate events that your IS service can analyze.
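The idea of "policies as continuous configuration checks that emit events" can be sketched in a few lines. The code below is not the AWS Config API: the resource snapshots, the rule names and the `evaluate` function are all hypothetical and only model the logic, where every rule is a predicate over a resource's configuration and every failure becomes an event for the IS service.

```python
def port_22_closed(resource):
    """Policy: no server may expose port 22."""
    return 22 not in resource.get("open_ports", [])


def volumes_encrypted(resource):
    """Policy: every attached drive must be encrypted."""
    return all(v.get("encrypted") for v in resource.get("volumes", []))


# Hypothetical rule set; names are illustrative only.
RULES = {
    "no-port-22": port_22_closed,
    "encrypted-volumes": volumes_encrypted,
}


def evaluate(resources, rules=RULES):
    """Check every resource against every rule and emit violation events."""
    events = []
    for resource in resources:
        for name, check in rules.items():
            if not check(resource):
                events.append({
                    "resource": resource["id"],
                    "rule": name,
                    "status": "NON_COMPLIANT",
                })
    return events


# Hypothetical configuration snapshots of two servers.
SNAPSHOT = [
    {"id": "server-1", "open_ports": [443],
     "volumes": [{"encrypted": True}]},
    {"id": "server-2", "open_ports": [22, 443],
     "volumes": [{"encrypted": True}, {"encrypted": False}]},
]

if __name__ == "__main__":
    for event in evaluate(SNAPSHOT):
        print(event)
```

Running such checks on every configuration change, rather than on a schedule, is what turns a periodic audit into the continuous one described above.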

AWS also has its equivalents to traditional corporate information security solutions that also generate security events that you can and should analyze:

I will not go into detail on all the Amazon services that may be useful in the context of information security. The main thing to understand is that all of them can generate events that we can and should analyze from an information security point of view, using both Amazon's built-in capabilities and external solutions, for example a SIEM, which can bring the security events into your monitoring center and analyze them there together with events from other cloud services or from the internal infrastructure, the perimeter or mobile devices.

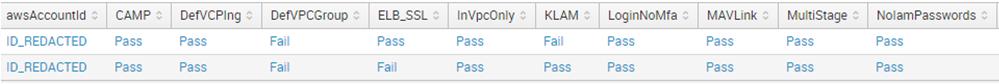

In any case, it all starts with the data sources that provide you with IS events. Such sources include, but are not limited to:

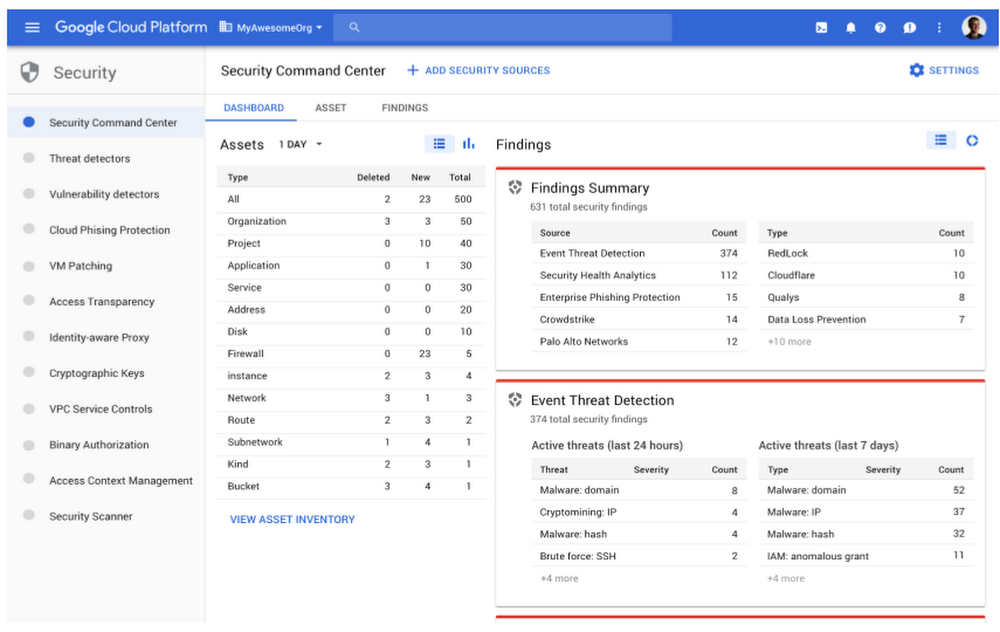

While Amazon offers this whole range of event sources and tools for generating them, its ability to analyze the collected data in the context of information security is very limited. You will have to study the available logs yourself, looking for the relevant indicators of compromise in them. AWS Security Hub, which Amazon launched recently, aims to solve this problem by becoming a sort of cloud-based SIEM for AWS. But so far it is only at the beginning of its journey and is limited both by the number of sources it works with and by other restrictions imposed by the architecture and subscriptions of Amazon itself.

I do not want to get into a long debate about which of the three cloud providers (Amazon, Microsoft or Google) is better (especially since each has its own specifics and suits its own tasks); let's focus on the security monitoring capabilities these players provide. I must admit that Amazon AWS was one of the first in this segment and has therefore advanced the farthest in terms of its security functions (although many admit that using them is difficult). But this does not mean that we will ignore the opportunities that Microsoft and Google offer us.

Microsoft products have always been distinguished by their "openness", and in Azure the situation is similar. For example, while AWS and GCP always proceed from the concept of "everything that is not allowed is prohibited", Azure takes the exact opposite approach. For example, when you create a virtual network in the cloud and a virtual machine in it, all ports and protocols are open and enabled by default. Therefore, you will have to spend a little more effort on the initial setup of the access control system in the Microsoft cloud. And this also imposes more stringent requirements on you in terms of monitoring activity in the Azure cloud.

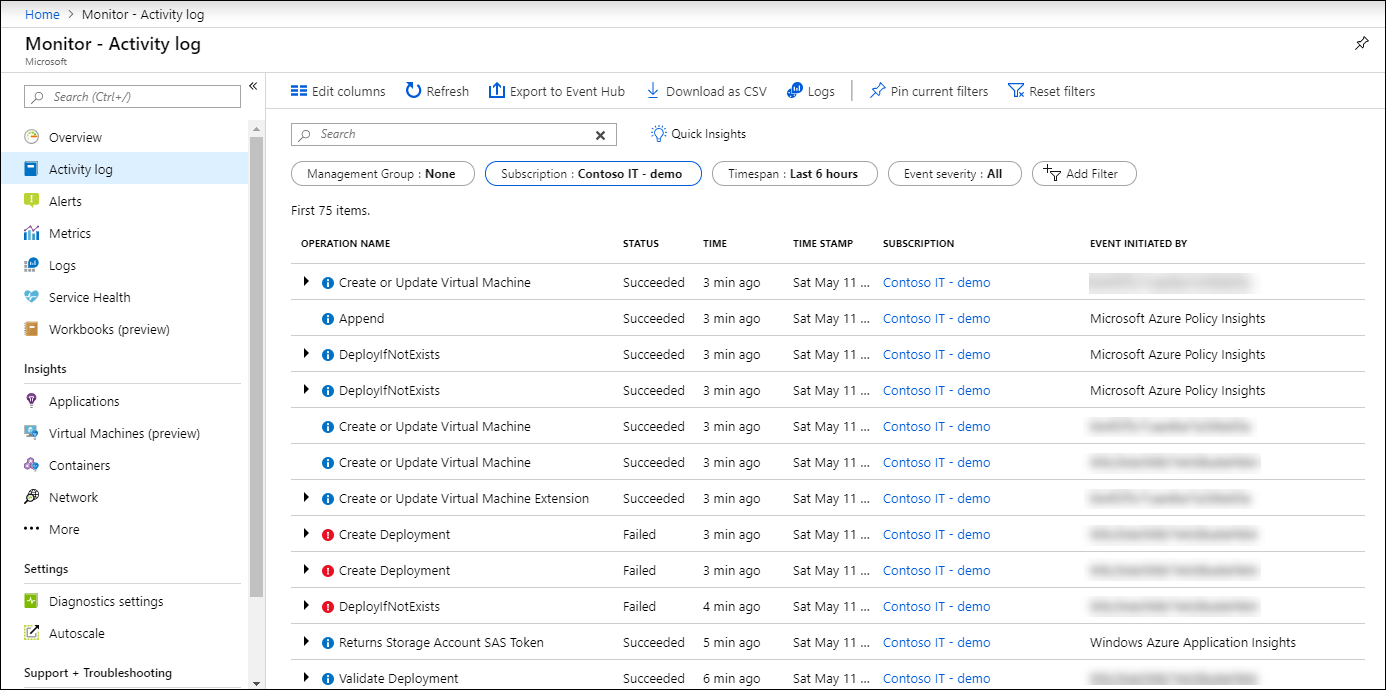

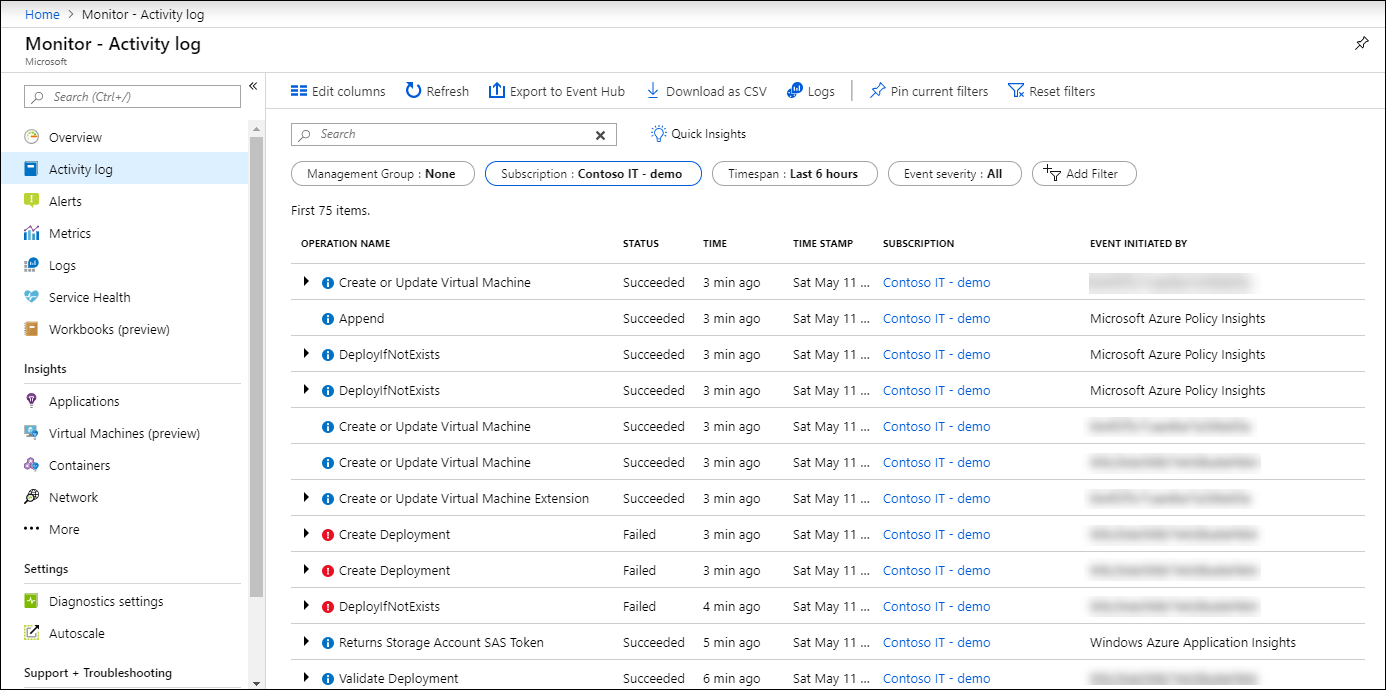

AWS has a peculiarity: when you monitor your virtual resources and they are located in different regions, it is difficult to combine all the events and analyze them in a unified way. To get around this you have to resort to various tricks, such as writing custom code for AWS Lambda that carries events between regions. There is no such problem in Azure: its Activity Log mechanism monitors all activity throughout the organization without restrictions. The same applies to AWS Security Hub, which Amazon recently developed to consolidate many security functions within a single security center, but only within its region, which, however, is not relevant for Russia. Azure has its own Security Center, which is not bound by regional restrictions and provides access to all the security features of the cloud platform. Moreover, it can give different local teams their own set of protective capabilities, including the security events they manage. AWS Security Hub is still striving to become like Azure Security Center. But there is a fly in the ointment: you can squeeze out of Azure a lot of what was described earlier for AWS, but this is most convenient only for Azure AD, Azure Monitor and Azure Security Center. All other Azure protection mechanisms, including security event analysis, are not yet managed in the most convenient way. Part of the problem is solved by the API that permeates all Microsoft Azure services, but this requires additional effort to integrate your cloud with your SOC, as well as qualified specialists (as, in fact, with any other SIEM working with cloud APIs). Some SIEMs, which will be discussed later, already support Azure and can automate the task of monitoring it, but there are difficulties here too: not all of them can collect all the logs that Azure has.

Event collection and monitoring in Azure is provided by the Azure Monitor service, which is the main tool for collecting, storing and analyzing data in the Microsoft cloud and its resources: Git repositories, containers, virtual machines, applications, etc. All data collected by Azure Monitor falls into two categories: metrics, collected in real time and describing key performance indicators of the Azure cloud, and logs, which contain data organized into records that characterize certain aspects of the activity of Azure resources and services. In addition, using the Data Collector API, the Azure Monitor service can collect data from any REST source to build its own monitoring scenarios.
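Sending your own events through the Data Collector API comes down to a signed HTTP POST. As far as I understand the documented scheme, the request carries a `SharedKey` authorization header built from an HMAC-SHA256 over the method, body length, content type, date and resource path. The sketch below only builds that header and sends nothing; the workspace ID and key are placeholders, and the exact format should be checked against the current Microsoft documentation before relying on it.

```python
import base64
import hashlib
import hmac


def build_authorization(workspace_id, shared_key_b64, rfc1123_date, body,
                        content_type="application/json",
                        resource="/api/logs"):
    """Build the SharedKey authorization header value for a POST request.

    `body` is the request payload as bytes; `shared_key_b64` is the
    base64-encoded workspace key.
    """
    string_to_sign = "\n".join([
        "POST",
        str(len(body)),
        content_type,
        "x-ms-date:" + rfc1123_date,
        resource,
    ])
    key = base64.b64decode(shared_key_b64)
    digest = hmac.new(key, string_to_sign.encode("utf-8"),
                      hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    return "SharedKey {}:{}".format(workspace_id, signature)


# Placeholder credentials for illustration only.
SAMPLE_KEY = base64.b64encode(b"not-a-real-key").decode("ascii")

if __name__ == "__main__":
    payload = b'[{"event": "test", "source": "custom-app"}]'
    print(build_authorization("my-workspace", SAMPLE_KEY,
                              "Mon, 06 May 2019 10:00:00 GMT", payload))
```

The date passed in here must be the same value that is sent in the `x-ms-date` request header, otherwise the signature will not match.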

Here are a few sources of security events that Azure offers you that you can access through the Azure Portal, CLI, PowerShell, or REST API (and some only through the Azure Monitor / Insight API):

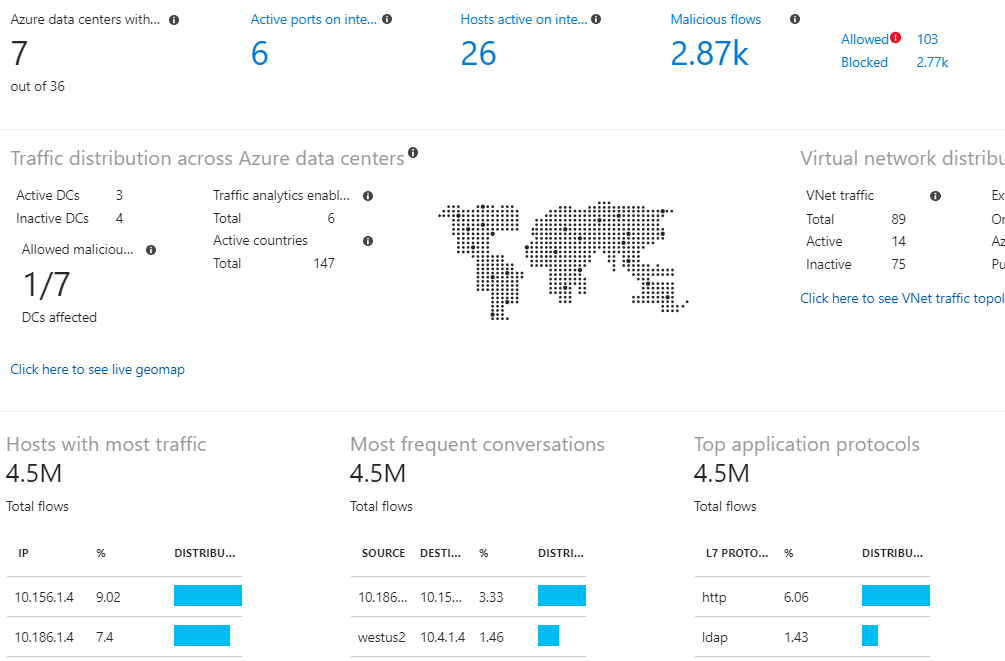

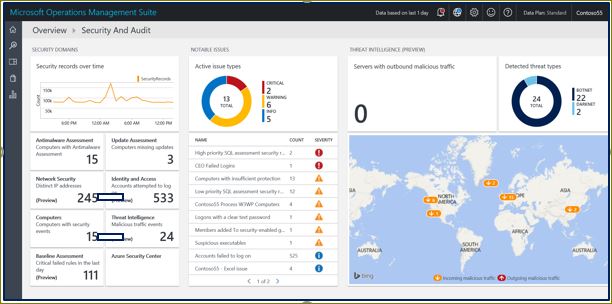

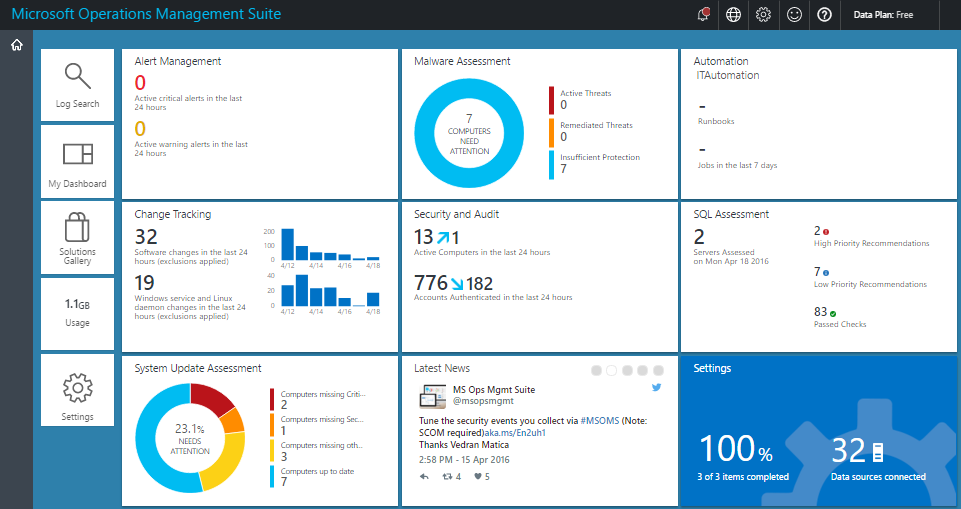

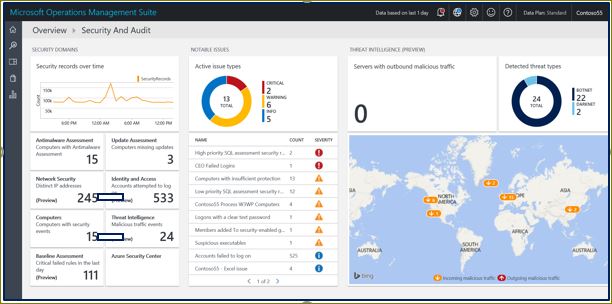

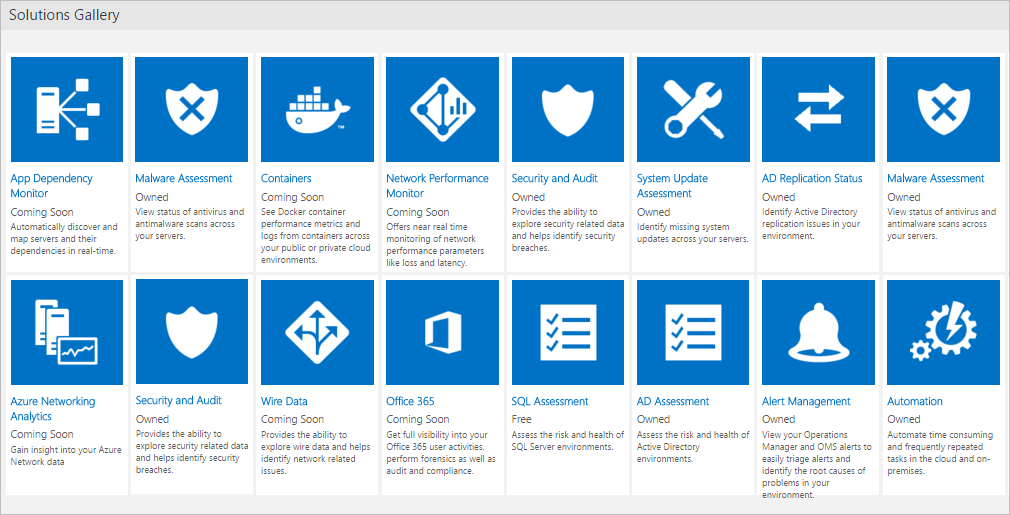

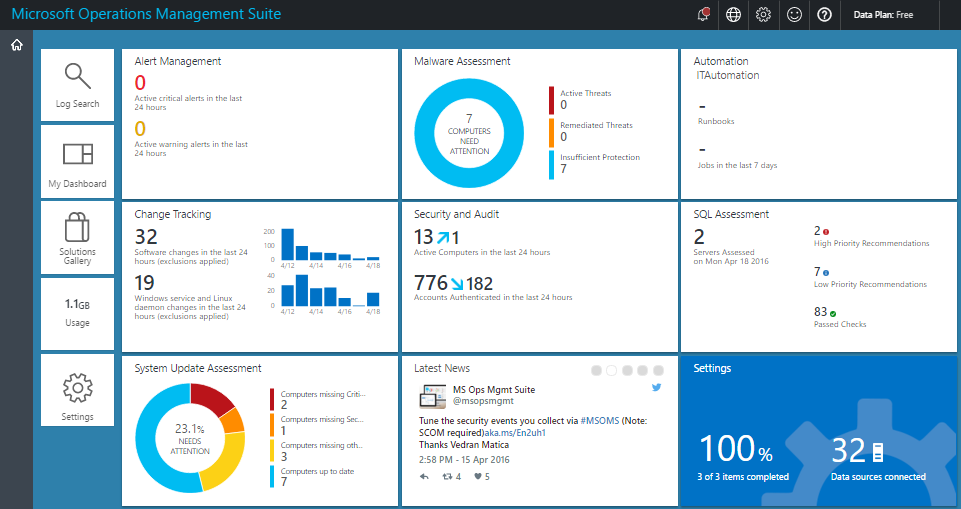

For monitoring, you can use an external SIEM or the built-in Azure Monitor and its extensions. We will talk more about IS event management systems later; for now, let's see what Azure itself offers for analyzing data in the context of security. The main screen for everything related to security in Azure Monitor is the Log Analytics Security and Audit Dashboard (the free version stores a limited amount of events for only one week). This panel is divided into 5 main areas that visualize summary statistics on what is happening in your cloud environment:

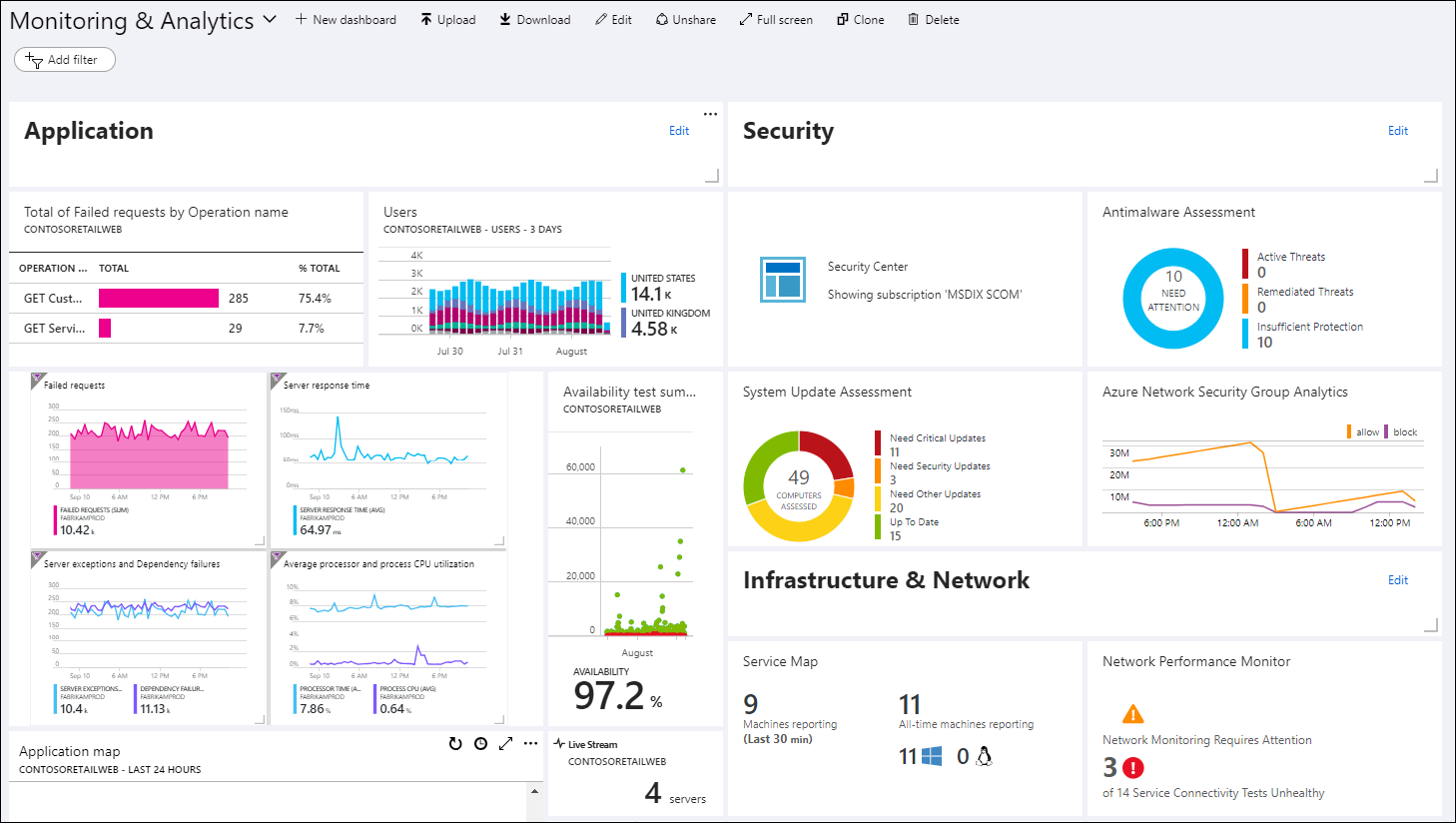

Azure Monitor extensions include Azure Key Vault (protection of cryptographic keys in the cloud), Malware Assessment (analysis of protection against malicious code on virtual machines), Azure Application Gateway Analytics (analysis of, among other things, cloud firewall logs), etc. These tools, enriched with certain event processing rules, let you visualize various aspects of the activity of cloud services, including security, and identify deviations from normal operation. But, as often happens, any additional functionality requires a corresponding paid subscription, and that means financial investments you need to plan in advance.

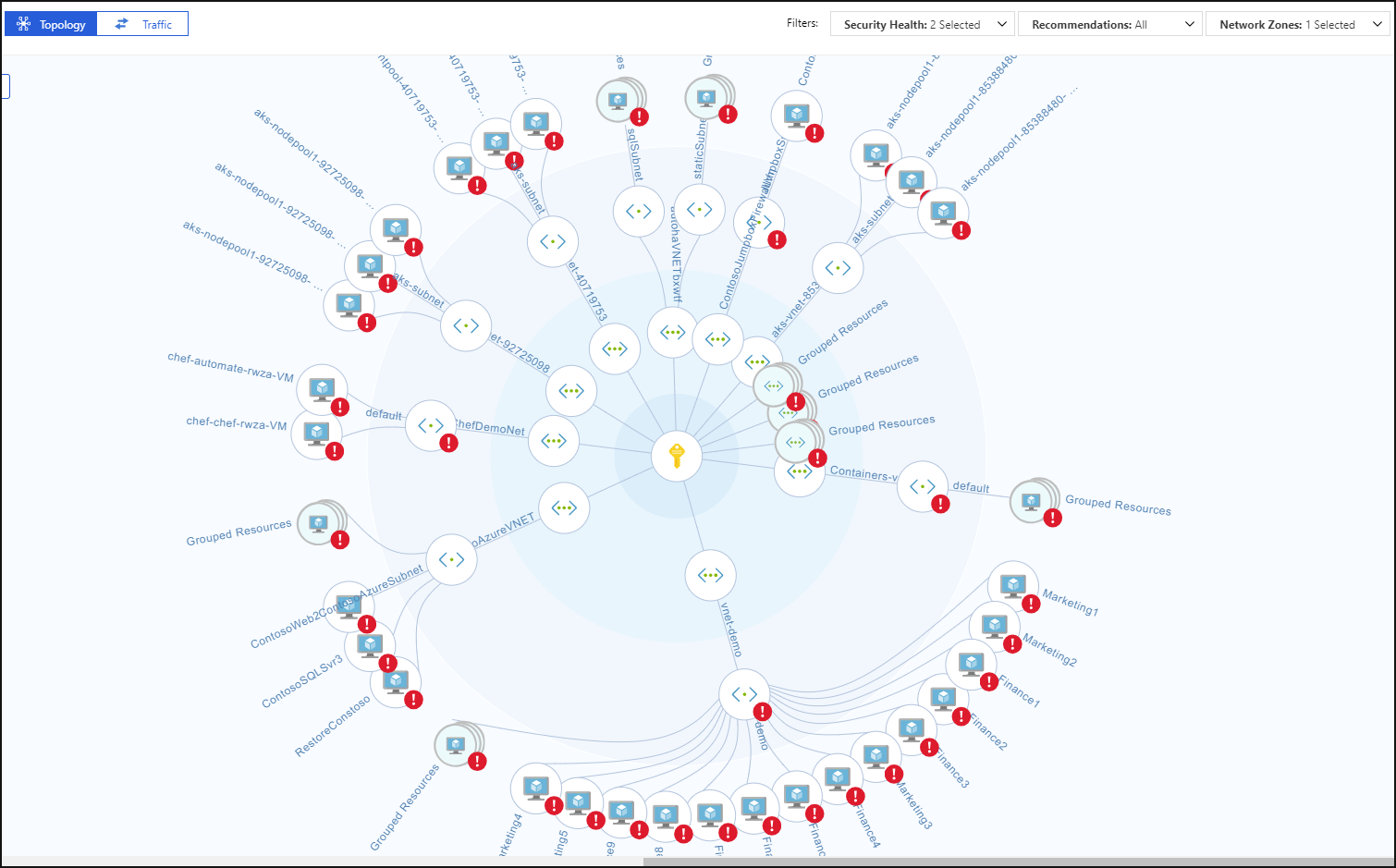

Azure has a number of built-in threat monitoring capabilities that are integrated into Azure AD, Azure Monitor and Azure Security Center. Among them are, for example, detecting the interaction of virtual machines with known malicious IPs (thanks to integration with Microsoft Threat Intelligence services), detecting malware in the cloud infrastructure by receiving alerts from virtual machines located in the cloud, "password guessing" attacks on virtual machines, vulnerabilities in the configuration of the user identification system, logins from anonymizers or infected hosts, account leaks, logins from unusual locations, etc. Azure today is one of the few cloud providers that offers built-in Threat Intelligence capabilities to enrich the collected information security events.

As mentioned above, the protective functionality, and consequently the security events it generates, is not equally available to all users: it requires a subscription that includes the functionality you need, which in turn generates the corresponding events for information security monitoring. For example, some of the functions for monitoring account anomalies described in the previous paragraph are available only with the P2 premium license for Azure AD. Without it, as in the case of AWS, you will have to analyze the collected security events "manually". Also, depending on the type of Azure AD license, not all events will be available to you for analysis.

On the Azure portal, you can both manage search queries against the logs you are interested in and configure dashboards to visualize key information security indicators. In addition, there you can choose Azure Monitor extensions, which expand the functionality of Azure Monitor logs and give you a deeper analysis of events from a security point of view.

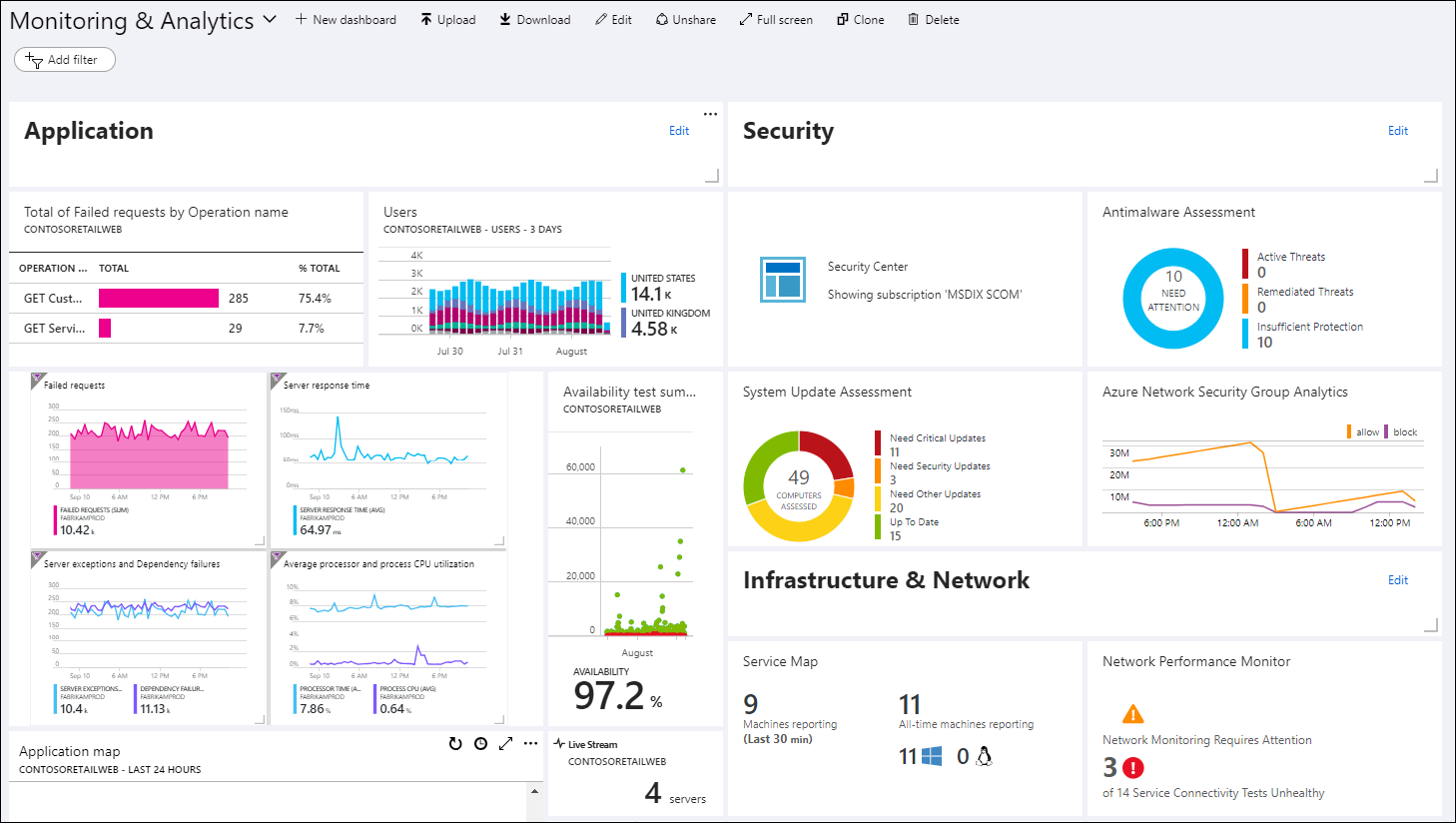

If you need not only the ability to work with logs but a comprehensive security center for your Azure cloud platform, including IS policy management, then you should look at Azure Security Center, most of whose useful functions are paid, for example threat detection, monitoring outside of Azure, compliance assessment, etc. (in the free version you only have access to a security assessment and recommendations for resolving the identified problems). It consolidates all security issues in one place. In fact, we can talk about a higher level of information security than Azure Monitor provides, since in this case the data collected throughout your cloud environment is enriched using a variety of sources, such as Azure, Office 365, Microsoft CRM online, Microsoft Dynamics AX, outlook.com, MSN.com, the Microsoft Digital Crimes Unit (DCU) and the Microsoft Security Response Center (MSRC), on top of which various sophisticated machine learning algorithms and behavioral analytics are applied, which should ultimately increase the efficiency of threat detection and response.

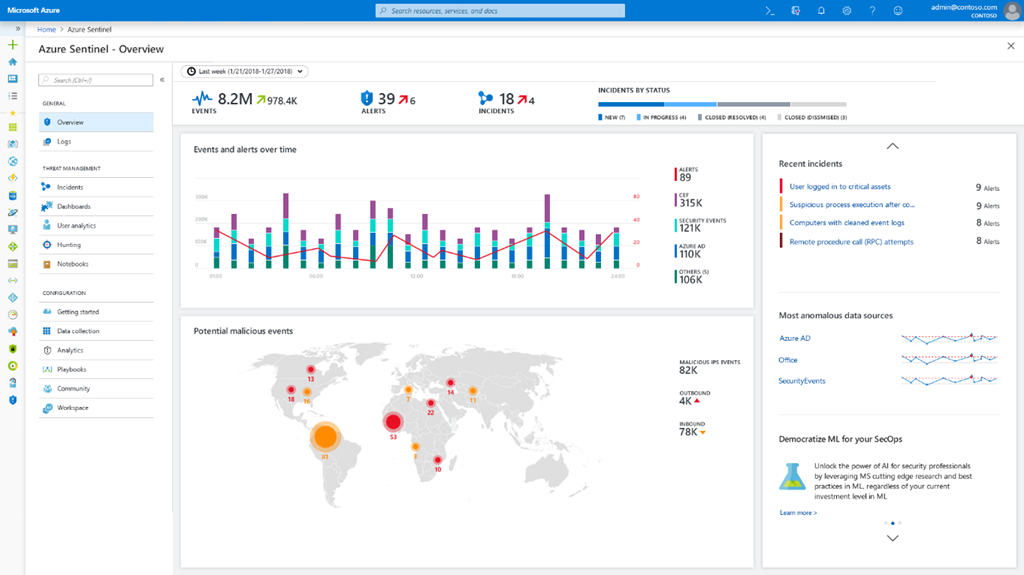

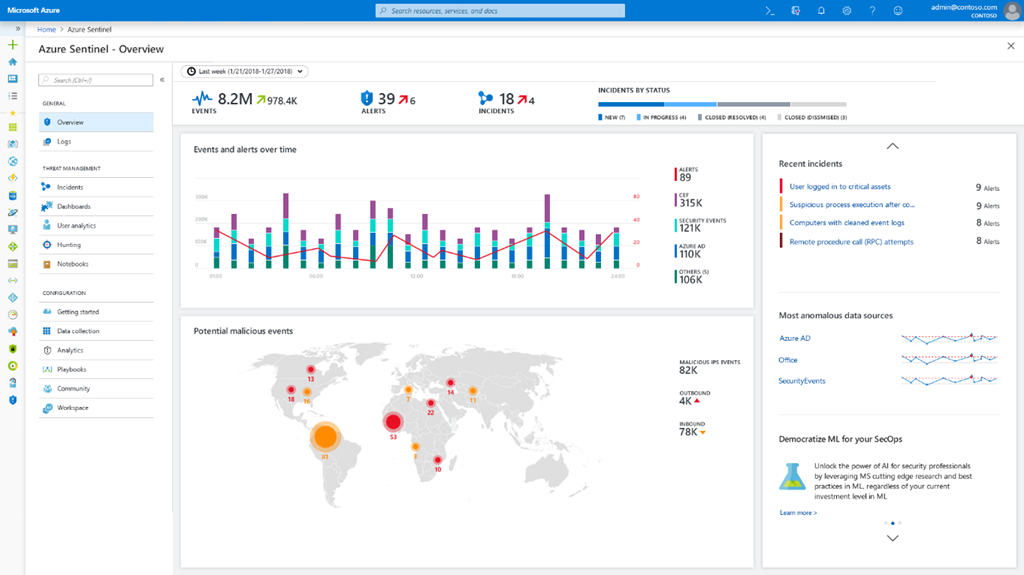

Azure has its own SIEM, which appeared at the beginning of 2019. This is Azure Sentinel, which relies on data from Azure Monitor and can also integrate with external security solutions (for example, NGFW or WAF), the list of which is constantly growing. In addition, through integration with the Microsoft Graph Security API, you can connect your own Threat Intelligence feeds to Sentinel, which enriches the ability to analyze incidents in your Azure cloud. It can be argued that Azure Sentinel is the first "native" SIEM to appear from a cloud provider (Splunk or ELK, which can be hosted in a cloud such as AWS, are still not developed by the cloud service providers themselves). Azure Sentinel and Security Center could be called the SOC for the Azure cloud, and you could limit yourself to them (with certain reservations) if you no longer had any infrastructure of your own and had moved all your computing resources to the cloud, and that cloud was Microsoft Azure.

But since Azure's built-in capabilities (even with a Sentinel subscription) are often not enough for IS monitoring and for integrating this process with other sources of security events (both cloud and internal), it becomes necessary to export the collected data to external systems, which may include a SIEM. This is done both through the API and through special extensions, which at the moment are officially available only for the following SIEMs: Splunk (Azure Monitor Add-On for Splunk), IBM QRadar (Microsoft Azure DSM), SumoLogic, ArcSight and ELK. Until recently there were more such SIEMs, but on June 1, 2019, Microsoft stopped supporting the Azure Log Integration Tool (AzLog), which at the dawn of Azure's existence, in the absence of proper standardization of work with logs (Azure Monitor did not even exist yet), made it easy to integrate external SIEMs with the Microsoft cloud. Now the situation has changed, and Microsoft recommends the Azure Event Hubs platform as the main integration tool for the remaining SIEMs. Many have already implemented this integration, but be careful: they may not capture all the Azure logs, only some of them (see the documentation for your SIEM).

Concluding this brief excursion into Azure, I want to give a general recommendation on this cloud service: before you claim anything about the security monitoring functions in Azure, configure them very carefully and test that they work as written in the documentation and as the Microsoft consultants told you (and they may have different views on how well Azure features work). If you have the budget, you can squeeze a lot of useful things out of Azure in terms of information security monitoring. If your resources are limited, then, as in the case of AWS, you will have to rely only on your own efforts and on the raw data that Azure Monitor provides. And remember that many monitoring functions cost money, so it is better to familiarize yourself with the pricing in advance. For example, you can store data for 31 days free of charge with a volume of no more than 5 GB per customer; exceeding these values will require you to pay extra (about 2+ dollars for each additional GB from the customer and $0.1 for storing 1 GB for each additional month). Working with application telemetry and metrics may also require additional financial resources, as may working with alerts and notifications (a certain limit is available for free, which may not be enough for your needs).
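To get a feel for what this pricing means, here is a rough estimate using only the approximate figures quoted above: 5 GB free, about $2 per additional GB, and $0.1 per GB for each additional month of storage. The function is a hypothetical helper, it assumes that every stored GB is charged for each extra month, and real prices depend on region and change over time, so treat the result as an order-of-magnitude check rather than a quote.

```python
def estimate_monthly_cost(ingested_gb, extra_retention_months=0,
                          free_gb=5.0, price_per_gb=2.0,
                          retention_price_per_gb_month=0.1):
    """Rough monthly cost in dollars, using approximate article figures."""
    # Only the volume above the free allowance is billed for ingestion.
    billable_gb = max(0.0, ingested_gb - free_gb)
    ingestion = billable_gb * price_per_gb
    # Assumption: all stored data is charged for each month beyond
    # the free 31-day retention period.
    retention = (ingested_gb * retention_price_per_gb_month
                 * extra_retention_months)
    return round(ingestion + retention, 2)


if __name__ == "__main__":
    # 50 GB of logs per month, kept for two months beyond the free period.
    print(estimate_monthly_cost(50, extra_retention_months=2))
```

For 50 GB per month with two extra months of retention this gives 45 × 2 + 50 × 0.1 × 2 = 100 dollars, which shows how quickly a "free" tier turns into a budget line once real log volumes are involved.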

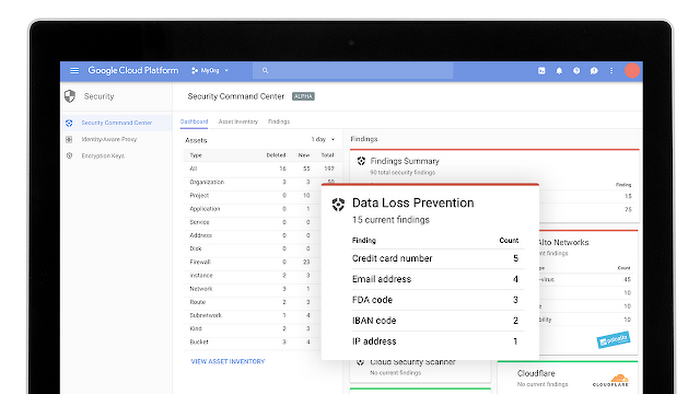

Against the background of AWS and Azure, the Google Cloud Platform looks quite young, but this is partly a good thing. Unlike AWS, which built up its capabilities, including defensive ones, gradually and has had problems with centralization, GCP, like Azure, is much better managed centrally, which reduces the number of errors and the implementation time in the enterprise. From a security perspective, GCP sits, oddly enough, between AWS and Azure. It also has a single event log for the entire organization, but it is incomplete. Some functions are still in beta, but this shortcoming should gradually be eliminated, and GCP will become a more mature platform in terms of information security monitoring.

The main tool for recording events in GCP is Stackdriver Logging (an analogue of Azure Monitor), which allows you to collect events across your entire cloud infrastructure (and from AWS as well). From a security perspective, each organization, project, or folder in GCP has four logs:

Log Example: Access Transparency

These logs can be accessed in several ways (much the same as those discussed earlier for Azure and AWS): through the Log Viewer interface, through the API, through the Google Cloud SDK, or through the Activity page of the project whose events you are interested in. In the same way, they can also be exported to external solutions for additional analysis. The latter is done by exporting logs to BigQuery or Cloud Pub/Sub.

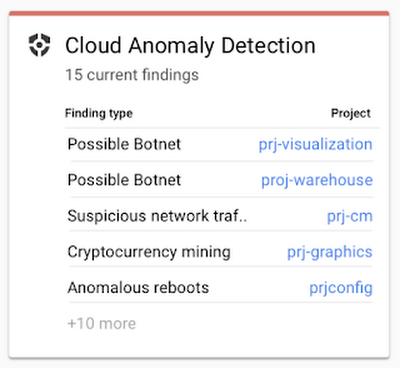

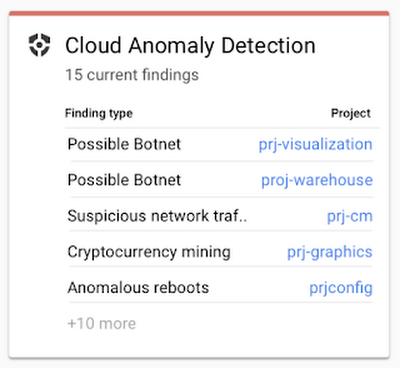

In addition to Stackdriver Logging, the GCP platform also offers Stackdriver Monitoring, which allows you to track key metrics (performance, MTBF, overall status, etc.) of cloud services and applications. Processed and visualized data can make it easier to find problems in your cloud infrastructure, including in the security context. But it should be noted that this functionality is not very rich precisely in the context of information security, since today GCP has no analogue of AWS GuardDuty and cannot single out the bad events among all those recorded (Google has developed Event Threat Detection, but so far it is in beta and it is too early to talk about its usefulness). Stackdriver Monitoring could be used as a system for detecting anomalies, which would then be investigated to find their causes. But given the shortage on the market of personnel qualified in GCP information security, this task currently looks difficult.

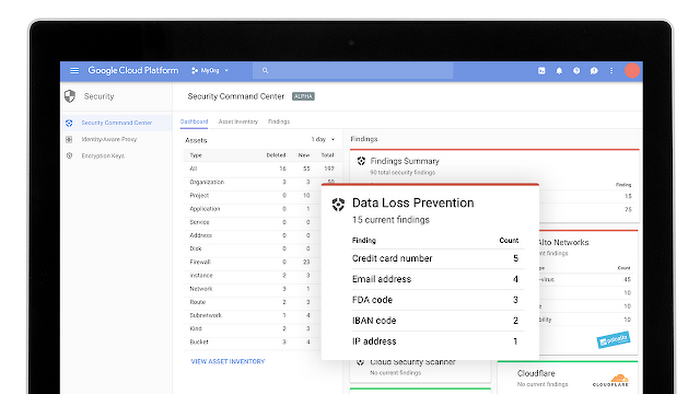

It’s also worthwhile to give a list of some information security modules that can be used within your GCP cloud, and which are similar to what AWS offers:

Many of these modules generate security events that can be sent to BigQuery for analysis or export to other systems, including SIEM. As already mentioned above, GCP is an actively developed platform and now Google is developing a number of new information security modules for its platform. Among them are Event Threat Detection (now available in beta), which scans Stackdriver logs for traces of unauthorized activity (similar to GuardDuty in AWS), or Policy Intelligence (available in alpha), which will allow developing intelligent access policies for GCP resources.

I have given a short overview of the built-in monitoring capabilities of popular cloud platforms. But do you have specialists who are able to work with the raw logs of an IaaS provider (not everyone is ready to buy the advanced features of AWS, Azure or Google)? In addition, many people know the proverb “trust, but verify”, which in the field of security is as true as ever. How much do you trust the built-in capabilities of the cloud provider that send you information security events? How much do they focus on information security?

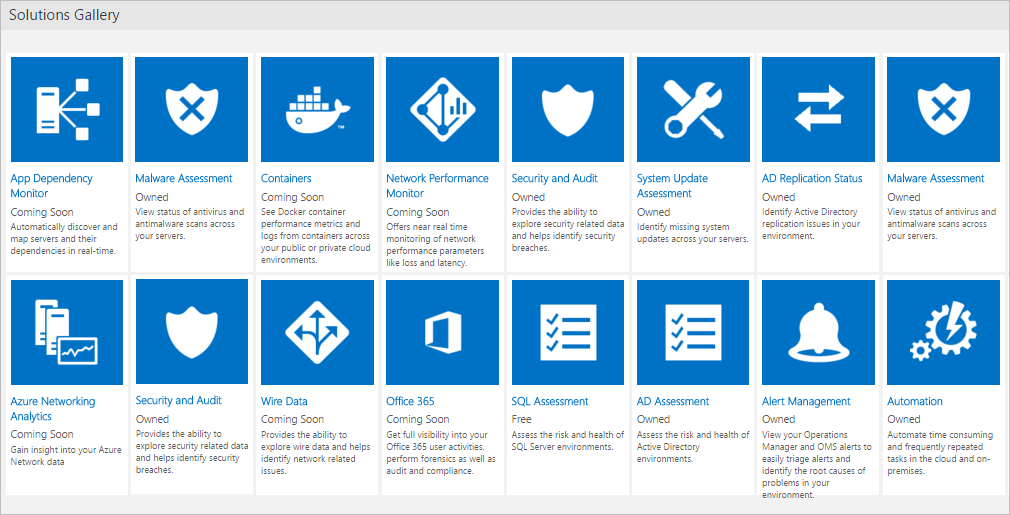

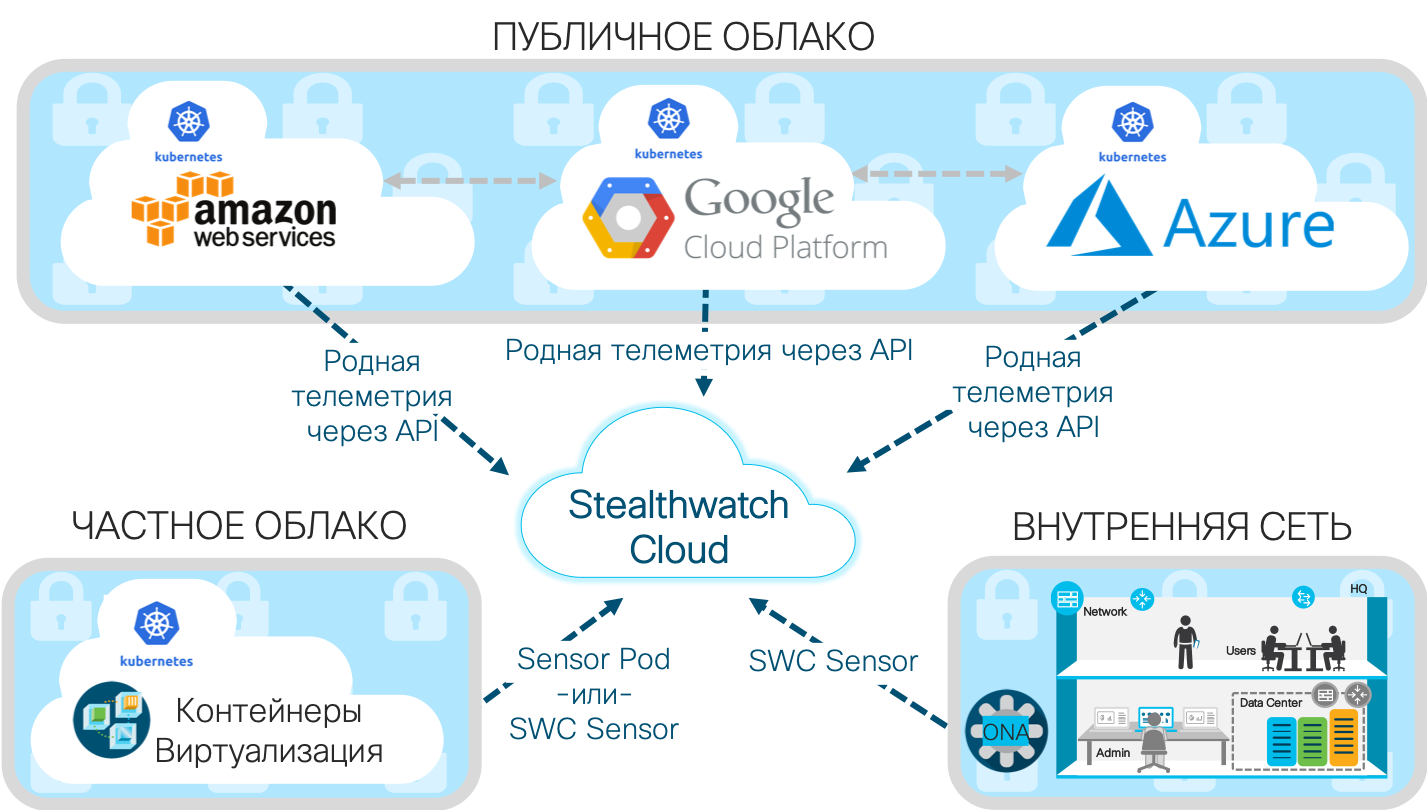

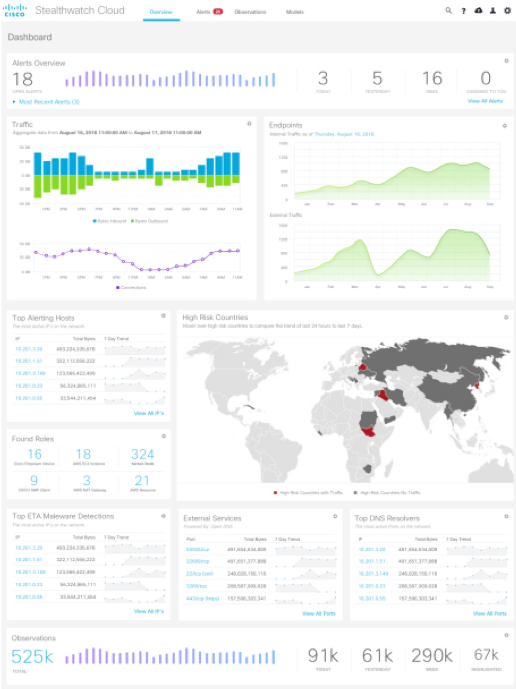

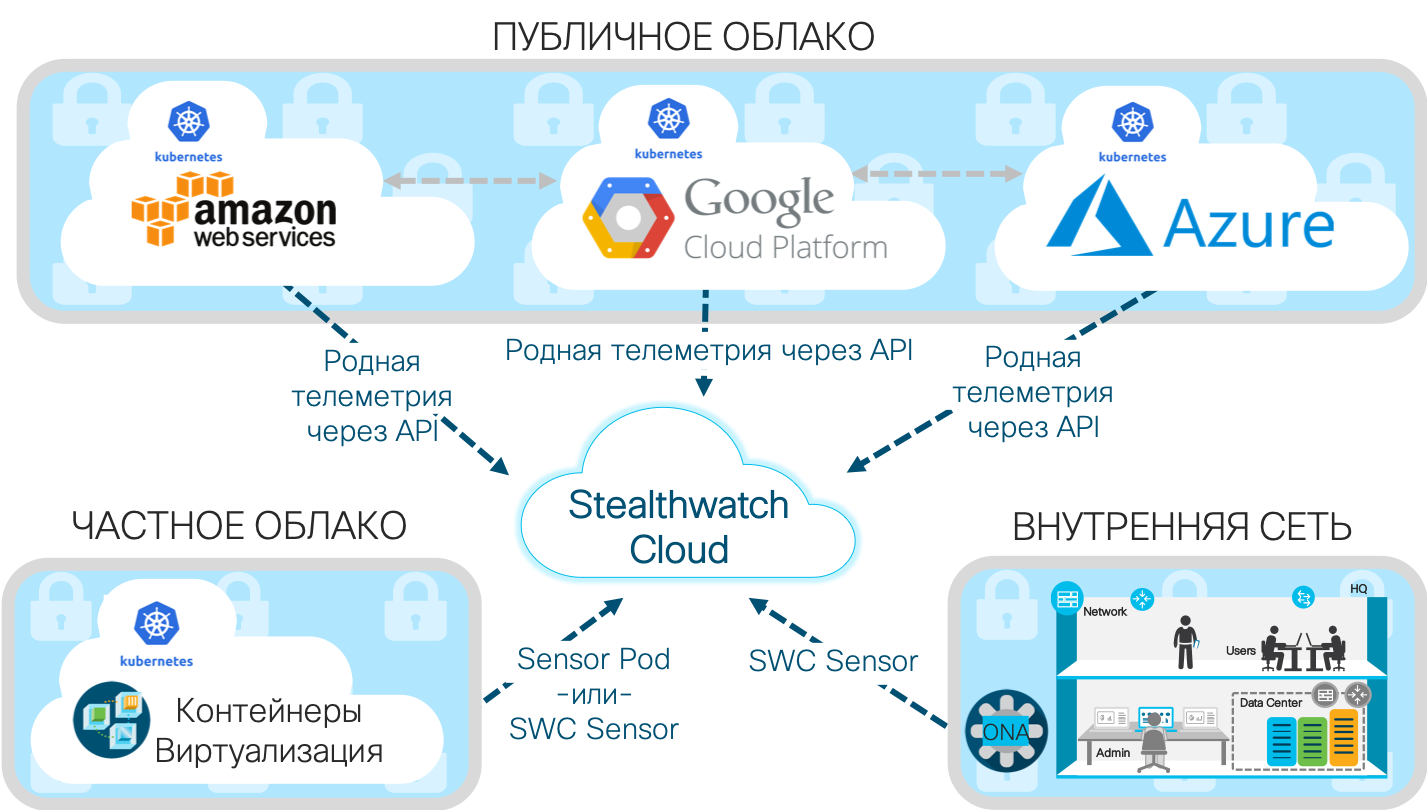

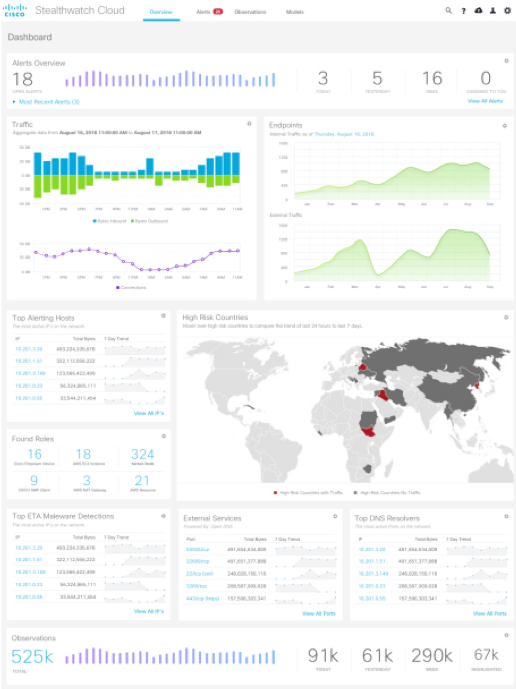

Sometimes it’s worth looking at overlay solutions for monitoring cloud infrastructures that can complement the built-in cloud security, and sometimes such solutions are the only way to get information about the security of your data and applications located in the cloud. In addition, they are simply more convenient, since they take on all the tasks of analyzing the necessary logs generated by different cloud services of different cloud providers. An example of such an overlay solution is Cisco Stealthwatch Cloud, which focuses on a single task: monitoring information security anomalies in cloud environments, including not only Amazon AWS, Microsoft Azure and Google Cloud Platform, but also private clouds.

AWS provides a flexible computing platform, but this flexibility makes it easier for companies to make mistakes that lead to security issues, and the shared security model only contributes to this. Examples include running software with unknown vulnerabilities in the cloud (known ones can be handled by AWS Inspector or GCP Cloud Scanner, for example), weak passwords, incorrect configurations, insiders, and so on. All of this affects the behavior of cloud resources, which can be observed by Cisco Stealthwatch Cloud, a system for information security monitoring and attack detection in public and private clouds.

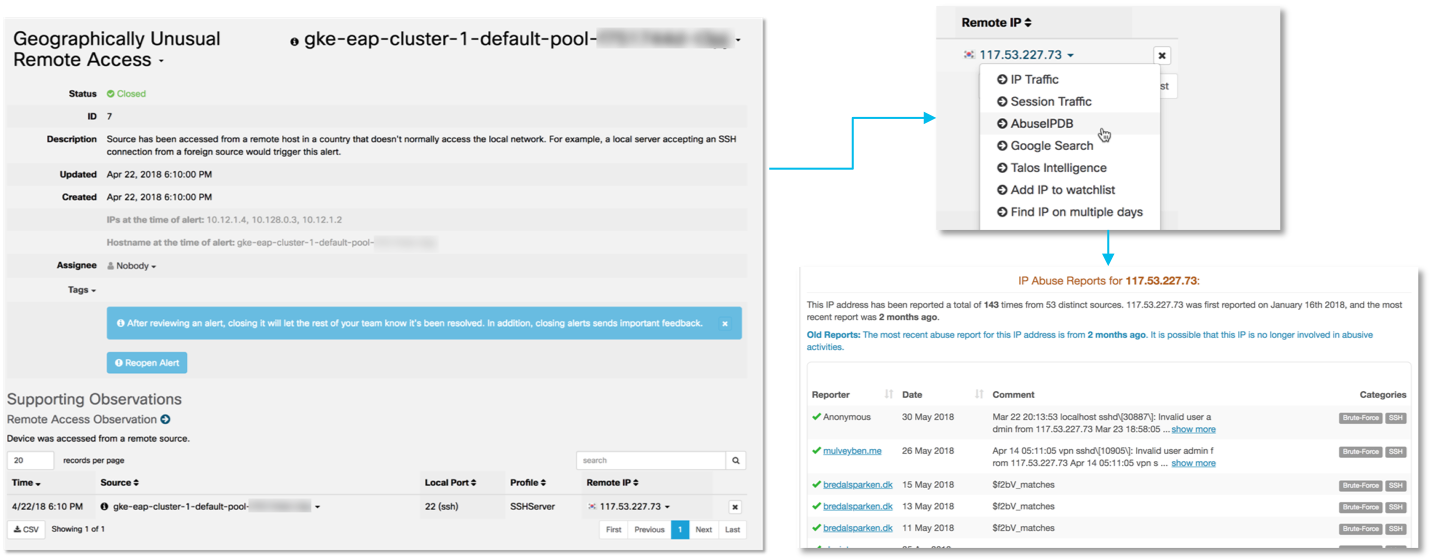

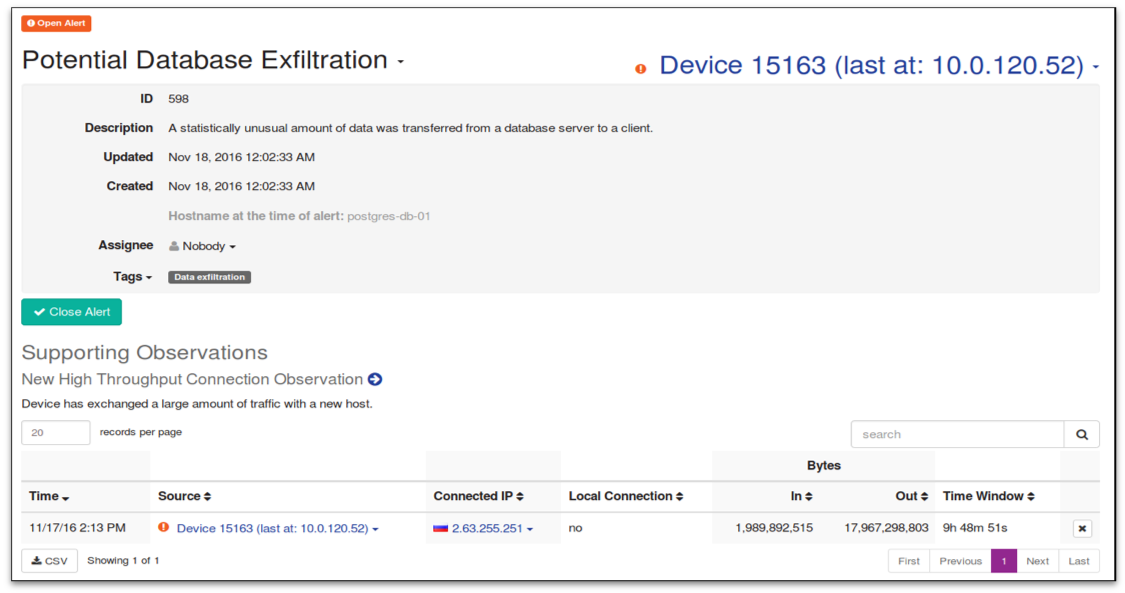

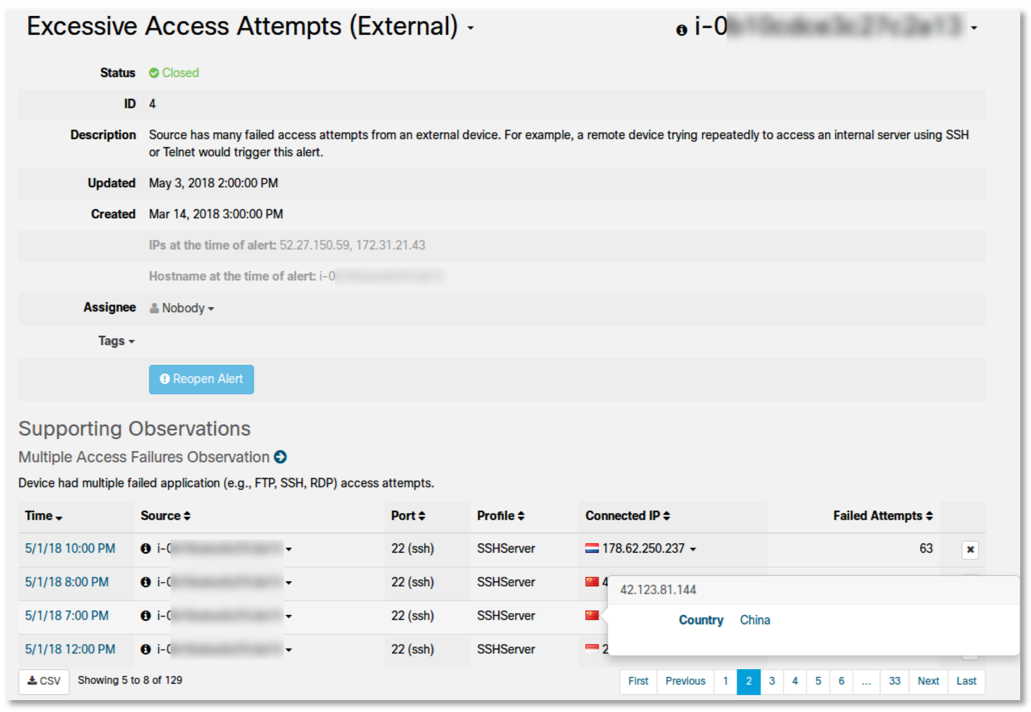

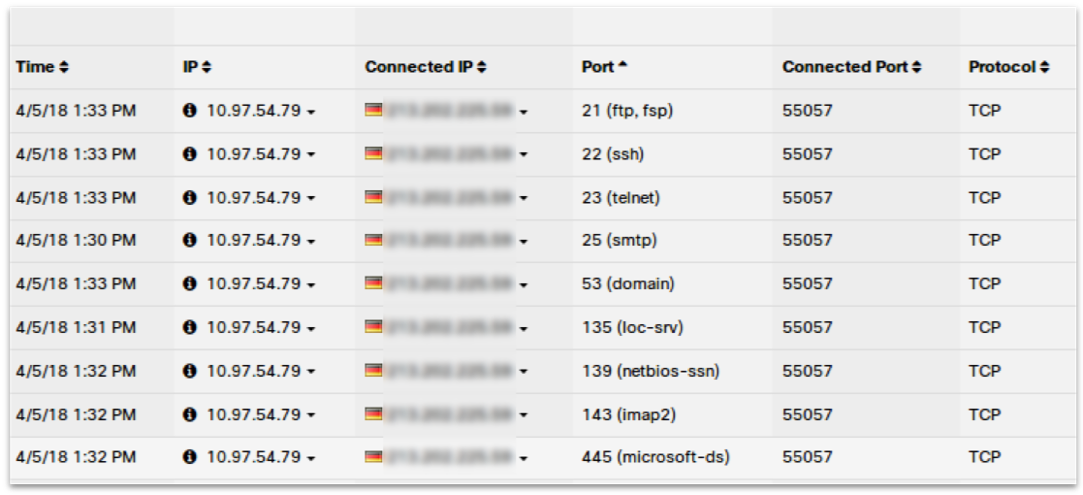

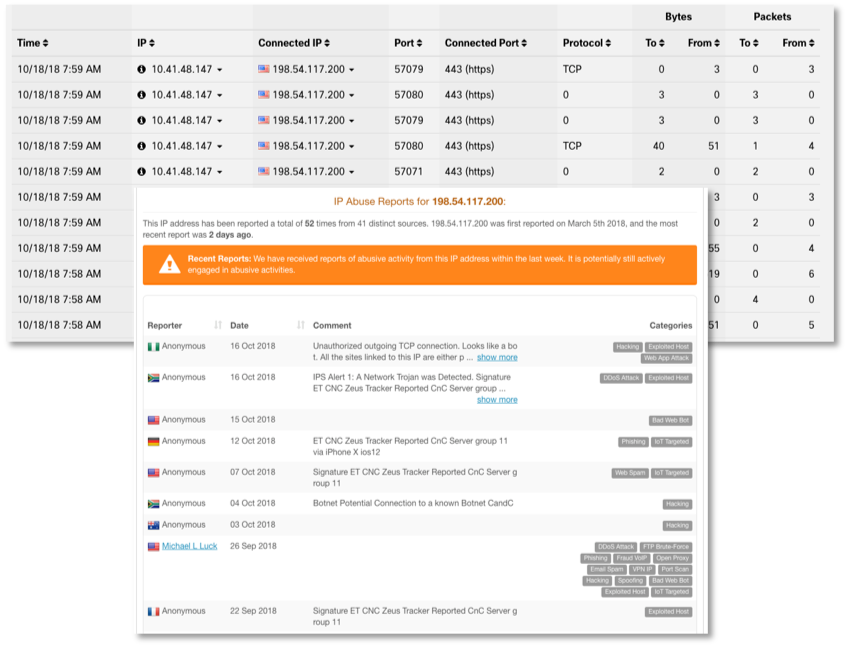

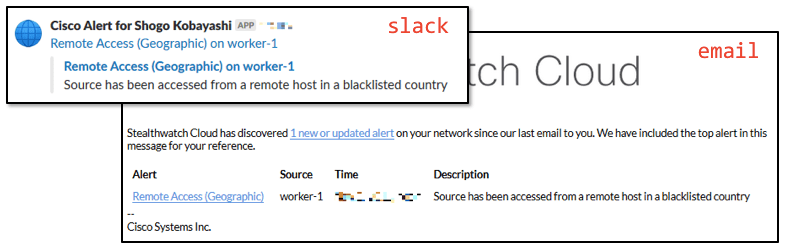

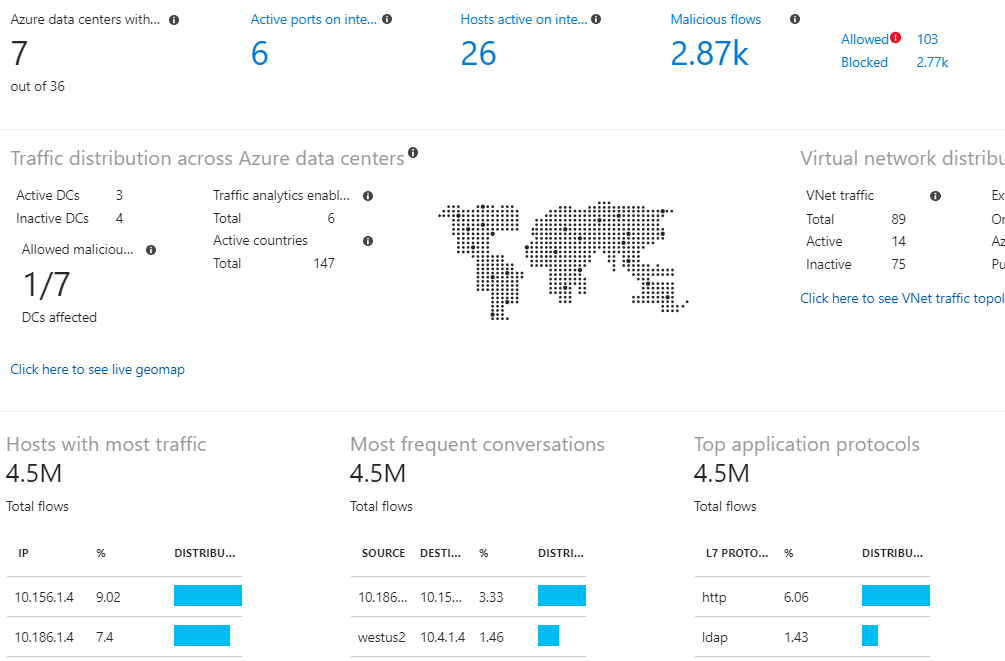

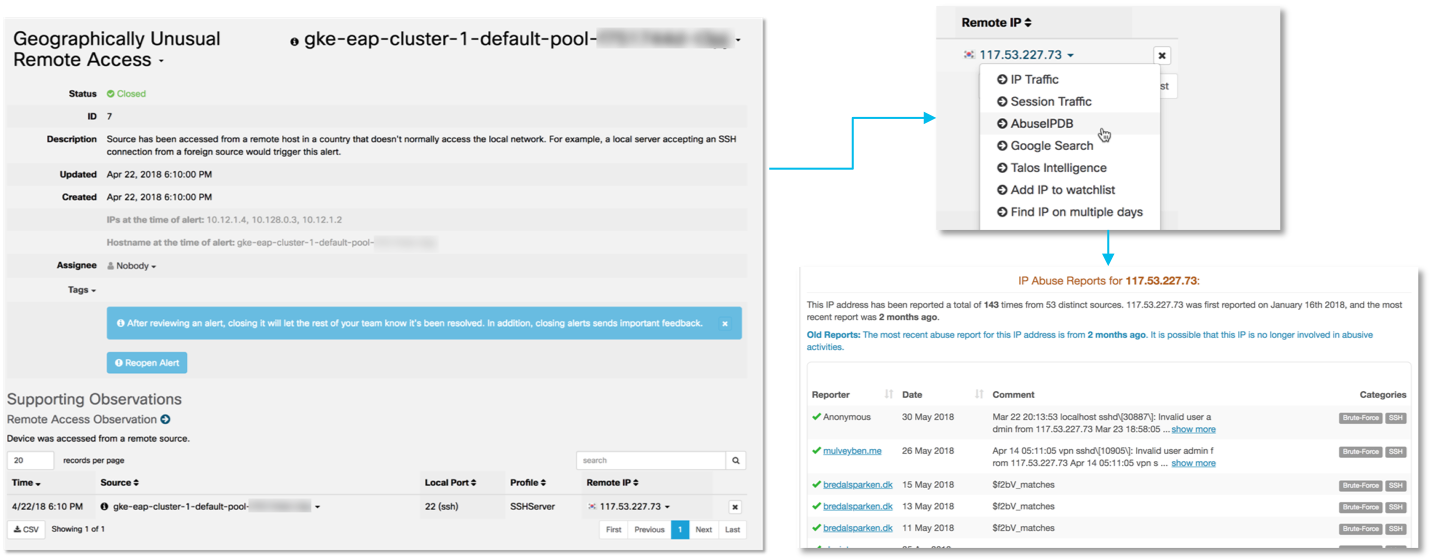

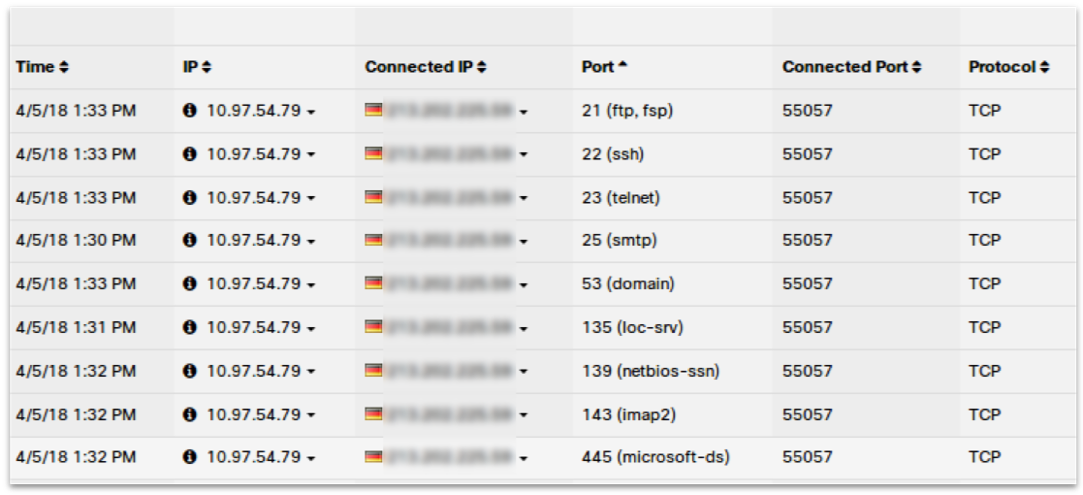

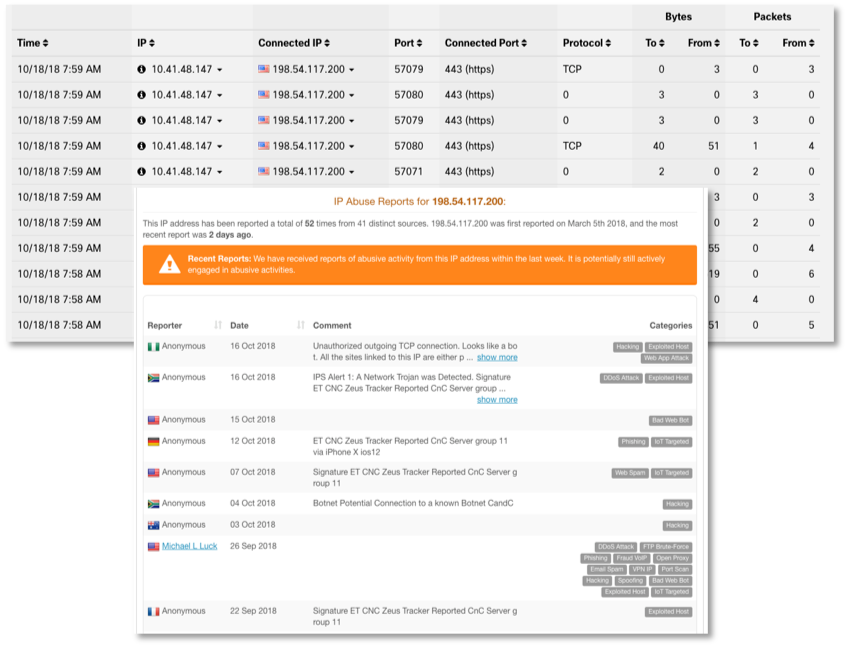

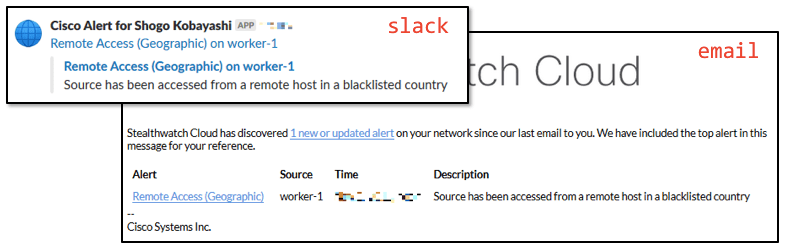

One of the key features of Cisco Stealthwatch Cloud is entity modeling. With its help, you can create a software model (that is, an almost real-time simulation) of each of your cloud resources (it does not matter whether it is in AWS, Azure, GCP or somewhere else). These may include servers and users, as well as resource types specific to your cloud environment, such as security groups and auto-scale groups. These models use structured data streams provided by cloud services as input. For example, for AWS these will be VPC Flow Logs, AWS CloudTrail, AWS CloudWatch, AWS Config, AWS Inspector, AWS Lambda and AWS IAM. Entity modeling automatically detects the role and behavior of any of your resources (in effect, it profiles all cloud activity). Among these roles are an Android or Apple mobile device, a Citrix PVS server, an RDP server, a mail gateway, a VoIP client, a terminal server, a domain controller, etc. It then continuously monitors their behavior to determine when risky or security-threatening behavior occurs. You can identify password guessing, DDoS attacks, data leaks, illegal remote access, malicious code, vulnerability scanning and other threats. For example, here is what detecting a remote access attempt to a Kubernetes cluster via SSH from a country atypical for your organization (South Korea) looks like:

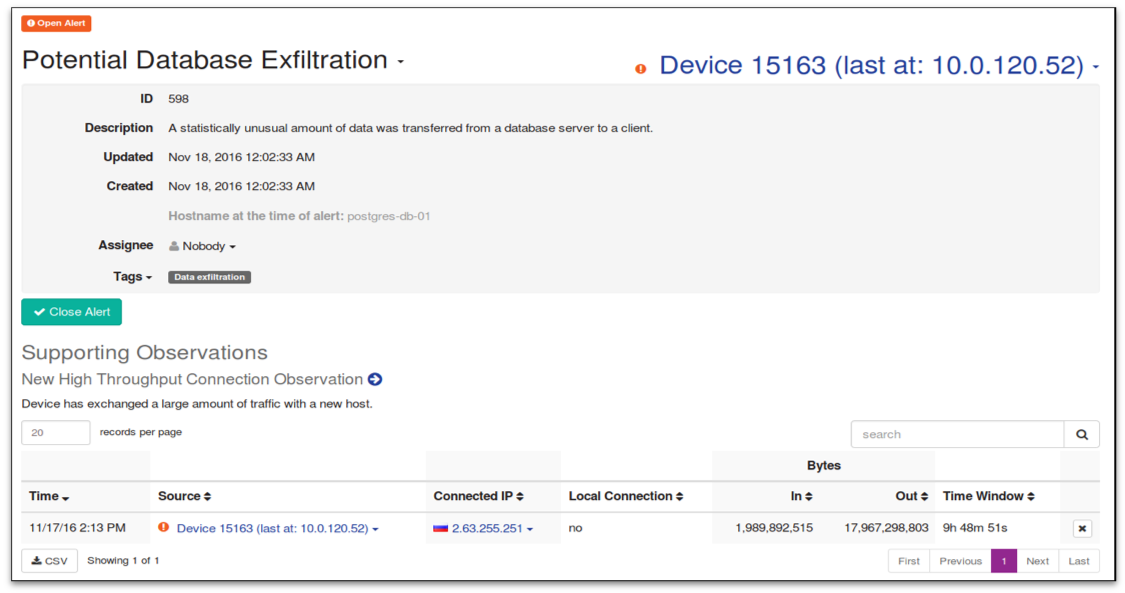

And this is what a suspected leak of information from a Postgres database to a country that has not previously been interacted with looks like:

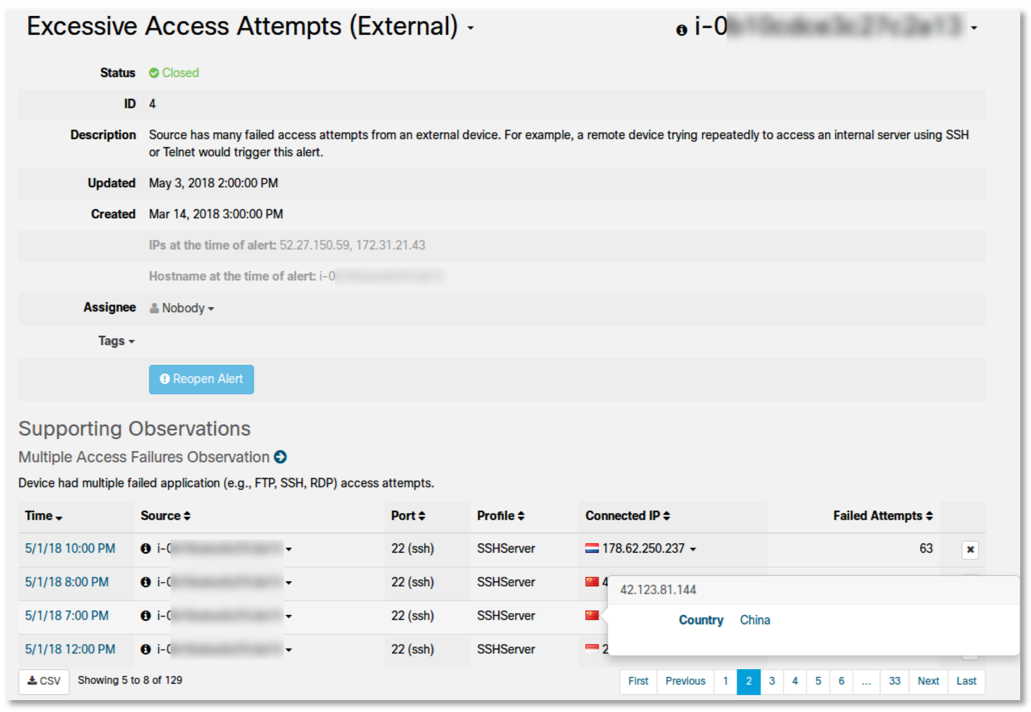

Finally, this is what an excessive number of failed SSH access attempts from China and Indonesia, originating from an external remote device, looks like:

Or suppose that a server instance in a VPC should, according to policy, never be a destination for remote login. Suppose further that a remote login to this server has occurred because of an erroneous change to the firewall rule policy. The entity modeling feature will detect and report this activity (“Unusual Remote Access”) in near real time and point to the specific AWS CloudTrail, Azure Monitor or GCP Stackdriver Logging API call (including username, date and time, among other details) that caused the change to the firewall rule. This information can then be passed to the SIEM for analysis.
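The "no remote login" policy from this example can be illustrated with a few lines of code. This is only a sketch of the idea, not how Stealthwatch Cloud is implemented: the `Flow` record, the set of remote login ports (SSH and RDP) and the host names are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass

# Assumption: remote login means SSH (22) or RDP (3389).
REMOTE_LOGIN_PORTS = {22, 3389}


@dataclass(frozen=True)
class Flow:
    """A simplified network flow record (hypothetical structure)."""
    src: str
    dst: str
    dst_port: int
    accepted: bool


def unusual_remote_access(flows, no_login_hosts):
    """Return accepted remote-login flows to hosts that must never receive them."""
    return [
        f for f in flows
        if f.accepted and f.dst in no_login_hosts and f.dst_port in REMOTE_LOGIN_PORTS
    ]


if __name__ == "__main__":
    flows = [
        Flow("198.51.100.20", "db-1", 22, True),     # violates the policy
        Flow("198.51.100.20", "db-1", 22, False),    # blocked by the firewall
        Flow("10.0.0.5", "bastion", 22, True),       # allowed host
        Flow("10.0.0.5", "db-1", 5432, True),        # not a remote login
    ]
    for f in unusual_remote_access(flows, no_login_hosts={"db-1"}):
        print("Unusual Remote Access:", f)
```

A real system would additionally correlate the finding with the configuration change that opened the port, as described above.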

Similar features are available for any cloud environment supported by Cisco Stealthwatch Cloud:

Entity modeling is a unique form of security automation that can detect a previously unknown problem with your people, processes, or technologies. For example, it allows you to detect, among other things, such security problems as:

A detected information security event can be sent as a ticket to Slack, Cisco Spark or the PagerDuty incident management system, and can also be sent to various SIEMs, including Splunk and ELK. To summarize, if your company uses a multi-cloud strategy and is not limited to a single cloud provider with the information security monitoring capabilities described above, then Cisco Stealthwatch Cloud is a good option for getting a unified set of monitoring capabilities for the leading cloud players: Amazon, Microsoft and Google. The most interesting thing is that if you compare the prices of Stealthwatch Cloud with the advanced security monitoring licenses in AWS, Azure or GCP, it may turn out that the Cisco solution is even cheaper than the built-in capabilities of Amazon, Microsoft and Google. Paradoxical, but true. And the more clouds and cloud capabilities you use, the more obvious the advantage of a consolidated solution will be.

In addition, Stealthwatch Cloud can also monitor private clouds deployed in your organization, for example those based on Kubernetes containers, or by monitoring Netflow streams or network traffic received through mirroring on network equipment (even domestically produced equipment), data from AD or DNS servers, etc. All of this data will be enriched with Threat Intelligence information collected by Cisco Talos, the world's largest non-governmental group of security threat researchers.

This allows you to implement a single monitoring system for both public and hybrid clouds that your company can use. The information collected can then be analyzed using the built-in features of the Stealthwatch Cloud or sent to your SIEM (Splunk, ELK, SumoLogic and several others are supported by default).

This concludes the first part of the article in which I examined the internal and external security monitoring tools of IaaS / PaaS platforms that allow us to quickly detect and respond to incidents in the cloud environments that our enterprise has chosen. In the second part, we will continue the topic and consider monitoring options for SaaS platforms using Salesforce and Dropbox as an example, and also try to summarize and put everything together, creating a unified information security monitoring system for different cloud providers.

There are several typical problems that lead to incidents in cloud environments which information security services respond to too late or do not see at all:

- Security logs do not exist. This is a fairly common situation, especially for newcomers to the cloud market. But it is not worth writing them off right away. Small players, especially domestic ones, are more sensitive to customer requirements and can quickly implement requested functions by changing the approved roadmap for their products. Yes, it will not be an analogue of GuardDuty from Amazon or the Proactive Defense module from Bitrix, but it is at least something.

- The information security service does not know where the logs are stored, or has no access to them. Here it is necessary to enter into negotiations with the cloud service provider - perhaps it will provide such information if it considers the client significant. But in general, it is not very good when access to the logs is provided “by a special decision”.

- It also happens that the cloud provider has logs, but they provide limited monitoring and event logging that is insufficient to detect all incidents. For example, you may be given only the logs of changes on the site or the logs of user authentication attempts, but not other events, such as network traffic, which hides from you a whole layer of events that characterize attempts to break into your cloud infrastructure.

- There are logs, but access to them is difficult to automate, so they are monitored not continuously but on a schedule. And if the logs cannot be downloaded automatically at all, then exporting them in, say, Excel format (as a number of domestic cloud providers do) can make the corporate information security service unwilling to deal with them at all.

- There is no monitoring of logs. This is perhaps the most incomprehensible reason for the occurrence of information security incidents in cloud environments. It seems that there are logs, and you can automate access to them, but no one does it. Why?

Cloud Shared Security Concept

Moving to the clouds is always a search for a balance between the desire to keep control over the infrastructure and the desire to hand it over to the more professional hands of a cloud provider that specializes in maintaining it. In the field of cloud security, this balance also needs to be found. Moreover, depending on the cloud service delivery model used (IaaS, PaaS, SaaS), this balance will be different each time. In any situation, we must remember that today all cloud providers follow the so-called shared responsibility and shared security model. The cloud is responsible for some things, and the client, having placed its data, applications, virtual machines and other resources in the cloud, is responsible for others. It would be foolish to expect that by going into the cloud we will transfer all responsibility to the provider. But building all the security on your own when moving to the cloud is also unwise. A balance is needed, which will depend on many factors: risk management strategies, threat models, the defense mechanisms available from the cloud provider, legislation, etc.

For example, the classification of data hosted in the cloud is always the responsibility of the customer. A cloud provider or an external service provider can only help with tools that mark data in the cloud, identify violations, delete data that violates the law, or mask it using one method or another. On the other hand, physical security is always the responsibility of the cloud provider, which it cannot share with customers. But everything that lies between the data and the physical infrastructure is precisely the subject of this article. For example, cloud availability is the provider’s responsibility, while setting firewall rules or enabling encryption is the client’s responsibility. In this article, we’ll try to look at the information security mechanisms that various popular cloud providers in Russia offer today, what the specifics of their use are, and when to look toward external overlay solutions (for example, Cisco E-mail Security) that expand the cybersecurity capabilities of your cloud. In some cases, especially if you are following a multi-cloud strategy, you have no choice but to use external security monitoring solutions in several cloud environments at once (for example, Cisco CloudLock or Cisco Stealthwatch Cloud). And in some cases, you will find that the cloud provider you have chosen (or that was imposed on you) does not offer any options for monitoring information security at all. This is unpleasant, but it is also valuable to know, as it allows you to adequately assess the level of risk associated with working with this cloud.

Cloud Security Monitoring Life Cycle

To monitor the security of the clouds you use, you have only three options:

- rely on the tools provided by your cloud provider,

- take advantage of third-party solutions that will monitor the IaaS, PaaS or SaaS platforms you are using,

- build your own cloud monitoring infrastructure (IaaS / PaaS platforms only).

Let's see what features each of these options have. But first, we need to understand the general scheme that will be used in monitoring cloud platforms. I would highlight 6 main components of the information security monitoring process in the cloud:

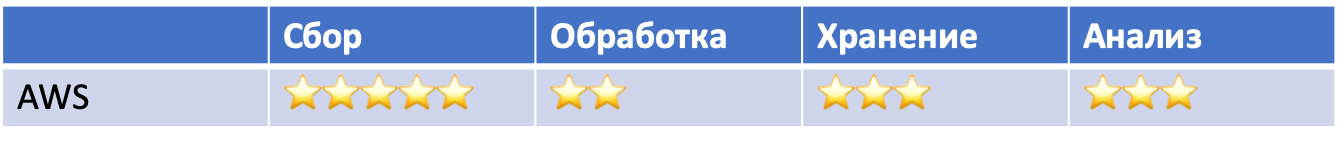

- Infrastructure preparation. Identification of the necessary applications and infrastructure for collecting events that are important for information security in the repository.

- Collection. At this stage, security events are aggregated from various sources for subsequent transmission to processing, storage and analysis.

- Processing. At this stage, the data is converted and enriched to facilitate its subsequent analysis.

- Storage. This component is responsible for the short-term and long-term storage of collected processed and raw data.

- Analysis. At this stage, you have the ability to detect incidents and respond to them automatically or manually.

- Reporting. This stage helps to generate key indicators for interested parties (management, auditors, cloud providers, customers, etc.) that help us make certain decisions, for example, changing the provider or strengthening information security.

Understanding these components will allow you to quickly determine what you can get from your provider and what you will have to do yourself or with the help of external consultants.

Built-in Cloud Services Features

I already wrote above that many cloud services today do not provide any means of monitoring information security. In general, they do not pay much attention to the topic of information security. Take, for example, one of the popular Russian services for sending reports to government agencies via the Internet (I deliberately will not mention its name). The entire security section of this service revolves around the use of certified cryptographic information protection tools. The information security section of another domestic cloud service for electronic document management is far more detailed. It talks about public key certificates, certified cryptography, eliminating web vulnerabilities, protecting against DDoS attacks, using firewalls, backing up, and even conducting regular information security audits. But there is not a word about monitoring, or about the possibility of gaining access to information security events that may be of interest to the customers of this service provider.

In general, from the way a cloud provider describes information security issues on its website and in its documentation, you can understand how seriously it takes this issue. For example, if you read the manuals for My Office products, there is not a word about security at all, and the documentation for the separate product “My Office.KS3”, designed to protect against unauthorized access, is a plain enumeration of the clauses of FSTEC Order No. 17 that “My Office.KS3” complies with, without describing how it complies and, most importantly, how to integrate these mechanisms with corporate information security. Perhaps such documentation exists, but I did not find it in the public domain on the My Office site. Although maybe I just do not have access to this classified information?..

With Bitrix, the situation is an order of magnitude better. The documentation describes the formats of the event logs and, interestingly, the intrusion log, which contains events related to potential threats to the cloud platform. From there you can get the IP address, user or guest name, event source, time, User Agent, type of event, etc. True, you can work with these events only from the control panel of the cloud itself or by exporting the data in MS Excel format. It is currently difficult to automate work with Bitrix logs, and you will have to do part of the work manually (export the report and load it into your SIEM). But if you recall that until relatively recently even this was not possible, it is great progress. At the same time, I want to note that many foreign cloud providers offer similar functionality “for beginners”: either look through the logs by eye in the control panel, or export the data yourself (although most export data in .csv format, not Excel).

If you set aside the option with no logs at all, cloud providers usually offer you three options for monitoring security events: dashboards, data export, and access via an API. The first seems to solve many problems for you, but this is not entirely true: if there are several logs, you have to switch between the screens that display them, losing the big picture. In addition, the cloud provider is unlikely to give you the ability to correlate security events and analyze them from a security point of view in general (usually you are dealing with raw data that you need to make sense of yourself). There are exceptions, and we will talk about them further. Finally, it is worth asking which events your cloud provider records, in what format, and how well they match your information security monitoring process. Take, for example, the identification and authentication of users and guests. Bitrix allows you to record the date and time of this event, the name of the user or guest (if the Web Analytics module is present), the object that was accessed, and other elements typical of a web site. But corporate information security services may need to know whether a user logged into the cloud from a trusted device (in a corporate network, for example, this task is handled by Cisco ISE). And what about such a simple feature as geo-IP, which helps determine whether a cloud service user account has been stolen? Even if the cloud provider offers it, this is not enough. Cisco CloudLock, for instance, does not just analyze geolocation, but uses machine learning, analyzes historical data for each user, and tracks various anomalies in identification and authentication attempts. Only MS Azure has similar functionality (with the corresponding subscription).
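The idea behind geo-IP checks against a user's history can be shown with a deliberately naive sketch. It is far simpler than the machine learning described above and only flags a login from a country that a user has never logged in from before. The function name and the input format (already geolocated `(user, country)` pairs in chronological order) are assumptions made for illustration.

```python
from collections import defaultdict


def find_new_country_logins(events):
    """Flag logins from a country not previously seen for that user.

    `events` is an iterable of (user, country) pairs in chronological order.
    The very first login of each user only establishes the baseline.
    """
    seen = defaultdict(set)
    alerts = []
    for user, country in events:
        if seen[user] and country not in seen[user]:
            alerts.append((user, country))
        seen[user].add(country)
    return alerts


if __name__ == "__main__":
    history = [
        ("alice", "RU"), ("alice", "RU"), ("bob", "DE"),
        ("alice", "KR"),   # new country for alice
        ("bob", "DE"),
    ]
    print(find_new_country_logins(history))
```

A check like this produces false positives for travelling employees and VPN users, which is one reason commercial tools rely on richer per-user history.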

There is one more difficulty: since for many cloud providers information security monitoring is a new topic that they are only starting to deal with, they are constantly changing something in their solutions. Today they have one version of the API, tomorrow another, and the day after tomorrow a third. You must be prepared for this. The same goes for functionality, which can change and which your information security monitoring system must take into account. For example, Amazon initially had separate cloud event monitoring services: AWS CloudTrail and AWS CloudWatch. Then a separate information security event monitoring service appeared, AWS GuardDuty. After some time, Amazon launched a new management system, Amazon Security Hub, which includes analysis of the data received from GuardDuty, Amazon Inspector, Amazon Macie and several others. Another example is AzLog, the tool for integrating Azure logs with SIEMs. Many SIEM vendors actively used it until, in 2018, Microsoft announced the termination of its development and support, which confronted many customers who used this tool with a problem (we will talk later about how it was resolved).

Therefore, keep a close eye on all the monitoring functions that your cloud provider offers you. Or trust external solution providers to act as intermediaries between your SOC and the cloud you want to monitor. Yes, it will be more expensive (although not always), but on the other hand, you will shift all responsibility onto someone else's shoulders. Or not all of it?.. Recall the concept of shared security and you will understand that we cannot shift anything: we will have to figure out for ourselves how different cloud providers enable information security monitoring of your data, applications, virtual machines and other resources located in the cloud. We will start with what Amazon offers in this area.

Example: Security Monitoring in AWS-based IaaS

Yes, yes, I understand that Amazon is not the best example in view of the fact that it is an American service and can be blocked as part of the fight against extremism and the dissemination of information prohibited in Russia. But in this publication, I would just like to show how different cloud platforms differ in information security capabilities and what you should pay attention to when transferring your key processes to the clouds from a security point of view. Well, if some of the Russian developers of cloud solutions get something useful for themselves, then this will be excellent.

First I must say that Amazon is not an impregnable fortress. Various incidents regularly happen to its clients. For example, the names, addresses, birth dates, and phone numbers of 198 million voters were stolen from Deep Root Analytics, and 14 million Verizon subscriber records were stolen from the Israeli company Nice Systems. At the same time, the built-in capabilities of AWS allow you to detect a wide range of incidents. For example:

- impact on infrastructure (DDoS)

- node compromise (command injection)

- account compromise and unauthorized access

- misconfiguration and vulnerabilities

- unprotected interfaces and APIs.

This discrepancy is due to the fact that, as we found out above, the customer is responsible for the security of customer data. If the customer did not bother to enable the protective mechanisms and did not turn on the monitoring tools, then it will learn about the incident only from the media or from its own clients.

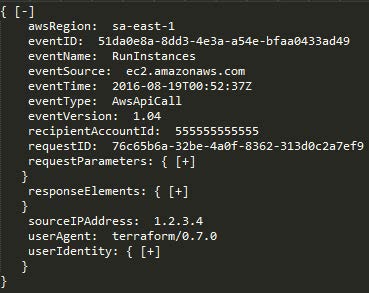

To detect incidents, you can use a wide range of various monitoring services developed by Amazon (although they are often complemented by external tools, such as osquery). So, in AWS, all user actions are tracked, regardless of how they are carried out - through the management console, command line, SDK or other AWS services. All records of the actions of each AWS account (including username, action, service, activity parameters and its result) and API use are available through AWS CloudTrail. You can view these events (for example, logging into the AWS IAM console) from the CloudTrail console, analyze them using Amazon Athena, or “give” them to external solutions, such as Splunk, AlienVault, etc. The AWS CloudTrail logs themselves are placed in your AWS S3 bucket.
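As an illustration of working with these records outside the console, below is a minimal sketch that counts failed management console logins per source address in a CloudTrail log file. It relies on the documented layout of CloudTrail files (a JSON object with a `Records` array whose entries carry `eventName`, `sourceIPAddress` and `responseElements`); the function name and the sample data are made up, and downloading the file from your S3 bucket is left out.

```python
import json
from collections import Counter


def failed_console_logins(log_text: str) -> Counter:
    """Count failed ConsoleLogin events per source IP in one CloudTrail log file."""
    records = json.loads(log_text).get("Records", [])
    failures = Counter()
    for record in records:
        if record.get("eventName") != "ConsoleLogin":
            continue
        # responseElements may be null for some events, hence the "or {}".
        outcome = (record.get("responseElements") or {}).get("ConsoleLogin")
        if outcome == "Failure":
            failures[record.get("sourceIPAddress", "unknown")] += 1
    return failures


if __name__ == "__main__":
    # Illustrative data only; addresses come from documentation ranges.
    sample = json.dumps({"Records": [
        {"eventName": "ConsoleLogin", "sourceIPAddress": "203.0.113.7",
         "responseElements": {"ConsoleLogin": "Failure"}},
        {"eventName": "ConsoleLogin", "sourceIPAddress": "203.0.113.7",
         "responseElements": {"ConsoleLogin": "Failure"}},
        {"eventName": "ConsoleLogin", "sourceIPAddress": "198.51.100.4",
         "responseElements": {"ConsoleLogin": "Success"}},
        {"eventName": "RunInstances", "sourceIPAddress": "198.51.100.4",
         "responseElements": None},
    ]})
    print(failed_console_logins(sample))
```

The same loop can be extended to other event names once you decide which API calls matter for your monitoring process.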

Two other AWS services provide further important monitoring capabilities. Firstly, Amazon CloudWatch is an AWS resource and application monitoring service that, among other things, can detect various anomalies in your cloud. All AWS built-in services, such as Amazon Elastic Compute Cloud (servers), Amazon Relational Database Service (databases), Amazon Elastic MapReduce (data analysis) and 30 other Amazon services, use Amazon CloudWatch to store their logs. Developers can use the open Amazon CloudWatch API to add log monitoring functionality to custom applications and services, which expands the range of events analyzed in the context of information security.

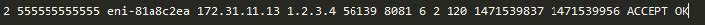

Secondly, the VPC Flow Logs service allows you to analyze the network traffic sent or received by your AWS servers (externally or internally), as well as between microservices. When any of your AWS VPC resources interacts with the network, VPC Flow Logs records information about the network traffic, including the source and destination network interfaces, as well as the IP addresses, ports, protocol, number of bytes, and number of packets observed. Those with experience in local network security will recognize this as an analogue of the NetFlow flows that can be generated by switches, routers, and enterprise-level firewalls. These logs are important for information security monitoring because, unlike user and application activity events, they also let you keep track of network interactions in the AWS virtual private cloud environment.
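To show what such a record looks like in practice, here is a small parsing sketch. It assumes the default (version 2) VPC Flow Logs format, in which each record is a space-separated line with fourteen fields; custom formats can contain other fields in another order, so treat the field list as an assumption about your configuration. The helper name is hypothetical.

```python
# Field order of the default (version 2) flow log record format.
FIELDS = (
    "version", "account_id", "interface_id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log_status",
)
NUMERIC_FIELDS = ("srcport", "dstport", "protocol", "packets", "bytes", "start", "end")


def parse_flow_record(line: str) -> dict:
    """Parse one default-format VPC Flow Logs line into a dictionary."""
    parts = line.split()
    if len(parts) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(parts)}")
    record = dict(zip(FIELDS, parts))
    for name in NUMERIC_FIELDS:
        # Records with NODATA or SKIPDATA status carry "-" instead of values.
        if record[name] != "-":
            record[name] = int(record[name])
    return record


if __name__ == "__main__":
    line = ("2 123456789010 eni-1235b8ca123456789 172.31.16.139 172.31.16.21 "
            "20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK")
    rec = parse_flow_record(line)
    print(rec["srcaddr"], "->", rec["dstaddr"], "port", rec["dstport"], rec["action"])
```

Once records are parsed this way, they can be fed into the same kind of analysis you would apply to NetFlow data.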

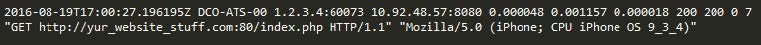

Thus, these three AWS services — AWS CloudTrail, Amazon CloudWatch, and VPC Flow Logs — together provide a reasonably effective view of your account usage, user behavior, infrastructure management, application and service activity, and network activity. For example, they can be used to detect the following anomalies:

- Attempts to scan the site, search for backdoors, search for vulnerabilities through bursts of “404 errors”.

- Injection attacks (such as SQL injection) through bursts of “500 errors”.

- Use of known tools such as sqlmap, nikto, w3af, nmap, etc. through analysis of the User Agent field.
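The three anomalies listed above can be sketched as a simple analysis of web access records. This is an illustration under stated assumptions rather than a description of what AWS does internally: the input is a list of already parsed requests with `ip`, `status` and `user_agent` keys, and the threshold of 20 errors per source is an arbitrary value that you would tune for your own traffic.

```python
from collections import Counter

# Substrings that the listed tools typically leave in the User Agent field.
SCANNER_MARKERS = ("sqlmap", "nikto", "w3af", "nmap")


def detect_anomalies(requests, threshold=20):
    """Return a set of (ip, finding) pairs for the three anomaly types."""
    not_found = Counter()
    server_errors = Counter()
    findings = set()
    for req in requests:
        user_agent = req.get("user_agent", "").lower()
        if any(marker in user_agent for marker in SCANNER_MARKERS):
            findings.add((req["ip"], "scanner_user_agent"))
        if req["status"] == 404:
            not_found[req["ip"]] += 1
        elif req["status"] == 500:
            server_errors[req["ip"]] += 1
    findings |= {(ip, "404_burst") for ip, n in not_found.items() if n >= threshold}
    findings |= {(ip, "500_burst") for ip, n in server_errors.items() if n >= threshold}
    return findings


if __name__ == "__main__":
    traffic = (
        [{"ip": "203.0.113.9", "status": 404, "user_agent": "Mozilla/5.0"}] * 25
        + [{"ip": "198.51.100.3", "status": 200, "user_agent": "sqlmap/1.3"}]
        + [{"ip": "192.0.2.1", "status": 200, "user_agent": "Mozilla/5.0"}]
    )
    print(sorted(detect_anomalies(traffic)))
```

In production you would count errors within a time window rather than over the whole log, but the principle stays the same.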

Amazon Web Services has also developed other cybersecurity services that can solve many other tasks. For example, AWS has a built-in service for auditing policies and configurations, AWS Config. This service provides continuous auditing of your AWS resources and their configurations. Consider a simple example: suppose you want to make sure that user passwords are disabled on all your servers and that access is possible only on the basis of certificates. AWS Config makes it easy to verify this for all your servers. There are other policies that can be applied to your cloud servers: “No server can use port 22”, “Only administrators can change the firewall rules” or “Only user Ivashko can create new user accounts, and he can do it only on Tuesdays”. In the summer of 2016, AWS Config was expanded to automate the detection of violations of the policies you have developed. AWS Config Rules are, in essence, continuous configuration queries against the Amazon services you use, which generate events when the relevant policies are violated. For example, instead of periodically running AWS Config queries to verify that all virtual server drives are encrypted, AWS Config Rules can be used to constantly check server drives for this condition. And, most importantly in the context of this publication, any violations generate events that can be analyzed by your information security service.
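The principle of "a rule is a continuously evaluated query that emits an event on violation" can be shown with a toy evaluator. This is not the AWS Config API: the resource dictionaries, rule names and predicates below are hypothetical and only mirror two of the policies mentioned above (encrypted drives and no use of port 22).

```python
# Each rule maps a name to a predicate that returns True when a resource complies.
RULES = {
    "volumes-must-be-encrypted":
        lambda r: r["type"] != "volume" or r.get("encrypted") is True,
    "no-open-port-22":
        lambda r: r["type"] != "security_group" or 22 not in r.get("open_ports", ()),
}


def evaluate(resources, rules=RULES):
    """Return one violation event per (resource, rule) pair that fails."""
    return [
        {"rule": name, "resource": res["id"]}
        for res in resources
        for name, complies in rules.items()
        if not complies(res)
    ]


if __name__ == "__main__":
    inventory = [
        {"id": "vol-1", "type": "volume", "encrypted": True},
        {"id": "vol-2", "type": "volume", "encrypted": False},
        {"id": "sg-1", "type": "security_group", "open_ports": [22, 443]},
    ]
    for event in evaluate(inventory):
        print(event)
```

Running such an evaluation on every configuration change, rather than on a schedule, is what turns a periodic audit into a source of security events.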

AWS also has its equivalents to traditional corporate information security solutions that also generate security events that you can and should analyze:

- Intrusion Detection - AWS GuardDuty

- Leakage Control - AWS Macie

- EDR (although talking about endpoints in the cloud is a bit weird) - AWS CloudWatch + open source osquery or GRR solutions

- Netflow Analysis - AWS CloudWatch + AWS VPC Flow

- DNS analysis - AWS CloudWatch + AWS Route53

- AD - AWS Directory Service

- Account Management - AWS IAM

- SSO - AWS SSO

- security analysis - AWS Inspector

- configuration management - AWS Config

- WAF - AWS WAF.

I will not go into detail on every Amazon service that may be useful for information security. The main thing to understand is that all of them can generate events that we can and should analyze from a security standpoint, using both Amazon’s built-in capabilities and external solutions such as a SIEM, which can pull security events into your monitoring center and analyze them there alongside events from other cloud services, internal infrastructure, the perimeter or mobile devices.

In any case, it all starts with the data sources that supply you with information security events. Such sources include, but are not limited to:

- CloudTrail - API Usage and User Actions

- Trusted Advisor - Security Check for Best Practices

- Config - inventory and configuration of accounts and service settings

- VPC Flow Logs - Connections with Virtual Interfaces

- IAM - Identity and Authentication Service

- ELB Access Logs - Load Balancer

- Inspector - application vulnerabilities

- S3 - file storage

- CloudWatch - Application Activity

- SNS - notification service.

Although Amazon offers such a range of event sources and tools for generating them, it is very limited in its ability to analyze the collected data in the context of information security. You will have to study the available logs yourself, looking for the relevant indicators of compromise. AWS Security Hub, which Amazon recently launched, aims to solve this problem by becoming a sort of cloud-based SIEM for AWS. But so far it is only at the beginning of its journey and is limited both by the number of sources it works with and by other restrictions imposed by the architecture and subscriptions of Amazon itself.
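As an illustration of what studying the logs yourself can look like, here is a small sketch that scans a CloudTrail log file for a few simple indicators. The choice of indicators and the function name `find_suspicious_events` are my own assumptions for the example, not a recommended detection set. The field names (`Records`, `eventName`, `userIdentity`, `responseElements`, `additionalEventData`) follow the CloudTrail record format as I understand it, so verify them against real logs from your account.

```python
import json

# Management API calls that alter the audit trail itself (illustrative selection).
TAMPERING_EVENTS = {"StopLogging", "DeleteTrail", "UpdateTrail"}


def find_suspicious_events(log_file_text: str) -> list[dict]:
    """Return a short finding for every record that matches a simple indicator."""
    findings = []
    for record in json.loads(log_file_text).get("Records", []):
        name = record.get("eventName")
        reason = None
        if name in TAMPERING_EVENTS:
            reason = "audit trail tampering"
        elif name == "ConsoleLogin":
            # Both fields may be absent or null, hence the "or {}" fallback.
            outcome = (record.get("responseElements") or {}).get("ConsoleLogin")
            mfa_used = (record.get("additionalEventData") or {}).get("MFAUsed")
            if outcome == "Failure":
                reason = "failed console login"
            elif mfa_used == "No":
                reason = "console login without MFA"
        if reason:
            findings.append({
                "reason": reason,
                "event": name,
                "time": record.get("eventTime"),
                "source_ip": record.get("sourceIPAddress"),
                "who": (record.get("userIdentity") or {}).get("arn", "unknown"),
            })
    return findings
```

In practice such checks live in a SIEM as correlation rules, but the logic is the same: you decide what counts as an indicator and look for it in the raw records.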

Example: Monitoring IS in Azure-based IaaS

I don’t want to enter into a long debate about which of the three cloud providers (Amazon, Microsoft or Google) is better (especially since each has its own specifics and suits its own tasks); let's focus instead on the security monitoring capabilities these players provide. I must admit that Amazon AWS was one of the first in this segment and has therefore advanced the farthest in terms of its security functions (although many admit that they are difficult to use). But this does not mean that we will ignore the opportunities that Microsoft and Google offer us.

Microsoft products have always been distinguished by their "openness", and in Azure the situation is similar. For example, while AWS and GCP always proceed from the principle “everything that is not allowed is prohibited”, Azure takes the exact opposite approach. For instance, when you create a virtual network in the cloud and a virtual machine in it, all ports and protocols are open and enabled by default. Therefore, you will have to spend a little more effort on the initial setup of the access control system in the Microsoft cloud. This also imposes stricter requirements on you in terms of monitoring activity in the Azure cloud.

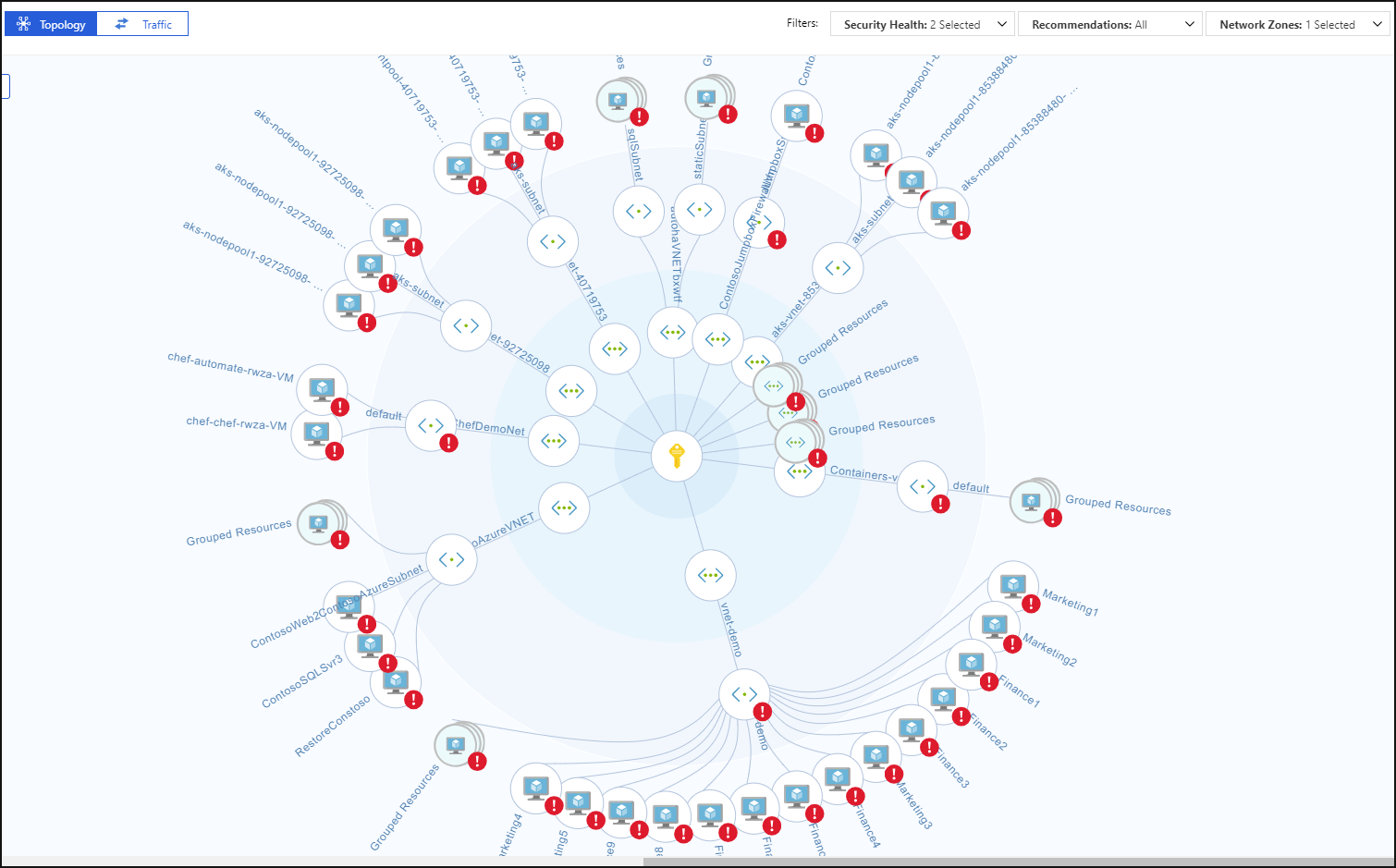

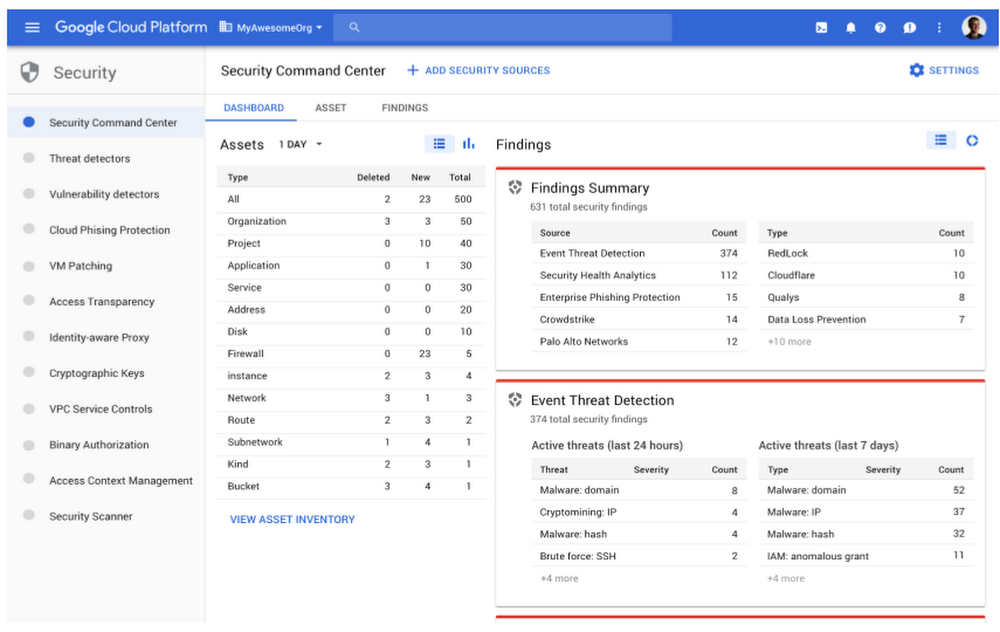

AWS has a peculiarity: when you monitor virtual resources located in different regions, it is difficult to combine all the events and analyze them in one place. To get around this you have to resort to various tricks, such as writing custom code for AWS Lambda that carries events between regions. Azure has no such problem: its Activity Log mechanism tracks all activity across the entire organization without restrictions. The same applies to AWS Security Hub, which Amazon recently developed to consolidate many security functions in a single security center, but only within one region (which, however, is not relevant for Russia). Azure has its own Security Center, which is not bound by regional restrictions and provides access to all the security features of the cloud platform. Moreover, it can give different local teams their own set of protective capabilities, including the security events they manage. AWS Security Hub is still striving to become like Azure Security Center. But there is a fly in the ointment: you can get from Azure much of what was described earlier for AWS, but this is convenient only for Azure AD, Azure Monitor and Azure Security Center. All other Azure protection mechanisms, including security event analysis, are not yet managed in the most convenient way. Part of the problem is solved by the API that runs through all Microsoft Azure services, but using it requires additional effort to integrate your cloud with your SOC, as well as qualified specialists (as, in fact, with any other SIEM that works with cloud APIs). Some SIEMs, which will be discussed later, already support Azure and can automate the task of monitoring it, but they have their own difficulties: not all of them can collect all the logs that Azure has.

Event collection and monitoring in Azure is provided by the Azure Monitor service, which is the main tool for collecting, storing and analyzing data in the Microsoft cloud and its resources: Git repositories, containers, virtual machines, applications, and so on. All data collected by Azure Monitor falls into two categories: metrics, which are collected in real time and describe key performance indicators of the Azure cloud, and logs, which contain data organized into records characterizing particular aspects of the activity of Azure resources and services. In addition, using the Data Collector API, Azure Monitor can collect data from any REST source, so you can build your own monitoring scenarios.
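To give a feel for what sending your own events through the Data Collector API involves, here is a sketch that only prepares the signed request and does not send anything. The signing scheme (HMAC-SHA256 over the method, body length, content type, date header and resource path, using the base64-decoded workspace key), the endpoint host and the `api-version` value reflect Microsoft's published samples as I recall them, so treat them as assumptions and check the current documentation before relying on them. The function names, the workspace identifier and the log type in the usage note are hypothetical.

```python
import base64
import hashlib
import hmac
import json
from datetime import datetime, timezone
from email.utils import format_datetime


def build_signature(workspace_id: str, shared_key: str, date: str, content_length: int) -> str:
    """Build the Authorization header value for one POST to /api/logs."""
    string_to_sign = (
        f"POST\n{content_length}\napplication/json\nx-ms-date:{date}\n/api/logs"
    )
    key = base64.b64decode(shared_key)  # the workspace key is base64 text
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return f"SharedKey {workspace_id}:{base64.b64encode(digest).decode()}"


def build_request(workspace_id, shared_key, log_type, records, now=None):
    """Return (url, headers, body) for a custom log upload; nothing is sent."""
    body = json.dumps(records).encode("utf-8")
    now = now or datetime.now(timezone.utc)
    date = format_datetime(now, usegmt=True)  # RFC 1123 date, e.g. "... GMT"
    headers = {
        "Content-Type": "application/json",
        "Authorization": build_signature(workspace_id, shared_key, date, len(body)),
        "Log-Type": log_type,  # name of the custom record type
        "x-ms-date": date,
    }
    url = f"https://{workspace_id}.ods.opinsights.azure.com/api/logs?api-version=2016-04-01"
    return url, headers, body
```

A call such as `build_request("my-workspace-id", key, "CustomSecurityEvent", [{"user": "alice", "action": "login"}])` yields everything an HTTP client needs to post the records. Keeping the network call out of the sketch makes it easy to test the signing logic separately.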

Here are a few sources of security events that Azure offers you that you can access through the Azure Portal, CLI, PowerShell, or REST API (and some only through the Azure Monitor / Insight API):

- Activity Logs - this log answers the classic questions “who”, “what” and “when” for any write operation (PUT, POST, DELETE) on cloud resources. Events related to read access (GET), like a number of others, do not end up in this log.

- Diagnostic Logs - contains data on operations with a particular resource included in your subscription.

- Azure AD reporting - contains both user activity and system activity associated with managing groups and users.

- Windows Event Log and Linux Syslog - contains events from virtual machines hosted in the cloud.

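- Metrics - contains performance and “health” telemetry for your cloud services and resources. Stored for 30 days.

- Network Security Group Flow Logs - contains data on network security events collected using the Network Watcher service.

- Storage Logs - contains events related to access to storage.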

For monitoring, you can use an external SIEM or the built-in Azure Monitor and its extensions. We will talk more about information security event management systems later; for now, let's see what Azure itself offers for analyzing data in the context of security. The main screen for everything related to security in Azure Monitor is the Log Analytics Security and Audit Dashboard (the free version stores a limited volume of events for only one week). This panel is divided into 5 main areas that visualize summary statistics on what is happening in your cloud environment:

- Security Domains - key quantitative indicators related to information security - the number of incidents, the number of compromised nodes, unpatched nodes, network security events, etc.

- Notable Issues - displays the number and severity of active information security issues

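- Detections - displays the attack patterns used against you

- Threat Intelligence - displays geographic information about the external nodes that attack you

- Common security queries - typical queries that help you monitor your information security.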

Azure Monitor extensions include Azure Key Vault (protection of cryptographic keys in the cloud), Malware Assessment (analysis of protection against malicious code on virtual machines), Azure Application Gateway Analytics (analysis of, among other things, cloud firewall logs), and so on. Enriched with event-processing rules, these tools let you visualize various aspects of how your cloud services operate, including security, and identify deviations from normal operation. But, as often happens, any additional functionality requires a corresponding paid subscription, and therefore financial investments that you need to plan in advance.

Azure has a number of built-in threat monitoring capabilities that are integrated into Azure AD, Azure Monitor and Azure Security Center. These include, for example, detecting virtual machines communicating with known malicious IP addresses (through integration with Microsoft Threat Intelligence services), detecting malware in the cloud infrastructure from alerts raised by virtual machines hosted in the cloud, “password guessing” attacks on virtual machines, vulnerabilities in the configuration of the user identification system, logins from anonymizers or infected nodes, account leaks, logins from unusual locations, and so on. Azure today is one of the few cloud providers that offers built-in Threat Intelligence capabilities to enrich the collected information security events.

As mentioned above, the protective functionality, and consequently the security events it generates, is not equally available to all users: it requires a subscription that includes the functionality you need. For example, some of the account anomaly monitoring functions described in the previous paragraph are available only with the P2 premium license for Azure AD. Without it, as with AWS, you will have to analyze the collected security events “manually”. Also, depending on the type of Azure AD license, not all events will be available to you for analysis.
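What “manual” analysis means in the simplest case can be shown with a sketch of password-guessing detection over sign-in events you have collected yourself. The event format, a time-ordered sequence of `(timestamp, source_ip, success)` tuples, and the thresholds are assumptions chosen for the example; they are not an Azure log schema and not a reproduction of Microsoft's detection logic.

```python
from collections import defaultdict, deque
from datetime import timedelta


def detect_password_guessing(events, threshold=5, window=timedelta(minutes=5)):
    """Flag source IPs with at least `threshold` failed logins inside `window`.

    `events` must be sorted by timestamp; each item is
    (timestamp, source_ip, success).
    """
    recent_failures = defaultdict(deque)  # source_ip -> timestamps of failures
    flagged = set()
    for timestamp, source_ip, success in events:
        if success:
            continue
        failures = recent_failures[source_ip]
        failures.append(timestamp)
        # Drop failures that fell out of the sliding window.
        while failures and timestamp - failures[0] > window:
            failures.popleft()
        if len(failures) >= threshold:
            flagged.add(source_ip)
    return flagged
```

The sliding window keeps memory bounded per address, which matters once you feed it a real stream of sign-in events rather than a small sample.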

On the Azure portal, you can both manage search queries against the logs that interest you and configure dashboards to visualize key information security indicators. In addition, there you can choose Azure Monitor extensions, which expand the functionality of Azure Monitor logs and give you a deeper analysis of events from a security point of view.

If you need not only the ability to work with logs but a comprehensive security center for your Azure cloud platform, including information security policy management, then you should look at Azure Security Center, most of whose useful features are paid: for example, threat detection, monitoring outside of Azure, compliance assessment, and so on (the free version gives you only a security assessment and recommendations for resolving the problems it finds). It consolidates all security issues in one place. In fact, this is a higher level of information security than Azure Monitor provides, since here the data collected across your cloud environment is enriched from a variety of sources, such as Azure, Office 365, Microsoft CRM Online, Microsoft Dynamics AX, outlook.com, MSN.com, the Microsoft Digital Crimes Unit (DCU) and the Microsoft Security Response Center (MSRC), and is then processed by various sophisticated machine learning and behavioral analytics algorithms, which should ultimately make threat detection and response more effective.

Azure also has its own SIEM, which appeared at the beginning of 2019. This is Azure Sentinel, which relies on data from Azure Monitor and can also integrate with external security solutions (for example, NGFW or WAF), the list of which is constantly growing. In addition, through integration with the Microsoft Graph Security API, you can connect your own Threat Intelligence feeds to Sentinel, which enriches your ability to analyze incidents in your Azure cloud. It can be argued that Azure Sentinel is the first "native" SIEM offered by a cloud provider (Splunk or ELK, which can be hosted in a cloud such as AWS, are still not developed by the cloud providers themselves). Azure Sentinel and Security Center could be called the SOC for the Azure cloud, and you could limit yourself to them (with certain reservations) if you no longer had any infrastructure of your own and had moved all your computing resources to the cloud, and that cloud was Microsoft Azure.

But since Azure’s built-in capabilities (even with a Sentinel subscription) are often not enough for information security monitoring and for integrating this process with other sources of security events (both cloud and internal), it becomes necessary to export the collected data to external systems, which may include a SIEM. This is done both through the API and through special extensions, which at the moment are officially available only for the following SIEMs: Splunk (Azure Monitor Add-On for Splunk), IBM QRadar (Microsoft Azure DSM), SumoLogic, ArcSight and ELK. Until recently there were more such SIEMs, but on June 1, 2019, Microsoft stopped supporting the Azure Log Integration Tool (AzLog), which at the dawn of Azure, when there was no proper standardization of log handling (Azure Monitor did not even exist yet), made it easy to integrate external SIEMs with the Microsoft cloud. Now the situation has changed, and Microsoft recommends the Azure Event Hub platform as the main integration tool for the remaining SIEMs. Many have already implemented this integration, but be careful: they may capture not all Azure logs but only some of them (see the documentation for your SIEM).
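One way to check whether your integration really receives every kind of log is to count the log categories that actually arrive and compare them with the ones you expect. The sketch below assumes that each message exported through Event Hub is a JSON object with a `records` array and that each record carries a `category` field; this matches the export format as I understand it, but verify it against your own data. The function names and the category list in the usage note are illustrative.

```python
import json
from collections import Counter


def unpack_message(body: bytes) -> list[dict]:
    """Extract individual log records from one exported message body."""
    payload = json.loads(body)
    if isinstance(payload, dict):
        return payload.get("records", [])
    return list(payload)  # tolerate a bare JSON array of records


def category_coverage(messages, expected_categories):
    """Count records per category and list expected categories never seen."""
    seen = Counter()
    for body in messages:
        for record in unpack_message(body):
            seen[record.get("category", "unknown")] += 1
    missing = sorted(set(expected_categories) - set(seen))
    return seen, missing
```

Running `category_coverage(messages, ["Administrative", "NetworkSecurityGroupEvent", "AuditEvent"])` over a day of traffic and finding a non-empty `missing` list is a cheap signal that the SIEM connector, or the export settings, are dropping something.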

Concluding this brief excursion into Azure, I want to give a general recommendation for this cloud service: before you claim anything about the security monitoring functions in Azure, configure them very carefully and test that they work as described in the documentation and as the Microsoft consultants told you (and they may have different views on how well Azure features work). If you have the financial means, you can get a lot of useful things out of Azure in terms of information security monitoring. If your resources are limited then, as with AWS, you will have to rely only on your own efforts and the raw data that Azure Monitor provides. And remember that many monitoring functions cost money, so it is better to study the pricing in advance. For example, you can store data for 31 days free of charge, up to 5 GB per customer; exceeding these values will cost extra (about 2+ dollars for each additional GB from the customer and $0.10 for storing 1 GB for each additional month). Working with application telemetry and metrics may also require additional spending, as may working with alerts and notifications (a certain limit is available for free, which may not be enough for your needs).
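To see how quickly these charges add up, here is a rough estimator built only on the approximate figures quoted above: 5 GB free, about 2 dollars per additional GB, and $0.10 per GB for each additional month of storage. The function name is hypothetical, 2 dollars is used as a lower bound for the “2+” figure, and charging retention on the full ingested volume is my own simplifying assumption. Real pricing changes over time, so use the current price list for actual planning.

```python
def estimate_log_cost(
    ingested_gb: float,
    extra_retention_months: int = 0,
    free_gb: float = 5.0,
    price_per_extra_gb: float = 2.0,
    retention_price_per_gb_month: float = 0.10,
) -> float:
    """Rough cost in dollars for one month's worth of collected data."""
    # Only the volume above the free allowance is charged for ingestion.
    billable_gb = max(0.0, ingested_gb - free_gb)
    ingestion_cost = billable_gb * price_per_extra_gb
    # Assumption: every retained GB is charged for each extra month.
    retention_cost = ingested_gb * retention_price_per_gb_month * extra_retention_months
    return round(ingestion_cost + retention_cost, 2)
```

Under these assumptions, 25 GB collected in a month and kept for two additional months comes to about 45 dollars: 40 for the 20 GB above the free allowance plus 5 for retention.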

Example: IS monitoring in IaaS based on Google Cloud Platform

Against the background of AWS and Azure, the Google Cloud Platform looks quite young, but this is partly a good thing. Unlike AWS, which built up its capabilities, including defensive ones, gradually and has problems with centralization, GCP, like Azure, is much better managed centrally, which reduces the number of errors and the implementation time in the enterprise. From a security perspective, GCP sits, oddly enough, between AWS and Azure. It also has a single event log for the entire organization, but it is incomplete. Some functions are still in beta, but this shortcoming should gradually be eliminated, and GCP will become a more mature platform in terms of information security monitoring.

The main tool for logging events in GCP is Stackdriver Logging (an analogue of Azure Monitor), which lets you collect events across your entire cloud infrastructure (and from AWS as well). From a security perspective, each organization, project or folder in GCP has four logs:

- Admin Activity - contains all events related to administrative access, for example, creating a virtual machine, changing access rights, etc. This log is always written, whether you want it or not, and stores its data for 400 days.

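- Data Access - contains all events related to users working with data in the cloud (creation, modification, reading, etc.). By default, this log is not written, since its volume grows very quickly. For the same reason, it is stored for only 30 days. In addition, not everything is written to this log. For example, it does not record events for resources that are publicly available to all users or that can be accessed without logging in to GCP.

- System Event - contains events that are not related to users, or actions of an administrator who changes the configuration of cloud resources. This log is always written and is stored for 400 days.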