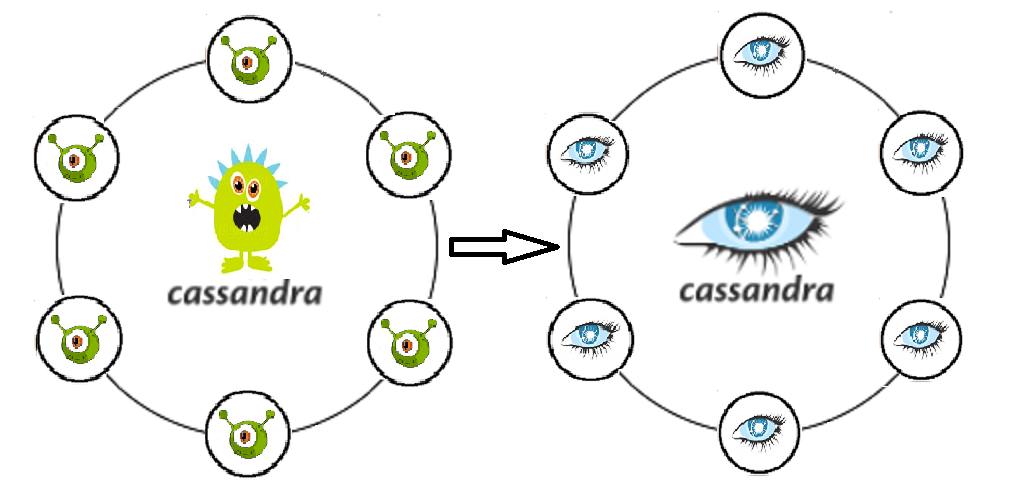

How to look Cassandra in the eye without losing data, stability, or faith in NoSQL

They say everything in life is worth trying at least once. If you are used to working with relational DBMSs, getting to know NoSQL in practice is worthwhile, if only for general development. The technology is evolving rapidly, and the many conflicting opinions and heated debates around it only fuel the interest.

If you dig into the substance of these disputes, you can see that they stem from the wrong approach. Those who use NoSQL databases exactly where they are needed are satisfied and get all the advantages of the solution. Experimenters who treat the technology as a panacea where it does not apply at all end up disappointed: they lose the strengths of relational databases without gaining significant benefits.

I will share our experience of implementing a solution based on the Cassandra DBMS: what we ran into, how we got out of difficult situations, whether we managed to benefit from NoSQL, and where we had to invest additional effort and money.

The original task was to build a system that writes call records to a data store.

The system works as follows. Files with a defined structure describing each call arrive at the input. The application then makes sure this structure is saved in the appropriate columns. Later, the stored calls are used to show subscribers information on their traffic consumption (charges, calls, balance history).

Why Cassandra was chosen is easy to see: it writes like a machine gun, scales easily, and is fault tolerant.

So, here is what experience taught us

Yes, a crashed node is not a tragedy; that is the essence of Cassandra's fault tolerance. But a node can be alive and still start to sag in performance. As it turned out, this immediately affects the performance of the entire cluster.

Cassandra will not back you up where Oracle saved you with its constraints. If the application's author did not understand this in advance, then to Cassandra a duplicate that arrives is no worse than the original. It arrived, so it gets inserted.

Information security took a sharp dislike to the free, out-of-the-box Cassandra: there was no logging of user actions and no separation of access rights. Call information is personal data, which means every attempt to query or change it must be logged so that it can be audited later. You also need to separate rights at different levels for different users. An ordinary operations engineer and a super admin who can freely drop an entire keyspace are different roles with different responsibilities and competencies. Without such separation of access rights, the value and integrity of the data come into question even faster than with the ANY consistency level.

We did not take into account that calls require both serious analytics and periodic selections by a variety of conditions. Since the selected records are then supposed to be deleted and rewritten (as part of the task, we must support correcting data that initially entered our environment incorrectly), Cassandra is no friend of ours here. Cassandra is like a piggy bank: it is convenient to put things in, but you cannot count what is inside.

We ran into the problem of transferring data to test zones (5 nodes in test versus 20 in production). In this case a dump cannot be used.

The problem of updating the data schema of an application that writes to Cassandra. A rollback produces a great many tombstones, which can degrade performance in unpredictable ways. Cassandra is optimized for writes and does not think much before writing. Any operation on existing data is also a write. In other words, by deleting what we do not need, we simply produce even more records, and only some of them will be marked with tombstones.
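The point that a delete is itself a write can be made concrete with a toy model. The sketch below is a plain in-memory append-only log, not Cassandra's storage engine; the class and method names are hypothetical, and the `compact` step ignores details such as the grace period Cassandra keeps tombstones for.

```python
from dataclasses import dataclass, field
from typing import Optional

TOMBSTONE = object()  # marker appended in place of a value on delete


@dataclass
class AppendOnlyStore:
    """Toy append-only store: every operation, including delete, appends a record."""
    log: list = field(default_factory=list)

    def write(self, key: str, value: str) -> None:
        self.log.append((key, value))

    def delete(self, key: str) -> None:
        # Nothing is removed here; a tombstone record is appended instead.
        self.log.append((key, TOMBSTONE))

    def read(self, key: str) -> Optional[str]:
        # The newest record for a key wins, so a read scans back past
        # shadowed values and tombstones until it finds the key.
        for k, v in reversed(self.log):
            if k == key:
                return None if v is TOMBSTONE else v
        return None

    def compact(self) -> None:
        # Keep only the newest record per key and drop tombstoned keys.
        latest = {}
        for k, v in self.log:
            latest[k] = v
        self.log = [(k, v) for k, v in latest.items() if v is not TOMBSTONE]


store = AppendOnlyStore()
store.write("call-1", "v1")
store.write("call-2", "v1")
store.delete("call-1")
records_before = len(store.log)  # the delete made the log longer, not shorter
store.compact()
records_after = len(store.log)
```

Until compaction runs, every deleted record costs extra storage and extra work on reads, which is why a mass rollback can hurt performance.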

Timeouts on insert. Cassandra is excellent at writes, but sometimes the incoming stream can seriously puzzle it. This happens when the application starts retrying, in a loop, several records that cannot be inserted for some reason. And then we need a real DBA who watches gc.log, the system and debug logs for slow queries, and the pending compactions metric.

Several data centers in a cluster. Where to read from and where to write to?

Perhaps split them into reading and writing? And if so, should the DC closer to the application be the one for writing or for reading? And will we not end up with a real split brain if we choose the consistency level incorrectly? Many questions, many unexplored settings and features that we really want to tweak.

How we solved it

To keep nodes from sagging, we disabled swap. Now, when memory runs short, a node should go down rather than produce long GC pauses.

So, we no longer rely on logic in the database. Application developers are retraining and starting to actively add safeguards in their own code. The result is an ideal, clear separation of data storage and data processing.
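As an illustration of moving the safeguards into application code, here is a minimal sketch that validates call records and drops in-batch duplicates before anything is sent to the database. The record fields, the choice of natural key, and the function names are assumptions made for the example, not the authors' actual code.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class CallRecord:
    subscriber_id: str      # hypothetical field names
    started_at: datetime
    duration_sec: int


def validate(record: CallRecord) -> list:
    """Return a list of problems; an empty list means the record may be written."""
    problems = []
    if not record.subscriber_id:
        problems.append("subscriber_id is empty")
    if record.duration_sec < 0:
        problems.append("duration_sec is negative")
    return problems


def filter_batch(records):
    """Split a batch into accepted records and rejected ones (invalid or duplicate)."""
    seen = set()
    accepted, rejected = [], []
    for r in records:
        key = (r.subscriber_id, r.started_at)  # assumed natural key of a call
        if validate(r) or key in seen:
            rejected.append(r)
        else:
            seen.add(key)
            accepted.append(r)
    return accepted, rejected


batch = [
    CallRecord("79001234567", datetime(2019, 5, 1, 12, 0), 60),
    CallRecord("79001234567", datetime(2019, 5, 1, 12, 0), 60),  # duplicate
    CallRecord("", datetime(2019, 5, 1, 12, 5), 10),              # invalid
]
accepted, rejected = filter_batch(batch)
```

This only catches duplicates within one batch. Duplicates that arrive in different batches still need a table key designed so that a repeated insert overwrites the same row instead of adding a new one.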

We bought support from DataStax. Development of the boxed Cassandra had already stopped (the last commit was in February 2018). DataStax, meanwhile, offers excellent service and a large number of solutions refined and adapted to existing information systems.

I also want to note that Cassandra is not very convenient for ad hoc queries. Of course, CQL is a big step toward users (compared to Thrift). But if you have entire departments that are used to convenient joins, free filtering by any field, and query optimization options, and these departments work on resolving claims and incidents, then a Cassandra-based solution looks hostile and foolish to them. So we set about deciding how our colleagues could run their selections.

We considered two options. In the first, we write calls not only to C* but also to an Oracle archive database. Unlike in C*, calls are kept there only for the current month (a storage depth sufficient for re-rating cases). Here we immediately saw a problem: if we write synchronously, we lose all the fast-insert advantages of C*; if we write asynchronously, there is no guarantee that all the necessary calls reach Oracle at all. There was one advantage, but a big one: operations staff keep the familiar PL/SQL Developer, so we would in effect implement the “Facade” pattern.

The alternative: we implement a mechanism that extracts calls from C*, pulls enrichment data from the corresponding tables in Oracle, joins the resulting selections, and gives us a result that we then use in some way (roll back, replay, analyze, admire). The downsides: the process has quite a few steps, and there is no interface for operations staff.

In the end we settled on the second option. Apache Spark was used to select data from the different stores. The mechanism boiled down to Java code that, given the specified keys (subscriber and call time, the partition keys), pulls data from C* along with the enrichment data from any other database. It then joins them in memory and writes the result to a result table. We put a web front end on top of Spark, and it turned out to be quite suitable for operations.
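The in-memory join step can be illustrated without Spark. The sketch below is a plain-Python hash join, shown only to make the idea concrete: it is not the Spark API and not the authors' Java code, and the field names (`subscriber_id`, `tariff`) are hypothetical.

```python
def enrich_calls(calls, subscribers):
    """Inner hash join: index the smaller side by key, then stream the larger side."""
    by_id = {s["subscriber_id"]: s for s in subscribers}
    result = []
    for call in calls:
        sub = by_id.get(call["subscriber_id"])
        if sub is None:
            continue  # no enrichment data for this subscriber: skip the call
        result.append({**call, "tariff": sub["tariff"]})
    return result


# Calls as they might come from C*, enrichment rows as they might come from Oracle.
calls = [
    {"subscriber_id": "a", "duration_sec": 60},
    {"subscriber_id": "b", "duration_sec": 5},
]
subscribers = [{"subscriber_id": "a", "tariff": "basic"}]
enriched = enrich_calls(calls, subscribers)
```

Spark does the same kind of work distributed across executors, which is what makes it practical for data volumes that do not fit in one process.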

When solving the problem of refreshing test data from production, we again examined several solutions: transfer via sstableloader, and splitting the test-zone cluster into two parts, each of which in turn joins the production cluster and is fed from it. On a test refresh, the plan was to swap them: the part that had been working in test is wiped and joined to production, while the other starts working with the data on its own. However, on second thought we assessed more rationally which data actually needs transferring and realized that calls themselves are a transient entity for tests, quickly generated when needed, and that the production data set as such is not worth transferring to test. A few stored objects are worth moving, but that is literally a couple of tables, and not very heavy ones. So Spark came to our aid once more: we used it to write scripts for transferring data between production and test tables, and we now use them actively.

Our current deployment policy lets us work without rollbacks. Before production, a rollout to test is mandatory, where an error is not so expensive. In case of failure, you can always drop the keyspace and roll out the entire schema from scratch.

Keeping Cassandra continuously available takes a DBA, and not only a DBA. Everyone who works with the application should understand where and how to check the current state and how to diagnose problems in time. For this we actively use DataStax OpsCenter (administration and monitoring of workloads), Cassandra driver system metrics (number of write timeouts to C*, number of read timeouts from C*, maximum latency, and so on), and monitoring of the application that works with Cassandra.

When we thought through the multi-data-center question, we realized where our main risk might lie: data display forms that combine the results of several independent queries to the store. That way we can end up showing rather inconsistent information. But this problem would be just as relevant with a single data center. So the most reasonable approach is, of course, to implement a batch read function in a separate application, which ensures that the data is retrieved within a single time window. As for separating reads and writes for performance, we were stopped by the risk that, if the connection between DCs is lost, we could end up with two completely inconsistent clusters.

As a result, for now we have settled on the EACH_QUORUM consistency level for writes and LOCAL_QUORUM for reads.
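The arithmetic behind this choice can be sketched in a few lines. A quorum is a majority of replicas, `floor(RF / 2) + 1`; EACH_QUORUM requires a quorum in every data center, LOCAL_QUORUM only in the coordinator's own one. The data center names and the replication factor of 3 below are assumptions for illustration, not the authors' configuration.

```python
def quorum(replication_factor: int) -> int:
    """Majority of replicas: floor(RF / 2) + 1."""
    return replication_factor // 2 + 1


def required_acks(level: str, rf_by_dc: dict, local_dc: str) -> dict:
    """How many replicas must respond in each data center for a given level."""
    if level == "LOCAL_QUORUM":
        return {local_dc: quorum(rf_by_dc[local_dc])}
    if level == "EACH_QUORUM":
        return {dc: quorum(rf) for dc, rf in rf_by_dc.items()}
    raise ValueError(f"level not covered by this sketch: {level}")


def reads_see_writes(write_acks: int, read_acks: int, rf: int) -> bool:
    """Read and write replica sets must overlap if W + R > RF."""
    return write_acks + read_acks > rf


rf_by_dc = {"dc1": 3, "dc2": 3}  # hypothetical topology
write_acks = required_acks("EACH_QUORUM", rf_by_dc, local_dc="dc1")
read_acks = required_acks("LOCAL_QUORUM", rf_by_dc, local_dc="dc1")
overlap = reads_see_writes(write_acks["dc1"], read_acks["dc1"], rf_by_dc["dc1"])
```

With these numbers a write is acknowledged by 2 of 3 replicas in each DC and a read consults 2 of 3 in the local DC, so a local read always touches at least one replica that holds the latest acknowledged write, whichever DC it is served from. The price is that a write fails when any DC cannot assemble a quorum.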

Brief impressions and conclusions

To evaluate the resulting solution in terms of operational support and prospects for further development, we decided to think about where else such a design could be applied.

Off the top of our heads: data scoring for programs like “Pay when it is convenient” (data loaded into C*, calculation with Spark scripts), claims accounting with aggregation by area, and storing roles and calculating user access rights from a role matrix.

As you can see, the repertoire is wide and varied. If we have to pick a camp, supporters or opponents of NoSQL, we will join the supporters, since we got our advantages, and exactly where we expected them.

Even out-of-the-box Cassandra allows horizontal scaling in real time, painlessly solving the problem of data growth in the system. We managed to move a very heavily loaded mechanism for calculating call aggregates into a separate environment, and to separate the schema from the application logic, getting rid of the bad practice of writing custom jobs and objects in the database itself. We gained the ability to choose and configure, for speed, which DCs we compute on and which we write data to, and we insured ourselves against the failure of both individual nodes and an entire DC.

When applying our architecture to new projects, now with some experience behind us, we would like to account for the nuances described above right away, avoid some of the mistakes, and smooth out some of the sharp corners that we could not avoid the first time.

For example, keep up with Cassandra updates in time, because quite a few of the problems we ran into were already known and fixed.

Do not put the database and Spark on the same nodes (or strictly limit how many resources each may use), since Spark can eat more RAM than it is allotted, and we will quickly get the first problem on our list: a live node that sags in performance.

Build up monitoring and operational expertise as early as the project testing stage. From the start, account for as many potential consumers of the solution as possible, because the database structure will ultimately depend on them.

Go over the resulting schema several times looking for possible optimizations. Choose which fields can be serialized. Work out which additional tables to create so that the required information is recorded, and later returned on request, as correctly and efficiently as possible. For example, storing the same data in different tables, partitioned by different criteria, can significantly save CPU time on read requests.
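The idea of keeping the same data in several tables with different partitioning can be sketched with two dictionaries standing in for two tables. This is a toy illustration under assumed names (`calls_by_subscriber`, `calls_by_day`, and the record fields are hypothetical); in a real schema each dictionary would be a separate table with its own partition key.

```python
from collections import defaultdict

# Two "tables" holding the same calls under different partition keys.
calls_by_subscriber = defaultdict(list)  # partition key: subscriber_id
calls_by_day = defaultdict(list)         # partition key: call date


def save_call(call: dict) -> None:
    """Write the call to every table that serves a known read pattern."""
    calls_by_subscriber[call["subscriber_id"]].append(call)
    calls_by_day[call["day"]].append(call)


save_call({"subscriber_id": "a", "day": "2019-05-01", "duration_sec": 60})
save_call({"subscriber_id": "a", "day": "2019-05-02", "duration_sec": 30})
save_call({"subscriber_id": "b", "day": "2019-05-02", "duration_sec": 5})

# Each read pattern is now a single key lookup instead of a scan with filtering.
calls_of_a = calls_by_subscriber["a"]
calls_on_may_2 = calls_by_day["2019-05-02"]
```

The trade-off is more writes and more storage in exchange for cheap reads, which suits a database that is optimized for writing.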

It is a good idea to set up TTL from the start and clean out outdated data.

When extracting data from Cassandra, the application logic should work on the FETCH principle, so that rows are not all loaded into memory at once but are retrieved in batches.
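The batch-by-batch principle can be shown with a small generator. This is a generic sketch over any iterable of rows, not a driver API; database drivers usually expose the same idea as a fetch size or paging setting. The function name and batch size are illustrative.

```python
from itertools import islice


def in_batches(rows, batch_size: int):
    """Yield lists of at most batch_size rows without materializing the whole input."""
    it = iter(rows)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch


# Stand-in for a large result set: only one batch is held in memory at a time.
sizes = [len(batch) for batch in in_batches(range(10), batch_size=4)]
```

Processing each batch before requesting the next keeps memory use bounded by the batch size rather than by the size of the result set.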

Before moving a project to the described solution, it is advisable to check the system's fault tolerance with a series of crash tests, such as data loss in one data center, restoring damaged data for a certain period, and network degradation between data centers. Such tests not only let you assess the pros and cons of the proposed architecture, but also give the engineers who run them good practice, and that skill will be far from superfluous if the failures recur in production.

If we work with critical information (such as billing data or subscriber debt calculation), it is also worth paying attention to tools that reduce the risks arising from the specifics of the DBMS. For example, use the NodeSync utility (DataStax) with a well-thought-out strategy, so that the pursuit of consistency does not put excessive load on Cassandra: enable it only for certain tables and for a certain period.

So, what about Cassandra after six months of living with it? On the whole, there are no unsolved problems. We have not had serious incidents or data loss either. Yes, we had to think about how to compensate for some problems we had not met before, but in the end this did not cast much of a shadow on our architectural solution. If you want to try something new and are not afraid to, but do not want to be badly disappointed, be prepared for the fact that nothing comes for free. You will have to investigate, dig into the documentation, and step on your own rakes more than with the old legacy solution, and no theory will tell you in advance exactly which rake is waiting for you.
