Cloud Mobile Testing Platforms

The time has come when our testing needs no longer fit on a tester's desk, and the soul started longing for the clouds. Well, not quite.

Our goals and objectives

(Reader in a hurry: you can skip ahead to the next section.)

We are developing a financial application for the international market. It is available in several formats: for desktop browsers (a website and a Google Chrome extension), for mobile browsers, and as a hybrid application for phones. Because of the nature of the application, we pay special attention to testing it on a variety of configurations and devices. Stable and secure operation matters to us both in our clients' desktop browsers and on any of their devices.

What pushed us to look for a cloud device farm was the switch from office work to a fully remote, distributed format (across cities and countries). Previously we could gather different devices in a pile (literally) and manually test the next build on all of them at one desk; now that is impossible. Moreover, as functionality grows, we automate the regression suites of important tests to reduce manual work. That means that after a build we need to be able to run tests on the desired configuration and device, ideally as soon as the build is rolled out to staging.

The simplest and most obvious solution is to use Android emulators and iOS simulators in our DevOps pipeline. However, what is relatively easy to set up on a developer's workstation becomes a difficult and expensive task in the cloud. For an Android emulator to run fast, you need an x86 server with HAXM support, and an iOS simulator requires nothing less than a macOS machine with Xcode. Unfortunately, even with that solved, there remains a gap between how software behaves on emulators and on real devices. For example, every second release we catch strange bugs on Samsung devices that cannot be reproduced on emulators. And, of course, there are the rare exotic Chinese "delights" with their own unique bugs, which we would also like to catch at the development stage.

As a result, we understood that we needed a cloud farm of mobile devices on which we could quickly run our tests and, if necessary, debug manually, and which our entire team could access from anywhere in the world (we love to work even while traveling).

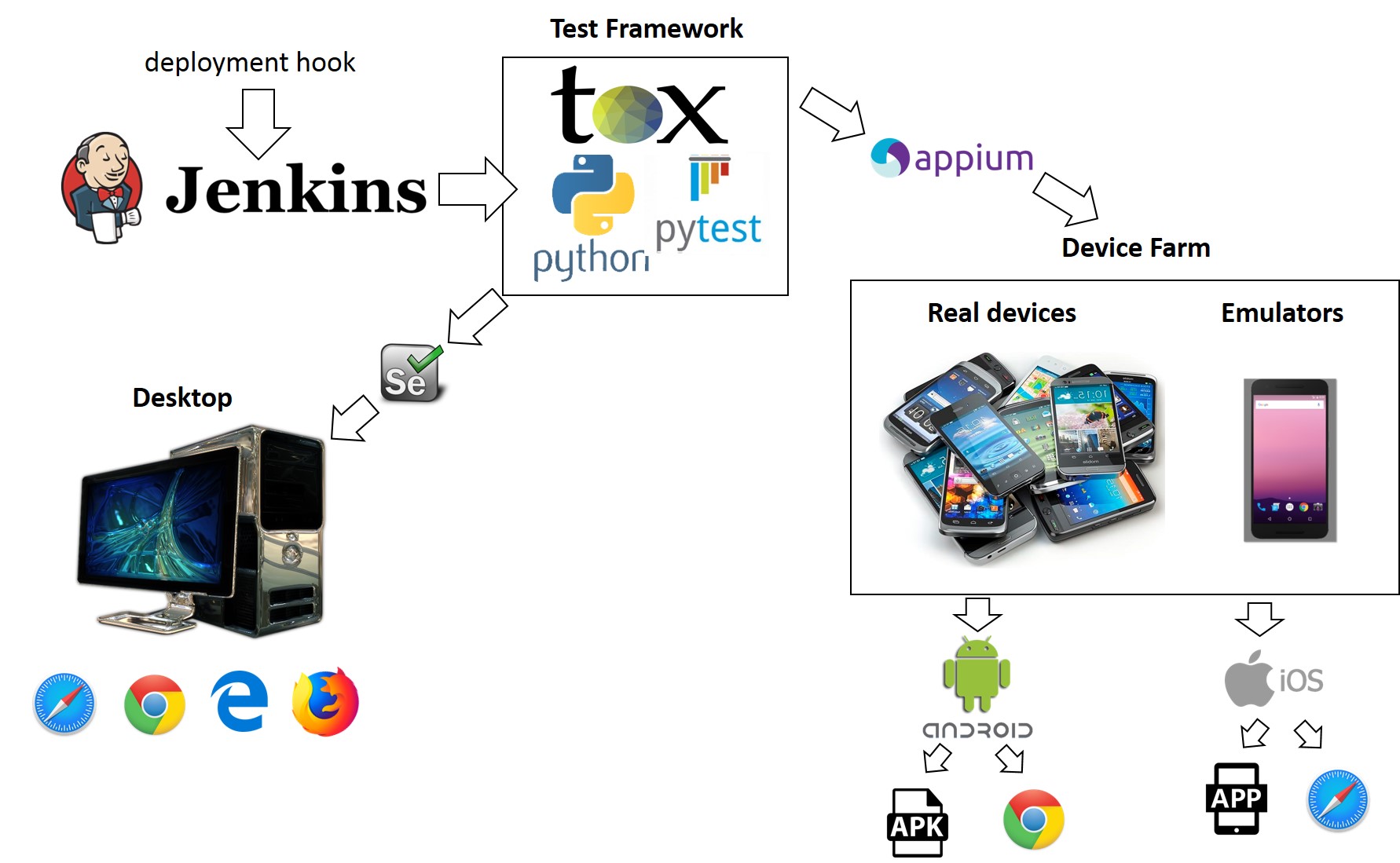

Our tests are written in Python 3.7 (this will be important later). Our stack is tox + pytest + Selenium + Appium, plus, of course, a small set of useful Python libraries. We always test on Windows and macOS machines with the Edge, Firefox, Chrome, and Safari browsers, as well as on Android and iOS devices with both browsers and the application. We do not have many tests per device (fewer than a thousand), but run in a single thread on the devices, the full set takes a couple of hours. Therefore, our criteria for choosing a service are:

- Testing via API (Selenium / Appium)

- iOS and Android devices

- Support for mobile browser testing

- Support for uploading and testing applications

- Reference devices (Google Pixel (Android 9) and iPhone X (iOS 12+))

- Manual debugging

- Logging (plus screenshots, recording a video run)

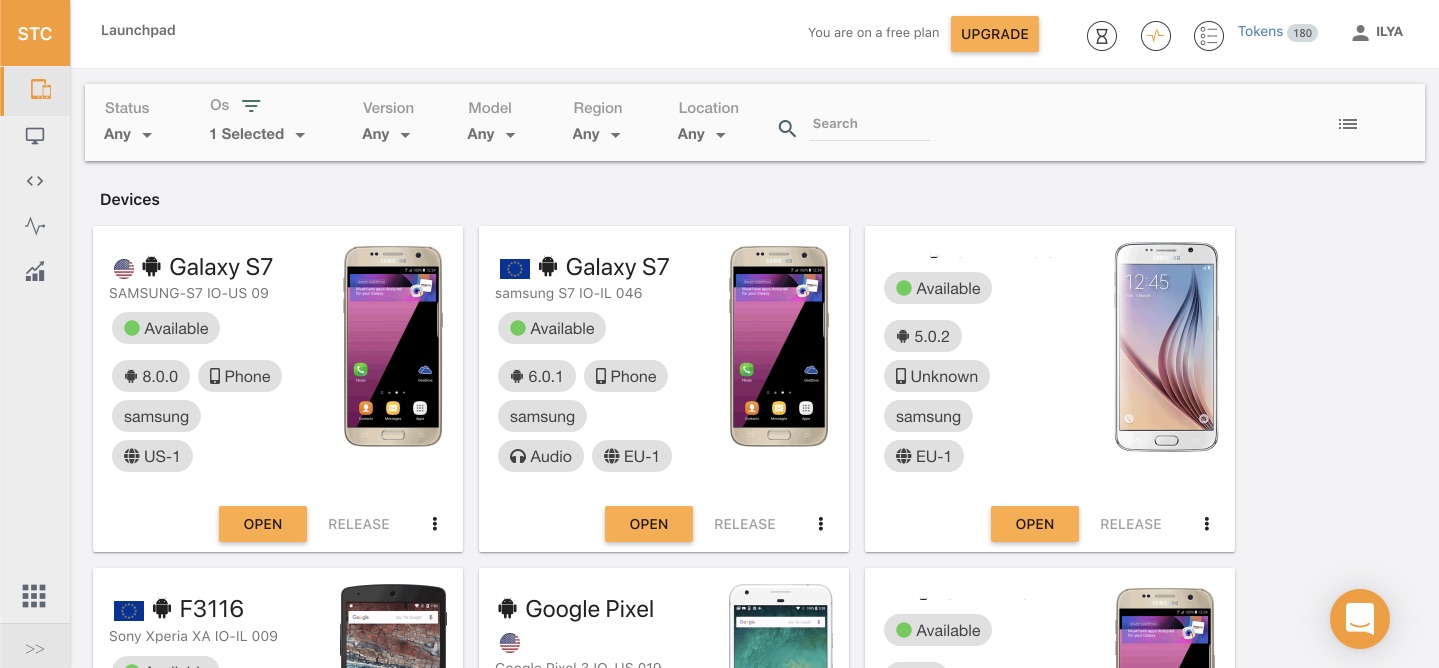

- Device fleet and availability

- Average test execution time

- Price

Desirable, but not necessary:

- Python support at the service level (whatever that means)

- Support for desktop devices / browsers

Market Research Results

For a week I surfed the Internet and tried a dozen different services. Most of them offer some free time to try out their capabilities. The results of my research, and even more so the conclusions, are subjective. Your opinion and results may differ from mine.

On Habr I found a 2017 article devoted to the same topic, but new services have appeared since then, and our requirements are somewhat stricter. So, for example, "tasty" services such as Samsung Remote Test Lab, Firebase Test Lab, and Xamarin Test Cloud, alas, do not suit us.

Out of the game

Samsung Remote Test Lab

The service lets you try out various Samsung devices for free, including the newest ones and even TVs or smart watches running Tizen. There are limits: a maximum of 10 hours per day; 10 free credits handed out per day, which equals 2.5 hours; and a minimum session of half an hour (2 credits). This is very good for debugging and for finding the root causes of errors on particular devices. The service even provides remote debugging (remote debug bridge, access to the console and system logs), but, unfortunately, it does not provide API access to devices. The only way to "automate" is to record user actions and then play them back in your local automation tool.

Firebase Test Lab

This Google service lets you test your application on Android and iOS devices for free (well, not quite). But there is one caveat: the service requires either native automation tools (UI Automator 2 and Espresso for Android, XCTest for iOS) or the automatic crawlers for Android, Robo Test and Game Loop Test. That is, Appium and Selenium, alas, will not work. As for "free": the free plan is limited to 10 tests on emulators and five on real devices per day. If you need more, each additional hour costs $1 and $5, respectively. In general, this would be a good choice for our tasks if we were writing tests from scratch, but I have no desire to rework several hundred tests; it is simply too expensive. It would also mean that our desktop and mobile tests would diverge greatly, which would make maintenance much harder.

Visual Studio App Center

Formerly Xamarin Test Cloud. This service finally supports Appium and allows testing on thousands of different devices. But, as with other Microsoft products, it is firmly nailed to the native stack: to use the service you need Visual Studio, and the project and tests must be written exclusively in Java. If you happen to have a Java stack (with MS VS), the price is $99 per device slot per month, which is relatively reasonable.

Services to choose from

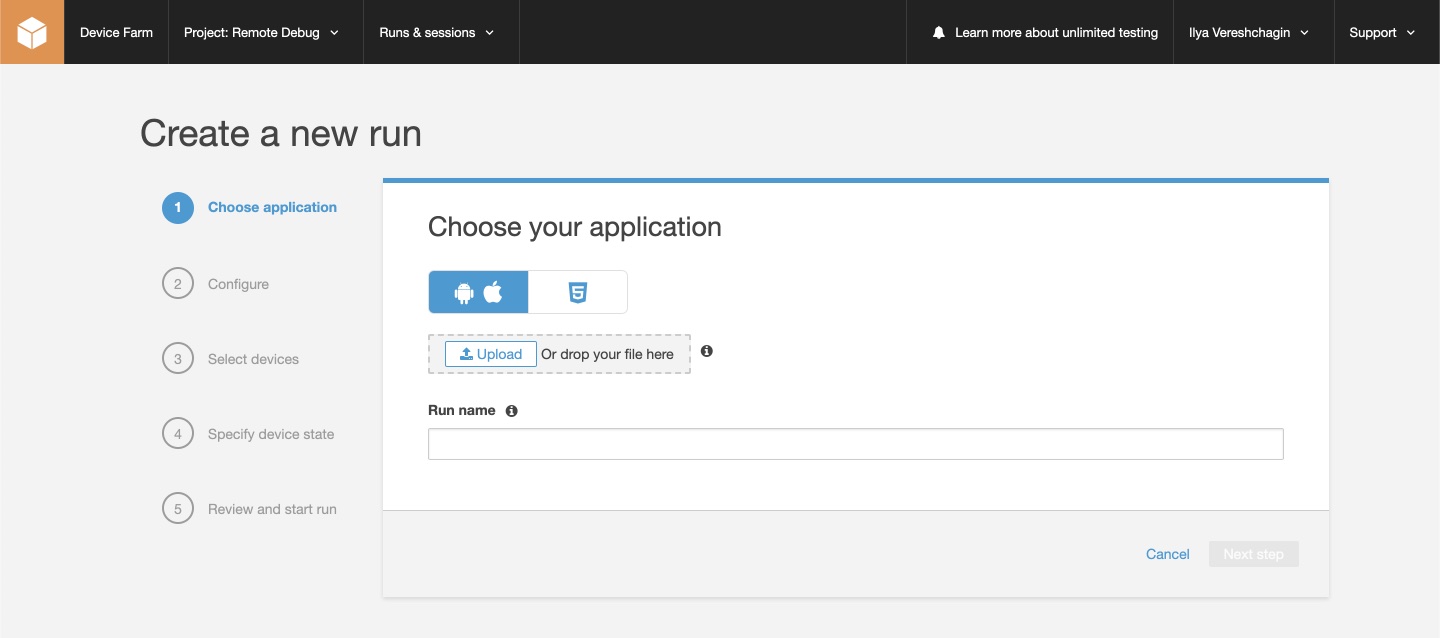

AWS Device Farm

Perhaps the most powerful farm for testing on virtual and real devices to date (more than 2,500 devices). For us this was the priority service, since our services are deployed in the AWS cloud; in addition, prices per minute of device time start at 17 cents. AWS lets you work with native frameworks as well as with Appium, Calabash, and other test automation frameworks. In addition to automated testing, the service supports manual debugging. And 1,000 minutes "to try" is very tempting. However, the devil, as usual, is in the details. When it comes to testing, AWS has several peculiarities.

As I already mentioned, we use Python 3.7, whereas AWS Device Farm still works with Python 2.7.6 (see the manual), and out of the box it knows nothing about tox. For us this means losing a number of capabilities, having to rework part of the tests for backward compatibility, and building the environment without tox. In addition, the rather strange mechanism for uploading a test package (an archive) also requires uploading an application to test. In our case, when we test our service through a mobile browser, uploading an application is an unnecessary step. However, you can replace the application with a "stub", create a venv with Python 3.7 inside the Python 2.7 environment, and then create an environment with tox in it, which ...

Amazon would not be Amazon if everything rested on old versions. As an alternative (and none of the services below offers anything like it), AWS suggests using AWS Device Farm through the AWS CLI (command line interface) (see the manual). That is, we can connect a device from the cloud to our computer as if it were a real device in remote debug mode, although we first have to replace adb with a patched one (there is no Linux build in the list of binaries, but I'm sure it exists in nature). So, once the AWS CLI is set up, testing takes just a few commands (since we are not going to use the GUI of the AWS Device Farm app).

    import boto3  # AWS SDK: https://pypi.org/project/boto3/

    # Device Farm is served from the us-west-2 region
    aws_client = boto3.client('devicefarm', region_name='us-west-2')

    # Find the ARN (Amazon Resource Name) of the device we need
    device_arn = ''
    for phone in aws_client.list_devices()['devices']:
        if phone['name'] == 'Google Pixel XL':
            device_arn = phone['arn']
            break

    project_arn = aws_client.list_projects()['projects'][0]['arn']

    # Start a remote access session with remote debugging;
    # SSH_KEY is your public SSH key
    response = aws_client.create_remote_access_session(
        projectArn=project_arn,
        deviceArn=device_arn,
        remoteDebugEnabled=True,
        sshPublicKey=SSH_KEY,
    )
    session_arn = response['remoteAccessSession']['arn']

    # `adb devices` should now list the Google Pixel XL from AWS Device Farm
    # ... RUN_TESTS() ...

    # Stop and delete the session when the tests are done
    aws_client.stop_remote_access_session(arn=session_arn)
    aws_client.delete_remote_access_session(arn=session_arn)

If we want to test the application, it can also be uploaded via the AWS SDK.
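As a rough illustration of that upload step, here is a minimal sketch. It assumes the boto3 Device Farm client's `create_upload` and `get_upload` calls, which return a pre-signed URL and a processing status. The helper names `put_file` and `upload_app`, the polling interval, and the file names are illustrative and not taken from the original setup.

```python
import time
import urllib.request


def put_file(url, path):
    """Send a local file to a pre-signed URL with an HTTP PUT."""
    with open(path, "rb") as fh:
        request = urllib.request.Request(
            url,
            data=fh.read(),
            method="PUT",
            headers={"Content-Type": "application/octet-stream"},
        )
    with urllib.request.urlopen(request) as response:
        return response.status


def upload_app(client, project_arn, path, name, upload_type="ANDROID_APP",
               put=put_file, poll_seconds=5, max_polls=60):
    """Register an upload, send the file, and wait until it is processed.

    `client` is expected to behave like boto3.client('devicefarm').
    Returns the ARN of the processed upload.
    """
    upload = client.create_upload(
        projectArn=project_arn,
        name=name,
        type=upload_type,
        contentType="application/octet-stream",
    )["upload"]
    put(upload["url"], path)
    for _ in range(max_polls):
        status = client.get_upload(arn=upload["arn"])["upload"]["status"]
        if status == "SUCCEEDED":
            return upload["arn"]
        if status == "FAILED":
            raise RuntimeError("Device Farm rejected the upload")
        time.sleep(poll_seconds)
    raise TimeoutError("upload was not processed in time")
```

With a real client this would be called as `upload_app(boto3.client('devicefarm', region_name='us-west-2'), project_arn, 'app.apk', 'app.apk')`. Passing the client and the `put` function in as arguments keeps the helper easy to exercise without network access.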

But I have left out a key nuance here, and once again the devil is in the details. The remote debugging option is available only on the Private Devices plan for AWS. First, this feature is available only on request (you need to write to Amazon). Second, the option is available in the us-west-2 region. Third, this option in effect returns us to the scenario where we have a test server with a set of devices (or at least one) connected to it. The advantage is obvious: we get exclusive use of the device, which is safer and faster. On the other hand, we lose the main benefit: the choice and variety of devices.

I liked the service as a whole, but for our team, alas, there are too many “buts” in it.

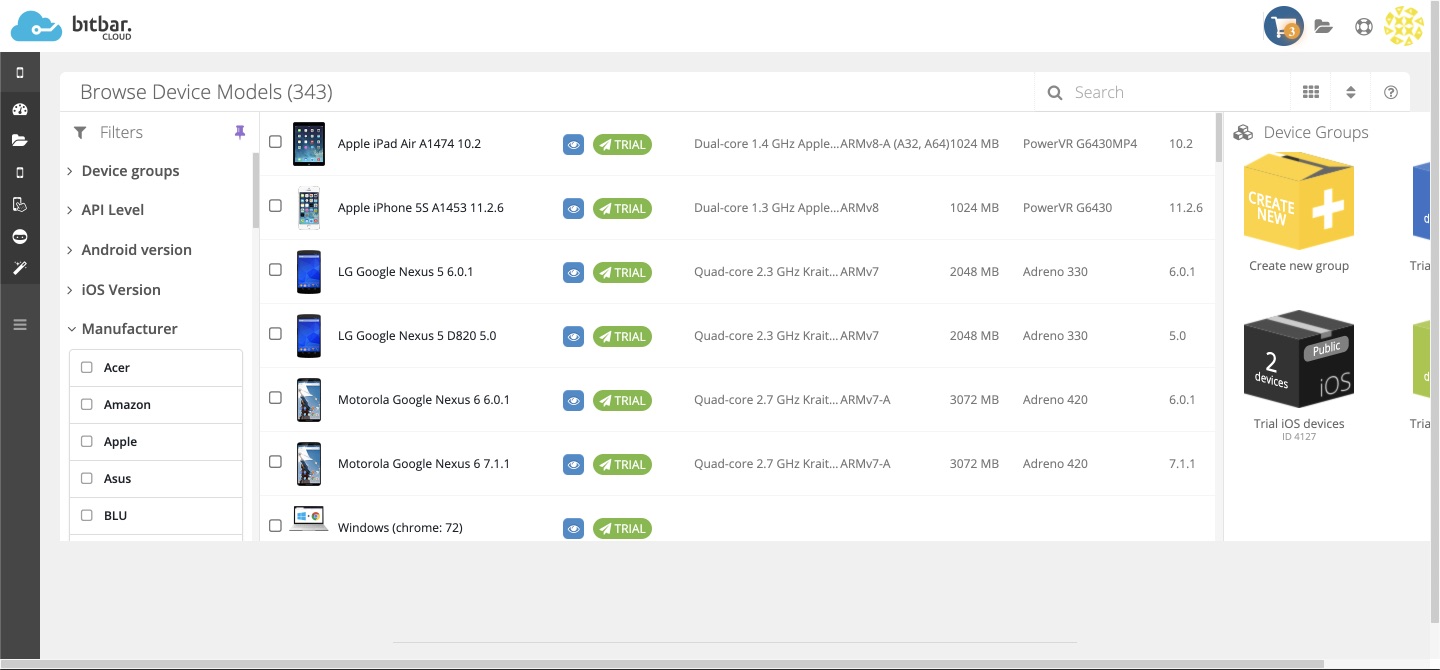

Bitbar

This cloud mobile farm is the first to come up in search engines, and not without reason. Looking ahead, I'll say that this service has the best choice of devices (real devices only) and the best per-test performance compared with the others. Bitbar offers remote manual and automated testing (using Appium and other frameworks) and, if you wish, something similar to the Firebase Test Lab crawler (Robo Test): AI TestBot. The main advantage of Bitbar is an unlimited number of testing threads (that is, you can test your application on hundreds of devices at once) once you have selected the required device pool in advance. If a device is busy, another identical one is selected, or the session is queued. At the end of a test run, a log and a recording of the test are generated, the results are saved, and a notification is sent by email. Integration with various CI / CD tools can also be configured. The service also lets you test desktop browsers at different resolutions and, if desired, set up your own private devices, as in AWS. True, you have to pay for all these features: each minute of testing costs $0.29.

The setup process is as easy as pie:

    from appium import webdriver

    """ ... """

    com_executor = 'https://appium.bitbar.com/wd/hub'
    desired_capabilities = {
        'deviceName': 'Motorola Google Nexus 6',
        'deviceId': 'FA7AN1A00253',
        'newCommandTimeout': 12000,
        'browserName': 'Chrome',  # Chrome, app
        'bitbar_apiKey': 'BITBAR_API_KEY',
        'bitbar_project': 'Software Testing',
        'bitbar_testrun': 'Test run #N',
        'bitbar_device': 'Motorola Google Nexus 6',
        'bitbar_app': '23425235'
    }
    driver = webdriver.Remote(com_executor, desired_capabilities)

    """ ... """
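To take advantage of the parallel sessions mentioned above, the same run can be fanned out over several devices. The following is only a sketch of that idea using the standard library: `run_on_devices` and the `run_one` callback are hypothetical names, and `run_one` stands in for whatever opens a remote session on one device and runs the tests there. In a pytest-based setup a plugin such as pytest-xdist may well be a better fit.

```python
from concurrent.futures import ThreadPoolExecutor


def run_on_devices(devices, run_one, max_workers=8):
    """Run run_one(device) for every device in parallel.

    Returns a dict {device: result}. An exception raised for one device
    is stored as that device's result, so one failing device does not
    hide the results of the others.
    """
    def safe_run(device):
        try:
            return run_one(device)
        except Exception as error:  # report the failure per device
            return error

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(safe_run, devices))
    return dict(zip(devices, results))
```

Threads are sufficient here because each worker spends most of its time waiting on the remote hub rather than computing locally.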

Kobiton

Another service that offers testing on real devices. The choice of devices is more modest than Bitbar's (350+), and device availability is lower too. In its basic functionality it is very similar to Bitbar: it supports manual and automated testing (with Appium only, there is no choice of frameworks here). There is no way to test on desktop browsers. The service also lets you organize testing with an unlimited number of sessions and devices, but you cannot create a device pool. The price is very reasonable, from $0.10 per additional minute of testing, but during the trial period I noticed some instability: the Internet connection on devices often dropped, and once a device hung. Also, if a device is busy or reserved, all your running tests will fail. That is, unlike Bitbar, there is no session queue, although one can be organized with little effort, since Kobiton has its own small API.

The setup is also very simple and, unlike Bitbar, is almost stock Appium.

    import base64
    from time import sleep

    from appium import webdriver
    import requests

    """ ... """

    # Build the Basic auth header from the user name and API key
    base64EncodedBasicAuth = base64.b64encode(
        bytes(f'{USERNAME}:{API_KEY}', 'utf-8')).decode('ascii')
    headers = {
        'Authorization': f'Basic {base64EncodedBasicAuth}',
        'Accept': 'application/json'
    }

    # List the uploaded applications
    reply = requests.get('https://api.kobiton.com/v1/apps', headers=headers)

    # Wait until the device (deviceId) is online and not booked
    status_url = f'https://api.kobiton.com/v1/devices/{deviceId}/status'
    reply = requests.get(status_url, headers=headers).json()
    while not reply['isOnline'] or reply['isBooked']:
        sleep(60)
        reply = requests.get(status_url, headers=headers).json()

    # Connect to the Kobiton hub
    com_executor = f'https://{USERNAME}:{API_KEY}@api.kobiton.com/wd/hub'
    desired_capabilities = {
        'browserName': 'Chrome',
        # The generated session will be visible to you only.
        'sessionName': 'Automation test session',
        'sessionDescription': '',
        'deviceOrientation': 'portrait',
        'captureScreenshots': True,
        'deviceGroup': 'KOBITON',
        # For deviceName, platformVersion Kobiton supports wildcard
        # character *, with 3 formats: *text, text* and *text*
        # If there is no *, Kobiton will match the exact text provided
        'deviceName': 'Pixel 2',
        'platformName': 'Android',
        'platformVersion': '9'
    }
    driver = webdriver.Remote(com_executor, desired_capabilities)

    """ ... """
    # RUN_TESTS()
    """ ... """

    # Terminate the session (sessionId) if it is still running
    requests.delete(
        f'https://api.kobiton.com/v1/sessions/{sessionId}/terminate',
        headers=headers)

    """ ... """
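Since Kobiton has no session queue, a poor man's queue can be built around the device status check. The sketch below factors the waiting loop into a helper with a timeout. The name `wait_until_free` and its parameters are illustrative; the only assumption about the service is that the status reply carries the `isOnline` and `isBooked` fields used in the snippet above.

```python
import time


def wait_until_free(get_status, timeout=1800, interval=60, sleep=time.sleep):
    """Poll get_status() until the device is online and not booked.

    get_status must return a dict with 'isOnline' and 'isBooked' keys.
    Returns True once the device is free, False if the timeout expires.
    """
    waited = 0
    while True:
        status = get_status()
        if status.get("isOnline") and not status.get("isBooked"):
            return True
        if waited >= timeout:
            return False
        sleep(interval)
        waited += interval
```

In the script above, `get_status` could be something like `lambda: requests.get(status_url, headers=headers).json()`. A bounded wait means a device that never frees up fails the run with a clear reason instead of hanging the pipeline.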

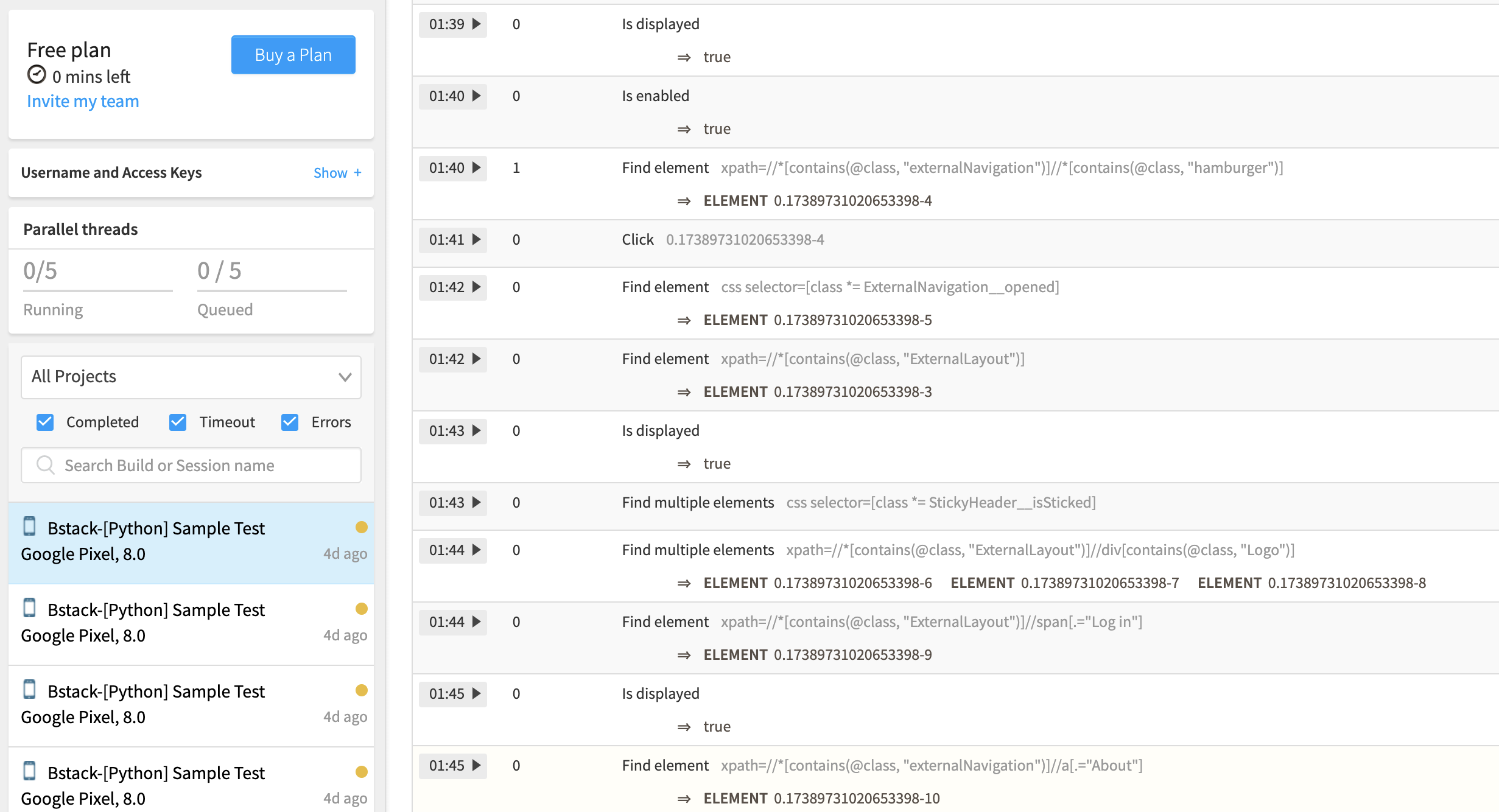

BrowserStack

Good old BrowserStack. A lot has been written about it, and many people use it. It allows testing not only on different browsers but also on different devices, both manually and with Selenium / Appium, and, depending on your needs, in mobile browsers or with your own application. In terms of capabilities it matches the two services above, but unlike them it limits the number of simultaneous sessions. On the other hand, you pay $199 per month and test for an unlimited time. There are plugins for Jenkins, Travis CI, and TeamCity, a rich API of its own, excellent logs, and a large selection of devices and desktop browsers in different configurations. Note that the settings vary depending on what you are testing: browsers (even mobile ones) go through a Selenium hub, and applications through an Appium hub. Moreover, application testing is billed separately. That is, to test both mobile browsers and applications, you pay $199 plus another $159 (the price for one device tested at a time).

    from appium import webdriver

    """ ... """

    com_executor = 'https://USERNAME:API_KEY@hub-cloud.browserstack.com/wd/hub'
    desired_capabilities = {
        'device': 'Google Pixel',
        'deviceName': 'Google Pixel',
        'app': app_name,
        'realMobile': 'true',
        'os_version': '8.0',
        'name': 'Bstack-[Python] Sample Test'
    }
    driver = webdriver.Remote(com_executor, desired_capabilities)

    """ ... """

    from selenium import webdriver

    """ ... """

    com_executor = 'http://USERNAME:API_KEY@hub.browserstack.com:80/wd/hub'
    desired_capabilities = {
        'device': 'Google Pixel',
        'deviceName': 'Google Pixel',
        'browserName': 'Chrome',
        'realMobile': 'true',
        'os_version': '8.0',
        'name': 'Bstack-[Python] Sample Test'
    }
    driver = webdriver.Remote(com_executor, desired_capabilities)

    """ ... """
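Because browser tests and application tests go to different hubs, it can be convenient to keep that choice in one place. The following is a small sketch of such a helper. The function name `browserstack_session` and the `bs://123` application id in the usage note are hypothetical; the two hub URLs and the capability keys are the ones shown in the snippets above.

```python
# Hub URL templates, as used in the two snippets above
BROWSER_HUB = "http://{user}:{key}@hub.browserstack.com:80/wd/hub"
APP_HUB = "https://{user}:{key}@hub-cloud.browserstack.com/wd/hub"


def browserstack_session(user, key, device, os_version,
                         app=None, browser="Chrome"):
    """Return (hub_url, capabilities) for an app or a mobile browser test.

    If `app` is given, the Appium hub is used and the app id is added to
    the capabilities; otherwise the Selenium hub is used with `browser`.
    """
    caps = {
        "device": device,
        "deviceName": device,
        "realMobile": "true",
        "os_version": os_version,
    }
    if app is not None:
        caps["app"] = app
        template = APP_HUB
    else:
        caps["browserName"] = browser
        template = BROWSER_HUB
    return template.format(user=user, key=key), caps
```

For example, `browserstack_session(USERNAME, API_KEY, 'Google Pixel', '8.0', app='bs://123')` yields the Appium hub URL and capabilities, and omitting `app` yields the Selenium ones. Either pair can then be passed to `webdriver.Remote` as in the snippets above.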

Experitest

Another service for testing both mobile devices and desktop browsers, manually and automatically, using Appium, Selenium, and other frameworks. As with BrowserStack, the number of simultaneous sessions is limited, but the prices are slightly different: the service asks $199 per month for mobile application testing and only $39 for cross-browser testing (for one simultaneous session). In addition, as with Bitbar and AWS, you can build your own private lab of devices, which, if you wish, can be mixed with a public cloud of thousands of devices, emulators, and browsers of different versions and platforms (macOS, Windows). Interesting features include extensions for IntelliJ and Eclipse and its own Appium Studio tool, which gives access to advanced device functionality such as interacting with FaceID, voice control, barcode scanning, setting the connection quality, geolocation, and more. Among the drawbacks I would name a strange set of devices for the trial period, draconian billing during the trial period, and the requirement to use a corporate email address (gmail will not work).

    from appium import webdriver

    """ ... """

    com_executor = 'https://cloud.seetest.io/wd/hub'
    desired_capabilities = {
        "deviceName": "iPhone X",
        "accessKey": API_KEY,
        "platformName": "ios",
        "deviceQuery": "@os='ios' and @version='12.1.3' and @category='PHONE'",
    }
    driver = webdriver.Remote(com_executor, desired_capabilities)

    """ ... """

Sauce Labs

One of the oldest cloud testing services. It offers almost 400 different real devices, a wide selection of simulators and emulators (including atypical emulators of Samsung devices), and desktop browsers for different operating systems, including Linux. Automation is available with Appium / Selenium and native frameworks. The main advantage of the service is its extensive collection of configurations, including old operating systems, browsers, and devices. Sauce Labs also limits the number of simultaneous sessions, but here the cheapest option includes not one but two simultaneous sessions. What is unusual is that the plans for real devices and for emulators are different. The cheapest options, with two sessions and 2,000 minutes of testing per month, cost $149 on emulators and $349 on real devices. There is integration with CI / CD and Slack. Unfortunately, I did not manage to try Sauce Labs live because registration did not work, possibly because of my region, but I can't say for sure.

Perfecto

The last cloud testing provider on the list looks most like Experitest visually, but without the advanced functionality. There is a simple scripting language. Strangely, on non-Enterprise plans the service offers no testing on desktop browsers, and you cannot watch tests execute in real time (unless it is manual testing). About one hundred different devices are available for testing. There is integration with Jenkins and, interestingly, with test management tools such as HP ALM, IBM Rational, and TFS. The plans are also very strange, for example 5 hours of testing per month (which works out to as much as $0.33 per minute, making this the most expensive service), though you can buy a package of additional hours. Again, it is odd that there is no per-minute or at least hourly pay-as-you-go billing. As for ease of use, during the trial period only manual testing is available, and only in a shared lab, so launches from different users all land in one heap. So it is impossible to judge the convenience and speed of the service accurately. The service is clearly aimed mainly at large corporate clients, while, at least according to the available information, the capabilities of this provider are the most modest of all those I tested.

Summary Results

Across all the selection criteria the services are very similar; they differ in performance and price (unless there are peculiarities such as those of AWS). Therefore, we will summarize the research data in a table and look at the speed of the services (taking into account a connection via a US VPN) and at the price. For convenience, the price is compared for our average monthly testing time on devices (5 releases per month, 2 hours of testing each on Android and iOS devices = 20 hours). As reference values I use data from my local computer with an emulator, again connecting to it through a VPN in the USA for the purity of the experiment.
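The monthly estimate above can be reproduced with a few lines of arithmetic. The sketch below uses only the prices quoted in this article and works in whole cents to avoid rounding surprises. It is a rough comparison, not the measured results: it ignores free minutes, plan minimums, and session limits, and the function name `monthly_costs` is illustrative.

```python
RELEASES_PER_MONTH = 5
HOURS_PER_PLATFORM = 2
PLATFORMS = 2  # Android and iOS

# 5 releases * 2 hours * 2 platforms = 20 hours = 1200 minutes
minutes = RELEASES_PER_MONTH * HOURS_PER_PLATFORM * PLATFORMS * 60

# Per-minute prices quoted in the article, in cents
PER_MINUTE_CENTS = {
    "AWS Device Farm": 17,
    "Bitbar": 29,
    "Kobiton": 10,
    "Perfecto": 33,
}

# Flat monthly prices quoted in the article, in dollars
FLAT_DOLLARS = {
    "BrowserStack": 199 + 159,        # browsers + applications
    "Experitest": 199 + 39,           # mobile apps + cross-browser
    "Sauce Labs (real devices)": 349,
}


def monthly_costs(total_minutes):
    """Return {service: estimated dollars per month} for the given minutes."""
    costs = {name: cents * total_minutes // 100
             for name, cents in PER_MINUTE_CENTS.items()}
    costs.update(FLAT_DOLLARS)
    return costs


for service, dollars in sorted(monthly_costs(minutes).items(),
                               key=lambda item: item[1]):
    print(f"{service}: ${dollars}")
```

With these figures, per-minute billing at 1,200 minutes comes to $120 for Kobiton, $204 for AWS Device Farm, $348 for Bitbar, and $396 for Perfecto, against the flat $238, $349, and $358 of the subscription-based services.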

Findings

I found it quite interesting to research and choose the right service for our team. In general, there are solutions for every taste and every task, and the services turned out to be very similar in features and implementation. Depending on your tasks, I would recommend the following:

Option A: If verification speed is important to you and you need to check on dozens of different devices at once, your choice is Bitbar.

Option B: If results from reference devices are your priority and configuration testing is secondary (but necessary), your choice is BrowserStack. This is exactly our case, since statistically 90% of all errors come from reference platforms and devices (most often the bugs are common to all reference platforms at once). A further 8% are MS IE errors; with IE support dropped, 2% are MS Edge errors, and 0.5% are errors on specific configurations.

Option C: If you are interested in checking special conditions, such as poor connection quality, geolocation, or Touch / FaceID, then your choice is Experitest.

But in the long run, if your company has a large office and you work full-time, then as a rule setting up your own lab, even a mini one with a server for emulators and 2-3 connected devices, will be cheaper and more convenient than using specialized services.
