How to solve aggregator site problems using residential proxies

E-commerce aggregator sites depend on up-to-date information. Without it, they lose their main advantage: the ability to show the most relevant data in one place.

Aggregators solve this problem with web scraping. A special program, the crawler, visits each site on a given list, parses the information it needs, and uploads it to the aggregator site.
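
The visit-parse-collect loop of such a crawler can be sketched in Python roughly as follows. This is only an illustration under stated assumptions: the `price` class in the markup, the `shop.example` URLs, and the `fetch` callable are hypothetical and do not describe any real site or any particular crawler.

```python
from html.parser import HTMLParser
from typing import Callable, Dict, Iterable, Optional


class PriceParser(HTMLParser):
    """Collects the text of the first element with class="price".

    The class name is an assumption; real sites use their own markup,
    and an exact match on the class attribute is a simplification.
    """

    def __init__(self) -> None:
        super().__init__()
        self._in_price = False
        self.price: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) tuples
        if self.price is None and ("class", "price") in attrs:
            self._in_price = True

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.price = data.strip()
            self._in_price = False


def extract_price(html: str) -> Optional[str]:
    """Return the price text found in the page, or None if there is none."""
    parser = PriceParser()
    parser.feed(html)
    parser.close()
    return parser.price


def crawl(urls: Iterable[str], fetch: Callable[[str], str]) -> Dict[str, Optional[str]]:
    """Visit every URL from the list and collect the parsed prices.

    `fetch` is any function that returns the HTML for a URL; uploading
    the result to the aggregator is left to the caller.
    """
    return {url: extract_price(fetch(url)) for url in urls}
```

Passing `fetch` in as a parameter keeps the parsing logic independent of how pages are downloaded, so the same loop works with a plain HTTP client or with one that routes requests through a proxy.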

The problem is that the owners of the source sites often do not want to grant this access so easily. This is understandable: if an online store's price reaches the aggregator and turns out to be higher than the competitors' prices listed there, the business will lose customers.

Anti-Scraping Methods

Therefore, site owners often oppose scraping, that is, the downloading of their data. They can identify requests sent by crawler bots by IP address. Such software typically runs from so-called server (datacenter) IP addresses, which are easy to identify and block.

Instead of blocking requests, sites often use another method: detected bots are shown inaccurate information. For example, the site may overstate or understate prices or change product descriptions.

Airfare is an often-cited example. Airlines and travel agencies quite often show different results for the same flights depending on the IP address. A real case: searching for a flight from Miami to London on the same date from an IP address in Eastern Europe and from one in Asia returns different results.

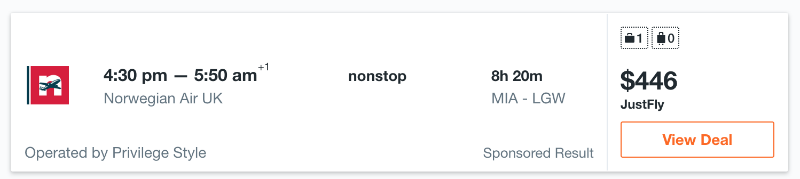

In the case of an IP address in Eastern Europe, the price looks like this:

And for an IP address in Asia, like this:

As you can see, the price for the same flight differs significantly: the difference is $76, which is a lot. Nothing is worse for an aggregator site: if it presents incorrect information, users will stop using it. Likewise, if a product has one price on the aggregator and a different one after the user clicks through to the seller's website, the project's reputation suffers.

Solution: use residential proxies

Residential proxies help avoid these problems when scraping data for aggregation. Server IPs are provided by hosting providers. Identifying an address as belonging to a particular provider's pool is quite simple: each IP address is associated with an autonomous system number (ASN) that identifies the network operator.

Many services analyze ASNs. They are often integrated with anti-bot systems that block crawlers or manipulate the data returned in response to their requests.
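
To illustrate how an anti-bot system might act on this kind of lookup, here is a minimal Python sketch. The table below is entirely hypothetical: it uses IP ranges and AS numbers reserved for documentation, whereas a real system would query an ASN database or a third-party service. The function names are also assumptions made for this example.

```python
import ipaddress

# Hypothetical lookup table: (network, ASN, network type).
# The ranges and ASNs are reserved for documentation and stand in
# for the data a real ASN database would provide.
ASN_TABLE = [
    (ipaddress.ip_network("192.0.2.0/24"), 64496, "hosting"),
    (ipaddress.ip_network("198.51.100.0/24"), 64497, "residential"),
]


def classify_ip(ip: str) -> str:
    """Return the network type for an address, or "unknown" if not listed."""
    addr = ipaddress.ip_address(ip)
    for network, _asn, kind in ASN_TABLE:
        if addr in network:
            return kind
    return "unknown"


def should_block(ip: str) -> bool:
    """Treat requests from hosting-provider networks as likely bots."""
    return classify_ip(ip) == "hosting"
```

With this table, `should_block("192.0.2.10")` is true because the address falls in the range marked as hosting, while an address from the range marked as residential passes through.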

Residential IP addresses help circumvent such systems. Internet service providers issue these addresses to home users, and they are marked accordingly in all related databases. Residential proxy services let you route requests through such addresses. Infatica is one such service.

Requests that aggregator crawlers send from residential IPs look like they come from regular users in a specific region. Nobody blocks ordinary visitors: for online stores, they are potential customers.

As a result, rotating proxies from Infatica let aggregator sites receive accurate data and avoid blocks and parsing difficulties.
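
On the crawler side, proxy rotation can be as simple as cycling through a list of proxy endpoints and sending each request through the next one. The sketch below uses only the Python standard library. The proxy URLs are placeholders, and the helper names are assumptions for illustration; this is not Infatica's API, and a real provider will document its own endpoints and authentication.

```python
import itertools
import urllib.request
from typing import Iterator, List

# Placeholder endpoints; a real list would come from the proxy provider.
PROXIES = [
    "http://proxy1.example:8080",
    "http://proxy2.example:8080",
    "http://proxy3.example:8080",
]


def proxy_rotation(proxies: List[str]) -> Iterator[str]:
    """Return an endless round-robin iterator over the proxy URLs."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    return itertools.cycle(proxies)


def build_opener_for(proxy_url: str) -> urllib.request.OpenerDirector:
    """Build an opener that sends HTTP and HTTPS traffic through one proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch(url: str, rotation: Iterator[str], timeout: float = 10.0) -> bytes:
    """Download a page through the next proxy in the rotation."""
    opener = build_opener_for(next(rotation))
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

Round-robin is the simplest rotation policy; a production crawler would likely also retry failed requests through a different proxy and choose endpoints by region.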
