Conversational BERT: Teaching Neural Networks the Language of Social Media

One of the main events in computational linguistics and machine learning in 2018 was the release of BERT by Google AI. The BERT paper was recognized as the best paper of the year by the North American Chapter of the Association for Computational Linguistics (NAACL). In this article we talk about this language model and its capabilities.

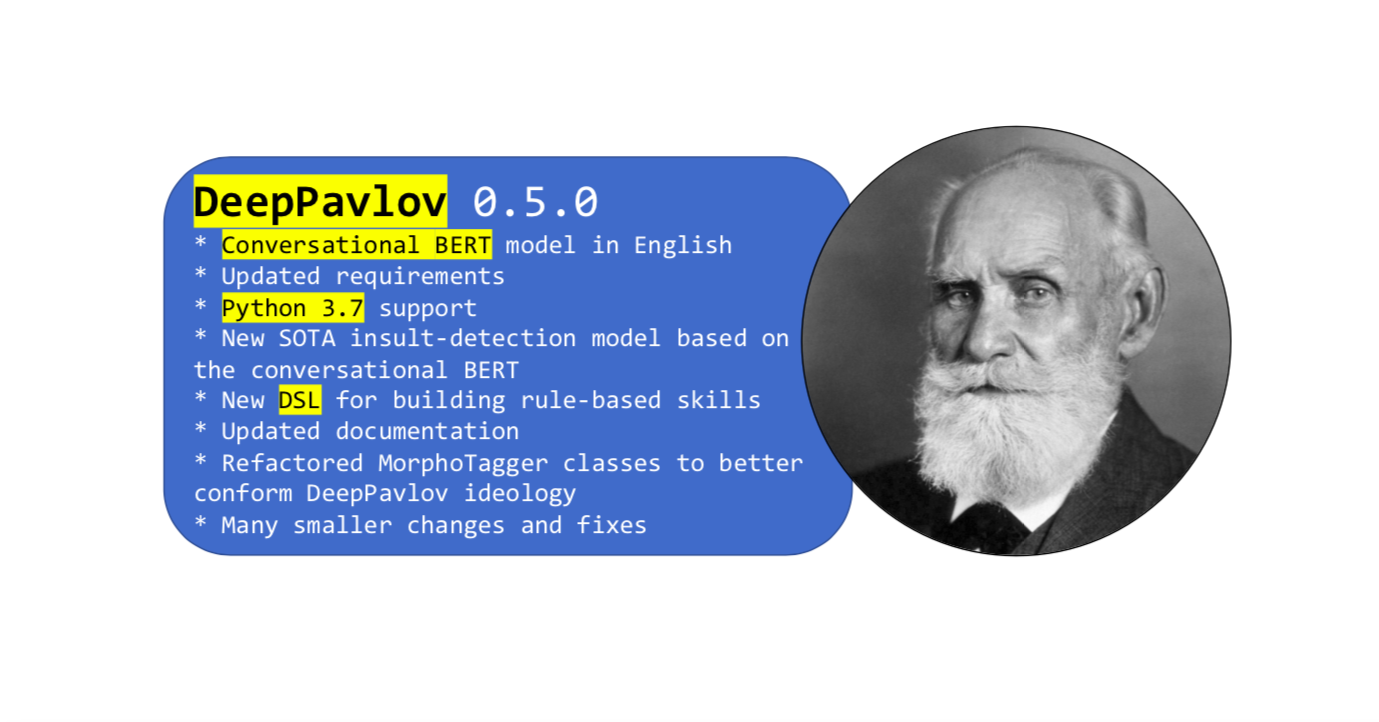

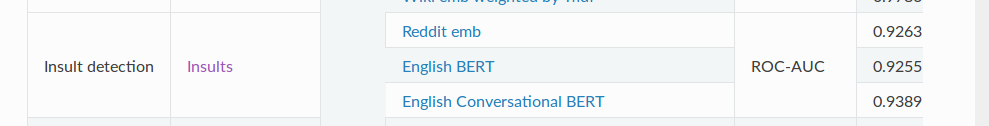

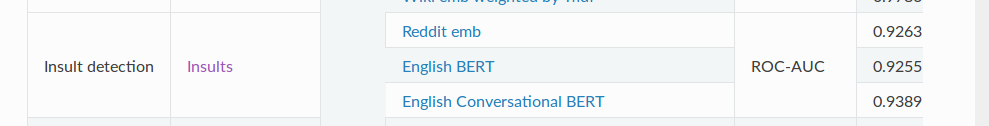

For those who have not heard of it: BERT is a neural network based on pre-training contextual word representations. It uses a bidirectional language model and analyzes whole sentences, so each word is represented using both the words that come before it and the words that come after it. This approach outperformed the previous state of the art by a wide margin on a broad range of natural language processing (NLP) tasks, but it requires considerable computational power.
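To make the bidirectional idea more concrete: BERT is pre-trained with a masked language modeling objective, in which some input tokens are hidden and the network has to recover them from the words on both sides. The sketch below covers only the data-preparation step, using the masking scheme from the original BERT paper (about 15% of tokens selected; of those, 80% replaced by `[MASK]`, 10% by a random token, 10% left unchanged). The function name `mask_tokens`, the whitespace tokens and the tiny vocabulary are illustrative assumptions; this is not DeepPavlov's or Google's training code.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Prepare one masked-language-model example (illustrative sketch).

    Returns (inputs, labels): labels[i] is the original token at positions
    selected for prediction and None elsewhere (no loss at those positions).
    """
    rng = random.Random(seed)  # seeded so the example is reproducible
    inputs = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected for prediction
        labels[i] = tok
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK  # 80%: hide the token behind [MASK]
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: swap in a random token
        # remaining 10%: leave the original token in place
    return inputs, labels


if __name__ == "__main__":
    sentence = "the weather is great today so we went outside".split()
    inputs, labels = mask_tokens(sentence, vocab=sentence, mask_prob=0.15)
    print(inputs)
    print(labels)
```

Because a masked position keeps its neighbours on both the left and the right, predicting it forces the model to use context from both directions, unlike a purely left-to-right language model.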

From formal to conversational speech

BERT was originally trained on Wikipedia in 104 languages (the multilingual model). In addition to the multilingual version, Google released English and Chinese BERT models.

In our Neural Systems and Deep Learning Lab, we used BERT to improve the NLP components of DeepPavlov, an open-source conversational AI library for building virtual dialogue assistants and analyzing text, built on TensorFlow and Keras. We trained a BERT model on Russian Wikipedia, RuBERT, which significantly improved the quality of our Russian-language models. We also integrated BERT into solutions for three popular NLP tasks: text classification, sequence tagging, and question answering. You can read more about DeepPavlov's BERT models in our recent blog posts:

- 19 entities for 104 languages: A new era of NER with the DeepPavlov multilingual BERT

- The BERT-based text classification models of DeepPavlov

- BERT-based Cross-Lingual Question Answering with DeepPavlov
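For sequence tagging tasks such as named entity recognition, BERT operates on subtokens while labels are usually given per word. A common convention, used in the NER experiments of the original BERT paper, is to attach each word's tag to its first subtoken and exclude the remaining pieces from the loss. The sketch below assumes the words have already been split into subtokens; the `IGNORE` marker and the `align_tags` function are hypothetical, and DeepPavlov's own implementation may differ.

```python
IGNORE = None  # hypothetical marker: subtoken positions excluded from the loss


def align_tags(word_pieces, word_tags):
    """Spread word-level tags over subtokens, tagging only each word's first piece.

    word_pieces: one list of subtokens per word, e.g. [["Mc", "##Ken", "##zie"], ...]
    word_tags:   one tag per word, e.g. BIO tags such as "B-PER" or "O"
    """
    if len(word_pieces) != len(word_tags):
        raise ValueError("expected exactly one tag per word")
    tokens, tags = [], []
    for pieces, tag in zip(word_pieces, word_tags):
        for j, piece in enumerate(pieces):
            tokens.append(piece)
            # The first subtoken carries the word's tag; continuations are ignored.
            tags.append(tag if j == 0 else IGNORE)
    return tokens, tags


if __name__ == "__main__":
    words = [["John"], ["Mc", "##Ken", "##zie"], ["lives"], ["in"], ["Moscow"]]
    tags = ["B-PER", "I-PER", "O", "O", "B-LOC"]
    for tok, tag in zip(*align_tags(words, tags)):
        print(tok, tag)
```

At prediction time the same alignment is read in reverse: the tag predicted for a word's first subtoken is taken as the tag for the whole word.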

DeepPavlov has recently published a new release of the library.

The key feature of this update is a BERT model trained on English social media text. The formal language of Wikipedia differs from everyday colloquial speech, and some tasks require a model that understands the latter.

English Conversational BERT was trained on open data from Twitter, Reddit, DailyDialogues, OpenSubtitles, debates, blogs, and Facebook news comments. This data was used to build the vocabulary of English subtokens, and the English version of BERT served as the initialization for English Conversational BERT. As a result, Conversational BERT achieved state-of-the-art results on tasks involving social media data.
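BERT does not read whole words; it splits them into subtokens from a fixed vocabulary. Building that vocabulary from conversational text lets frequent informal words and spellings be represented with fewer, more meaningful pieces. Below is a minimal sketch of the greedy longest-match-first splitting used by BERT's WordPiece tokenizer, applied to a single word. The toy vocabulary is hypothetical, and real tokenizers also handle punctuation, casing and very long words, which this sketch omits.

```python
def wordpiece(word, vocab, unk="[UNK]"):
    """Split one word into subtokens by greedy longest-match-first.

    Pieces that continue a word carry the "##" prefix, as in BERT vocabularies.
    """
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, shrinking until it is in vocab.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk]  # no segmentation found: the whole word becomes unknown
        pieces.append(match)
        start = end
    return pieces


if __name__ == "__main__":
    # Hypothetical toy vocabulary containing a few informal pieces.
    vocab = {"lol", "##z", "un", "##believ", "##able", "gonna"}
    for w in ["lolz", "unbelievable", "gonna", "brb"]:
        print(w, "->", wordpiece(w, vocab))
```

With this toy vocabulary, "lolz" splits into `lol` and `##z`, "gonna" stays whole, and "brb" has no valid segmentation, so it maps to `[UNK]`; adding conversational pieces to the vocabulary is what avoids such unknown tokens.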

How to use Conversational BERT in DeepPavlov

You can try the new insult detection model based on English Conversational BERT, or any other BERT-based model, by following the short guide in the documentation.

The state-of-the-art insult detection model solves a binary classification task: deciding whether a text is abusive. On the ROC-AUC metric (a measure of classifier performance across all decision thresholds), this model scores 93.89 on the data, compared with 92.55 for the standard BERT.
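ROC-AUC can be computed by sweeping the decision threshold: each threshold yields a true positive rate and a false positive rate, these points trace the ROC curve, and the metric is the area under it (1.0 for a perfect ranking, 0.5 for random guessing). The figures above are this area multiplied by 100. A minimal standard-library sketch, using hypothetical toy scores rather than the model's actual outputs:

```python
def roc_auc(labels, scores):
    """ROC-AUC by sweeping the decision threshold over every distinct score.

    labels: 1 for the positive class (e.g. insult), 0 otherwise.
    scores: model scores, higher meaning "more likely positive".
    """
    pos = sum(1 for y in labels if y == 1)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need at least one positive and one negative example")
    # Sort by descending score; lowering the threshold past each distinct
    # score adds one new (FPR, TPR) point to the ROC curve.
    pairs = sorted(zip(scores, labels), key=lambda p: -p[0])
    tp = fp = 0
    prev_tpr = prev_fpr = 0.0
    auc = 0.0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Examples tied at this score cross the threshold together.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        tpr, fpr = tp / pos, fp / neg
        auc += (fpr - prev_fpr) * (tpr + prev_tpr) / 2  # trapezoid slice
        prev_tpr, prev_fpr = tpr, fpr
    return auc


if __name__ == "__main__":
    print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

Because the metric integrates over all thresholds, it compares how well two models rank insulting texts above non-insulting ones without committing to a single cut-off.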

And finally

That's about all we wanted to tell you about our Conversational BERT. Don't forget that DeepPavlov has a forum where you can ask questions about the library and its models. Stay tuned!
