Fibre Channel: the lifeblood of storage connectivity in the data center

We all know that the amount of data continues to grow exponentially, and that the data itself is the new currency that enterprises are counting on. The ability to respond to this data in a timely manner can affect the competitiveness of a business in the market. Therefore, fast and reliable access to data is of paramount importance, and the underlying infrastructure that connects the user to storage systems is more important than ever before.

In a modern data center, architects can choose from many different connection options, but Fibre Channel has been and will remain the lifeblood of connectivity to shared storage. That is because Fibre Channel is the most secure, reliable, cost-effective and scalable protocol for connecting servers and storage, as well as the only protocol designed specifically to carry storage traffic.

Fibre Channel has been around for several decades and is still the primary choice for connecting to shared storage in the data center. Fibre Channel creates a dedicated storage network, and SCSI storage commands are sent between the server and storage devices at bandwidths of up to 28.05 Gb/s (32GFC) and with IOPS in excess of one million. Because Fibre Channel was designed for storage traffic from the start, it is very reliable and provides high-performance connectivity. The HPE StoreFabric 16GFC and 32GFC adapters and switching infrastructure provide the bandwidth, I/O operations per second, and low latency needed in data centers today and for years to come.

Advances in Fibre Channel technology keep it ahead of the curve when it comes to connectivity.

For example, the HPE StoreFabric 16GFC and 32GFC infrastructure is already capable of carrying NVMe storage traffic, even before native NVMe storage arrays become mainstream. Other advanced features include improved diagnostics, simplified deployment and orchestration, and reliability enhancements such as T10 Protection Information (T10 PI), dual-port isolation, and more.

Another popular storage connection option is iSCSI. With iSCSI, SCSI commands are carried over a standard TCP/IP network, which works well for low-end to mid-range systems where performance and security are not critical. A common misconception about Fibre Channel is that, because it uses a dedicated storage network, it is more expensive than iSCSI. Although iSCSI can run on the same Ethernet network as all regular network traffic, delivering the performance most customers require from their storage systems means running iSCSI on a segmented or dedicated Ethernet network isolated from normal network traffic. That means either complex VLAN configurations and security policies or a fully dedicated Ethernet network, just like Fibre Channel.

The only real cost difference between Fibre Channel and iSCSI arises when direct-attach copper (DAC) cables are used in iSCSI implementations. DAC cables, however, are limited to a distance of 5 meters.

That might work fine for small and medium-sized customers with only one storage array, but DAC cables do not work well in a large-scale data center.

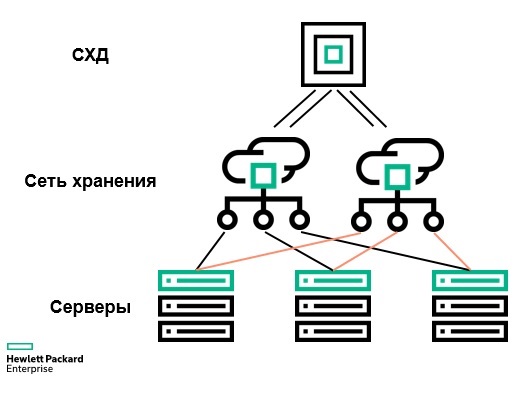

When you look at the topology of a storage area network (SAN), best practices are identical for iSCSI and Fibre Channel. To ensure fault tolerance and eliminate downtime, a SAN design has two identical, independent network paths between servers and storage.
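The dual-path practice described above can be sketched as a small connectivity check. This is only an illustrative model, not a vendor tool: the fabric names, the single-switch-per-fabric layout, and the helper functions `build_san`, `reachable`, and `survives_single_fabric_failure` are all assumptions made for the example.

```python
from collections import deque

def build_san(servers, arrays, fabrics=("fabric_a", "fabric_b")):
    """Hypothetical SAN model: one switch per fabric, with every server and
    array attached to every fabric. Returns {fabric_name: adjacency dict}."""
    san = {}
    for fabric in fabrics:
        switch = f"{fabric}_switch"
        links = {switch: set()}
        for node in list(servers) + list(arrays):
            links[switch].add(node)
            links.setdefault(node, set()).add(switch)
        san[fabric] = links
    return san

def reachable(links, start, goal):
    """Breadth-first search over one fabric's adjacency dict."""
    if start not in links:
        return False
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for neighbor in links.get(node, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return False

def survives_single_fabric_failure(san, servers, arrays):
    """True if every server still reaches every array after any one fabric fails."""
    for failed in san:
        surviving = [links for name, links in san.items() if name != failed]
        for server in servers:
            for array in arrays:
                if not any(reachable(links, server, array) for links in surviving):
                    return False
    return True

servers = ["server1", "server2"]
arrays = ["array1"]
dual = build_san(servers, arrays)
single = build_san(servers, arrays, fabrics=("fabric_a",))
dual_ok = survives_single_fabric_failure(dual, servers, arrays)
single_ok = survives_single_fabric_failure(single, servers, arrays)
print(dual_ok, single_ok)
```

In this toy model the two-fabric design keeps every server connected to storage when either fabric is lost, while the single-fabric design does not. The same reasoning applies whether the fabrics are iSCSI or Fibre Channel.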

However, one significant difference is that Fibre Channel networks are less vulnerable to security breaches than Ethernet networks. When was the last time you heard about someone hacking a Fibre Channel network? Never? What about an Ethernet network?

Security is one of the main reasons that Fibre Channel will remain the backbone of the data center for many years.

Looking ahead, we expect the SCSI command set to be replaced by Non-Volatile Memory Express (NVMe) commands. NVMe is an optimized command set designed for SSDs and storage class memory, and it is much more efficient than SCSI. In addition, NVMe is a multi-queue architecture with up to 64K I/O queues, each supporting up to 64K commands. Compared to SCSI, with its single queue of 64 commands, NVMe can provide significantly higher performance.
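To put the queue comparison above into numbers, here is a back-of-the-envelope calculation. It reads the queue figures as 64K (65,536) queues and 64K commands per queue, and takes the single 64-command SCSI queue from the text as given. The result is a theoretical ceiling on queued commands, not what any particular device or driver exposes.

```python
# Figures taken from the paragraph above; "64K" is read as 64 * 1024.
NVME_MAX_QUEUES = 64 * 1024
NVME_MAX_COMMANDS_PER_QUEUE = 64 * 1024
SCSI_QUEUES = 1
SCSI_COMMANDS_PER_QUEUE = 64

def max_outstanding(queues: int, commands_per_queue: int) -> int:
    """Upper bound on the number of commands that can be queued at once."""
    return queues * commands_per_queue

nvme_limit = max_outstanding(NVME_MAX_QUEUES, NVME_MAX_COMMANDS_PER_QUEUE)
scsi_limit = max_outstanding(SCSI_QUEUES, SCSI_COMMANDS_PER_QUEUE)

print(f"NVMe ceiling: {nvme_limit:,} commands")
print(f"SCSI ceiling: {scsi_limit:,} commands")
print(f"Ratio: {nvme_limit // scsi_limit:,}x")
```

The practical benefit comes less from the raw ceiling than from parallelism: with many independent queues, each CPU core can submit I/O without contending for one shared queue.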

The modern HPE StoreFabric 16GFC and 32GFC infrastructure, which supports SCSI commands, can also carry NVMe commands over the SAN, or fabric, as it is called. With Ethernet, customers will need to implement low-latency RDMA over Converged Ethernet (RoCE) in order to take full advantage of NVMe. However, this approach requires a sophisticated lossless Ethernet implementation using Data Center Bridging (DCB) and Priority Flow Control (PFC). The network complexity of NVMe over Ethernet will be a huge barrier for most customers, especially when the Fibre Channel SAN deployed today works well with tomorrow's NVMe storage.

The bottom line is that Fibre Channel will remain the lifeblood of connectivity between servers and shared storage.
