KVM pseudo-VDI with disposable virtual machines in bash

Who is this article for?

This article may interest system administrators who have been tasked with building a service of "disposable" workstations.

Prologue

The IT support department of a young, fast-growing company with a small regional branch network was asked to set up "self-service stations" for the company's external clients. These stations were to be used for registering on the company's external portals, uploading data from external devices, and working with government portals.

An important factor was that most of the required software is tailored to MS Windows (for example, "Declaration"), and despite the shift toward open formats, MS Office remains the dominant standard for exchanging electronic documents. So we could not avoid MS Windows in this project.

The main problem was that data could accumulate from user sessions and leak to third parties. This has already backfired on the MFC (the state multifunctional public service centers). But unlike the MFC, which is a quasi-governmental body (a state autonomous institution), a non-state organization would be punished far more severely for such a lapse. The next critical issue was the requirement to work with external storage media, which will inevitably carry plenty of malware. Malware arriving from the Internet was considered less likely, because Internet access was restricted to a whitelist of addresses. Employees of other departments joined in, adding their own requirements and wishes, and the final requirements looked like this:

Information security requirements

- After use, all user data (including temporary files and registry keys) must be deleted.

- All processes started by the user must be terminated when the session ends.

- Internet access only through a whitelist of addresses.

- Restrictions on running third-party code.

- If a session is idle for more than 5 minutes, it must end automatically and the station must perform a cleanup.

Customer requirements

- No more than 4 client stations per branch.

- Minimal waiting time until the system is ready, from the moment the user "sits down in the chair" to the start of work with the client software.

- The ability to connect peripheral devices (scanners, flash drives) directly at the "self-service station."

Customer wishes

- Display of advertising materials (pictures) while the station is idle.

The pains of creation

After experimenting extensively with Windows LiveCDs, we unanimously concluded that the resulting solutions failed at least 3 critical requirements. They either booted slowly, were not truly "live", or were extremely painful to customize. Perhaps we searched poorly; if you can recommend a suitable set of tools, I will be grateful.

Next, we looked at VDI, but for this task most solutions are either too expensive or require constant attention. We wanted a simple tool with a minimum of magic, where most problems could be solved by simply rebooting or restarting a service. Fortunately, the branches had low-end server hardware left over from a decommissioned service, which we could use as the technical base.

What did we end up with? I can't tell you, because of an NDA. But along the way we developed an interesting scheme that performed well in lab tests, although it never went into production.

A few disclaimers: the author does not claim that the proposed solution fully solves every task, let alone does so effortlessly and cheerfully. The author agrees in advance that "Sein Englishe sprache is zehr schlecht." Since the solution is no longer being developed, don't count on bug fixes or feature changes; everything is in your hands. The author assumes that you are at least somewhat familiar with KVM, have read an overview of the Spice protocol, and have worked a bit with CentOS or another GNU/Linux distribution.

In this article, I would like to walk through the backbone of the solution: how the client and server interact, and how virtual machines move through their lifecycle. If readers find the article interesting, I will describe in a follow-up how we built live images for Fedora-based thin clients and how we tuned the virtual machines and KVM servers for performance and security.

If you take colored paper,

Paints, brushes and glue,

And a little bit more dexterity ...

You can make a hundred rubles!

Scheme and description of the test bench

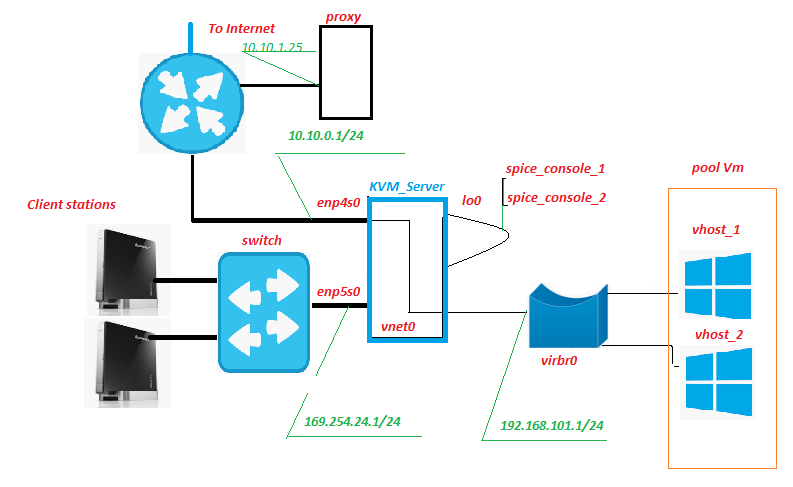

All equipment sits inside the branch network; only the Internet uplink leaves it. There was already a proxy server on site, nothing out of the ordinary, and among other things it is this proxy that filters traffic from the virtual machines (hereafter VMs). Nothing prevents you from running this service on the KVM server itself; just watch how its load on the disk subsystem changes.

Client Station: these are the actual "self-service stations", the front end of our service. They are Lenovo IdeaCentre nettops. Why this unit? It handles almost everything, and we were especially pleased by the many USB ports and the card readers on the front panel. In our scheme, the card reader holds an SD card with hardware write protection, carrying a modified Fedora 28 live image. A monitor, keyboard, and mouse are, of course, connected to the nettop.

Switch: an unremarkable layer-2 hardware switch that sits in the server room blinking its lights. It is connected to no network other than the "self-service station" network.

KVM_Server is the core of the scheme. In bench tests, a Core 2 Quad Q9650 with 8 GB of RAM comfortably ran 3 Windows 10 virtual machines. Disk subsystem: an Adaptec 3405 with two SSDs in RAID 1. In field trials, a Xeon 1220 with the more capable LSI 9260 and SSDs easily handled 5-6 VMs. Since the servers would come from the retired service, capital costs would be low. The KVM virtualization system with the pool_Vm virtual machine pool is deployed on this server (or servers).

Vm is a virtual machine, the backend of our service. This is where the user actually works.

Enp5s0 is the network interface facing the "self-service station" network. dhcpd, ntpd, and httpd serve clients on it, and xinetd listens on it for the "signal" port.

Lo0 is the loopback pseudo-interface. Standard.

Spice_console is a very interesting thing. Unlike classic RDP, the KVM + Spice combination adds an extra entity: the virtual machine console port. By connecting to this TCP port we get the Vm console without having to reach the Vm through its network interface; the server handles all signal exchange with the Vm. The closest functional analogue is IP-KVM. That is, the image of the VM's monitor is sent to this port, mouse movements are received on it, and (most importantly) the Spice protocol lets USB devices be redirected seamlessly to the virtual machine, as if they were plugged into the Vm itself. Tested with flash drives, scanners, and webcams.
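To find which console port a given VM uses, libvirt's `virsh domdisplay <domain>` prints a Spice URI such as `spice://127.0.0.1:5921`, and `remote-viewer` accepts the same URI form. The helper below is a hypothetical sketch (not part of the original scripts) that extracts the port number from such a URI, for example to check it with netstat:

```shell
#!/bin/sh
# Hypothetical helper: extract the TCP port from a Spice URI as printed by
# `virsh domdisplay <domain>`, e.g. spice://127.0.0.1:5921
spice_port_from_uri() {
    uri=$1
    port=${uri##*:}          # keep everything after the last colon
    port=${port%%[!0-9]*}    # drop anything after the digits (e.g. ?tls-port=...)
    printf '%s\n' "$port"
}
```

For example, `spice_port_from_uri "$(virsh domdisplay win10_2-5921)"` would print the console port of that clone (the domain name here is illustrative).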

Vnet0, virbr0, and the VMs' virtual network cards form the virtual machine network.

How it works

On the Client Station side

The client station boots into a graphical session from the modified Fedora 28 live image and obtains an IP address via DHCP from the 169.254.24.0/24 address space. During boot, firewall rules are created that allow connections to the server's "signal" and "spice" ports. When boot completes, the station waits for the Client user to log in. After login, the openbox window manager starts and its autostart script runs on behalf of the logged-in user. Among other things, the autostart script launches remote.sh.

$HOME/.config/openbox/scripts/remote.sh

```sh
#!/bin/sh
# Read a "key=value" setting from /etc/client.conf
conf_get() {
    /usr/bin/grep "^$1=" /etc/client.conf | /usr/bin/cut -d "=" -f2
}

server_ip=$(conf_get server_ip)
vdi_signal_port=$(conf_get vdi_signal_port)
vdi_spice_port=$(conf_get vdi_spice_port)
animation_folder=$(conf_get animation_folder)
process=/usr/bin/remote-viewer

while true
do
    # Start the waiting-screen slide show unless it is already running
    if ! /usr/bin/pidof feh > /dev/null
    then
        /usr/bin/echo "$animation_folder"
        /usr/bin/feh -N -x -D1 "$animation_folder" &
    fi
    # Ask the server for a VM and read its replies line by line
    /usr/bin/nc -i 1 "$server_ip" "$vdi_signal_port" | while read -r line
    do
        if /usr/bin/echo "$line" | /usr/bin/grep -q "RULE ADDED, CONNECT NOW!"
        then
            /usr/bin/killall feh
            # remote-viewer runs in the foreground, so the script blocks here
            # until the Spice session ends (kiosk mode quits on disconnect)
            "$process" "spice://$server_ip:$vdi_spice_port" \
                --spice-disable-audio \
                --spice-disable-effects=animation \
                --spice-preferred-compression=auto-glz \
                -k --kiosk-quit=on-disconnect
            # Session over: kill everything owned by the user, which
            # restarts lightdm and returns to the login screen
            /usr/bin/killall -u "$USER"
            exit
        else
            /usr/bin/echo "$line" >> /var/log/remote.log
        fi
    done
done
```

/etc/client.conf

```
server_ip=169.254.24.1
vdi_signal_port=5905
vdi_spice_port=5906
animation_folder=/usr/share/backgrounds/animation
background_folder=/usr/share/backgrounds2/fedora-workstation
```

Description of the variables of the client.conf file

server_ip - address of KVM_Server

vdi_signal_port - the KVM_Server port on which xinetd listens

vdi_spice_port - the KVM_Server network port from which the remote-viewer client's connection is redirected to the Spice port allocated to the Vm (details below)

animation_folder - the folder with the images for the animation shown while waiting for a connection

background_folder - the folder with the images for presentations shown in standby mode. More on animation in the next part of the article.
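All the scripts in this article read their settings from simple `key=value` files with a `grep key | cut -d "=" -f2` pipeline. A slightly stricter variant is sketched below as a hypothetical helper (not the author's code). It anchors the key at the start of the line and requires the `=`, so a key that is a prefix of another key cannot match both, and it keeps any `=` characters inside the value:

```shell
#!/bin/sh
# Hypothetical helper: print the value of KEY from a key=value FILE.
# Matches "KEY=" only at the start of a line and returns the first hit.
conf_get() {
    key=$1
    file=$2
    grep "^$key=" "$file" | head -n 1 | cut -d "=" -f2-
}
```

Usage: `server_ip=$(conf_get server_ip /etc/client.conf)`.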

The remote.sh script reads its settings from /etc/client.conf, uses nc to connect to the KVM server's "vdi_signal_port", and reads the data stream the server sends back, waiting for the string "RULE ADDED, CONNECT NOW!". When that line arrives, the script starts remote-viewer in kiosk mode, connecting to the server's "vdi_spice_port". The script then blocks until remote-viewer exits.
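The client side of this "signal" handshake reduces to "read lines until the go-ahead string appears". A minimal sketch, with a hypothetical function name, that reads from standard input (in remote.sh this would be the nc session) and reports through its exit status whether the string arrived before the stream ended:

```shell
#!/bin/sh
# Hypothetical sketch of the client side of the handshake: read lines from
# stdin until the server's go-ahead string arrives.
# Returns 0 if it arrived, 1 if the stream ended without it.
wait_for_go_signal() {
    while IFS= read -r line
    do
        case "$line" in
            *"RULE ADDED, CONNECT NOW!"*) return 0 ;;
        esac
    done
    return 1
}
```

In remote.sh this would be used as `nc -i 1 "$server_ip" "$vdi_signal_port" | wait_for_go_signal && remote-viewer ...`. A `case` pattern avoids spawning a grep for every line.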

Because of the redirect on the server side, remote-viewer connecting to "vdi_spice_port" actually lands on the "spice_console" port on the lo0 interface, i.e. on the virtual machine's console, where the user works directly. While waiting for the connection, the user is shown an animation: a slide show of JPEG files from the directory set by the animation_folder variable in the configuration file.

If the connection to the virtual machine's "spice_console" port is lost, which signals that the virtual machine has shut down or rebooted (that is, the user session has actually ended), all processes running as the logged-in user are terminated. This makes lightdm restart and return to the login screen.

On the KVM Server side

xinetd waits for connections on the "signal" port of the enp5s0 interface. When a client connects, xinetd runs the vm_manager.sh script without any arguments and sends the script's output to the Client Station's nc session.

/etc/xinetd.d/test-server

```
# If vdi_signal is not listed in /etc/services, xinetd also needs: type = UNLISTED
service vdi_signal
{
    port        = 5905
    socket_type = stream
    protocol    = tcp
    wait        = no
    user        = root
    server      = /home/admin/scripts_vdi_new/vm_manager.sh
}
```

/home/admin/scripts_vdi_new/vm_manager.sh

```sh
#!/usr/bin/sh
#<SET LOCAL VARIABLES FOR SCRIPT>#
# Read a "key=value" setting from /etc/vm_manager.conf
conf_get() {
    /usr/bin/grep "^$1" /etc/vm_manager.conf | /usr/bin/cut -d "=" -f2
}
SRV_SCRIPTS_DIR=$(conf_get srv_scripts_dir)
SRV_POOL_SIZE=$(conf_get srv_pool_size)
SRV_START_PORT_POOL=$(conf_get srv_start_port_pool)
SRV_TMP_DIR=$(conf_get srv_tmp_dir)
/usr/bin/echo "SRV_SCRIPTS_DIR=$SRV_SCRIPTS_DIR SRV_POOL_SIZE=$SRV_POOL_SIZE"
/usr/bin/echo "SRV_START_PORT_POOL=$SRV_START_PORT_POOL SRV_TMP_DIR=$SRV_TMP_DIR"
# vm_connect.sh, vm_delete.sh and vm_create.sh read these from the environment
export SRV_SCRIPTS_DIR SRV_POOL_SIZE SRV_START_PORT_POOL SRV_TMP_DIR
date=$(/usr/bin/date)
#</SET LOCAL VARIABLES FOR SCRIPT>#
/usr/bin/echo "# $date START EXECUTE VM_MANAGER.SH #"

# Succeeds if something listens on local TCP port $1
port_listening() {
    /usr/bin/netstat -lnt | /usr/bin/grep -q ":$1 "
}
# Succeeds if local TCP port $1 has an established connection
port_connected() {
    /usr/bin/netstat -nt | /usr/bin/grep ":$1 " | /usr/bin/grep -q "ESTABLISHED"
}

make_connect_to_vm() {
    /usr/bin/echo "READING CLEAR.LIST AND CHECK PORT STATE"
    #<NO PORTS IN CLEAR.LIST: CLEAN UP AND REFILL THE POOL>#
    if [ ! -s "$SRV_TMP_DIR/clear.list" ]
    then
        /usr/bin/echo "NO AVAILABLE PORTS FOUND IN CLEAR.LIST"
        /usr/bin/echo "Will try to make housekeeper, and create new vm"
        make_housekeeper
        return
    fi
    #<AT LEAST ONE PORT IN CLEAR.LIST>#
    /usr/bin/cat "$SRV_TMP_DIR/clear.list" | while read -r clear_vm_port
    do
        /usr/bin/echo "FOUND PORT $clear_vm_port IN CLEAR.LIST. CHECK PORT STATE"
        if ! port_listening "$clear_vm_port"
        then
            #<PORT NOT LISTENING: MOVE FROM CLEAR.LIST TO WASTE.LIST>#
            /usr/bin/echo "$clear_vm_port IS NOT LISTENING. REMOVE PORT FROM CLEAR.LIST"
            /usr/bin/sed -i "/^$clear_vm_port$/d" "$SRV_TMP_DIR/clear.list"
            /usr/bin/echo "$clear_vm_port" >> "$SRV_TMP_DIR/waste.list"
            make_housekeeper
        elif port_connected "$clear_vm_port"
        then
            #<LISTENING AND ALREADY CONNECTED (MUST NOT HAPPEN): TO WASTE.LIST>#
            /usr/bin/echo "$clear_vm_port IS ALREADY CONNECTED, MOVE PORT TO WASTE.LIST"
            /usr/bin/sed -i "/^$clear_vm_port$/d" "$SRV_TMP_DIR/clear.list"
            /usr/bin/echo "$clear_vm_port" >> "$SRV_TMP_DIR/waste.list"
        else
            #<LISTENING AND FREE: TO CONN_WAIT.LIST, HAND THE VM TO THE CLIENT>#
            /usr/bin/echo "OK, $clear_vm_port IS NOT CONNECTED YET"
            /usr/bin/sed -i "/^$clear_vm_port$/d" "$SRV_TMP_DIR/clear.list"
            /usr/bin/echo "$clear_vm_port" >> "$SRV_TMP_DIR/conn_wait.list"
            "$SRV_SCRIPTS_DIR/vm_connect.sh" "$clear_vm_port"
            #<CLEAN VMS IN WASTE.LIST AND CREATE NEW ONES>#
            /usr/bin/echo "TRY TO CLEAN VM IN WASTE.LIST AND CREATE NEW VM"
            make_housekeeper
            /usr/bin/echo "# $date STOP EXECUTE VM_MANAGER.SH #"
            exit
        fi
    done
}

make_housekeeper() {
    /usr/bin/echo "=Execute housekeeper="
    /usr/bin/cat "$SRV_TMP_DIR/waste.list" | while read -r line
    do
        /usr/bin/echo "$line"
        if port_listening "$line" && port_connected "$line"
        then
            # VM is running and still in use: leave it alone
            /usr/bin/echo "port $line in use, can't delete vm!!!"
        else
            # Nobody connected, or the VM is already off: recycle and delete it
            /usr/bin/echo "port $line not in use or vm is off. Deleting vm"
            /usr/bin/sed -i "/^$line$/d" "$SRV_TMP_DIR/waste.list"
            /usr/bin/echo "$line" >> "$SRV_TMP_DIR/recycle.list"
            "$SRV_SCRIPTS_DIR/vm_delete.sh" "$line"
        fi
    done
    create_clear_vm
}

create_clear_vm() {
    /usr/bin/echo "=Create new VM="
    pool_left=$SRV_POOL_SIZE
    while [ "$pool_left" -gt 0 ]
    do
        new_vm_port=$((SRV_START_PORT_POOL + pool_left))
        /usr/bin/echo "new_vm_port=$new_vm_port"
        # Skip ports already tracked in any state list
        if /usr/bin/cat "$SRV_TMP_DIR/clear.list" "$SRV_TMP_DIR/waste.list" \
            "$SRV_TMP_DIR/recycle.list" "$SRV_TMP_DIR/conn_wait.list" \
            2> /dev/null | /usr/bin/grep -qx "$new_vm_port"
        then
            /usr/bin/echo "$new_vm_port PORT IS ALREADY DEFINED IN ONE OF THE LISTS"
        else
            /usr/bin/echo "PORT IS NOT DEFINED IN ANY LIST, WILL CREATE VM ON PORT $new_vm_port"
            /usr/bin/echo "$new_vm_port" >> "$SRV_TMP_DIR/recycle.list"
            "$SRV_SCRIPTS_DIR/vm_create.sh" "$new_vm_port"
        fi
        pool_left=$((pool_left - 1))
    done
    /usr/bin/echo "# $date STOP EXECUTE VM_MANAGER.SH #"
}

make_connect_to_vm | /usr/bin/tee -a /var/log/vm_manager.log
```

/etc/vm_manager.conf

```
srv_scripts_dir=/home/admin/scripts_vdi_new
srv_pool_size=4
srv_start_port_pool=5920
srv_tmp_dir=/tmp/vm_state
base_host=win10_2
input_iface=enp5s0
vdi_spice_port=5906
count_conn_tryes=10
```


Description of the variables of the configuration file vm_manager.conf

srv_scripts_dir - folder containing the scripts vm_manager.sh, vm_connect.sh, vm_delete.sh, vm_create.sh, vm_clear.sh

srv_pool_size - size of the Vm pool

srv_start_port_pool - the base port; the Spice console ports of the pool VMs are numbered from srv_start_port_pool+1 to srv_start_port_pool+srv_pool_size

srv_tmp_dir - folder for temporary files (the state lists and generated VM configs)

base_host - the base Vm (golden image) from which the pool's Vm clones are made

input_iface - the server network interface facing the Client Stations

vdi_spice_port - the server network port from which the remote-viewer client's connection is redirected to the Spice port of the selected Vm

count_conn_tryes - the number of one-second attempts to wait for a client connection to the Vm, after which the connection is considered to have failed (for details, see vm_connect.sh)

The vm_manager.sh script reads its settings from vm_manager.conf and assesses the state of the VM pool: how many VMs are deployed and whether any clean VMs are free. To do this, it reads clear.list, which contains the "spice_console" port numbers of freshly created virtual machines (see the VM creation cycle below), and checks whether any of them has an established connection. If it finds a port with an established connection (which should never happen), it prints a warning and moves the port to waste.list. When it finds the first port in clear.list with no current connection, vm_manager.sh calls vm_connect.sh and passes it this port number.
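In effect, each VM's state is the name of the list file its port currently sits in (clear, conn_wait, waste, recycle), and every transition is "delete the line from one file, append it to another". A hypothetical helper that captures one such move is sketched below; unlike the `sed -i "/$port/d"` used in the original scripts, it matches the whole line exactly, so port 5921 could never remove a line like 59210:

```shell
#!/bin/sh
# Hypothetical helper: move PORT from list FROM to list TO inside DIR,
# e.g. move_port 5921 clear conn_wait /tmp/vm_state
move_port() {
    port=$1
    from=$2
    to=$3
    dir=$4
    # Keep every line of the source list except the exact port number
    grep -vx "$port" "$dir/$from.list" > "$dir/$from.list.tmp"
    mv "$dir/$from.list.tmp" "$dir/$from.list"
    # Append the port to the destination list
    printf '%s\n' "$port" >> "$dir/$to.list"
}
```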

/home/admin/scripts_vdi_new/vm_connect.sh

```sh
#!/bin/sh
# Attention! Must be set, otherwise DNAT to 127.0.0.1 does not work:
#   sysctl net.ipv4.conf.all.route_localnet=1
date=$(/usr/bin/date)
/usr/bin/echo "# $date START EXECUTE VM_CONNECT.SH #"
#<SET LOCAL VARIABLES FOR SCRIPT>#
free_port="$1"
conf_get() {
    /usr/bin/grep "^$1" /etc/vm_manager.conf | /usr/bin/cut -d "=" -f2
}
input_iface=$(conf_get input_iface)
vdi_spice_port=$(conf_get vdi_spice_port)
count_conn_tryes=$(conf_get count_conn_tryes)
/usr/bin/echo "input_iface=$input_iface vdi_spice_port=$vdi_spice_port count_conn_tryes=$count_conn_tryes"
#</SET LOCAL VARIABLES FOR SCRIPT>#

# Insert (-I) or delete (-D) the rules that redirect vdi_spice_port on
# input_iface to the VM's Spice console on 127.0.0.1:$free_port
spice_rules() {
    /usr/sbin/iptables "$1" INPUT -i "$input_iface" -p tcp -m tcp \
        --dport "$free_port" -j ACCEPT
    /usr/sbin/iptables "$1" OUTPUT -o "$input_iface" -p tcp -m tcp \
        --sport "$free_port" -j ACCEPT
    /usr/sbin/iptables -t nat "$1" PREROUTING -p tcp -i "$input_iface" \
        --dport "$vdi_spice_port" -j DNAT --to-destination "127.0.0.1:$free_port"
}

#<CREATE IPTABLES RULES AND SEND SIGNAL TO CONNECT>#
/usr/bin/echo "create rule for port $free_port"
spice_rules -I
/usr/bin/echo "RULE ADDED, CONNECT NOW!"

#<WAIT FOR THE CONNECTION, ONE ATTEMPT PER SECOND>#
while [ "$count_conn_tryes" -gt 0 ]
do
    if /usr/bin/netstat -nt | /usr/bin/grep ":$free_port " \
        | /usr/bin/grep -q "ESTABLISHED"
    then
        /usr/bin/echo "$free_port NOW in use!!!"
        /usr/bin/sleep 1s
        # The established session survives via connection tracking;
        # remove the redirect so the next client cannot reach this VM
        spice_rules -D
        /usr/bin/sed -i "/^$free_port$/d" "$SRV_TMP_DIR/conn_wait.list"
        /usr/bin/echo "$free_port" >> "$SRV_TMP_DIR/waste.list"
        # exit, not return: this is a script, not a function
        exit 0
    fi
    /usr/bin/echo "$free_port NOT IN USE"
    /usr/bin/echo "RULE ADDED, CONNECT NOW!"
    /usr/bin/sleep 1s
    count_conn_tryes=$((count_conn_tryes - 1))
done

#<TIMEOUT: REMOVE IPTABLES RULES AND RETURN THE VM TO CLEAR.LIST>#
/usr/bin/echo "REVERT IPTABLES RULES AND RETURN PORT $free_port TO CLEAR.LIST"
spice_rules -D
/usr/bin/sed -i "/^$free_port$/d" "$SRV_TMP_DIR/conn_wait.list"
/usr/bin/echo "$free_port" >> "$SRV_TMP_DIR/clear.list"
/usr/bin/echo "# $date END EXECUTE VM_CONNECT.SH #"
```

The vm_connect.sh script adds firewall rules that redirect the server's "vdi_spice_port" on the enp5s0 interface to the VM's "spice console port" on the server's lo0 interface, whose number is passed as the argument. The port is moved to conn_wait.list, and the VM is considered to be awaiting a connection. The line "RULE ADDED, CONNECT NOW!" is sent to the Client Station's session on the server's "signal" port, where the remote.sh script is waiting for it. A connection-wait loop then starts, with the number of attempts set by the "count_conn_tryes" variable in the configuration file. Every second, the string "RULE ADDED, CONNECT NOW!" is sent again over the nc session, and the script checks whether a connection to the "spice_console" port has been established.
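To see exactly which rules vm_connect.sh inserts for one VM without touching the firewall, here is a dry-run sketch. The function name is hypothetical, and the arguments in the usage line are the example values from vm_manager.conf above. It prints the three iptables commands: two ACCEPT rules for the VM's console port, and a DNAT rule that redirects the shared Spice port to the loopback address:

```shell
#!/bin/sh
# Hypothetical dry-run helper: print (do not apply) the rules that redirect
# SPICE_PORT on IFACE to the VM console on 127.0.0.1:VM_PORT
print_spice_redirect() {
    iface=$1
    spice_port=$2
    vm_port=$3
    echo "iptables -I INPUT -i $iface -p tcp -m tcp --dport $vm_port -j ACCEPT"
    echo "iptables -I OUTPUT -o $iface -p tcp -m tcp --sport $vm_port -j ACCEPT"
    echo "iptables -t nat -I PREROUTING -p tcp -i $iface --dport $spice_port -j DNAT --to-destination 127.0.0.1:$vm_port"
}
```

Usage: `print_spice_redirect enp5s0 5906 5921`. DNAT to 127.0.0.1 only works with `net.ipv4.conf.all.route_localnet=1`, as the note in vm_connect.sh says. Only the first packet of a connection goes through the nat table, so deleting the DNAT rule afterwards does not break a session that is already established.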

If no connection is established within the set number of attempts, the redirect rules are removed and the spice_console port is moved back to clear.list. vm_connect.sh exits, and vm_manager.sh resumes and starts the cleanup cycle.

If the Client Station connects to the spice_console port on the lo0 interface, the firewall rules that redirect the Spice server port to the spice_console port are deleted, and the established connection is kept alive by the firewall's connection tracking (stateful filtering). If the connection drops, reconnecting to the spice_console port will fail. The spice_console port is moved to waste.list: the VM is considered "dirty" and cannot return to the pool of "clean" virtual machines without going through cleanup. vm_connect.sh exits, and vm_manager.sh resumes and starts the cleanup cycle.

The cleanup cycle starts by reading waste.list, which holds the spice_console port numbers of virtual machines that have already been connected to. For each port in the list, the script checks for an active connection. If the port is listening and has an active connection, the VM is considered to be in use and is left alone. If the port is listening but has no connection, the VM is assumed to be no longer in use; if the port is not listening at all, the VM is assumed to be powered off and no longer needed. In both of these cases the port is moved to recycle.list and the VM is deleted: vm_delete.sh is called with the spice_console port number of the VM to delete as its argument.
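The housekeeper's per-port decision can be written as a small pure function, which makes the rule easy to check: a VM survives only if its console port is both listening and connected. The sketch below uses hypothetical names. It takes the two netstat results as 1/0 flags instead of calling netstat itself, so it can be tested anywhere:

```shell
#!/bin/sh
# Hypothetical sketch of the cleanup rule for one port from waste.list.
# $1 = 1 if the spice_console port is listening, $2 = 1 if it has an
# ESTABLISHED connection. Prints "keep" or "delete".
housekeeper_action() {
    listening=$1
    connected=$2
    if [ "$listening" = 1 ] && [ "$connected" = 1 ]
    then
        echo keep      # VM running and in use
    else
        echo delete    # idle (listening, no client) or already powered off
    fi
}
```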

/home/admin/scripts_vdi_new/vm_delete.sh

```sh
#!/bin/sh
#<SET LOCAL VARIABLES>#
port_to_delete="$1"
date=$(/usr/bin/date)
#</SET LOCAL VARIABLES>#
/usr/bin/echo "# $date START EXECUTE VM_DELETE.SH #"
/usr/bin/echo "TRY DELETE VM ON PORT: $port_to_delete"
#<VM NAME SETUP: <base_host>-<port>>#
vm_name_part1=$(/usr/bin/grep "^base_host" /etc/vm_manager.conf | /usr/bin/cut -d "=" -f2)
vm_name="$vm_name_part1-$port_to_delete"
#</VM NAME SETUP>#
#<SHUTDOWN AND DELETE VM>#
/usr/bin/virsh destroy "$vm_name"      # forced power-off
/usr/bin/virsh undefine "$vm_name"     # remove the VM definition from libvirt
/usr/bin/rm -f "/var/lib/libvirt/images_write/$vm_name.qcow2"   # linked-clone disk
/usr/bin/sed -i "/^$port_to_delete$/d" "$SRV_TMP_DIR/recycle.list"
#</SHUTDOWN AND DELETE VM>#
/usr/bin/echo "VM ON PORT $port_to_delete HAS BEEN DELETED AND REMOVED FROM RECYCLE.LIST"
/usr/bin/echo "# $date STOP EXECUTE VM_DELETE.SH #"
exit
```

Deleting a virtual machine is a fairly trivial operation. The vm_delete.sh script derives the name of the VM that owns the port passed as its argument, forcibly stops the VM, removes it from the hypervisor, and deletes its virtual hard disk. The spice_console port is removed from recycle.list. vm_delete.sh exits, and vm_manager.sh resumes.
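The whole deletion hinges on the naming convention `<base_host>-<port>` used by vm_create.sh and vm_delete.sh. A dry-run sketch with a hypothetical function name, which prints the commands vm_delete.sh would run for a given port, using the image directory from the scripts above:

```shell
#!/bin/sh
# Hypothetical dry-run helper: print the commands vm_delete.sh runs for one
# pool port, following the naming convention <base_host>-<port>
print_vm_delete_cmds() {
    base_host=$1
    port=$2
    vm_name="$base_host-$port"
    echo "virsh destroy $vm_name"
    echo "virsh undefine $vm_name"
    echo "rm -f /var/lib/libvirt/images_write/$vm_name.qcow2"
}
```

Usage: `print_vm_delete_cmds win10_2 5921` shows what would happen to the clone on port 5921 before you run anything for real.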

After it finishes deleting unneeded virtual machines from waste.list, vm_manager.sh starts the cycle that creates virtual machines in the pool.

The cycle starts by determining which spice_console ports are available. Two configuration parameters drive this: "srv_start_port_pool", which sets the base port for the pool's "spice_console" ports, and "srv_pool_size", which limits the number of virtual machines. Based on them, all candidate ports are enumerated in turn. Each port is looked up in clear.list, waste.list, conn_wait.list, and recycle.list. If it appears in any of these files, it is considered taken and skipped. If it appears in none of them, it is added to recycle.list and a new virtual machine is created: vm_create.sh is called with the "spice_console" port number for the new VM as its argument.
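The free-port search can be isolated into a function that only prints the ports a new VM could take. Like create_clear_vm, this hypothetical sketch walks from start+size down to start+1 and skips any port listed in one of the four state files (missing files count as empty):

```shell
#!/bin/sh
# Hypothetical sketch: print pool ports (START+SIZE down to START+1) that
# appear in none of the state lists kept in DIR
free_pool_ports() {
    start=$1
    size=$2
    dir=$3
    while [ "$size" -gt 0 ]
    do
        port=$((start + size))
        if ! cat "$dir/clear.list" "$dir/waste.list" "$dir/recycle.list" \
                "$dir/conn_wait.list" 2> /dev/null | grep -qx "$port"
        then
            echo "$port"
        fi
        size=$((size - 1))
    done
}
```

With the configuration above (`srv_start_port_pool=5920`, `srv_pool_size=4`), the pool ports are 5921 to 5924.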

/home/admin/scripts_vdi_new/vm_create.sh

```sh
#!/bin/sh
new_vm_port=$1
date=$(/usr/bin/date)
/usr/bin/echo "# $date START RUNNING VM_CREATE.SH #"
a=0
/usr/bin/echo "SRV_TMP_DIR=$SRV_TMP_DIR"
#<SET LOCAL VARIABLES FOR SCRIPT>#
base_host=$(/usr/bin/grep "^base_host" /etc/vm_manager.conf | /usr/bin/cut -d "=" -f2)
/usr/bin/echo "base_host=$base_host"
#</SET LOCAL VARIABLES FOR SCRIPT>#

hdd_image_locate() {
    /usr/bin/echo "Run STEP 1 - hdd_image_locate"
    # First qcow2 disk of the golden image
    hdd_base_image=$(/usr/bin/virsh dumpxml "$base_host" \
        | /usr/bin/grep "source file" | /usr/bin/grep "qcow2" \
        | /usr/bin/head -n 1 | /usr/bin/cut -d "'" -f2)
    if [ -z "$hdd_base_image" ]
    then
        /usr/bin/echo "base hdd image not found!"
        exit 1
    fi
    /usr/bin/echo "hdd_base_image found: $hdd_base_image"
    #<THE BASE IMAGE MUST HAVE NO INTERNAL SNAPSHOTS>#
    if /usr/bin/qemu-img info "$hdd_base_image" | /usr/bin/grep -q "Snapshot"
    then
        /usr/bin/echo "base hdd image has a snapshot, can't use this image"
        exit 1
    fi
    #<THE BASE IMAGE MUST NOT ITSELF BE A LINKED CLONE>#
    if /usr/bin/qemu-img info "$hdd_base_image" | /usr/bin/grep -q "backing file"
    then
        /usr/bin/echo "base hdd image is a linked clone, can't use this image"
        exit 1
    fi
    /usr/bin/echo "Base image check complete!"
    cloning
}

cloning() {
    # Make sure the golden image is not running
    /usr/bin/virsh shutdown "$base_host" > /dev/null 2>&1
    /usr/bin/echo "Free port for Spice VM is $new_vm_port"
    new_vm_name="$base_host-$new_vm_port"
    xml="$SRV_TMP_DIR/$new_vm_name.xml"
    # Start from the golden image's configuration
    /usr/bin/virsh dumpxml "$base_host" > "$xml"
    # New name
    /usr/bin/sed -i "s%<name>$base_host</name>%<name>$new_vm_name</name>%g" "$xml"
    # New UUID: replace the third group of the old UUID with the port number
    old_uuid=$(/usr/bin/grep "<uuid>" "$xml")
    /usr/bin/echo "old UUID $old_uuid"
    new_uuid_part1=$(/usr/bin/echo "$old_uuid" | /usr/bin/cut -d "-" -f 1,2)
    new_uuid_part2=$(/usr/bin/echo "$old_uuid" | /usr/bin/cut -d "-" -f 4,5)
    new_uuid="$new_uuid_part1-$new_vm_port-$new_uuid_part2"
    /usr/bin/echo "$new_uuid"
    /usr/bin/sed -i "s%$old_uuid%$new_uuid%g" "$xml"
    # Spice console on 127.0.0.1:$new_vm_port (fixed port, no autoport)
    old_spice_port=$(/usr/bin/grep "graphics type='spice' port=" "$xml")
    /usr/bin/echo "old spice port $old_spice_port"
    new_spice_port="<graphics type='spice' port='$new_vm_port' autoport='no' listen='127.0.0.1'>"
    /usr/bin/echo "$new_spice_port"
    /usr/bin/sed -i "s%$old_spice_port%$new_spice_port%g" "$xml"
    # New MAC: keep the KVM prefix 52:54:00 (unicast, locally administered)
    # and randomize the last three octets; replace only the address value
    mac_new=52:54:00$(/usr/bin/hexdump -n3 -e '/1 ":%02x"' /dev/urandom)
    /usr/bin/echo "New Mac is $mac_new"
    mac_old=$(/usr/bin/grep "mac address=" "$xml" | /usr/bin/cut -d "'" -f2)
    /usr/bin/echo "old mac is $mac_old"
    /usr/bin/sed -i "s%$mac_old%$mac_new%g" "$xml"
    # Linked-clone disk backed by the golden image
    # (-F sets the backing format; recent qemu-img releases require it)
    /usr/bin/qemu-img create -f qcow2 -F qcow2 -b "$hdd_base_image" \
        "/var/lib/libvirt/images_write/$new_vm_name.qcow2"
    /usr/bin/echo "hdd base image is $hdd_base_image"
    /usr/bin/sed -i "s%<source file='$hdd_base_image'/>%<source file='/var/lib/libvirt/images_write/$new_vm_name.qcow2'/>%g" "$xml"
    starting_vm
}

starting_vm() {
    /usr/bin/virsh define "$xml"
    /usr/bin/virsh start "$new_vm_name"
    # Wait until libvirt reports the VM as running, then publish its port
    while [ "$a" -ne 1 ]
    do
        if /usr/bin/virsh list --all | /usr/bin/grep "$new_vm_name" \
            | /usr/bin/grep -q "running"
        then
            a=1
            /usr/bin/sed -i "/^$new_vm_port$/d" "$SRV_TMP_DIR/recycle.list"
            /usr/bin/echo "$new_vm_port" >> "$SRV_TMP_DIR/clear.list"
            /usr/bin/echo "# $date VM $new_vm_name IS STARTED #"
        else
            /usr/bin/echo "#VM $new_vm_name is not ready#"
            /usr/bin/sleep 2s
        fi
    done
    /usr/bin/echo "# $date EXIT FROM VM_CREATE.SH #"
    exit
}

hdd_image_locate
```

The process of creating a new virtual machine

The vm_create.sh script reads the "base_host" variable from the configuration file; it names the template virtual machine to clone. The script dumps that VM's XML configuration from the hypervisor database and runs a series of checks on its qcow2 disk image (no internal snapshots, not itself a linked clone). If the checks pass, it creates an XML configuration for the new VM (new name, UUID, Spice port, and MAC address) and a "linked clone" disk image for it. The new VM's XML configuration is then loaded into the hypervisor database and the VM is started. The spice_console port is moved from recycle.list to clear.list. vm_create.sh exits, and control returns to vm_manager.sh, which finishes once every free pool port has been processed.
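The UUID trick in vm_create.sh is worth isolating. It keeps groups 1, 2, 4 and 5 of the golden image's UUID and replaces group 3 with the VM's Spice port, so every clone gets a distinct UUID. The function name below is hypothetical. It uses the same `cut` calls as the script, applied to a bare UUID rather than the whole `<uuid>` line. The result stays well-formed only while the port has exactly four decimal digits, which are also valid hex digits:

```shell
#!/bin/sh
# Hypothetical sketch of the clone-UUID derivation used by vm_create.sh:
# replace the third group of OLD_UUID with PORT (must be 4 digits)
clone_uuid() {
    old=$1
    port=$2
    part1=$(echo "$old" | cut -d "-" -f 1,2)
    part2=$(echo "$old" | cut -d "-" -f 4,5)
    echo "$part1-$port-$part2"
}
```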

On the next client connection, the whole cycle starts again.

For emergencies, the kit includes the vm_clear.sh script, which forcibly goes through all VMs in the pool, deletes them, and empties the state lists. Running it at boot lets you start the pseudo-VDI from scratch.

/home/admin/scripts_vdi_new/vm_clear.sh

```sh
#!/usr/bin/sh
#<SET VARIABLES>#
conf_get() {
    /usr/bin/grep "^$1" /etc/vm_manager.conf | /usr/bin/cut -d "=" -f2
}
SRV_SCRIPTS_DIR=$(conf_get srv_scripts_dir)
SRV_TMP_DIR=$(conf_get srv_tmp_dir)
SRV_POOL_SIZE=$(conf_get srv_pool_size)
SRV_START_PORT_POOL=$(conf_get srv_start_port_pool)
/usr/bin/echo "SRV_SCRIPTS_DIR=$SRV_SCRIPTS_DIR SRV_TMP_DIR=$SRV_TMP_DIR"
/usr/bin/echo "SRV_POOL_SIZE=$SRV_POOL_SIZE SRV_START_PORT_POOL=$SRV_START_PORT_POOL"
# vm_delete.sh reads SRV_TMP_DIR from the environment
export SRV_SCRIPTS_DIR SRV_TMP_DIR
#</SET VARIABLES>#
/usr/bin/echo "= Cleanup ALL VM ="
/usr/bin/mkdir -p "$SRV_TMP_DIR"
# Reload the saved firewall rules, dropping any leftover Spice redirects
/usr/sbin/service iptables restart
# Empty all state lists
for list in clear waste recycle conn_wait
do
    : > "$SRV_TMP_DIR/$list.list"
done
# Delete every VM in the pool: ports START+SIZE down to START+1
port_to_delete=$((SRV_START_PORT_POOL + SRV_POOL_SIZE))
while [ "$port_to_delete" -gt "$SRV_START_PORT_POOL" ]
do
    "$SRV_SCRIPTS_DIR/vm_delete.sh" "$port_to_delete"
    port_to_delete=$((port_to_delete - 1))
done
/usr/bin/echo "= EXIT FROM VM_CLEAR.SH ="
```

That concludes the first part of my story. The above should be enough for system administrators to try the pseudo-VDI in practice. If the community finds the topic interesting, in the second part I will describe how to modify a Fedora live CD and turn it into a kiosk.
