Google's AI created a "child" AI that outperforms every AI built by humans

In May 2017, researchers from Google Brain unveiled AutoML, a project that automates the design of machine learning models. Experiments showed that AutoML could generate small neural networks that performed very well, on par with networks designed and trained by human experts. At first, however, AutoML was limited to small academic datasets such as CIFAR-10 and Penn Treebank.

Google engineers then wondered: what if the AI generator were given a more serious task? Could this AI system generate another AI that beats human-designed AI at an important problem, such as classifying objects in ImageNet, the best-known large-scale dataset in computer vision? The result was NASNet, a neural network created almost without human intervention.

As it turned out, AI can design and train neural networks as well as humans can. The work on classifying objects in the ImageNet dataset and detecting objects in the COCO dataset was carried out as part of the project Learning Transferable Architectures for Scalable Image Recognition.

The AutoML developers say the task turned out to be non-trivial, because the new datasets are several orders of magnitude larger than the ones the system was used to working with. They had to change some of AutoML's algorithms, including redesigning the search space so that AutoML could find the best layer and then stack copies of it repeatedly to build the final neural network. In addition, the developers searched for architectures on CIFAR-10 and manually transferred the most successful one to the ImageNet and COCO tasks.
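The key idea in the redesigned search space is that AutoML searches for one repeatable layer (a "cell") instead of a whole network, and the final network is assembled by stacking copies of that cell. The minimal sketch below illustrates only that assembly step. The `build_network` helper, the cell labels, and the layout of three stacks separated by reduction cells are illustrative assumptions, not NASNet's published code.

```python
from typing import List

# Hypothetical labels for the two searched cell types (illustrative only).
NORMAL = "normal"
REDUCTION = "reduction"


def build_network(num_repeats: int, num_stacks: int = 3) -> List[str]:
    """Assemble a network by repeating one searched cell.

    Each stack holds `num_repeats` copies of the normal cell, and a
    reduction cell sits between consecutive stacks. This is a simplified
    layout for illustration, not the exact published NASNet topology.
    """
    if num_repeats < 1 or num_stacks < 1:
        raise ValueError("num_repeats and num_stacks must be positive")
    layers: List[str] = []
    for stack in range(num_stacks):
        if stack > 0:
            layers.append(REDUCTION)  # shrink the feature map between stacks
        layers.extend([NORMAL] * num_repeats)
    return layers


# The same searched cell can be reused at different depths:
small = build_network(num_repeats=2)  # e.g. a shallow network for a small dataset
large = build_network(num_repeats=6)  # e.g. a deeper network for a large dataset
print(len(small), len(large))  # 8 20
```

Because only the cell is searched, moving from a small dataset to a large one can be as simple as repeating the cell more times, which is what makes the transfer from CIFAR-10 to ImageNet practical.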

Thanks to these changes, AutoML was able to find the most effective layers, ones that worked well on CIFAR-10 and also performed well on the ImageNet and COCO tasks. The two discovered layers were combined into a novel architecture called NASNet.

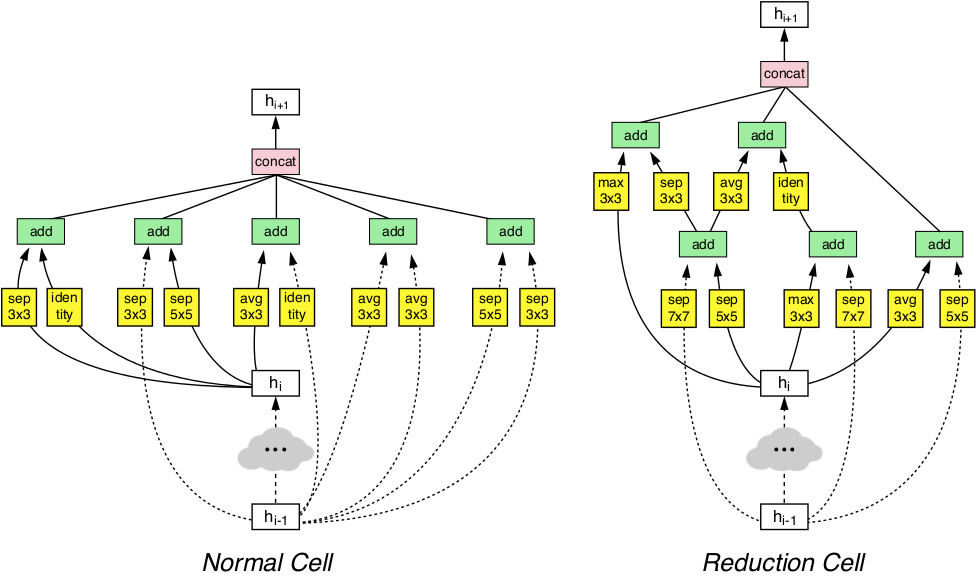

The NASNet architecture consists of two types of layers: a normal layer (left) and a reduction layer (right). Both layers were designed by AutoML.
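The two layer types differ mainly in what they do to the feature map. A normal layer keeps its spatial size, while a reduction layer downsamples it. The sketch below traces shapes through a sequence of layers under two stated assumptions: a reduction layer halves height and width (stride 2 with "same" padding) and doubles the channel count, following the common CNN convention of adding filters when resolution drops. The function names are hypothetical.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (height, width, channels)


def apply_cell(shape: Shape, cell: str) -> Shape:
    """Return the output shape of one cell (simplified model).

    A normal cell keeps the feature map's shape. A reduction cell is
    assumed to halve height and width and double the channel count.
    """
    h, w, c = shape
    if cell == "normal":
        return (h, w, c)
    if cell == "reduction":
        # Ceiling division mirrors a 'same'-padded stride-2 convolution.
        return ((h + 1) // 2, (w + 1) // 2, c * 2)
    raise ValueError(f"unknown cell type: {cell!r}")


def trace_shapes(input_shape: Shape, cells: List[str]) -> List[Shape]:
    """Return the shape after each cell in the sequence."""
    shapes: List[Shape] = []
    shape = input_shape
    for cell in cells:
        shape = apply_cell(shape, cell)
        shapes.append(shape)
    return shapes


cells = ["normal", "normal", "reduction", "normal", "normal", "reduction", "normal"]
print(trace_shapes((32, 32, 64), cells)[-1])  # (8, 8, 256)
```

Doubling the channels while halving height and width keeps the amount of information per layer roughly balanced, so the same two cell designs can be stacked to any depth.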

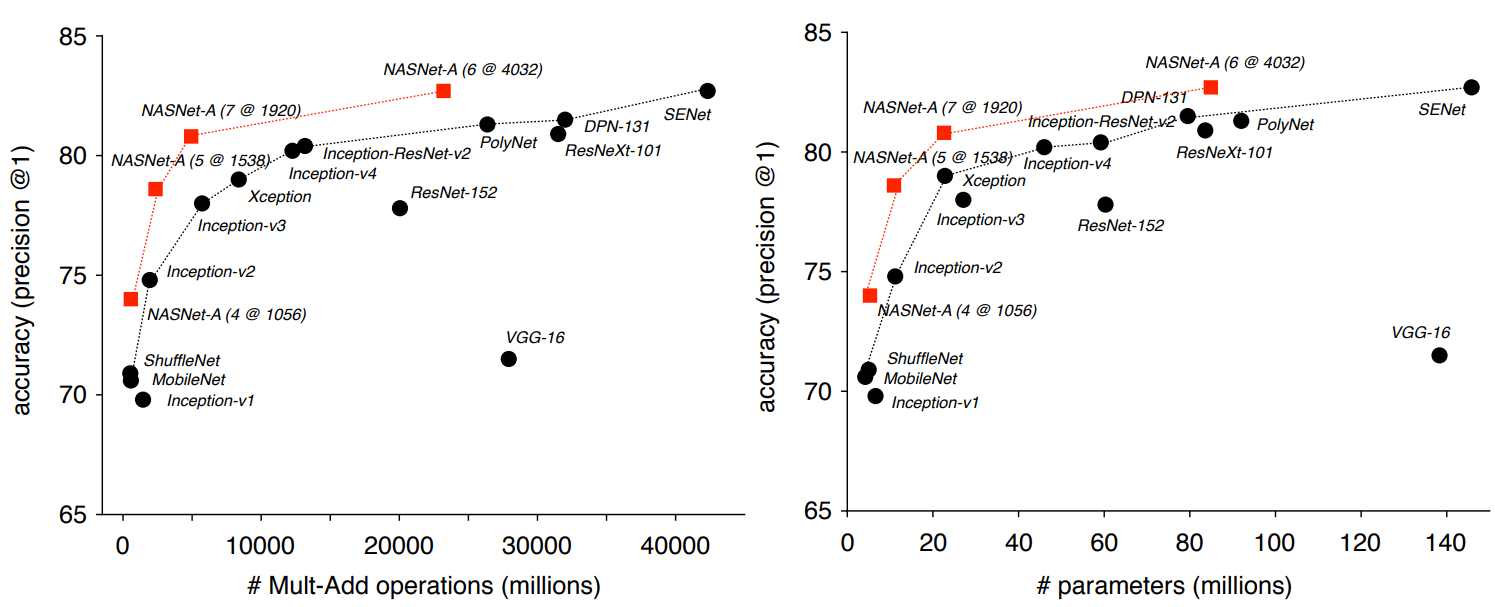

Benchmarks showed that the automatically generated AI outperforms all other computer vision systems designed and trained by human experts at object classification and detection.

On ImageNet classification, NASNet achieved a prediction accuracy of 82.7% on the validation set, higher than all previously designed Inception-family vision models. Its result was 1.2 percentage points better than all previously published results, and on par with the best result reported in preprints that had been posted on arXiv.org but not yet formally published.

The researchers emphasize that NASNet can be scaled down to run on systems with limited computational resources without much loss of accuracy. It can work even on a mobile phone with a modest CPU and limited memory. According to the authors, a small version of NASNet achieves 74% accuracy, which is 3.1 percentage points better than state-of-the-art models of equivalent size for mobile platforms.
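Why does a narrower network fit on a phone? The operations in NASNet's cells include depthwise-separable convolutions, whose weight count grows roughly with the square of the number of filters. The sketch below, assuming scaling is done only by multiplying the filter count of a single 3x3 separable layer (a simplification; the real models also change how many cells are stacked), shows how much a width cut saves. The helper names are hypothetical.

```python
def separable_conv_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise-separable convolution (biases ignored)."""
    depthwise = kernel * kernel * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out            # 1 x 1 convolution mixing channels
    return depthwise + pointwise


def width_scaling_ratio(kernel: int, filters: int, scale: float) -> float:
    """Parameter ratio after multiplying the filter count by `scale`."""
    scaled = max(1, round(filters * scale))
    return separable_conv_params(kernel, scaled, scaled) / separable_conv_params(
        kernel, filters, filters
    )


# Halving the width of a 3x3 separable layer with 256 filters:
print(separable_conv_params(3, 256, 256))          # 67840
print(round(width_scaling_ratio(3, 256, 0.5), 3))  # 0.258
```

Because the 1x1 pointwise term dominates, halving the width cuts this layer's weights (and roughly its compute) to about a quarter, which is the kind of saving that lets a small variant run on limited hardware.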

When the features learned by the ImageNet classifier were transferred to object detection and combined with the Faster R-CNN framework, the system achieved state-of-the-art results on COCO object detection, both for the large model and for the smaller mobile version. The large model reached 43.1% mAP, 4 percentage points better than the previous best published result.
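For context on the 43.1% figure: COCO's mAP matches predicted boxes to ground-truth boxes by intersection-over-union (IoU) and averages precision over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below shows only those two building blocks, assuming boxes given as `(x_min, y_min, x_max, y_max)`. It is not the full COCO evaluation (which also handles ranking, multiple detections, and per-class averaging); the function names are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# COCO averages precision over ten IoU thresholds: 0.50, 0.55, ..., 0.95.
COCO_IOU_THRESHOLDS: List[float] = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def matches_at(pred: Box, truth: Box) -> List[bool]:
    """Whether a prediction would count as a match at each COCO threshold."""
    overlap = iou(pred, truth)
    return [overlap >= t for t in COCO_IOU_THRESHOLDS]


pred, truth = (0, 0, 10, 10), (5, 0, 15, 10)
print(iou(pred, truth))  # 0.3333333333333333
```

Averaging over strict thresholds rewards detectors that localize objects tightly, not just approximately.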

The authors open-sourced NASNet in the TensorFlow Slim and Object Detection repositories so that anyone can try the new neural network.

The paper was posted on the arXiv.org preprint server on December 1, 2017 (arXiv:1707.07012v3, the third version).
