The brain-computer interface has ceased to be science fiction

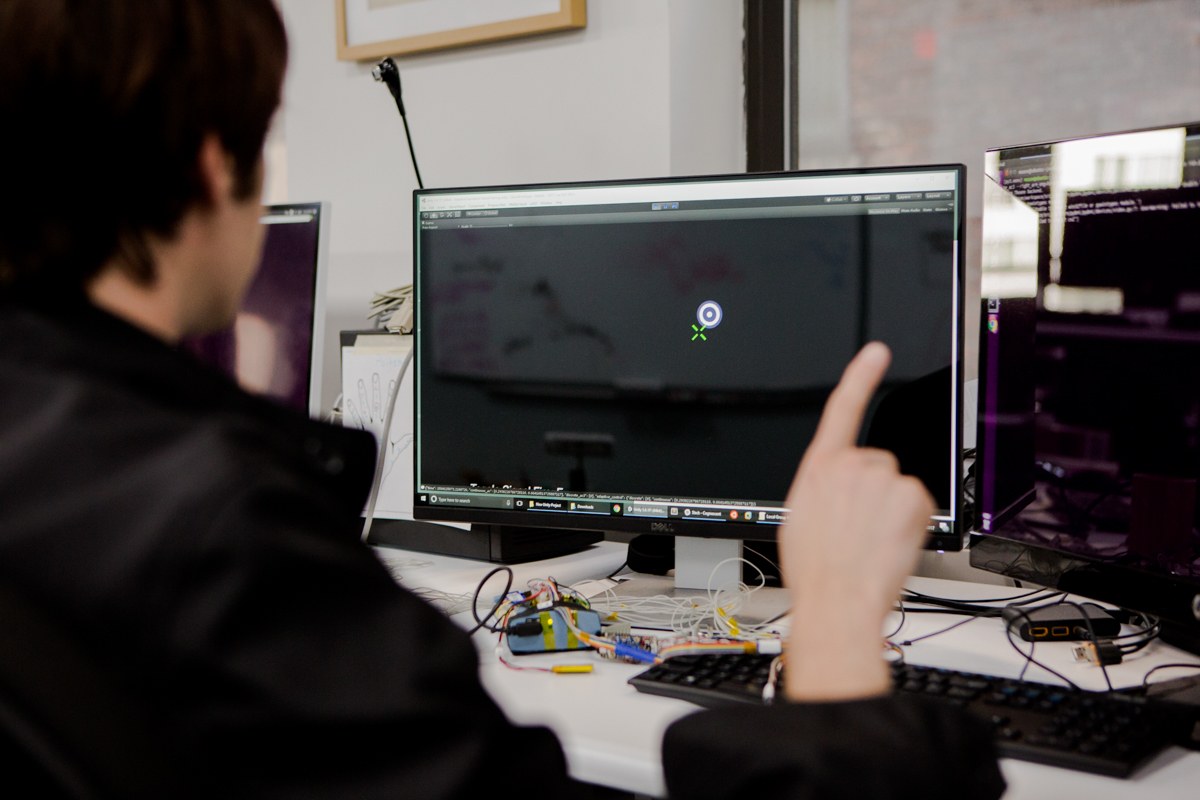

Thomas Reardon slips a terry-cloth elastic band onto each wrist. Microchips and electrodes are woven into the fabric, a sort of steampunk jewelry [I think the author meant cyberpunk - translator's note]. "This demo blows the roof off," says Reardon, who prefers to be addressed by his last name. He sits down at a keyboard, turns on the monitor, and starts typing. After a few lines of text, he pushes the keyboard away, exposing the white surface of the table at his startup's Manhattan headquarters. He keeps typing, only now on the bare tabletop. The result is the same: the words he enters appear on the monitor.

This is cool, of course, but what matters far more is how the trick works. The text on the screen is created not by his fingers but by the signals his brain sends to his fingers. The bands intercept those signals, interpret them correctly, and pass the input to the computer, just as a keyboard would. Whether Reardon's fingers actually drum on the table does not matter; whether he has hands at all does not matter. The communication takes place between the brain and the computer. Moreover, Reardon and his colleagues have found that the machine can pick up even subtler signals, such as a finger twitch, and does not require a full imitation of typing.

You can type a hundred words per minute on your smartphone with your hands in your pockets. Shortly before this demonstration, I watched Reardon's partner, Patrick Kaifosh, play Asteroids on his iPhone. He wore one of these mysterious bands between his wrist and elbow. On the screen, it was clear that a skilled player was at the controls: the tiny spacecraft deftly dodged the big rocks and turned to blast them into pixels. But the movements Kaifosh made to control the game were barely perceptible, just a slight twitching of the fingers of his hand, which lay on the table. It seemed that he was playing the game with his brain. And in a sense, he was.

2017 was the year the brain-machine interface (BMI) went public: a technology that tries to channel the mysterious contents of the 1.5-kilogram lump of mush inside our skulls to the machines that are becoming ever more central to our lives. The idea jumped from science fiction straight into venture-capital circles faster than a signal passes through a neuron. Facebook, Elon Musk, and other wealthy contenders such as Braintree founder Bryan Johnson have seriously discussed silicon implants that would not only merge us with computers but also raise our level of intelligence. But CTRL-Labs, which has not only tech-industry credentials but also an advisory board of stars from the world of neuroscience, skips the untangling of extremely complex intracranial connections and dispenses with the need to cut through the scalp and skull to insert a chip, which a BMI usually requires. Instead, it focuses on the rich set of signals that control movement and travel through the spinal cord, choosing an easier way to access the nervous system.

Thomas Reardon, co-founder and CEO of CTRL-Labs

Reardon and his colleagues at CTRL-Labs use these signals as a powerful API between the brain and any machine. By next year, they plan to turn the clumsy bands into something slimmer, more like a watch strap, so that early adopters can abandon their keyboards and the tiny buttons on their smartphone screens. The technology could also improve virtual reality, which currently frustrates users by asking them to press buttons on controllers they cannot see. There may be no better way to move around and control an alternative world than with a brain-controlled system.

Reardon, the 47-year-old head of CTRL-Labs, believes that the immediate practicality of his company's version of the brain-machine interface puts it a step ahead of its more science-fiction-minded rivals. “When I see these announcements of brain-scanning technologies, and the obsession with a neuroscience approach that denies the body, a sort of ‘brain in a jar,’ it always seems to me that these people miss the most important thing: how all new technologies become commercial, this hard pragmatism,” he says. “We are trying to enrich people's lives, to give them more control over what surrounds them, and over this stupid little device in your pocket, which right now is basically a read-only device with horrible ways of entering information.”

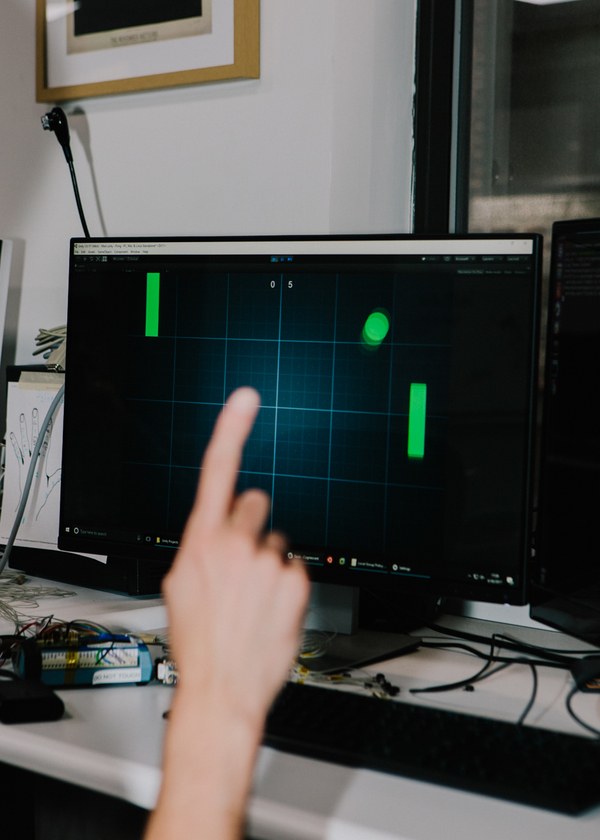

Mason Rimalee demonstrates control of the game with a bracelet

Reardon's goals are very ambitious. “I would like our devices, whether we or our partners make them, to be in the hands of millions of people in three to four years,” he says. But a better interface to the phone is just the beginning. CTRL-Labs hopes to pave the way to a future in which people can manage the many devices surrounding them with equal ease, using tools yet to be invented. It would be a world in which clear signals from the hands, the secret, silent speech of the mind, become the main way of communicating with the electronic sphere.

The initiative comes at an opportune moment, from a company ideally positioned to innovate. Its leader is a talented programmer with a strategic mindset who led major initiatives at large companies and then left them to become a neuroscientist. Reardon realizes that his whole past has, by chance, led him to an incredibly important opportunity, one perfectly suited to a person with his skills. And he is determined not to miss it.

Reardon grew up in New Hampshire, one of 18 children in a working-class family. He broke away from the pack at the age of 11, when he learned to program at a local training center sponsored by the tech giant Digital Equipment Corporation. “We were called ‘gweeps,’ little hackers,” he says [gweep was the name for the first hackers of early microcomputers - translator's note]. He took several courses at MIT and, at the age of 15, enrolled at the University of New Hampshire. He cut a sorry figure there: a callow youth, an outsider, and poor. He lasted less than a year. “I was turning 16, and I realized that I needed to look for a job,” he says. He ended up in Chapel Hill, North Carolina, and first worked in the X-ray laboratory at Duke University, setting up the university's computer system to work with the Internet. Soon he founded his own networking company, which built utilities for Novell, then a dominant force in networking. Reardon eventually sold the company and in the process met the venture capitalist Ann Winblad, who introduced him to Microsoft.

Reardon's first job there was managing a small team that cloned Novell's key software in order to integrate it into Windows. He was still a teenager and was not used to managing people, and some of his subordinates called him Doogie Howser. And yet he stood out from the crowd. “You meet a lot of smart people at Microsoft, but Reardon could floor you,” says Brad Silverberg, then the head of the Windows project and now a venture investor (he has invested in CTRL-Labs). In 1993, Reardon's life changed when he saw the first web browser. He started the project that became Internet Explorer, which was rushed into Windows 95 as the competition heated up. For a time, it was the most popular browser in the world.

A few years later, Reardon left the company, disillusioned with the bureaucracy and worn down by the antitrust litigation over the browser he had helped create. Reardon and a few members of his team launched a startup focused on wireless Internet access. “We got the timing wrong, but we had the right idea,” he says. And then Reardon made an unexpected move: he left the industry and became a student at Columbia University, to pursue a degree in classics. The inspiration came from a casual conversation in 2005 with the famous Freeman Dyson, who mentioned that he had read a great deal of literature in Latin and Greek. “Probably the greatest living physicist told me: don't do science, read Tacitus,” says Reardon. “That's exactly what I did.” At the age of 30.

Thomas Reardon communicates with subordinates

In 2008, Reardon received his degree, with honors, but even before graduating he had begun attending neuroscience courses and had fallen in love with lab work. “It reminded me of programming, of making something with your own hands, trying something, seeing how it works, and then looking for the bugs,” he says. He decided to pursue it seriously and build up a résumé for graduate school. He transferred to Columbia, where he worked under the famous neuroscientist Thomas Jessell (who now advises CTRL-Labs along with other stars such as Krishna Shenoy of Stanford).

According to its website, Jessell's laboratory “studies the systems and circuits that control movement,” which it calls the “root of all behavior.” This reflects where Columbia stands in the divide within neuroscience between those who study what happens inside the brain itself and those who study its output. Although the work of people trying to unravel the mysteries of the brain by studying its matter carries a certain glamour, those in the second camp quietly maintain that what the brain makes us do is its main function. The neuroscientist Daniel Wolpert once summed up this worldview: “We have a brain for one reason only: to produce adaptable and complex movements. There is no other reason to have a brain. Movement is the only way you have of affecting the world around you.”

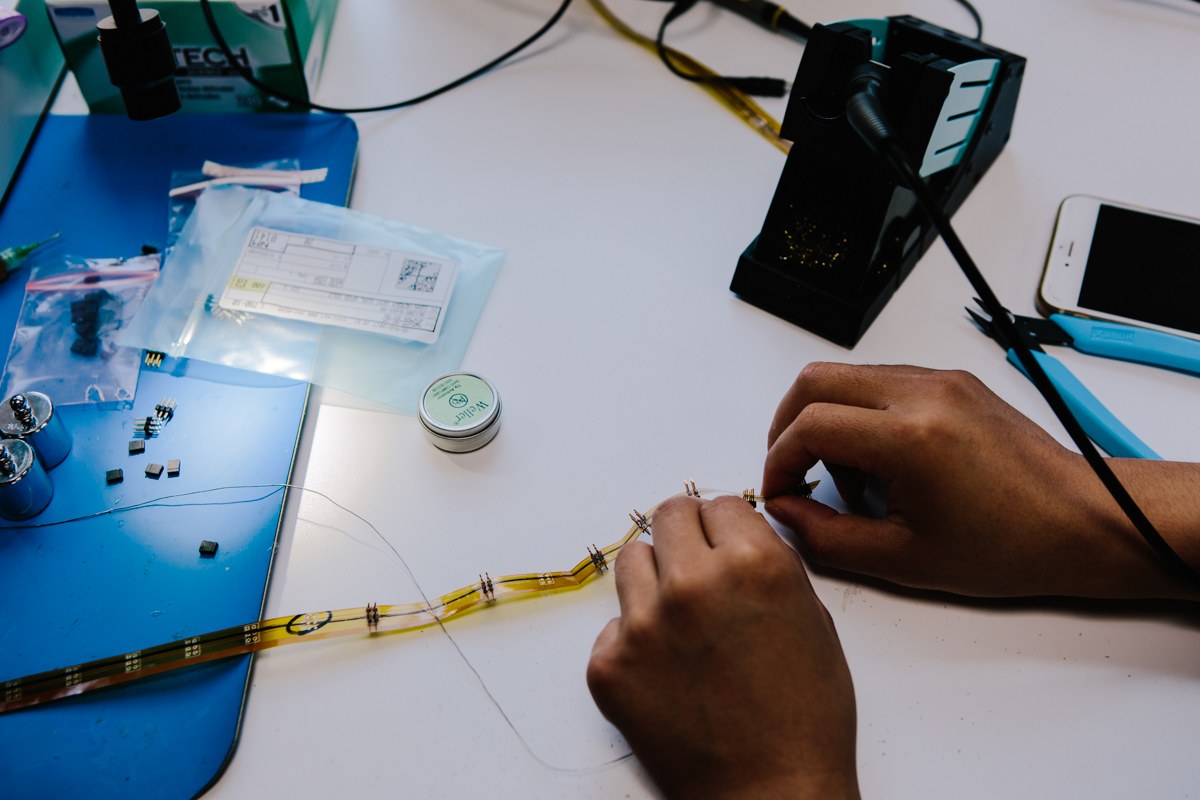

This approach helped shape CTRL-Labs, which was born in 2015 when Reardon brainstormed with two of his lab colleagues. His co-founders were Kaifosh and Tim Machado, who received their doctorates a little earlier than Reardon. They set about building a company. During his studies, Reardon had grown increasingly interested in the network architecture that makes “voluntary movements” possible: actions that do not seem complicated but in reality require precision, timing, and unconsciously acquired experience. “Things like picking up the cup of coffee in front of you, bringing it to your lips, and not smashing it into your face with full force,” he explains. It is incredibly difficult to work out exactly which neurons in the brain issue the commands to the body that make these movements possible. The only practical way to access this activity is to drill a hole in the skull, stick an implant in the brain, and then painstakingly try to understand which neurons are firing. “You can extract some data, but it takes a year for a person to train one of these neurons to, say, control a prosthesis,” says Reardon.

Patrick Kaifosh, Chief Science Officer and co-founder of CTRL-Labs

But an experiment by Machado opened up new possibilities. Machado, like Reardon, was very interested in how the brain controls movement, but he never thought that a brain-machine interface should be built by implanting electrodes in the brain. “I never imagined that people would do this just so they could send text messages to each other,” Machado says. Instead, he studied how the motor neurons that extend through the spinal cord to the muscles of the body might be used for this purpose. He set up an experiment in which he removed the spinal cords of mice and kept them active in order to measure what was happening in the motor neurons. It turned out that the signals were surprisingly organized and coherent. “It was possible to make sense of their activity,” says Machado. The two young neuroscientists and the somewhat older programmer turned neuroscientist saw another way to build a brain-machine interface. “If you work with signals, you can get something out of this,” Reardon says, recalling his reaction.

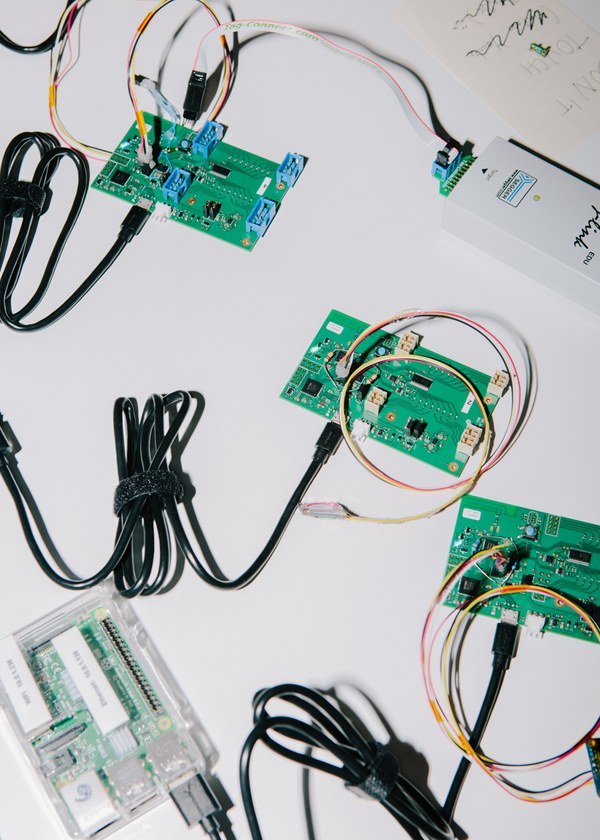

It was logical to capture these signals at the arms, because the human brain is largely tuned to working with the hands. CTRL-Labs was not the first to recognize the value of these signals: the standard test for diagnosing neuromuscular abnormalities uses electromyography (EMG) signals. In its first experiments, before it started building its own hardware, CTRL-Labs used standard medical instruments to capture EMG signals. The innovation lies in reading EMG more precisely than existing technologies do, including picking up signals from individual neurons, and, more importantly, in working out the relationship between electrical activity and the muscles, so that CTRL-Labs can turn EMG into instructions suitable for controlling computing devices.

Adam Berenzweig, formerly the technical director of the machine-learning company Clarifai and now a lead scientist at CTRL-Labs, believes that decoding these signals is comparable to recognizing a signal as complex as speech. (Another lead scientist, Steve Demers, a physicist working in computational chemistry, helped create the award-winning “bullet time” visual effect used in the film “The Matrix.”) “Speech evolved specifically to transfer information from one brain to another,” says Berenzweig. “These motor neural signals evolved specifically to carry data from the brain to the hand, to affect a changing world, but, unlike speech, until now we have had no access to these signals. It is as if we had no microphones and no ability to record and look at sound.”

Adam Berenzweig, lead scientist at CTRL-Labs

But capturing the signals is only the first step. Perhaps the hardest task is turning them into signals that a device can understand. This requires a combination of programming, machine learning, and neuroscience. In some cases, a person using the system for the first time will need to go through a short training period, during which the company's software works out how to map that person's individual signals to mouse clicks, keystrokes, button taps, and finger movements on a smartphone screen, on a computer, and on VR controllers. Surprisingly, for the simplest of the existing demos, this takes only a few minutes.
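The calibration step described above can be pictured with a small sketch. It is purely illustrative and is not CTRL-Labs' method, which the article does not describe: it assumes each gesture has already been reduced to a short vector of per-channel signal strengths, and it swaps in a simple nearest-centroid classifier. The names `calibrate` and `decode`, the three-channel vectors, and the input labels are all hypothetical.

```python
from collections import defaultdict
from math import dist


def calibrate(samples):
    """Average the feature vectors recorded for each intended input."""
    grouped = defaultdict(list)
    for features, label in samples:
        grouped[label].append(features)
    # One centroid per label: the column-wise mean of its recorded vectors.
    return {
        label: tuple(sum(column) / len(vectors) for column in zip(*vectors))
        for label, vectors in grouped.items()
    }


def decode(centroids, features):
    """Return the input whose calibrated centroid is closest to the sample."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical calibration data: (per-channel signal strength, intended input).
calibration = [
    ((0.9, 0.1, 0.1), "left_click"),
    ((0.8, 0.2, 0.0), "left_click"),
    ((0.1, 0.9, 0.2), "key_a"),
    ((0.2, 0.8, 0.1), "key_a"),
]
centroids = calibrate(calibration)
print(decode(centroids, (0.85, 0.15, 0.05)))
```

A few labeled repetitions per input are enough to build the centroids here, which loosely mirrors the short per-user training period; a real decoder would have to cope with noise, drift, and far richer signals.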

Training the system will be harder when people move from imitating traditional movements, such as entering text on a QWERTY layout, to changing how they perform existing tasks, such as typing with their hands in their pockets. That may be faster and more convenient, but it will require patience and effort. “This is one of the big, hard questions,” says Berenzweig. “Perhaps it will take several hours of training, but how long does it take people today to learn QWERTY? Years.” And he has ideas for speeding up the learning curve. One of them is gamification. Another is to invite people to imagine that they are learning a new language. “We can teach people to make phonetic sounds with their hands,” he says. “It will look like they are talking with their hands.”

It is the use of this new kind of brain command that will show whether CTRL-Labs becomes a company that makes better computer interfaces or the gateway to a new kind of symbiosis between humans and objects. One of CTRL-Labs' scientific advisers is John Krakauer, a professor of neurobiology and of physical medicine and rehabilitation at the Johns Hopkins University School of Medicine, where he runs the Brain, Learning, Animation, and Movement Lab. Krakauer told me that he is working with other teams at his institution on using the CTRL-Labs system to train people who use prostheses to replace lost limbs, in particular by creating a virtual limb that people would learn to control before receiving a limb transplant from a donor. “It is very interesting to me to use this device to help people get more pleasure from movement when they can no longer walk or play sports,” says Krakauer.

But Krakauer (himself something of a troublemaker in the world of neuroscience) also sees something bigger in the CTRL-Labs system. Although the human hand is an unusually good device, signals from the brain may be able to handle something far more complex. “We don't know whether the hand is the best device we can control with our brain, or whether our brain is much better than the hand,” he says. If the latter is true, EMG signals might be able to drive hands with more fingers. Perhaps we will control a variety of robotic devices with the same ease with which we play musical instruments with our own hands. “It's not a big exaggeration to say that if you can do something on a screen, you can do it with a robot,” says Krakauer. “Take any bodily abstraction you can think of and transfer it to something else instead of a hand. It could be an octopus, say.”

Perhaps we will be able to use prostheses that exceed the capabilities of the body parts we were born with.

, CTRL-Labs . . , . , Cognescent, , IT- Cognizant $40 .

, – , . , . « - , - », – , . « , ». , 23- - Pong, . , , Asteroids, . 100%, , . Fruit Ninja. « , , , . , », – , , .

“The technology we are working on is binary in terms of capability: it either works or it doesn't,” says Reardon. “Can you imagine a computer mouse that worked 90% of the time? You wouldn't use it. Today we have proof that it damn well works. What is surprising is that it works now, ahead of schedule.” According to co-founder Kaifosh, the next stage is using the technology inside the company itself. “We'll probably start by getting rid of the mice,” he says.

, . , , , . , . « , Google, Apple, Amazon, Microsoft Facebook, , – . – , ».

- . Thalmic Labs, $120 Amazon. , 2013 , , , . CTRL-Labs, , , , , «, , , , , Thalmic». $11 , CTRL-Labs, , Spark Capital, Matrix Partners, Breyer Capital, Glaser Investments Fuel Capital. , – , Facebook, . , .

