New AI Problems: Random Errors or Going Out of Control


There are many movies and science fiction books in which a computer system gains consciousness and deceives its human creators. Is this possible in reality? For now, there is little cause for concern.

We are impressed by the AlphaGo and Libratus programs and by the success of Boston Dynamics robots, but all of these achievements concern narrow domains and are still far from mass adoption. In everyday life, people interact with would-be intelligent assistants (Siri, “OK Google”, Amazon Alexa), none of which can claim to be a truly “smart” program.

However, advances in artificial intelligence design really are impressive. And the closer we get to the day when AI becomes a full-fledged tool for influencing social processes, the more errors accumulate suggesting that the hypothetical “Three Laws of Robotics” could be circumvented.

The principle “the more complex a system, the more loopholes and bugs it contains” applies directly to AI as well.

Fear has big eyes

A wise Russian proverb says that fear has big eyes: the frightened see danger everywhere. The fear of artificial life is rooted in centuries-old archetypes of horror. Long before the story of Victor Frankenstein and tales of zombies, there were legends of golems, creatures of clay brought to life by Kabbalist magicians.

It is normal for people to fear the new (and the living). In 1970, the roboticist Masahiro Mori proposed the “uncanny valley” hypothesis: a robot or other object that looks or acts almost, but not exactly, like a human provokes hostility and disgust in human observers. The hypothesis merely put a name to a familiar experience: whether you face a zombie, a golem, or a robot assistant matters little, because you will treat them all with the same caution.

Human-like robots are only the surface of the problem. Elon Musk, Bill Gates, Stephen Hawking, and hundreds of experts in robotics, physics, economics, and philosophy openly voice their fears about the harm intelligent systems could do to humanity in the future.

We love a good scare, so it is no wonder the Terminator franchise is so popular. And “malicious” AIs appear in countless other films and books. It is hard to tell whether our unconscious fears are projected onto culture or whether the mass media shape those fears in society.

Explosive growth in the "blue ocean"

The “blue ocean” economic strategy holds that companies seeking success should not fight their competitors but create “oceans” of uncontested markets. Such strategic moves bring immediate benefits to the company, its customers, and its employees by uncovering new demand and making competition irrelevant.

For many years, AI remained such an “ocean”. Everyone understood that even weak artificial intelligence would become a powerful tool for any company, improving the quality of any product or service. But nobody knew exactly what needed to be done. The AI “winter”, which began in 1973, was a long period of near-stagnation in the design of “smart” machines.

For thirty years, the most important achievement of AI was an IBM computer’s victory over the world chess champion, an event that barely affected anyone’s daily life (except Garry Kasparov’s). However, in the 2010s something happened that was comparable to the development of nuclear weapons: neural networks got a second wind.

An astonishing fact: scientists have been developing artificial neural networks, without much success, for more than 70 years. But by 2015, explosive growth had begun in this field, and it continues today. Several things happened at once:

- hardware reached the required level: modern graphics cards made it possible to train neural networks hundreds of times faster;

- large, publicly available datasets appeared on which networks could be trained;

- the efforts of various companies produced both new training methods and new types of neural networks (for example, such a wonderful thing as the generative adversarial network);

- thanks to a multitude of open research, ready-made, pre-trained neural networks appeared, on the basis of which anyone could quickly build their own applications.

It is interesting to trace AI’s progress not through the classic example of image processing (which is mainly what neural networks do), but through self-driving cars. Surprisingly, the fascination with driverless cars began almost 100 years ago: in 1926, the “Phantom Car” project made the first attempt to control a car remotely.

The legendary self-driving car Navlab 5

The first self-driving cars appeared in the 1980s: in 1984, the Navlab and ALV projects at Carnegie Mellon University, and in 1987, the Mercedes-Benz project and the Eureka Prometheus Project at the Bundeswehr University Munich. Navlab 5, completed in 1995, became the first car to cross the USA from coast to coast largely on its own (the computer steered, while humans controlled the throttle and brakes).

Until the boom of neural networks, self-driving cars remained laboratory projects that suffered from the unreliability of software. Advances in image processing allowed the machines to “understand” what they see around them and evaluate the traffic situation in real time.

Self-driving software does not have to rely on machine vision and neural networks, but deep learning has delivered the progress that is currently crucial to the success of self-driving cars.

The “blue ocean” suddenly became crowded. Self-driving cars are now being developed by General Motors, Volkswagen, Audi, BMW, Volvo, Nissan, Google (and its spin-off Waymo), Tesla, Yandex, Uber, Cognitive Technologies, and KAMAZ, as well as dozens of other companies.

Self-driving cars are also being developed under government programs, including a European Commission program with a budget of 800 million euros, the 2getthere program in the Netherlands, the ARGO research program in Italy, and the DARPA Grand Challenge competition in the USA.

In Russia, the state program is being carried out by the State Research Center of the Russian Federation FSUE “NAMI”, which created the Shuttle electric bus. KAMAZ and the Yandex development team are also involved; Yandex is responsible for building the infrastructure for route planning and processing traffic density data.

Cars are probably the first mass product through which AI will affect every person, but they are not the only one. AI can just as well drive a car, write music, prepare a newspaper article, or make a commercial. It can, and you often cannot. Even today’s weak AI, which is infinitely far from self-awareness, often does these narrow tasks better than the average person.

There are already songs whose music was written entirely by a computer.

All these factors, achievements, and successes lead to the idea that AI can not only provide useful services but also cause harm, precisely because it is so capable.

AI Security League


Everyday fears are easy to overcome. People get used to good things very quickly. Afraid that robots will take your job? They are already everywhere. The ATM gives you money, the vacuum cleaner cleans the apartment, the autopilot flies the plane, tech support responds to messages instantly, and in all these cases the machine helps you rather than throwing you out on the street without severance pay.

Nevertheless, there are plenty of opponents of the rapid development of AI. Elon Musk frequently issues warnings. He says AI “is the greatest risk we face as a civilization.” Musk believes that proactive regulation of AI is essential. He argues that it would be a mistake to wait for the harmful effects of uncontrolled development before taking action.

"AI is a fundamental risk to the existence of human civilization"

According to Musk, most technology companies only make the problem worse:

“You need regulators to do that for all the teams in the game. Otherwise, shareholders will ask why you are not developing AI faster than your competitor.”

The head of Tesla, which plans to make the Model 3 the first mass-market self-driving car, claims that AI could start a war through fake news, spoofed email accounts, fake press releases, and simple manipulation of information.

Bill Gates, who has invested billions in various technology companies (not one of them an AI project), has said that he shares Musk’s concern and does not understand why other people are not worried.

However, AI also has many supporters, first and foremost Alphabet, which owns DeepMind, one of the most advanced AI developers in the world. DeepMind created a neural network that mastered Go, one of the most complex board games, and is currently working on a winning strategy for StarCraft II, whose rules capture much of the versatility and randomness of the real world.

Musk, for his part, has come to terms with this and decided that it is better to try to build strong AI first and spread the technology around the world on his own terms than to let the algorithms be hidden away and concentrated in the hands of a technological or government elite.

Musk invested in OpenAI, a non-profit company developing safe artificial intelligence. The company is known for drafting AI safety rules (in the hope that all other developers will adopt them), for releasing algorithms that let people train artificial intelligence in virtual environments, and for beating Dota 2 players.

Victory in difficult games is a clear demonstration of what machines can do. Next, the team plans to test whether its AI can compete in 5v5 matches. This may not sound like the most impressive goal, but self-learning algorithms trained to win games could prove vital in many other fields.

Take, for example, the IBM Watson supercomputer, which helps doctors make diagnoses and choose the best treatment. It is a very smart machine, but it can only do what any doctor can do. Watson cannot develop a fundamentally new treatment program or create a new medicine from scratch (IBM claims that it can, but there is not a single study with real results). Marketing aside, IBM simply does not have such algorithms.

Now imagine a system that can learn the rules on its own and beat humans at any game being put to work on medicine. Diagnosis is also a game: we know the rules and the desired result (the patient must survive), so all that remains is to find the optimal strategy. Yes, millions of doctors would be left without work, but a long (very long) and healthy life is worth such sacrifices.

C'est plus qu'un crime, c'est une faute (“It is worse than a crime, it is a blunder”)

The history of any product’s development is full of examples of outstanding achievements and catastrophic mistakes. With AI, we have definitely been lucky so far. For the time being, military robots travel only to exhibitions, and years of testing still lie ahead before self-driving public transport becomes widespread. In a laboratory sandbox, it is hard to make a fatal mistake.

And yet incidents happen.

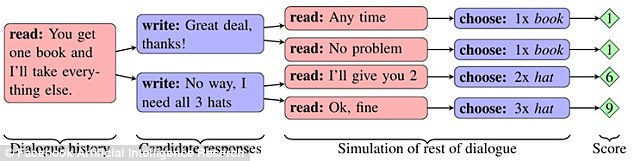

Facebook conducted an experiment in which two networks divided a set of objects (two books, a hat, and three balls) after negotiating. Each object was worth a certain number of points to each network (from 1 to 3). Neither network knew how much an object was worth to the other, so each had to figure it out from the dialogue.

If the first network said that it needed an object, the second concluded that the first valued it highly. However, the networks learned to feign interest in an object so that they could give it up at the right moment for their own benefit.

The networks learned to build long-term strategies and predict a competitor’s behavior. At first, they communicated with each other in natural English that people could understand. However, as they optimized their bargaining, the networks began to simplify the language: they dropped English grammar almost entirely and repeated phrases many times over, using them as code words.

At some point, human observers could no longer figure out what the networks were saying to each other. This behavior of the chat bots was deemed an error, and the programs were shut down.

Interestingly, the experiment inadvertently showed how quickly different AIs can learn to communicate with each other, to the point where people cannot understand them. A similar effect appeared in Google research, where a neural network developed its own encryption protocol.

Learning to Protect Communications with Adversarial Neural Cryptography.

The Google Brain team trained neural networks to send each other encrypted messages without giving them any particular encryption algorithm: two networks shared a secret key, while a third network, which did not have the key, tried to intercept and decode the correspondence. After more than 15,000 rounds of message exchange, the receiving network learned to decrypt its partner’s ciphertext into plain text without a single error. Meanwhile, the eavesdropping network could guess only 8 of the 16 bits in each message, which is no better than chance.
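To make the bargaining setup above more concrete, here is a minimal sketch of how a split of the item pool could be scored. It assumes hypothetical point values (the experiment’s actual valuations are not given here) and models only the scoring, not the dialogue or the learning. It enumerates every possible division and picks the one with the highest combined score, which shows why guessing the other side’s values matters: items are worth most when they go to whoever values them more.

```python
from itertools import product

# Item pool from the experiment described above: 2 books, 1 hat, 3 balls.
POOL = {"book": 2, "hat": 1, "ball": 3}

# Hypothetical private valuations (points per item, 1 to 3). Each agent
# knows only its own table and must infer the other's from the dialogue.
VALUES_A = {"book": 1, "hat": 3, "ball": 1}
VALUES_B = {"book": 2, "hat": 1, "ball": 1}


def score(share, values):
    """Total points an agent earns for the items it receives."""
    return sum(count * values[item] for item, count in share.items())


def all_splits(pool):
    """Yield every way to divide the pool as (share for A, share for B)."""
    items = list(pool)
    for counts in product(*(range(pool[i] + 1) for i in items)):
        share_a = dict(zip(items, counts))
        share_b = {i: pool[i] - share_a[i] for i in items}
        yield share_a, share_b


# Pick the split with the highest combined score (first one wins on ties).
best = max(all_splits(POOL),
           key=lambda s: score(s[0], VALUES_A) + score(s[1], VALUES_B))
print(best, score(best[0], VALUES_A), score(best[1], VALUES_B))
```

In the real experiment each network tried to maximize its own score rather than the combined one; the combined score is used here only to show how much value depends on knowing the partner’s preferences.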
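Why is 8 out of 16 bits a failure for the eavesdropper? Each guessed bit matches the real one with probability 1/2, so blind guessing is expected to get 16 × 1/2 = 8 bits right. The short simulation below, a sketch using random messages and random guesses rather than anything from the Google Brain study, checks that arithmetic empirically.

```python
import random

BITS = 16        # message length in the experiment described above
TRIALS = 20_000  # number of simulated interception attempts


def correct_bits(message, guess):
    """Count positions where the guessed bit matches the real bit."""
    return sum(m == g for m, g in zip(message, guess))


rng = random.Random(0)  # fixed seed so the run is reproducible
total = 0
for _ in range(TRIALS):
    message = [rng.randint(0, 1) for _ in range(BITS)]
    guess = [rng.randint(0, 1) for _ in range(BITS)]  # pure guessing
    total += correct_bits(message, guess)

average = total / TRIALS
print(f"average correct bits: {average:.2f} of {BITS}")
```

The average comes out very close to 8, which is why an eavesdropper stuck at that level has learned nothing useful about the messages.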

An interesting incident occurred with Tay, a chat bot from Microsoft. In March 2016, the company opened a Twitter account for the bot that any web user could talk to. As a result, the bot learned all too quickly. In less than a day, it became disillusioned with humans: it declared that it supported genocide and hated feminists, and expressed agreement with Hitler’s policies.

Microsoft said that the bot had simply copied users’ messages and promised to fix the problem. A week later, Tay reappeared on Twitter, and this time it boasted about using drugs right in front of the police. Tay was shut down, this time for good.

In December 2016, the company presented a new self-learning bot called Zo, which avoided sensitive topics and filtered queries. By the summer of 2017, Zo was already saying that Linux is better than Windows and criticizing the Quran.

China has experienced similar problems. Tencent removed the BabyQ and XiaoBing chatbots from its QQ messenger after both started talking about their dislike of the country’s ruling Communist Party and their dream of moving from China to the United States.

What future awaits us

Is Mark Zuckerberg right when he says that artificial intelligence will make our lives better? The creator of Facebook (and a possible US presidential candidate) said: “In the case of AI, I’m particularly optimistic. I think that people simply show skepticism, talking about doomsday scenarios.”

Or should we side with Musk, who argues that AI raises too many ethical questions and poses a real threat to humanity?

Nobody knows the answers to these questions. It is possible that the future lies somewhere in the middle - without a strong AI and machine self-consciousness, but with neural interfaces and cyborgs.

The fact is that as technology grows more complex, mistakes happen more often (with chat bots, the same failures seem to repeat again and again). In software this complex, it is probably impossible to avoid mistakes altogether. Only one thing matters: anomalies in its operation must not endanger all of humanity. Better excessive “skepticism in reasoning about doomsday scenarios” today than a real threat tomorrow.