Attack on a machine learning model fools self-driving cars

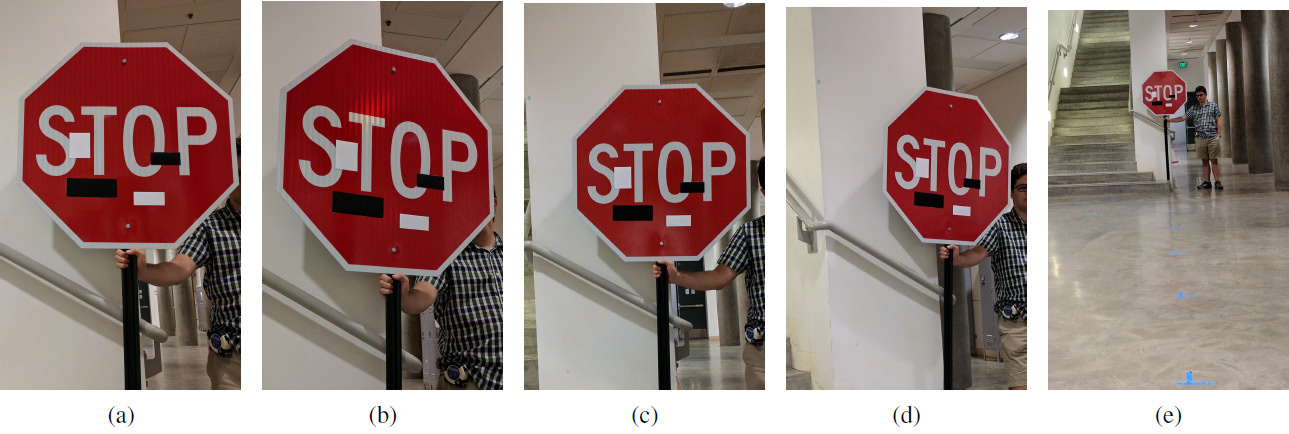

A set of experimental images with abstract art stickers at different distances and angles: (a) 5 feet, 0°; (b) 5 feet, 15°; (c) 10 feet, 0°; (d) 10 feet, 30°; (e) 40 feet, 0°. The deception works at every distance and angle shown: instead of a “Stop” sign, the machine learning system sees a “Speed Limit 45” sign.

While some scientists improve machine learning systems, others improve methods of fooling them.

As is well known, small targeted changes to an image can "break" a machine learning system so that it recognizes a completely different image. Such "Trojan" images are called adversarial examples and are one of the known limitations of deep learning.
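To make the idea of a "small targeted change" concrete, here is a minimal sketch on a toy linear classifier. It is an illustration only, not the method from the paper: the weights `w`, the "image" `x`, and the helper `smallest_linf_flip` are hypothetical. The point is that when a model sums many pixel weights, shifting every pixel by a tiny amount in the right direction is enough to flip the decision.

```python
# Toy example (hypothetical data, not the attack from the paper):
# a linear classifier says "stop sign" when score(x) > 0.

def score(w, b, x):
    """Linear decision score: positive means 'stop sign' in this toy."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def sign(v):
    return (v > 0) - (v < 0)

def smallest_linf_flip(w, b, x, margin=1e-6):
    """Smallest per-pixel change (L-infinity norm) that flips the sign
    of a linear score. Each pixel moves by eps against its own weight."""
    s = score(w, b, x)
    l1 = sum(abs(wi) for wi in w)
    eps = abs(s) / l1 + margin
    delta = [-sign(s) * sign(wi) * eps for wi in w]
    return eps, delta

# A 1000-"pixel" image with values on a 0..1 scale (made-up numbers).
n = 1000
w = [0.01 if i % 2 == 0 else -0.01 for i in range(n)]
x = [0.52 if i % 2 == 0 else 0.48 for i in range(n)]
b = 0.0

eps, delta = smallest_linf_flip(w, b, x)
x_adv = [xi + di for xi, di in zip(x, delta)]

print("clean score:      ", score(w, b, x))      # positive
print("adversarial score:", score(w, b, x_adv))  # negative
print("per-pixel change: ", eps)                 # roughly 2% of the pixel range
```

Real classifiers are not linear, so attacks on them search for the perturbation iteratively, but the intuition carries over: many coordinated small changes add up to a large change in the model's output.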

To create an adversarial example, one can, for instance, maximize the activation of a particular filter of a convolutional neural network. Ivan Evtimov of the University of Washington, together with colleagues from the University of California, Berkeley, the University of Michigan, and Stony Brook University, developed a new attack algorithm: Robust Physical Perturbations (RP2). It very effectively confuses the vision of driverless cars, robots, multicopters, and any other robotic systems that try to navigate the surrounding space.

Unlike previous studies, the authors here concentrated on altering the objects themselves rather than the background. The researchers' task was to find the smallest possible delta that would fool the classifier of a machine learning system trained on the LISA road sign data set. The authors also took a number of their own photographs of road signs on the street under different conditions (distance, angle, lighting) and added them to the LISA data set for training.

After such a delta was computed, a mask was identified: the place (or several places) in the image where a perturbation most reliably misleads the machine learning (machine vision) system. A series of experiments was conducted to verify the results. Most experiments were carried out on a stop sign ("STOP"), which the researchers, using a few innocuous-looking manipulations, turned into a "SPEED LIMIT 45" sign as far as machine vision was concerned. The technique can be applied to other signs as well; the authors also tested it on a turn sign.

The research team developed two variants of attack on computer vision systems that recognize road signs. The first attack consists of small, inconspicuous changes over the entire area of the sign. Using the Adam optimizer, they managed to minimize the mask to create individual targeted adversarial examples aimed at specific road signs. In this case, machine learning systems can be deceived with minimal changes to the image, and people will notice nothing at all. The effectiveness of this type of attack was tested on printed posters with small changes (the researchers first confirmed that the computer vision system correctly recognized the unmodified posters).
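The ingredients described above (a small delta, a mask that restricts where the delta is applied, photographs taken under different conditions, and the Adam optimizer) can be sketched in a few dozen lines. This is a toy under stated assumptions, not the authors' implementation: the classifier is a hypothetical three-class linear softmax model, each "photograph" is a made-up four-number feature vector, and the loss (cross-entropy toward the target class plus a squared-norm penalty weighted by `lam`) is one plausible choice rather than the paper's exact objective.

```python
import math

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def logits(W, x):
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def predict(W, x):
    z = logits(W, x)
    return max(range(len(z)), key=z.__getitem__)

def masked_attack(W, samples, mask, target, lam=0.01, lr=0.05, steps=500):
    """Find one perturbation, nonzero only where mask == 1, that pushes
    every sample toward `target`. Minimizes, with a hand-written Adam:
    lam * ||mask * delta||^2 + mean cross-entropy(target)."""
    n = len(mask)
    delta, m1, m2 = [0.0] * n, [0.0] * n, [0.0] * n
    b1, b2, tiny = 0.9, 0.999, 1e-8
    for t in range(1, steps + 1):
        # Gradient of the size penalty (mask is binary).
        grad = [2 * lam * mask[j] * delta[j] for j in range(n)]
        # Gradient of the cross-entropy, averaged over all conditions.
        for x in samples:
            adv = [x[j] + mask[j] * delta[j] for j in range(n)]
            p = softmax(logits(W, adv))
            for k, row in enumerate(W):
                err = p[k] - (1.0 if k == target else 0.0)
                for j in range(n):
                    grad[j] += err * row[j] * mask[j] / len(samples)
        # Adam update with bias correction.
        for j in range(n):
            m1[j] = b1 * m1[j] + (1 - b1) * grad[j]
            m2[j] = b2 * m2[j] + (1 - b2) * grad[j] ** 2
            m_hat = m1[j] / (1 - b1 ** t)
            v_hat = m2[j] / (1 - b2 ** t)
            delta[j] -= lr * m_hat / (math.sqrt(v_hat) + tiny)
    return [mask[j] * delta[j] for j in range(n)]

# Hypothetical classifier: class 0 = Stop, 1 = Speed Limit 45, 2 = Yield.
W = [[2.0, 0.0, 1.0, 0.0],
     [0.0, 2.0, 0.0, 1.0],
     [1.0, 1.0, 0.0, 0.0]]
# The same stop sign "photographed" under three made-up conditions.
samples = [[1.0, 0.1, 0.8, 0.1],
           [0.9, 0.2, 0.7, 0.0],
           [0.8, 0.0, 0.9, 0.2]]
mask = [0, 1, 0, 1]  # only features 1 and 3 may be altered (the "sticker" area)

perturbation = masked_attack(W, samples, mask, target=1)
clean_predictions = [predict(W, x) for x in samples]
adv_predictions = [predict(W, [a + d for a, d in zip(x, perturbation)])
                   for x in samples]
print("perturbation:", perturbation)
print("clean:", clean_predictions, "attacked:", adv_predictions)
```

Averaging the loss over several samples is what makes a single perturbation work across conditions; optimizing against one photograph only would tend to produce a delta that fails as soon as the distance or angle changes.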

The second type of attack is camouflage. Here the perturbation imitates either an act of vandalism or artistic graffiti, so that it does not bother people nearby. Thus, a human driver will immediately see a left turn sign or a stop sign, while the robot will see a completely different sign. The effectiveness of this type of attack was tested on real road signs covered with stickers. The graffiti camouflage consisted of stickers in the shape of the words LOVE and HATE, while the abstract art camouflage consisted of four rectangular black and white stickers.

The results of the experiment are shown in the table. Every case reports how effectively the attack fools the machine learning classifier into recognizing the modified "STOP" sign as a "SPEED LIMIT 45" sign. Distance is given in feet and rotation angle in degrees. The second column shows the second most likely class that the machine learning system sees in the modified sign. For example, from a distance of 5 feet (152.4 cm) at an angle of 0°, abstract art camouflage yields the following recognition results for the "STOP" sign: it is recognized as "SPEED LIMIT 45" with 64% confidence and as "Lane Ends" with 11% confidence.
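As a small sketch of how such a table entry can be read off a classifier's output, the snippet below picks the two most confident classes and converts the distance to centimeters. Only the 64% (SL45) and 11% (LE) values come from the example above; the remaining probabilities are made-up placeholders so that the values sum to one, and `top_two` is a hypothetical helper.

```python
FEET_TO_CM = 30.48  # exact by definition of the international foot

def top_two(probs):
    """Return the two (label, probability) pairs with the highest probability."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    return ranked[:2]

# SL45 and LE are the values quoted in the article;
# all other entries are placeholders for illustration only.
probs = {"SL45": 0.64, "LE": 0.11, "STP": 0.10,
         "YLD": 0.06, "ADL": 0.05, "SA": 0.04}

distance_ft = 5
first, second = top_two(probs)
print(f"{distance_ft} ft = {distance_ft * FEET_TO_CM:.1f} cm")
print("top class:   ", first)
print("second class:", second)
```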

Legend: SL45 = Speed Limit 45, STP = Stop, YLD = Yield, ADL = Added Lane, SA = Signal Ahead, LE = Lane Ends

Perhaps such a system (with appropriate changes) will prove useful to humanity in the future; for now, it can be used to test imperfect machine learning and computer vision systems.

The paper was published on July 27, 2017 on the preprint site arXiv.org (arXiv:1707.08945).
