An artificial brain can be created now

The era of brain-inspired computing has arrived. Algorithms built on neural networks and deep learning, which imitate some aspects of the human brain, have allowed digital computers to reach remarkable heights: translating languages, finding subtle patterns in huge amounts of data, and beating humans at Go.

But even as engineers continue to push this powerful computing strategy forward, the energy efficiency of digital computing is approaching its limits. Our data centers and supercomputers already draw megawatts, and data centers account for 2% of all electricity consumed in the United States. The human brain, by contrast, gets by on about 20 watts, a small fraction of the energy contained in the food we eat each day. If we want to keep improving computing systems, we need to make computers more like brains.

This idea has driven a surge of interest in neuromorphic technology, which promises to take computing beyond simple neural networks, toward circuits that operate like neurons and synapses. Brain-like physical circuits are already fairly well developed. Over the past 35 years, work in my laboratory and at other institutions around the world has produced artificial neural components, similar to synapses and dendrites, that respond to and generate electrical signals much as real ones do.

So what would it take to integrate these building blocks into a full-scale computer brain? In 2013, Bo Marr, my former graduate student at the Georgia Institute of Technology, helped me assess the state of the art in engineering and neuroscience. We concluded that it is possible to build a silicon version of the human cerebral cortex using transistors. What's more, the resulting machine would occupy less than a cubic meter and consume less than 100 watts, not so different from the human brain.

I do not mean that building such a computer will be easy. The system we envisioned would cost several billion dollars to develop and build, and making it compact would require several advanced innovations. It also raises the question of how we would program and train such a computer. Neuromorphic researchers are still struggling to understand how to make thousands of artificial neurons work together and how to put brain-like activity to useful work.

Still, the fact that we can conceive of such a system suggests that smaller-scale chips, suitable for portable and wearable electronics, may not be far off. Such gadgets must run on very little power, so a highly energy-efficient neuromorphic chip could be revolutionary even if it handles only part of the computation, such as signal processing. Existing features, such as speech recognition, could work in noisy environments. You can even imagine future smartphones translating speech in real time during a conversation between two people. Consider this: in the 40 years since integrated circuits for signal processing appeared, Moore's law has improved their energy efficiency by a factor of about 1,000. Highly brain-like neuromorphic chips could far surpass those gains, cutting energy consumption by another factor of 100 million. As a result, computations that once required a data center would fit in the palm of your hand.
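The efficiency figures in this paragraph are easier to compare as compound rates. The sketch below converts the quoted 1,000-fold gain over 40 years into a yearly rate. It then asks how long the further 100-million-fold neuromorphic gain would take at that pace. It assumes steady exponential improvement, which is a simplification because real progress was uneven.

```python
import math

def annual_factor(total_gain: float, years: float) -> float:
    """Constant yearly improvement that compounds to `total_gain` over `years`."""
    return total_gain ** (1.0 / years)

def doubling_time(total_gain: float, years: float) -> float:
    """Years needed to double efficiency at that same constant rate."""
    return years * math.log(2) / math.log(total_gain)

def years_at_rate(gain: float, rate: float) -> float:
    """Years needed to reach `gain` when improving by `rate` per year."""
    return math.log(gain) / math.log(rate)

# Figures quoted in the text: ~1,000x over ~40 years for signal-processing ICs.
moore_rate = annual_factor(1_000, 40)
moore_doubling = doubling_time(1_000, 40)
# The further 100-million-fold gain claimed for neuromorphic chips,
# re-expressed as years of progress at the historical Moore's-law pace.
years_needed = years_at_rate(100e6, moore_rate)

print(f"about {moore_rate:.3f}x per year, doubling every {moore_doubling:.1f} years")
print(f"100 million x at that pace: about {years_needed:.0f} years")
```

At the historical pace, the claimed neuromorphic gain would correspond to roughly a century of Moore's-law progress.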

An ideal brain-like machine will need analogs of all the brain's main functional components. These are the synapses, which connect neurons and allow them to receive and respond to signals. They also include the dendrites, which combine incoming signals and perform local computations on them. Finally, there is the cell body, or soma, the region of each neuron that combines the inputs from the dendrites and passes the output to the axon.

The simplest versions of these basic components have already been implemented in silicon. This work began with the metal-oxide-semiconductor field-effect transistor, or MOSFET. It is the same device that is replicated billions of times in the logic circuits of modern digital processors.

These devices have a lot in common with neurons. Neurons operate through voltage-controlled barriers. Their electrical and chemical activity depends mainly on ion channels, through which ions move between the inside and outside of the cell. This is a smooth, analog process in which a signal steadily builds up or decays, rather than a simple on/off operation.

MOSFETs are also voltage-controlled and operate through the movement of individual units of charge. MOSFETs can also operate in the “subthreshold” mode, below the threshold voltage at which they switch between on and off states. In this mode, the current flowing through the device is very small: less than one-thousandth of the current in typical switches or digital gates.
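The key property of the subthreshold regime is that drain current depends exponentially on gate voltage. This resembles the Boltzmann-type exponential dependence on membrane voltage that appears in models of ion-channel gating. The sketch below uses the standard simplified subthreshold model. The pre-exponential current `i0` and the gate-coupling coefficient `kappa` are hypothetical values chosen only for illustration, not figures from this article.

```python
import math

K_B = 1.380649e-23      # Boltzmann constant, J/K
Q_E = 1.602176634e-19   # elementary charge, C

def thermal_voltage(temp_k: float = 300.0) -> float:
    """U_T = kT/q, about 25.9 mV at 300 K."""
    return K_B * temp_k / Q_E

def subthreshold_current(v_gs: float, i0: float = 1e-15,
                         kappa: float = 0.7, temp_k: float = 300.0) -> float:
    """Saturated subthreshold drain current: I = i0 * exp(kappa * V_gs / U_T).

    i0 and kappa are assumed, illustrative values; real ones depend on the process.
    """
    return i0 * math.exp(kappa * v_gs / thermal_voltage(temp_k))

def volts_per_decade(kappa: float = 0.7, temp_k: float = 300.0) -> float:
    """Gate-voltage step that changes the current tenfold."""
    return thermal_voltage(temp_k) * math.log(10) / kappa

for v in (0.1, 0.2, 0.3, 0.4):
    print(f"V_gs = {v:.1f} V -> I = {subthreshold_current(v):.2e} A")
print(f"about {1000 * volts_per_decade():.0f} mV per decade of current")
```

With these assumed values, roughly every 85 mV of gate voltage changes the current by a factor of 10. A transistor biased this way therefore behaves as a smooth analog device over many orders of magnitude of current, rather than as an on/off switch.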

The idea that the physics of subthreshold transistors could be used to build brain-like circuits came from Carver Mead of Caltech, who helped drive the revolution in very-large-scale integration (VLSI) in the 1970s. Mead pointed out that chip designers ignored many interesting aspects of transistor behavior by using transistors exclusively for digital logic. As he wrote in 1990, in this process "all the beautiful physics that exists in transistors is crushed down to zero and one, and then AND and OR gates are painfully built on that basis to reinvent multiplication." A more “physical,” or physics-based, computer could perform more computations per unit of energy than a conventional digital one. Mead also predicted that such a computer would take up less space.

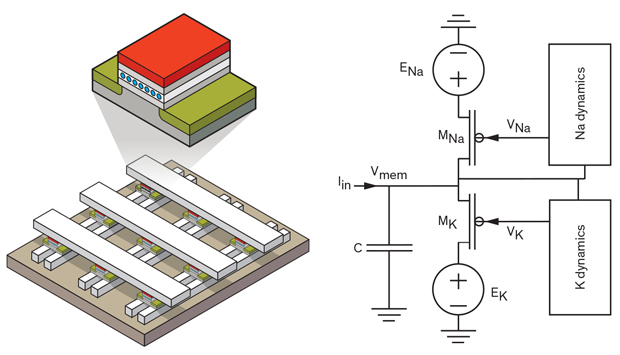

In the years since, neuromorphic engineers have built all the basic building blocks of the brain in silicon with high biological fidelity. Dendrites, axons, and the neuron's soma can be made from standard transistors and other elements. For example, in 2005, Ethan Farquhar and I built a neuron circuit from six MOSFETs and a handful of capacitors. Our circuit produced electrical pulses very similar to those generated by the soma of squid neurons, a long-standing subject of experiments. Moreover, our circuit achieved this with current levels and energy consumption close to those in the squid brain. Had we instead used analog circuits to model the equations neuroscientists derived to describe this behavior, we would have needed 10 times as many transistors. Performing those calculations on a digital computer would take even more space.

Synapses and soma: a floating-gate transistor (top left), which can store varying amounts of charge, can be used to build a crossbar array of artificial synapses (bottom left). Electronic versions of other neuron components, such as the soma (right), can be made from standard transistors and other components.
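The equations neuroscientists derived for the squid neuron are presumably the Hodgkin-Huxley model. Below is a minimal forward-Euler simulation of that model using the classic squid-axon parameters, in the modern convention with rest near -65 mV. It gives a sense of what the digital route involves. It is an illustrative sketch, not a model of the six-MOSFET circuit described above. Every 0.01 ms time step here costs dozens of floating-point operations, including six exponentials.

```python
import math

# Classic Hodgkin-Huxley squid-axon parameters (modern convention, rest ~ -65 mV).
C_M = 1.0                               # membrane capacitance, uF/cm^2
G_NA, G_K, G_L = 120.0, 36.0, 0.3       # maximum conductances, mS/cm^2
E_NA, E_K, E_L = 50.0, -77.0, -54.387   # reversal potentials, mV

def _ratio(x: float, scale: float) -> float:
    """x / (1 - exp(-x/scale)), using its limit `scale` at x = 0."""
    if abs(x) < 1e-7:
        return scale
    return x / (1.0 - math.exp(-x / scale))

def rates(v: float):
    """Opening (alpha) and closing (beta) rates of the m, h, n gates, per ms."""
    a_m = 0.1 * _ratio(v + 40.0, 10.0)
    b_m = 4.0 * math.exp(-(v + 65.0) / 18.0)
    a_h = 0.07 * math.exp(-(v + 65.0) / 20.0)
    b_h = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
    a_n = 0.01 * _ratio(v + 55.0, 10.0)
    b_n = 0.125 * math.exp(-(v + 65.0) / 80.0)
    return a_m, b_m, a_h, b_h, a_n, b_n

def simulate(i_inj: float, t_ms: float = 50.0, dt: float = 0.01) -> int:
    """Integrate with forward Euler; return the number of spikes (upward 0 mV crossings)."""
    v = -65.0
    a_m, b_m, a_h, b_h, a_n, b_n = rates(v)
    # Start every gate at its resting steady-state value.
    m, h, n = a_m / (a_m + b_m), a_h / (a_h + b_h), a_n / (a_n + b_n)
    spikes, above = 0, False
    for _ in range(int(t_ms / dt)):
        a_m, b_m, a_h, b_h, a_n, b_n = rates(v)
        i_ion = (G_NA * m**3 * h * (v - E_NA)
                 + G_K * n**4 * (v - E_K)
                 + G_L * (v - E_L))
        v += dt * (i_inj - i_ion) / C_M
        m += dt * (a_m * (1.0 - m) - b_m * m)
        h += dt * (a_h * (1.0 - h) - b_h * h)
        n += dt * (a_n * (1.0 - n) - b_n * n)
        if v > 0.0 and not above:
            spikes += 1
        above = v > 0.0
    return spikes

print("spikes with no input:", simulate(0.0))
print("spikes with 10 uA/cm^2 for 50 ms:", simulate(10.0))
```

A sustained injected current of 10 uA/cm^2 drives the model into repetitive firing, while the unstimulated model stays at rest.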

Synapses are somewhat harder to emulate. A device that behaves like a synapse must be able to remember its state, respond in a particular way to an incoming signal, and adapt its responses over time.

There are several potential approaches to building synapses. The most mature is the single-transistor learning synapse (STLS). My colleagues and I worked on it at Caltech in the 1990s, when I was a graduate student with Mead.

We first introduced the STLS in 1994, and it became an important tool for engineers building modern analog circuits, such as physical neural networks. In a neural network, each node has a weight associated with it, and these weights determine how data from different nodes are combined. The STLS was the first device that could hold a range of different weights and be reprogrammed on the fly. The device is also non-volatile, meaning it remembers its state even when not in use, which significantly reduces its energy requirements.

The STLS is a type of floating-gate transistor, the device used to build the cells of flash memory. In a conventional MOSFET, the gate controls the current flowing through the channel. A floating-gate transistor has a second gate, between the electrical gate and the channel. This floating gate is not connected directly to ground or to any other component. Thanks to this electrical isolation, reinforced by high-quality silicon insulators, charge remains on the floating gate for a long time. The gate can hold different amounts of charge, so it can produce an electrical response at many levels. That is exactly what an artificial synapse needs in order to vary its response to a stimulus.
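Why does charge stored on an isolated gate work as a synaptic weight? One common simplified model treats the floating-gate voltage as a capacitive divider of the input plus the stored charge. Because subthreshold current is exponential in that voltage, the stored charge becomes a multiplicative factor on the output. The sketch below assumes that idealized model, with made-up capacitances and an n-type sign convention in which more positive stored charge means more current. The names `C_IN`, `C_T`, `weight`, and `charge_for_weight` are hypothetical.

```python
import math

U_T = 0.0259           # thermal voltage near room temperature, V
KAPPA = 0.7            # assumed gate-coupling coefficient
C_IN = 1e-15           # hypothetical input-to-floating-gate capacitance, F
C_T = 2e-15            # hypothetical total floating-gate capacitance, F
I0 = 1e-15             # hypothetical pre-exponential current, A
Q_E = 1.602176634e-19  # elementary charge, C

def floating_gate_voltage(v_in: float, q_fg: float) -> float:
    """Capacitive divider of the input, offset by the charge stored on the gate."""
    return (C_IN * v_in + q_fg) / C_T

def synapse_current(v_in: float, q_fg: float) -> float:
    """Subthreshold output current of the idealized floating-gate synapse."""
    return I0 * math.exp(KAPPA * floating_gate_voltage(v_in, q_fg) / U_T)

def weight(q_fg: float) -> float:
    """Multiplicative gain contributed by the stored charge alone."""
    return math.exp(KAPPA * q_fg / (C_T * U_T))

def charge_for_weight(w: float) -> float:
    """Stored charge needed to realize weight w (inverse of `weight`)."""
    return math.log(w) * C_T * U_T / KAPPA

q = charge_for_weight(3.0)
print(f"weight 3 needs about {q / Q_E:.0f} elementary charges on the gate")
# The stored charge scales the response to any input by the same factor (~3 here).
print(synapse_current(0.5, q) / synapse_current(0.5, 0.0))
```

Changing the stored charge rescales the response to every input by the same factor, which is what a neural-network weight does. With these assumed capacitances, a weight of 3 corresponds to only about 500 elementary charges.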

My colleagues and I used the STLS to demonstrate the first crossbar array, a computational structure that is popular with nanodevice researchers. In this two-dimensional array, devices sit at the intersections of input lines running from top to bottom and output lines running from left to right. The configuration is useful because it lets you program the connection strength of each synapse individually, without disturbing the other elements of the array.
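Functionally, a crossbar performs a vector-matrix multiplication. Each output line sums the contributions of every input line, weighted by the synapse at their intersection; in hardware, the currents simply add on the shared wire. The sketch below models this in plain Python using hypothetical helper names (`make_crossbar`, `program`, `read_out`). It ignores device physics entirely and shows only the data flow and the per-cell programming described above.

```python
from typing import List

Matrix = List[List[float]]

def make_crossbar(outputs: int, inputs: int) -> Matrix:
    """One row per output line, one column per input line, all weights zero."""
    return [[0.0] * inputs for _ in range(outputs)]

def program(xbar: Matrix, out_line: int, in_line: int, value: float) -> None:
    """Set the synapse at a single intersection; no other cell is touched."""
    xbar[out_line][in_line] = value

def read_out(xbar: Matrix, inputs: List[float]) -> List[float]:
    """Each output line sums weight * input over all input lines."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in xbar]

xbar = make_crossbar(outputs=2, inputs=3)
program(xbar, 0, 0, 1.0)
program(xbar, 0, 2, 2.0)
program(xbar, 1, 1, 4.0)
print(read_out(xbar, [1.0, 0.5, 2.0]))  # [5.0, 2.0]
```

Building the rows with a list comprehension gives each output line its own list. Programming one cell therefore cannot alias into another row.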

Spurred in part by a recent DARPA program called SyNAPSE, neuromorphic engineering has seen a surge of research on artificial synapses built from nanodevices. These include memristors, resistive memory, and phase-change memory, as well as floating-gate devices. But these new artificial synapses will be hard-pressed to improve on floating-gate arrays from twenty years ago. Memristors and other emerging memory types are difficult to program. The architecture of some of them makes it hard to access a specific device in a crossbar array. Others require a dedicated transistor for programming, which significantly increases their size. Floating-gate memory can be programmed to a wide range of values. It is therefore easier than other nanodevices to tune to compensate for device-to-device manufacturing variation. Several research groups studying neuromorphic devices tried to incorporate nanodevices into their designs and ended up using floating-gate devices instead.
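The mismatch-compensation argument can be illustrated with a program-and-verify loop. Apply a small programming step, measure the device's actual output, and repeat until the output matches the target. Because the loop corrects against the measurement, each device ends up storing whatever value cancels its own manufacturing error. This is a generic, idealized sketch with a hypothetical linear device model (`gain * stored`), not a description of any particular lab's programming procedure.

```python
import random

def program_and_verify(target: float, gain: float, step: float = 0.5,
                       tol: float = 0.01, max_pulses: int = 100) -> tuple:
    """Adjust a device's stored value until its measured output reaches target.

    `gain` stands in for a per-device manufacturing deviation (the device
    outputs gain * stored). The correction uses only the measured output,
    never `gain` itself. Real floating-gate programming uses one-directional
    injection/tunneling pulses; this idealized update can move either way.
    """
    stored = 0.0
    for pulses in range(max_pulses):
        measured = gain * stored            # read back the device
        error = target - measured
        if abs(error) <= tol:
            return stored, pulses
        stored += step * error              # partial correction per pulse
    raise RuntimeError("device did not converge")

rng = random.Random(0)
for device in range(4):
    gain = rng.uniform(0.8, 1.2)            # hypothetical +/-20% mismatch
    stored, pulses = program_and_verify(1.0, gain)
    print(f"device {device}: gain {gain:.3f}, stored {stored:.3f}, "
          f"output {gain * stored:.3f} after {pulses} pulses")
```

Each device converges to a different stored value, roughly the target divided by its own gain. As a result, all devices produce nearly the same output despite the mismatch.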

How, then, do we combine all these brain-like components? In the human brain, neurons and synapses are intertwined. Designers of neuromorphic chips should likewise take an integrated approach and place all components on a single chip. But in many laboratories you will not find this: to make research projects easier to manage, the basic units are kept in separate places. Synapses may sit in an array off the chip. Connections may be routed through another chip, a field-programmable gate array (FPGA).

But as we scale up neuromorphic systems, we must take care not to copy the structure of modern computers. These lose a significant amount of energy shuttling bits back and forth between logic, memory, and storage. Today, a computer can easily spend 10 times more energy moving data than computing with it.

The brain, by contrast, minimizes the energy cost of communication by keeping operations highly local. The brain's memory elements, such as synaptic strengths, are interspersed with the components that transmit signals. The brain's "wires" are the dendrites and axons, which carry incoming signals and outgoing pulses. They are generally short relative to the size of the brain, so they need little energy to sustain a signal. Anatomy tells us that more than 90% of neurons connect only to about 1,000 of their neighbors.
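A rough geometric sketch shows why local connectivity keeps wires short. It lays hypothetical neurons on a 2-D grid and compares two cases. In the first, each neuron connects to its roughly 1,000 nearest neighbors. In the second, connections run randomly across a 1,000 x 1,000 sheet. The grid, the sheet size, and the assumption that wiring energy grows with wire length are simplifications for illustration only.

```python
import math
import random

def local_wiring(radius: int) -> tuple:
    """Neighbors within `radius` grid steps on a 2-D sheet, and their mean distance."""
    dists = [math.hypot(dx, dy)
             for dx in range(-radius, radius + 1)
             for dy in range(-radius, radius + 1)
             if 0 < dx * dx + dy * dy <= radius * radius]
    return len(dists), sum(dists) / len(dists)

def random_wiring(side: int, samples: int = 10_000, seed: int = 0) -> float:
    """Mean distance between random pairs of neurons on a side x side sheet."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        x1, y1, x2, y2 = (rng.uniform(0, side) for _ in range(4))
        total += math.hypot(x1 - x2, y1 - y2)
    return total / samples

count, local_mean = local_wiring(radius=18)   # roughly 1,000 nearest neighbors
random_mean = random_wiring(side=1000)        # a hypothetical 1,000 x 1,000 sheet
print(f"{count} local neighbors, mean wire length {local_mean:.1f} units")
print(f"random connections: mean wire length {random_mean:.1f} units")
print(f"local wiring is about {random_mean / local_mean:.0f}x shorter")
```

Local wiring stays the same length no matter how large the sheet grows, while random wiring grows with it. Locality therefore matters more as systems scale.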

Another big question for builders of brain-like chips and computers is which algorithms those machines will run. Even a modestly brain-like system can offer a big advantage over a conventional digital one. For example, in 2004, my group used floating-gate devices to perform multiplication for signal processing. This required 1,000 times less energy and 100 times less area than a digital system. In the years since, researchers have successfully demonstrated neuromorphic approaches to other kinds of signal-processing computations.

But the brain is still 100,000 times more efficient than these systems. That is because our current neuromorphic technologies exploit the neuron-like physics of transistors but do not run algorithms like the ones the brain uses.

Today we are just beginning to discover these physical algorithms, processes that could let brain-like chips approach the brain's efficiency. Four years ago, my group used silicon somas, synapses, and dendrites to run a word-spotting algorithm that recognized words in audio recordings. This algorithm showed a thousandfold improvement in energy efficiency over analog signal processing. Eventually, by lowering the voltage applied to the chips and using smaller transistors, researchers should be able to build chips that rival the brain's efficiency on many types of computation.

When I began working in neuromorphic engineering 30 years ago, everyone believed that brain-like systems would give us amazing capabilities. And indeed, entire industries are being built around AI and deep learning. These applications promise to transform our mobile devices, our financial institutions, and how people interact in public spaces.

Yet these applications draw very little on our knowledge of how the brain works. Over the next 30 years, we will no doubt see that knowledge put to ever greater use. We already have many of the basic hardware blocks needed to translate neuroscience into computers. But we need a better understanding of how this hardware should operate, and which computational schemes will give the best results.

Consider this a call to action. We have achieved a lot using a very rough model of the brain. But neuroscience could lead us to build more sophisticated brain-like computers. And what better tool for figuring out how to build these new computers than our own brains?
