Bayes' theorem: why all the fuss?

Bayes' theorem is touted as a powerful method for generating new knowledge, but it can also be used to promote superstition and pseudoscience.

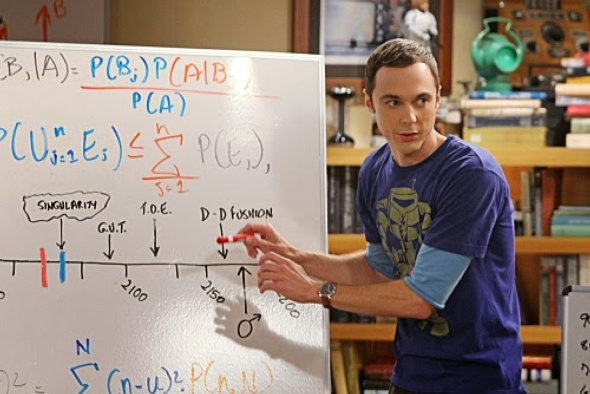

Bayes' theorem has become so popular that it even appeared on the television show The Big Bang Theory. But like any tool, it can be used for good or ill.

I don't remember exactly when I first heard about it. But I only really began to take an interest in the last ten years, after several of the nerdiest of my students began touting it as a magic guide to life.

The students' ravings confused me, as did the explanations of the theorem on Wikipedia and other sites: they were either too dumbed down or too complicated. I decided that Bayes was a passing fad and not worth deeper study. But now Bayes fever has become too annoying to ignore.

According to The New York Times, Bayesian statistics "penetrates everywhere, from physics to cancer research, from ecology to psychology." Physicists have proposed Bayesian interpretations of quantum mechanics and Bayesian defenses of string theory and multiverse theory. Philosophers argue that science as a whole can be viewed as a Bayesian process, and that Bayes distinguishes science from pseudoscience better than the falsifiability criterion popularized by Karl Popper.

AI researchers, including the developers of self-driving cars at Google, use Bayesian software to help machines recognize patterns and make decisions. Bayesian programs, according to Sharon Bertsch McGrayne, author of a popular history of Bayes' theorem, "sort email and spam, evaluate medical risks and national security, decipher DNA," and more. On Edge.org, physicist John Mather worries that Bayesian machines could become so smart that they displace humans.

Cognitive scientists suggest that our brains run Bayesian algorithms as they perceive, deliberate, and decide. In November, scientists and philosophers explored this possibility at a conference at New York University called “Is the Brain Bayesian?”

Zealots insist that if more people adopted conscious Bayesian reasoning (as opposed to the unconscious Bayesian processing that supposedly goes on in the brain), the world would be a much better place. In the article "An Intuitive Explanation of Bayes' Theorem," AI theorist Eliezer Yudkowsky acknowledges the adoration that Bayes inspires:

“Why does a mathematical concept generate this strange enthusiasm in its students? What is the so-called Bayesian Revolution now sweeping through the sciences, which claims to subsume even the experimental method itself as a special case? What is the secret that the adherents of Bayes know? What is the light that they have seen? Soon you will know. Soon you will be one of us.” Yudkowsky is joking. Or is he?

Given all this hype, I have tried to get to the bottom of Bayes once and for all. Of the countless explanations of the theorem on the Internet, the best I found were those by Yudkowsky, Wikipedia, the philosopher Curtis Brown, and the computer scientists Oscar Bonilla and Kalid Azad. I will now try, mainly for my own benefit, to explain what the theorem is about.

Bayes' theorem, named after the 18th-century Presbyterian minister Thomas Bayes, is a method for calculating the validity of beliefs (hypotheses, claims, propositions) based on the available evidence (observations, data, information). The simplest version is:

initial belief + new evidence = new, improved belief

In more detail: the probability that a belief is true given new evidence equals the probability that the belief is true regardless of that evidence, multiplied by the probability that the evidence is true given that the belief is true, divided by the probability that the evidence is true regardless of whether the belief is true. Got that?

A simple mathematical formula looks like this:

P (B | E) = P (B) * P (E | B) / P (E)

Here P is probability, B is the belief, and E is the evidence. P (B) is the probability that B is true, and P (E) is the probability that E is true. P (B | E) is the probability of B given that E is true, and P (E | B) is the probability of E given that B is true.

Medical tests are often used to demonstrate the formula. Suppose you are tested for a cancer that occurs in 1% of people your age. If the test is 100% reliable, you do not need Bayes' theorem to know what a positive result means, but let's work through this case anyway as an example.

To calculate P (B | E), plug the data into the right side of the equation. P (B), the probability that you have cancer before being tested, is 1%, or 0.01. P (E), the probability that the test comes back positive, is the same, because a perfect test is positive only for people who have cancer. Since these two values sit in the numerator and the denominator, they cancel, leaving P (B | E) = P (E | B) = 1. If the test is positive, you have cancer, and vice versa.
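
For readers who like to check the arithmetic, here is a minimal Python sketch of the formula applied to the perfect-test case. The function name `bayes_posterior` and its parameter names are my own labels for illustration, not part of any library.

```python
def bayes_posterior(prior: float, likelihood: float, evidence: float) -> float:
    """Return P(B|E) = P(B) * P(E|B) / P(E)."""
    if not 0 < evidence <= 1:
        raise ValueError("P(E) must be in the interval (0, 1]")
    return prior * likelihood / evidence


# Perfect test from the text: P(B) = 0.01, P(E|B) = 1, P(E) = 0.01.
perfect = bayes_posterior(prior=0.01, likelihood=1.0, evidence=0.01)
print(perfect)  # 1.0
```

The prior and the evidence cancel exactly, which is why a perfect test leaves no uncertainty.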

In the real world, tests are rarely 100% reliable. Suppose your test is 99% reliable. That is, 99 out of 100 people who have cancer will test positive, and 99 out of 100 healthy people will test negative. That is still an impressively reliable test. The question: if you test positive, what is the probability that you have cancer?

Now Bayes' theorem shows its full power. Most people assume the answer is 99%, or close to it. After all, the test is that reliable, right? But the correct answer is only 50%.

To see why, plug the data into the right side of the equation. P (B) is still 0.01. P (E | B), the probability of testing positive if you have cancer, is 0.99. So P (B) * P (E | B) = 0.01 * 0.99 = 0.0099. This is the probability of a true positive: that you have cancer and the test says so.

What about the denominator, P (E)? Here is the tricky part. P (E) is the probability of testing positive whether or not you have cancer. In other words, it includes both false positives and true positives.

To calculate the probability of a false positive, multiply the false-positive rate, 1% or 0.01, by the fraction of people who do not have cancer, 0.99. The result is 0.0099. Yes, your excellent 99%-accurate test produces as many false positives as true positives.

Let's finish the calculation. To get P (E), add the true and false positives for a total of 0.0198. Dividing 0.0099 by 0.0198 gives 0.5. So P (B | E), the probability that you have cancer given a positive test, is 50%.
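
The same calculation can be sketched in a few lines of Python. The names `sensitivity` (the chance that a sick person tests positive) and `specificity` (the chance that a healthy person tests negative) are my own labels. The text treats both as the single "reliability" figure of 99%.

```python
def posterior_from_test(prior: float, sensitivity: float, specificity: float) -> float:
    """P(cancer | positive test), with P(E) built from true and false positives."""
    true_positive = prior * sensitivity                # P(B) * P(E|B)
    false_positive = (1 - prior) * (1 - specificity)   # healthy but positive
    p_evidence = true_positive + false_positive        # P(E)
    return true_positive / p_evidence


# Numbers from the text: 1% prevalence, 99% reliable test.
p_cancer = posterior_from_test(prior=0.01, sensitivity=0.99, specificity=0.99)
print(f"{p_cancer:.2f}")  # 0.50
```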

If you take the test again, you can reduce the uncertainty enormously, because P (B), your probability of having cancer, is now 50% rather than 1%. If the second test also comes back positive, Bayes' theorem says the probability that you have cancer is 99%, or 0.99. As this example shows, applying the theorem repeatedly can yield a very precise answer.

But if the test is 90% reliable, which is still quite good, your chances of having cancer are still below 50% even after two positive results.
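
Repeated testing can be sketched as a loop in which each posterior becomes the next prior. This is only an illustration: it assumes the two tests err independently of each other, which the text does not state, and the function names are hypothetical.

```python
def update(prior: float, reliability: float) -> float:
    """One Bayesian update after a positive result from a test of given reliability."""
    true_positive = prior * reliability
    false_positive = (1 - prior) * (1 - reliability)
    return true_positive / (true_positive + false_positive)


def after_positives(prior: float, reliability: float, n_tests: int) -> float:
    """Belief after n_tests positive results in a row (tests assumed independent)."""
    belief = prior
    for _ in range(n_tests):
        belief = update(belief, reliability)  # posterior becomes the new prior
    return belief


for reliability in (0.99, 0.90):
    belief = after_positives(prior=0.01, reliability=reliability, n_tests=2)
    print(f"reliability {reliability:.2f}: {belief:.2f} after two positives")
```

With 99% reliability the belief climbs from 0.01 to 0.50 to 0.99. With 90% reliability it reaches only about 0.08 after one positive and 0.45 after two.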

Most people, including doctors, have a hard time grasping these odds, which helps explain why so many cancers and other diseases are overdiagnosed and overtreated. This example suggests that the Bayesians are right: the world would be a better place if more people, or at least more patients and doctors, adopted Bayesian reasoning.

On the other hand, Bayes' theorem is just a codification of common sense. As Yudkowsky writes toward the end of his tutorial: “By this point, Bayes' theorem may seem blatantly obvious or even tautological, rather than exciting and new. If so, this introduction has entirely succeeded in its purpose.”

Returning to the cancer example: Bayes' theorem says that the probability of having cancer given a positive test equals the probability of a true positive divided by the probability of all positives, true and false. In short, beware of false positives.
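
One way to see "true positives divided by all positives" is to count heads in an imaginary crowd. The sketch below assumes a hypothetical population of 10,000 people, a figure chosen only to make the counts come out as whole numbers.

```python
population = 10_000                    # hypothetical crowd size, not from the text

sick = population * 1 // 100           # 1% prevalence -> 100 people
healthy = population - sick            # 9,900 people

true_positives = sick * 99 // 100      # 99% of the sick test positive -> 99
false_positives = healthy * 1 // 100   # 1% of the healthy test positive -> 99

share_sick = true_positives / (true_positives + false_positives)
print(true_positives, false_positives, share_sick)  # 99 99 0.5
```

Among the 198 people who test positive, only 99 actually have cancer, which is the 50% figure again.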

Here is my generalization of that principle: the plausibility of your belief depends on how well your belief explains the available evidence. The more alternative explanations there are for the evidence, the less plausible your belief is. That, to me, is the essence of the theorem.

“Alternative explanations” can cover a lot of ground. Your evidence may be wrong: skewed by a malfunctioning instrument, faulty analysis, a bias toward the desired result, or even fraud. Or your evidence may be sound but explainable by many other beliefs or hypotheses.

In other words, there is nothing magical about Bayes' theorem. It boils down to this: your beliefs are only as credible as the evidence for them. If you have good evidence, the theorem yields good results. If your evidence is flimsy, the theorem will not help you. Garbage in, garbage out.

The trouble with the theorem can start with P (B), your initial estimate of the probability of your belief, often called the prior. In the example above, we had a nice, precise prior of 0.01. In the real world, experts disagree over how to diagnose and count cancers. Your prior is more likely to be a range than a single number.
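
To get a feel for how much the prior matters, the sketch below reruns the 99%-reliable test over a range of priors. The specific prior values are hypothetical, picked only to bracket the 0.01 used in the example.

```python
def posterior_from_test(prior: float, reliability: float = 0.99) -> float:
    """P(cancer | positive test) for a test that is equally reliable both ways."""
    true_positive = prior * reliability
    false_positive = (1 - prior) * (1 - reliability)
    return true_positive / (true_positive + false_positive)


# Hypothetical range of priors around the 0.01 used in the text.
priors = (0.001, 0.005, 0.01, 0.02, 0.05)
posteriors = [posterior_from_test(p) for p in priors]

for prior, post in zip(priors, posteriors):
    print(f"prior {prior:.3f} -> posterior {post:.2f}")
```

Under these assumptions the posterior swings from roughly 0.09 to roughly 0.84 across the range, so an uncertain prior means an uncertain answer.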

In many cases, estimating the prior is pure guesswork, which lets subjective factors creep into the calculation. You may be guessing the probability of something that, unlike cancer, may not exist at all, such as strings, the multiverse, inflation, or God. You can then cite dubious evidence in support of your dubious belief. In such cases, Bayes' theorem can promote pseudoscience and superstition as readily as common sense.

The theorem carries a moral: if you are not scrupulous about seeking alternative explanations for your evidence, the evidence will merely confirm what you already believe. Scientists often fail to heed this, which helps explain why so many scientific claims turn out to be wrong. Bayesians claim that their methods can help scientists overcome their tendency to favor facts that support their beliefs and so produce more reliable results, but I have my doubts.

As I mentioned above, some string and multiverse enthusiasts embrace Bayesian analysis. Why? Because they are tired of hearing that string theory and multiverse theory are unfalsifiable and therefore unscientific. Bayes' theorem lets them present these theories in a more favorable light. In these cases, the theorem does not counteract bias but panders to it.

As the science journalist Faye Flam wrote in The New York Times, Bayesian statistics "cannot save us from bad science." Bayes' theorem is an all-purpose tool that can serve any cause. The prominent Bayesian statistician Donald Rubin has worked as a consultant for tobacco companies in lawsuits over smoking-related illnesses.

And yet I admire Bayes' theorem. It reminds me of the theory of evolution, another idea that seems tautologically simple or dauntingly deep, depending on your point of view, and that has likewise inspired both nonsense and surprising discoveries.

Perhaps because my brain works the Bayesian way, I have begun to see allusions to the theorem everywhere. Scrolling through the collected works of Edgar Allan Poe on my Kindle, I came across this sentence from The Narrative of Arthur Gordon Pym of Nantucket: “In no affairs of mere prejudice, pro or con, do we deduce inferences with entire certainty, even from the most simple data.”

Keep that in mind before you sign up with the Bayesians.
