The book "Data mining. Retrieving information from Facebook, Twitter, LinkedIn, Instagram, GitHub »

Hello, habrozhiteli! In the bowels of popular social networks - Twitter, Facebook, LinkedIn and Instagram - the richest deposits of information are hidden. From this book, researchers, analysts, and developers will learn how to extract this unique data using Python code, a Jupyter Notebook, or Docker containers. First, you will get acquainted with the functionality of the most popular social networks (Twitter, Facebook, LinkedIn, Instagram), web pages, blogs and feeds, email and GitHub. Then start the data analysis using the example of Twitter. Read this book to:

Hello, habrozhiteli! In the bowels of popular social networks - Twitter, Facebook, LinkedIn and Instagram - the richest deposits of information are hidden. From this book, researchers, analysts, and developers will learn how to extract this unique data using Python code, a Jupyter Notebook, or Docker containers. First, you will get acquainted with the functionality of the most popular social networks (Twitter, Facebook, LinkedIn, Instagram), web pages, blogs and feeds, email and GitHub. Then start the data analysis using the example of Twitter. Read this book to:

- Learn about the modern landscape of social networks;

- Learn to use Docker to easily operate with the codes provided in the book;

- Learn how to adapt and deliver code to the GitHub open repository;

- Learn to analyze the data collected using the capabilities of Python 3;

- Master advanced analysis techniques such as TFIDF, cosine similarity, collocation analysis, click detection and pattern recognition;

- Learn how to create beautiful data visualizations using Python and JavaScript.

Excerpt. 4.3. A brief introduction to data clustering techniques

Now that we’ve got an idea of how to access the LinkedIn API, we’ll go over to a specific analysis and discuss clustering in detail [This type of analysis is also often called the approximate matching , fuzzy matching and / or deduplication method - ed .] - a machine-learning method without a teacher, considered the main one in any set of data analysis tools. The clustering algorithm takes a collection of elements and divides them into smaller collections (clusters) according to some criterion designed to compare the elements in the collection.

Clustering is a fundamental method of data analysis, so that you can get a better idea of it, this chapter includes footnotes and notes describing the mathematical apparatus that underlies it. It’s good if you try to understand these details, but in order to successfully use the clustering methods, you don’t need to understand all the subtleties, and, of course, you are not required to understand them the first time. You may need to think a little to digest some information, especially if you do not have mathematical training.

For example, if you are considering moving to another city, you can try to combine LinkedIn contacts by geographic region in order to better assess the economic opportunities available. We will return to this idea a bit later, but for now we will briefly discuss some of the nuances associated with clustering.

When implementing solutions to the problems of clustering data from LinkedIn or from other sources, you will repeatedly encounter at least two main topics (a discussion of the third is given in the sidebar “The role of dimensionality reduction in clustering” below).

Data normalization

Even when using a very good API, data is rarely provided in the format you need - often it takes more than a simple conversion to bring the data into a form suitable for analysis. For example, LinkedIn users allow certain liberties when describing their posts, so it is not always possible to get perfectly normal descriptions. One manager may choose the name "chief technical director", another may use the more ambiguous name "TRP", and the third may describe the same position in some other way. Below we will return to the problem of data normalization and implement a template for processing certain aspects of it in LinkedIn data.

Affinity definition

Having a set of well-normalized elements, you may wish to evaluate the similarity of any two of them, be it the names of positions or companies, descriptions of professional interests, geographical names or any other fields whose values can be represented in arbitrary text. To do this, you need to define a heuristic that evaluates the similarity of any two values. In some situations, the definition of similarity is quite obvious, but in others it can be fraught with some difficulties.

For example, comparing the total length of service of two people is realized by simple addition operations, but comparing broader professional characteristics, such as “leadership abilities,” in a completely automated way can be quite a challenge.

The role of dimensionality reduction in clustering

Normalization of data and determination of similarity are two main topics that you will encounter in clustering at an abstract level. But there is a third topic - dimensionality reduction, which becomes relevant as soon as the data scale ceases to be trivial. To group elements in a set using a similarity metric, ideally it is advisable to compare each element with every other element. In this case, in the worst case scenario, for a set of n elements, you will have to calculate the degree of similarity approximately n 2 times to compare each of n elements with n –1 other elements.

In computer science, this situation is called the quadratic complexity problem and is usually denoted as O (n 2 ) ; in conversations, it is usually called the "problem of quadratic growth of large O." O (n 2 ) problems become unsolvable for very large n values, and in most cases the term unsolvable means that you have to wait “too long” for the solution to be computed. “Too long” can be minutes, years or eras, depending on the nature of the task and its limitations.

A review of dimensionality reduction methods is beyond the scope of the current discussion, so we only note that a typical dimensionality reduction method involves using a function to organize “fairly similar” elements into a fixed number of groups so that elements in each group can be fully considered similar. Dimension reduction is often not only a science, but also an art, and is usually considered confidential information or a trade secret by organizations that successfully use it to gain a competitive advantage.

Clustering methods are the main part of the arsenal of tools of any data analysis specialist, because in almost any industry - from military intelligence to banking and landscape design - you may need to analyze a truly huge amount of non-standard relational data, and the growth in the number of job openings of specialists according to previous years are clear evidence of this.

As a rule, a company creates a database to collect any information, but not every field may contain values from some predefined set. This may be due to the incompletely thought out logic of the user interface of the application, the inability to pre-determine all acceptable values, or the need to give users the ability to enter any text as they wish. Be that as it may, the result is always the same: you get a large amount of non-standardized data. Even if in a certain field in total N different string values can be stored, some of them will actually mean the same concept. Duplicates can occur for various reasons - due to spelling errors, the use of abbreviations or abbreviations, as well as different character registers.

As mentioned above, this is a classic situation that arises when analyzing data from LinkedIn: users can enter their information in free text form, which inevitably leads to an increase in variations. For example, if you decide to research your professional network and determine where most of your contacts work, you will have to consider commonly used options for writing company names. Even the simplest company names can have several options that you will almost certainly come across (for example, “Google” - an abbreviated form of “Google, Inc.”), and you will have to consider all of these options in order to bring them to a standard form. When standardizing company names, a good starting point may be to normalize abbreviations in names such as LLC and Inc.

4.3.1. Normalization of data for analysis

As a necessary and useful introduction to the study of clustering algorithms, let us consider a few typical situations that you may encounter when solving the problem of normalizing data from LinkedIn. In this section, we implement a standard template for normalizing company and job titles. As a more advanced exercise, we will also briefly discuss the issue of disambiguation and geocoding of place names from a LinkedIn profile. (That is, we will try to convert place names from LinkedIn profiles, such as “Greater Nashville Area”, to coordinates that can be mapped.)

The main result of efforts to normalize data is the ability to take into account and analyze important features and use advanced analysis methods, such as clustering. In the case of data from LinkedIn, we will study features such as posts and geographic locations.

Normalization and company counting

Let's try to standardize the names of companies from your professional network. As described above, you can extract data from LinkedIn in two main ways: programmatically, using the LinkedIn API, or using the export mechanism of a professional network in the form of an address book that includes basic information such as name, position, company, and contact information.

Imagine that we already have a CSV file with contacts exported from LinkedIn, and now we can normalize and display the selected entities, as shown in Example 4.4.

As described in the comments inside the examples, you need to rename the CSV file with the contacts that you exported from LinkedIn, following the instructions in the section “Downloading the contact information file on LinkedIn” and copy it to a specific directory where the program code can find it.

Example 4.4 Simple normalization of abbreviations in company names

import os import csv from collections import Counter from operator import itemgetter from prettytable import PrettyTable # LinkedIn: https://www.linkedin.com/psettings/member-data. # , LinkedIn , # . # resources/ch03-linkedin/. CSV_FILE = os.path.join("resources", "ch03-linkedin", 'Connections.csv') # , # . # . transforms = [(', Inc.', ''), (', Inc', ''), (', LLC', ''), (', LLP', ''), (' LLC', ''), (' Inc.', ''), (' Inc', '')] companies = [c['Company'].strip() for c in contacts if c['Company'].strip() != ''] for i, _ in enumerate(companies): for transform in transforms: companies[i] = companies[i].replace(*transform) pt = PrettyTable(field_names=['Company', 'Freq']) pt.align = 'l' c = Counter(companies) [pt.add_row([company, freq]) for (company, freq) in sorted(c.items(), key=itemgetter(1), reverse=True) if freq > 1] print(pt)

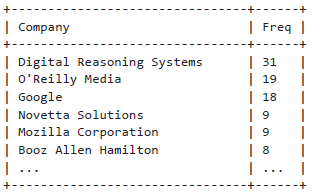

The following are the results of a simple frequency analysis:

Python supports the ability to pass arguments to functions by dereferencing a list and / or dictionary, which is sometimes very convenient, as shown in Example 4.4. For example, calling f (* args, ** kw) is equivalent to calling f (1, 7, x = 23), where args is defined as a list of arguments [1,7] and kw as a dictionary {'x': 23}. Other Python programming tips can be found in Appendix B.

Keep in mind that to handle more complex situations, for example, to normalize different names of the same company that have changed over time, such as O'Reilly Media, you will need to write more intricate code. In this case, the name of this company may be represented as O'Reilly & Associates, O'Reilly Media, O'Reilly, Inc. or just O'Reilly [If it seems to you that great difficulties await you, just imagine what kind of work the Dun & Bradstreet specialists had to do (http://bit.ly/1a1m4Om), which specializes in cataloging information and faced with the task of compiling and accompanied by a registry with company names in various languages of the world - ed. ].

About Authors

Matthew Russell (@ptwobrussell) is a leading specialist from Middle Tennessee. At work, he tries to be a leader, helps others become leaders, and creates highly effective teams to solve complex problems. Outside work, he reflects on reality, practices pronounced individualism and prepares for the zombie apocalypse and the rebellion of machines.

Mikhail Klassen, @MikhailKlassen is the chief data processing and analysis specialist at Paladin AI, a start-up company that creates adaptive learning technologies. He holds a PhD in astrophysics from McMaster University and a bachelor's degree in applied physics from Columbia University. Michael is interested in the problems of artificial intelligence and the use of tools for analyzing data for good purposes. When he does not work, he usually reads or travels.

»More details on the book can be found on the publisher’s website

» Contents

» Excerpt

25% off coupon for hawkers - Data Mining

Upon payment of the paper version of the book, an electronic book is sent by e-mail.

All Articles