The robot that will follow your smile. ROS. Part 3: accelerate, change the camera, fix the gait

Last time, working with OpenCV and ROS (Robot Operating System) and using all the power of the Raspberry Pi 3B+, we managed to follow a line, see smiles on people's faces and the sad muzzles of cats, and even drive up to meet them.

But along with the encouraging first steps in this area of robotics, I had to face a host of small tasks: slow raspberry pi, a small distance from the camera, which recognized the face, shifting to the side when driving, and others. How to solve them, including opening new small horizons in the development of ROS, will be described later.

1. By running all the nodes (scripts in ROS) from a previously assembled project, and starting working with Haar cascades, it quickly became clear that the performance of a single-board computer for such tasks is still weak. Despite the fact that the processor load was not peak and varied within 80%, the robot thought for a long time when he saw the face of a person or the face of a cat, which he was supposed to follow.

The saddest thing was that after the face or face went out of the field of view of the camera, the robot began to move, “thinking” that it was going to the object. In general, as in M.Yu. Lermontov:

"The long-time outcast wandered

In the desert of the world without a shelter ... ".

The first attempts to rectify the situation were as follows:

- in the camera launch node (on raspberry pi) the size of the captured image was reduced from 640x480 60fps to 320x240 15 fps.

This gave a certain increase in productivity, but the picture as a whole remained far from ideal.

Further, in the script for starting following a person’s face (follow_face.py) or a cat (follow_cat2.py), the conclusions on the screen of opencv itself were commented out. That is all cv.imshow. Now, when starting the trip, it was impossible to visually observe whether the robot sees a picture to which it is moving or not. Well, I had to sacrifice visualization.

Also, the info-outputs of ROS itself in the scripts were commented on: # rospy.loginfo.

This slightly reduced the load and increased the speed of work.

But ... it was necessary to come up with something else.

2. In addition, the robot was terribly shortsighted. Following our own past experience and recommendations in this area, a fish-eye camera was used:

This camera provides a fairly wide viewing angle and allows you to capture a significant part of the room.

However, as it turned out, her results in the field of facial recognition quality were not impressive ... Mckayla was not impressed ... Due to the same wide viewing angle that the camera uses, it simultaneously distorts the recognizable object itself. For this reason, the Haar cascades used do not work well. Lighting also significantly affects shading, and the quality drops even further.

3. And to top it off - the robot lacked angular speed for clear twists, and even on the thresholds (not the ones that need to be crossed) and the carpets, it was completely stuck. Here, pepper added the difference in power of the “same power” motors, which are almost never the same, even after leaving the same batch. Because of this, the robot drifted significantly to the left.

Well, with performance, the situation began to improve noticeably when the main advantage of ROS as a system came to the rescue, namely: the ability to distribute the load on different machines.

That is, it is enough to run motion nodes, cameras and the master node on raspberry itself, and other, heavily loaded nodes can be laid on the shoulders of more powerful systems.

For example, installing ROS on a laptop or deploying a virtual machine.

In this situation, the second option was chosen.

In order not to describe how to install Ubuntu, ROS on a virtual machine, we use the catch phrase of Winnie Jones " we need a lifeless volunteer ." We prepared it ... - a virtual machine with all the stuffing can be downloaded here .

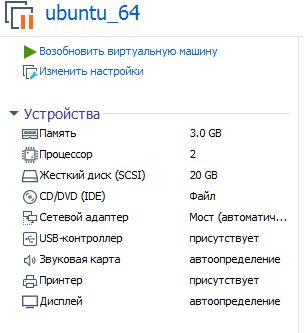

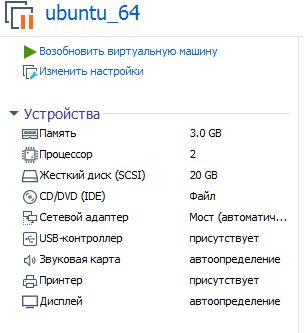

The parameters of the virtual machine that is proposed to be used are as follows:

It is understood that you are deploying (running) it on VMware workstation.

Password: raspberry

Now you can evaluate the performance when digesting Haar cascades on iron, more powerful than raspberry pi!

In order to start the ROS system, now divided into 2 parts, you need to specify the virtual machine where the master node works (and it starts on raspberry immediately after the system boots).

To do this, edit bashrc in a virtual machine:

In the lines at the very end of the file, specify ip addresses:

The first ip is the raspberry pi address, the second ip is the virtual machine address.

* The virtual machine and raspberry must be on the same local network.

On raspberry, the same bashrc will be different:

Two ip addresses on raspberry match.

* Do not forget to reboot after changing bashrc.

There are two terminals on raspberry.

In the 1st movement:

In the 2nd raspberry camera:

In total, 2 nodes will work on raspberry: one is waiting for a command to move, the second sends video from the camera to the network.

To connect to the master and control the robot from the keyboard, now in the virtual machine you need to run:

Similarly, instead of controlling from a virtual machine, you can run a node - following a person’s face:

Now a little dry statistics about what the results are with the fish-eye raspberry pi camera at different settings for the captured image from the camera.

320x240, 30fps:

Face of man. 80-100 cm. Lighting influences, practically does not see in a shadow. It is better to direct the lamp towards the face for improvement. Smile, with a smile, recognizes the face faster.

Cat face on sheet A4. 15-30 cm.

At 800x600 and 60fps - the face sees at a distance of 2-3 m.

At 1280x720 and 60fps - the face sees at a distance of 3-4 m. But there are false positives - it sees a wall clock as the face, etc.

A cat on A4 recognizes with a delay of 1-2 seconds, a distance of 1-1.5 m.

Virtual machine load 77-88%.

As you can see, when the window of the captured image from the camera is enlarged, the load on the virtual machine and the distance at which the robot sees the object increase. The load on raspberry is growing, but not significantly. Recognition speed compared to the operation of all nodes only on raspberry has increased significantly.

The statistics above showed that in order to improve vision, it is necessary to increase the resolution and fps of the camera, paying off the performance of the virtual machine. However, even at 1280x720 and 60fps, the distance to the object is not too large.

The solution to this problem came unexpectedly.

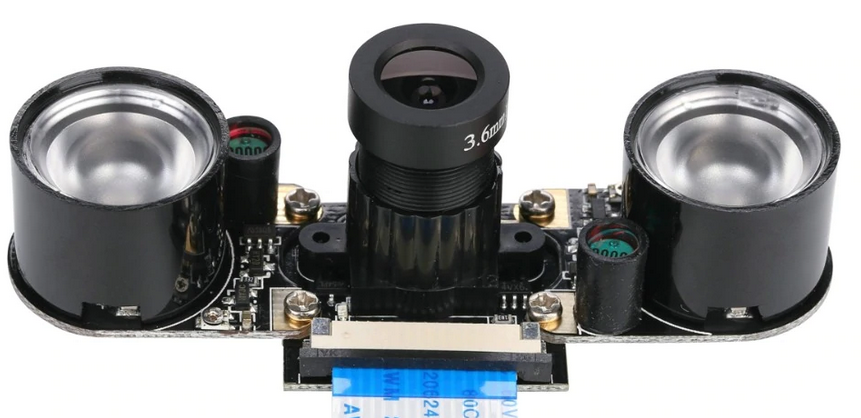

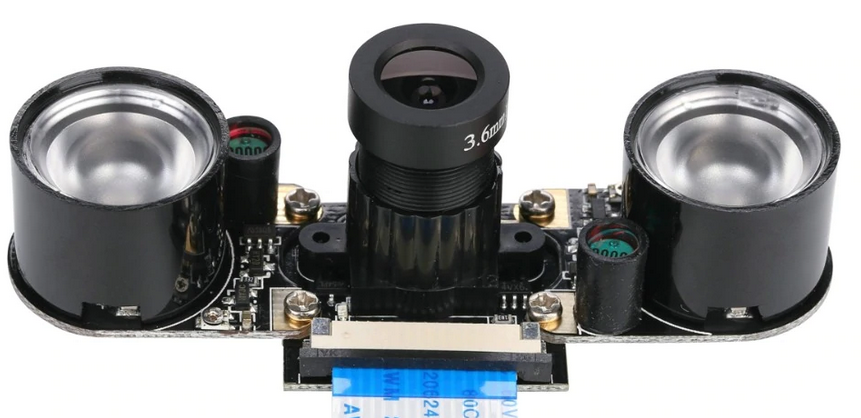

As you know, a whole line of cameras is available for raspberry. And for our case one of them was perfect, namely:

Her results are as follows:

With artificial lighting in the room sees a face at a distance of 7-8 m! It’s not even necessary to smile)

800x600 and 60fps - download. virtual machine - 70-80%, raspberry - 21% (there are only nodes of movement and start broadcasting the camera).

A cat on A4 recognizes with a delay of 1-2 seconds, a distance of 2-2.3 m.

As you can see, cats suffer again, which are determined at shorter distances. But here the reason is most likely in the settings of the Haar cascades, and not the camera.

* This camera, as a rule, comes complete with IR ears. It is not necessary to use them in daylight:

Thus, the camera for raspberry pi, different from fish-eye, showed the best results when working with objects, even in poor-quality artificial lighting. However, for increasing the distance from the camera, I had to pay with viewing angles and this must be taken into account.

As mentioned above, there are practically no twin engines, and the robot is doomed to drive either to the right or to the left, depending on how the motor turned out to be more powerful, all other things being equal.

Usually the difference in movement is evened out using encoders, since they are good in our project. About how it works there is a good movie .

However, here you can simplify everything and go for a little trick by correcting only the script for following a person’s face (follow_face.py) or a cat (follow_cat2.py) in a virtual machine.

We are interested in the following lines:

Depending on where and how much the robot blows, you need to adjust the values for z. In the code above, the values are selected when powering the 12V motor driver.

It is possible to increase the running characteristics of the robot (speed and maneuverability) by applying to the engine driver, which is used in the project (L9110s) instead of 8 V - 12V. But it’s better not to do this without installing a power stabilizer or at least a capacitor, because L9110s H-bridge chips burn fine, as it turned out. Maybe this makes them so cheap. Nevertheless, the robot drives 12 V rather friskly and frivolously.

Well, now that the robot has ceased to be carried away and his vision has improved, you can play hide and seek with him, for example. Or to force something to bring from the other end of the room. Of course, turning to face him, as to a person.

To be continued.

But along with these encouraging first steps in robotics came a host of smaller problems: a slow Raspberry Pi, a short distance at which the camera could recognize a face, drift to one side while driving, and others. How they were solved, along with a few new small horizons in ROS development, is described below.

1. After running all the nodes (scripts in ROS) from the previously assembled project and starting to work with Haar cascades, it quickly became clear that a single-board computer is still too weak for such tasks. Although the CPU load never peaked and hovered around 80%, the robot took a long time to react when it saw the face of a person or a cat that it was supposed to follow.

The saddest part was that after the person's or cat's face had already left the camera's field of view, the robot would start moving, “thinking” it was heading toward the object. Just like in M. Yu. Lermontov:

"The long-time outcast wandered

In the desert of the world without a shelter..."

The first attempts to rectify the situation were as follows:

- in the camera launch node (on the Raspberry Pi), the captured image size was reduced from 640x480 at 60 fps to 320x240 at 15 fps:

```bash
cd /home/pi/rosbots_catkin_ws/src/rosbots_driver/scripts/rosbots_driver
nano pi_camera_driver.py
```

This gave some performance gain, but overall the picture remained far from ideal.
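A quick back-of-the-envelope check of why the smaller capture size helps: the amount of pixel data the downstream nodes must handle each second scales with width × height × frame rate. The helper `pixel_rate` is a hypothetical name used only for this illustration.

```python
def pixel_rate(width: int, height: int, fps: int) -> int:
    """Pixels per second that the downstream nodes have to process."""
    return width * height * fps


before = pixel_rate(640, 480, 60)  # original camera setting
after = pixel_rate(320, 240, 15)   # reduced setting

print(f"before: {before:,} px/s")
print(f"after:  {after:,} px/s")
print(f"reduction: {before // after}x")
```

Halving each side cuts the data by 4x, and dropping from 60 to 15 fps gives another 4x. The real CPU saving is likely smaller, since not every part of the pipeline scales with pixel count.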

Next, in the scripts that follow a person's face (follow_face.py) or a cat (follow_cat2.py), OpenCV's own on-screen output was commented out, that is, every cv.imshow call. As a result, once the robot set off, it was no longer possible to see whether it actually saw the image it was moving toward. Well, visualization had to be sacrificed.

The ROS info output in the scripts was also commented out: # rospy.loginfo.

This slightly reduced the load and sped things up.

But... something else had to be found.

2. In addition, the robot was terribly short-sighted. Following our own past experience and recommendations in this area, a fish-eye camera was used.

This camera provides a fairly wide viewing angle and allows you to capture a significant part of the room.

However, as it turned out, its face recognition results were unimpressive... McKayla was not impressed... The same wide viewing angle also distorts the object being recognized, so the Haar cascades do not work well. Lighting matters a lot too: in shadow, the quality drops even further.

3. To top it off, the robot lacked the angular speed for crisp turns, and on door thresholds (not the metaphorical ones you need to cross) and carpets it got completely stuck. Adding some spice was the power difference between supposedly identical motors, which are almost never truly identical, even from the same batch. Because of this, the robot drifted noticeably to the left.

Performance improvement

Performance began to improve noticeably once the main advantage of ROS as a system came to the rescue: the ability to distribute the load across different machines.

That is, it is enough to run the motion and camera nodes and the master on the Raspberry Pi itself, and shift the other, heavy nodes onto the shoulders of more powerful systems.

For example, you can install ROS on a laptop or deploy a virtual machine.

Here, the second option was chosen.

To avoid describing how to install Ubuntu and ROS on a virtual machine, let's borrow Vinnie Jones's catchphrase "we need a lifeless volunteer". We prepared one: a virtual machine with everything preinstalled can be downloaded here.

The parameters of the proposed virtual machine are as follows:

It is assumed that you deploy (run) it in VMware Workstation.

Password: raspberry

Now you can see how Haar cascades perform on hardware more powerful than a Raspberry Pi!

To start the ROS system, now split into two parts, you need to tell the virtual machine where the master node runs (the master starts on the Raspberry Pi right after the system boots).

To do this, edit .bashrc on the virtual machine:

```bash
sudo nano ~/.bashrc
```

At the very end of the file, specify the IP addresses:

```bash
export ROS_MASTER_URI=http://192.168.1.120:11311
export ROS_HOSTNAME=192.168.1.114
```

The first IP is the Raspberry Pi's address, the second is the virtual machine's address.

* The virtual machine and the Raspberry Pi must be on the same local network.
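If the nodes cannot see each other, the first thing to check is that both machines really are on the same subnet. A small sketch using Python's standard `ipaddress` module; the /24 prefix (netmask 255.255.255.0, typical for home routers) is an assumption, so adjust it to your network.

```python
import ipaddress


def same_subnet(ip_a: str, ip_b: str, prefix: int = 24) -> bool:
    """True if both IPv4 addresses fall into the same network of the given prefix length."""
    net_a = ipaddress.ip_network(f"{ip_a}/{prefix}", strict=False)
    return ipaddress.ip_address(ip_b) in net_a


# Addresses from the .bashrc example: Raspberry Pi and virtual machine.
print(same_subnet("192.168.1.120", "192.168.1.114"))
print(same_subnet("192.168.1.120", "192.168.0.114"))
```

With VMware Workstation, this usually means bridged networking rather than NAT, since under NAT the virtual machine gets an address on a private virtual subnet.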

On the Raspberry Pi, the same lines in .bashrc look slightly different:

```bash
export ROS_MASTER_URI=http://192.168.1.120:11311
export ROS_HOSTNAME=192.168.1.120
```

On the Raspberry Pi, both IP addresses are the same.

* Don't forget to reboot after changing .bashrc.
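The rule behind both `.bashrc` variants fits in a tiny helper: `ROS_MASTER_URI` always points at the machine running the master (here the Raspberry Pi, on the default master port 11311), while `ROS_HOSTNAME` is each machine's own address. The function `ros_env_lines` is a hypothetical illustration that prints the lines to paste on each machine.

```python
def ros_env_lines(master_ip: str, own_ip: str, port: int = 11311) -> list:
    """Return the export lines for ~/.bashrc on one machine of the ROS network."""
    return [
        f"export ROS_MASTER_URI=http://{master_ip}:{port}",
        f"export ROS_HOSTNAME={own_ip}",
    ]


PI_IP = "192.168.1.120"  # runs the master, the motion node and the camera node
VM_IP = "192.168.1.114"  # runs the heavy OpenCV nodes

for name, own_ip in (("raspberry pi", PI_IP), ("virtual machine", VM_IP)):
    print(f"# {name}")
    print("\n".join(ros_env_lines(PI_IP, own_ip)))
```

On the Pi both addresses coincide, exactly as noted above.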

How to start a distributed ROS system?

Open two terminals on the Raspberry Pi.

In the first, start the motion node:

```bash
rosrun rosbots_driver part2_cmr.py
```

In the second, start the Raspberry Pi camera:

```bash
sudo modprobe bcm2835-v4l2
roslaunch usb_cam usb_cam-test.launch
```

In total, two nodes run on the Raspberry Pi: one waits for motion commands, the other streams video from the camera to the network.

To connect to the master and drive the robot from the keyboard, run the following on the virtual machine:

```bash
rosrun teleop_twist_keyboard teleop_twist_keyboard.py /cmd_vel:=/part2_cmr/cmd_vel
```
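The `/cmd_vel:=/part2_cmr/cmd_vel` argument is a ROS remapping: `teleop_twist_keyboard` publishes on `cmd_vel`, and the remap redirects those messages to the topic the motion node on the Pi subscribes to. A toy model of the idea; it ignores namespaces and private `~` names, which real ROS name resolution does handle, and the helpers `parse_remap` and `resolve` are hypothetical.

```python
def parse_remap(arg: str) -> tuple:
    """Split a 'from:=to' command-line remapping into its two topic names."""
    source, sep, target = arg.partition(":=")
    if not sep or not source or not target:
        raise ValueError(f"not a remapping argument: {arg!r}")
    return source, target


def resolve(topic: str, remaps: dict) -> str:
    """Return the topic a node actually uses after remapping."""
    return remaps.get(topic, topic)


remaps = dict([parse_remap("/cmd_vel:=/part2_cmr/cmd_vel")])
print(resolve("/cmd_vel", remaps))  # remapped to the motion node's topic
print(resolve("/rosout", remaps))   # names without a rule stay unchanged
```

Without the remap, teleop and the motion node would talk on different topics and the robot would not move.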

Similarly, instead of driving manually from the virtual machine, you can run the face-following node:

```bash
cd /home/pi/rosbots_setup_tools/rpi_setup
python follow_face.py
```

Now a few dry statistics on how the fish-eye Raspberry Pi camera performs at different capture settings.

320x240, 30 fps:

Human face: 80-100 cm. Lighting matters: in shadow it sees practically nothing, so it helps to point a lamp at the face. With a smile, the face is recognized faster.

Cat face printed on an A4 sheet: 15-30 cm.

At 800x600 and 60 fps, it sees a face at 2-3 m.

At 1280x720 and 60 fps, it sees a face at 3-4 m, but there are false positives: for example, it takes a wall clock for a face.

A cat on an A4 sheet is recognized with a delay of 1-2 seconds at a distance of 1-1.5 m.

Virtual machine load: 77-88%.

As you can see, enlarging the captured frame increases both the load on the virtual machine and the distance at which the robot sees the object. The load on the Raspberry Pi grows too, but not significantly. Compared with running all nodes on the Raspberry Pi alone, recognition has become much faster.

Treating myopia

The statistics above show that improving vision requires raising the camera's resolution and fps, paid for with virtual machine performance. However, even at 1280x720 and 60 fps, the detection distance is not very large.

The solution to this problem came unexpectedly.

As you know, a whole range of cameras is available for the Raspberry Pi, and one of them turned out to be perfect for our case.

Its results are as follows:

Under artificial room lighting, it sees a face at 7-8 m! You don't even need to smile :)

800x600 and 60 fps: virtual machine load 70-80%, Raspberry Pi load 21% (only the motion node and the camera stream run there).

A cat on an A4 sheet is recognized with a delay of 1-2 seconds at a distance of 2-2.3 m.

As you can see, cats lose out again: they are detected at shorter distances. But the reason is most likely the Haar cascade settings rather than the camera.

* This camera usually comes with infrared "ears" (IR illuminators). They are not needed in daylight.

Thus, the non-fish-eye Raspberry Pi camera gave better results with objects, even under poor artificial lighting. However, the longer detection distance came at the cost of a narrower viewing angle, and this must be taken into account.
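A rough pinhole-camera estimate shows why both resolution and viewing angle matter. For an image `w` pixels wide with horizontal field of view FOV, the focal length in pixels is `f = w / (2 * tan(FOV / 2))`, and a face of width `W` at distance `d` spans about `f * W / d` pixels. A Haar cascade can only fire once the face covers some minimum number of pixels, so the maximum detection distance is roughly `d_max = f * W / s_min`. The face width (0.15 m), the 24-pixel minimum and both FOV values below are assumptions for illustration, not measured values for these cameras, and the model ignores fish-eye distortion, which only makes things worse.

```python
import math


def focal_px(image_width_px: int, hfov_deg: float) -> float:
    """Pinhole focal length in pixels for a given image width and horizontal FOV."""
    return image_width_px / (2 * math.tan(math.radians(hfov_deg) / 2))


def max_detect_distance(image_width_px: int, hfov_deg: float,
                        face_width_m: float = 0.15, min_face_px: int = 24) -> float:
    """Distance at which a face shrinks to the detector's minimum size (pinhole model)."""
    return focal_px(image_width_px, hfov_deg) * face_width_m / min_face_px


# Hypothetical lenses: a wide-angle FOV vs a narrower standard one (values assumed).
for hfov in (120.0, 60.0):
    for width in (320, 800, 1280):
        d = max_detect_distance(width, hfov)
        print(f"FOV {hfov:5.1f} deg, width {width:4d} px -> about {d:.1f} m")
```

Within this model, doubling the image width doubles the distance, and narrowing the FOV stretches it further, which matches the trend measured above. The absolute numbers should not be read as predictions for these particular cameras.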

Now about improving the chassis and the robot drifting to the side

As mentioned above, truly identical motors practically do not exist, so, all other things being equal, the robot is doomed to veer either right or left, depending on which motor turns out to be more powerful.

Usually this difference is evened out with encoders, which, fortunately, our project has. There is a good video about how this works.

However, you can keep things simple and use a little trick: adjust only the script that follows a person's face (follow_face.py) or a cat (follow_cat2.py) on the virtual machine.

We are interested in the following lines:

```python
    # (end of the preceding if-branch, whose condition is not shown)
    # -0.013 because the right wheel is faster
    twist_msg.linear.x = 0.1
    twist_msg.angular.z = -0.013
elif self.face_found["x"] < 0.0:
    # To the left
    cur_dir = "left"
    twist_msg.linear.x = 0.1
    twist_msg.angular.z = 0.016
else:
    # To the right
    cur_dir = "right"
    twist_msg.linear.x = 0.1
    twist_msg.angular.z = -0.016
```

Depending on which way and how strongly the robot drifts, adjust the angular.z values. The values in the code above were tuned with the motor driver powered at 12 V.
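Instead of pure trial and error, a first guess for the straight-line trim can be estimated from a test run, assuming the driver turns `angular.z` into a roughly proportional wheel-speed difference so that the robot follows a gentle arc. Drive straight with `angular.z = 0` for a distance L and measure the sideways drift y. For a small arc, y ≈ κL²/2, so the curvature is κ ≈ 2y/L², and the yaw rate to cancel at linear speed v is κ·v, with the opposite sign. The function name and the measurement values below are made up for illustration; treat the result only as a starting point for tuning.

```python
def straight_line_trim(linear_x: float, distance_m: float, drift_left_m: float) -> float:
    """Estimate the angular.z trim that cancels a constant sideways drift.

    drift_left_m > 0 means the robot ended up left of the straight line.
    In ROS, positive angular.z turns counterclockwise (to the left), so the
    trim gets the opposite sign of the drift.
    """
    curvature = 2.0 * drift_left_m / distance_m ** 2  # small-arc approximation y ~ k*L^2/2
    return -curvature * linear_x


# Made-up test run: 2 m at linear.x = 0.1, ended 0.3 m to the left.
trim = straight_line_trim(linear_x=0.1, distance_m=2.0, drift_left_m=0.3)
print(f"angular.z trim: {trim:.3f}")
```

A leftward drift gives a negative trim, the same sign as the -0.013 used above for a faster right wheel. Motor dead zones and battery voltage make the real mapping nonlinear, so a few corrective runs are still needed.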

You can improve the robot's driving characteristics (speed and maneuverability) by supplying the motor driver used in the project (L9110S) with 12 V instead of 8 V. But it is better not to do this without a voltage regulator or at least a capacitor, because, as it turned out, L9110S H-bridge chips burn out quite easily. Maybe that is why they are so cheap. Nevertheless, at 12 V the robot drives rather briskly and playfully.

Instead of a conclusion

Well, now that the robot no longer drifts off course and its vision has improved, you can, for example, play hide and seek with it, or have it bring something from the other end of the room. Of course, you have to turn your face toward it, as you would toward a person.

To be continued.
