19 useful features of the .htaccess file

Some of these you probably did not know about. We have collected ways to use .htaccess to improve a site. SEO specialists mostly use the file to configure 301 redirects correctly, but its capabilities go well beyond that: security, optimization, and display settings can all be handled in .htaccess to keep the site working correctly.

The .htaccess file (short for "hypertext access") lets you override, directory by directory, the settings of Apache, the most popular web server, and of compatible servers. Below are ways to use .htaccess for different purposes, with code examples.

Why .htaccess is needed and where to look for it

The file allows more flexible server configuration for SEO tasks. If you have access to the main server configuration file, httpd.conf or apache2.conf (the name depends on the operating system), it is better to set rules there than in .htaccess: the main configuration is read once when the server starts, whereas .htaccess files are read on every request, which adds load. Very often, however, there is no such access, for example on shared hosting. Then the necessary settings have to go into .htaccess.

What .htaccess can do for site optimization:

- Setting up redirects for SEO.

- Ensuring the security of the resource as a whole and of individual sections.

- Setting the correct display of the site.

- Optimizing page load speed.

Where to look and how to edit

If the .htaccess file is in the root folder, its directives apply to the entire site, but you can place the file in any directory. Its directives then apply only to that directory and its subdirectories. A site can therefore have several .htaccess files. When they conflict, the file in the more deeply nested directory takes priority over the one in the root.
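As a minimal sketch of how this plays out, assume a hypothetical /downloads/ directory that should show a file listing while the rest of the site does not. The sketch also assumes that the host's AllowOverride setting permits Options and DirectoryIndex in .htaccess:

```apache
# File: /.htaccess (site root), applies to the whole site
Options -Indexes
DirectoryIndex index.php index.html

# File: /downloads/.htaccess, applies to /downloads/ and everything below it
# Only the setting that differs is restated; DirectoryIndex is inherited from the root file
Options +Indexes
```

The two files are shown in one listing for brevity; on the server they are separate .htaccess files.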

.htaccess is the generally accepted and most popular name, but it is not mandatory: the name is set in httpd.conf by the AccessFileName directive. Despite the unusual name, you can create and edit the file in any text editor.

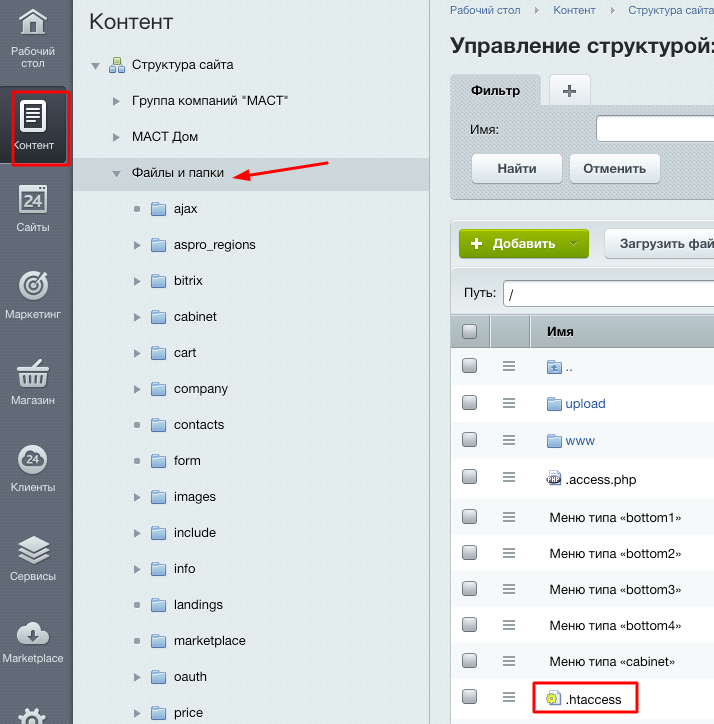

Some CMSs let you edit the file through the admin panel. In Bitrix, it is easy to find in the Content - Files and Folders section.

In WordPress, you can edit .htaccess using the Yoast SEO and All in One SEO Pack plugin modules.

If the .htaccess file is missing, create it in a text editor and place it in the root folder of the site or in the desired directory (you will need access to the hosting control panel or FTP access).

.htaccess syntax

The file syntax is simple: each directive (command) starts on a new line, and a line that begins with # is a comment that the server ignores. Changes take effect immediately; no server restart is required.

Rules can use regular expressions. To read them, you need to understand the special characters and variables. Here are the most commonly used.

The main special characters:

- ^ - beginning of line;

- $ - end of line;

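- . - any single character;

- * - zero or more repetitions of the preceding character or group (so .* means any number of any characters);

- ? - makes the preceding character or group optional (zero or one occurrence);

- [0-9] - a character class: any one character from the set, here a digit from 0 to 9;

- | - “or”: either one alternative or the other is matched;

- () - groups characters and captures the matched text for reuse.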

The main variables are:

- %{HTTP_USER_AGENT} - the User-Agent header sent by the user's browser;

- %{REMOTE_ADDR} - the user's IP address;

- %{REQUEST_URI} - the requested URI;

- %{QUERY_STRING} - the query parameters after the ? sign.
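To see how special characters and variables combine in practice, here is an illustrative rule. The /news/ URLs and the article.php script are hypothetical names chosen for the example:

```apache
RewriteEngine On
# Apply the rule only when the request has no query string
RewriteCond %{QUERY_STRING} ^$
# ^ and $ anchor the whole path; [0-9]+ is one or more digits; /? makes the trailing slash optional
# The parentheses capture the digits, which are then available as $1
RewriteRule ^news/([0-9]+)/?$ /article.php?id=$1 [L]
```

With this sketch, a request for /news/42/ would be served internally by /article.php?id=42 without changing the address in the browser.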

For those who want to dive deeper, see the full official documentation on .htaccess or a good Russian-language reference. Below we go through the main features of the file that help optimize a site.

Set up redirects for SEO

As we mentioned, this is the most popular use of .htaccess. Before you configure any type of redirect, make sure that it is really necessary. For example, some CMSs configure a redirect to URLs with a trailing slash by default. We have covered redirect settings for SEO separately on the blog.

When setting up 301 redirects, remember two rules:

- Avoid multiple consecutive redirects - they increase the load on the server and reduce the speed of the site.

- Order redirects from specific to general. For example, first the redirect from one page to another, then the general redirect to URLs with a trailing slash. This rule does not hold in 100% of cases, so you may need to experiment with the placement of directives.
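A rough illustration of both rules, using mod_rewrite for the specific and the general redirect so that their order in the file is what decides the outcome. The page names /old-page/ and /new-page/ are placeholders:

```apache
RewriteEngine On

# Specific rule first: one old page goes straight to its new address
RewriteRule ^old-page/?$ /new-page/ [R=301,L]

# General rule second: add a trailing slash to anything that is not a real file
RewriteCond %{REQUEST_FILENAME} !-f
RewriteRule ^(.*[^/])$ /$1/ [R=301,L]
```

In this order a request for /old-page is answered with a single redirect to /new-page/. With the rules swapped, the same request would first be sent to /old-page/ and only then to /new-page/, which is exactly the chain the first rule warns against.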

1. Set up page 301 redirects

This will be required in the following cases:

- the structure of the site has changed and the level of nesting has changed;

- the page has ceased to exist, but it is necessary to preserve its incoming traffic (for example, in the absence of goods, they usually redirect to the product category);

- the URL has changed, which is highly undesirable but does happen.

Simply deleting a page is a bad idea: rather than returning a 404 error to the robot, redirect it to another URL. That way there is a chance to keep the site's positions in the SERP and its targeted traffic. You can configure a 301 redirect from one page to another with the simple Redirect directive:

Redirect 301 /page1/ https://mysite.com/page2/

- /page1/ - the address of the old page from the site root, without protocol and domain. For example, /catalog/ofisnaya-mebel/kompjuternye-stoly/.

- https://mysite.com/page2/ - the full address of the target page, including protocol and domain. For example, https://dom-mebeli.com/ofisnaya-mebel/stoly-v-ofis/.
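When a whole section moves, listing every page by hand gets tedious. One possible approach is the RedirectMatch directive from the same mod_alias module, which accepts a regular expression. The /shop/ and /catalog/ paths below are assumptions for illustration:

```apache
# Hypothetical case: every page under /shop/ now lives under /catalog/
# (.*) captures the rest of the path and $1 re-inserts it in the new address
RedirectMatch 301 ^/shop/(.*)$ https://mysite.com/catalog/$1
```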

2. Get rid of duplicates

Each page of the site should be accessible at only one address. To do this, the following should be configured:

- redirect to pages with a slash at the end of the URL or vice versa;

- the main mirror, that is, the primary site address for search.

This can be done with the mod_rewrite module. It uses special commands, the complex redirection directives. The first command always enables URL rewriting:

RewriteEngine On

Redirect to a trailing slash or vice versa

Whether to redirect to URLs with or without a trailing slash has to be decided case by case. If the site already has a history in search, check which kind of URL is more numerous in the index. For new sites, a redirect to the trailing slash is the usual choice. Checking whether a redirect is already configured is simple: remove or add a slash at the end of a URL. If the page loads again at the modified address, you have duplicates and need to configure a redirect. If the URL is corrected automatically, everything is in order. It is worth checking several levels of nesting.

Code for a 301 redirect to a trailing slash:

    RewriteCond %{REQUEST_URI} /+[^\.]+$
    RewriteRule ^(.+[^/])$ %{REQUEST_URI}/ [R=301,L]

Code for a 301 redirect to URLs without a trailing slash:

    RewriteCond %{REQUEST_URI} !\?
    RewriteCond %{REQUEST_URI} !\&
    RewriteCond %{REQUEST_URI} !\=
    RewriteCond %{REQUEST_URI} !\.
    RewriteCond %{REQUEST_URI} ![^\/]$
    RewriteRule ^(.*)\/$ /$1 [R=301,L]

3. Set up the main mirror

First decide which address will be the main one for search. An SSL certificate has long been a must-have. Install it and add the rule to .htaccess. Remember to specify the HTTPS address in robots.txt as well.

HTTPS redirect

    RewriteEngine On
    RewriteCond %{HTTPS} !on
    # R=301 makes the redirect permanent; L stops processing further rules
    RewriteRule (.*) https://%{HTTP_HOST}%{REQUEST_URI} [R=301,L]

There are several ways to determine whether or not the main mirror will be with “www”:

- add a site to Yandex.Webmaster in two versions, the console displays information about which URL the search engine considers the main mirror;

- analyze the search results and see which version of the site has more pages in the index;

- for a new resource it does not matter whether the address has “www” or not; the choice is yours.

Once the choice is made, use one of the two code options.

Redirect from www to non-www

    RewriteEngine On
    RewriteCond %{HTTP_HOST} ^www\.(.*)$ [NC]
    RewriteRule ^(.*)$ http://%1/$1 [R=301,L]

Redirect from non-www to www

    RewriteEngine On
    RewriteCond %{HTTP_HOST} !^www\..* [NC]
    RewriteRule ^(.*) http://www.%{HTTP_HOST}/$1 [R=301,L]
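If the site uses both HTTPS and a non-www main mirror, separate rules can send a visitor through two redirects in a row. One way to avoid the chain, sketched here under the assumption that mysite.com without www is the chosen main mirror, is to test both conditions and redirect once:

```apache
RewriteEngine On
# Redirect if the request is not over HTTPS ...
RewriteCond %{HTTPS} !=on [OR]
# ... or if the host name starts with www.
RewriteCond %{HTTP_HOST} ^www\. [NC]
# Either way, go straight to the final address in one hop
RewriteRule ^ https://mysite.com%{REQUEST_URI} [R=301,L]
```

The domain is hard-coded on purpose: it keeps the target unambiguous whichever variant of the address the visitor typed. On hosting that terminates SSL on a proxy, %{HTTPS} may not reflect the original protocol, so test the rule before relying on it.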

4. Redirect from one domain to another

The most obvious reason for this redirect is to send robots and users to the new address when the site moves to a new domain. SEO specialists also use it to manipulate the link profile, but dropped domains and PBNs are gray-hat promotion techniques that we will not cover here.

Use one of the code options:

    RewriteEngine On
    RewriteRule ^(.*)$ http://www.mysite2.com/$1 [R=301,L]

or

    RewriteEngine On
    RewriteCond %{HTTP_HOST} ^www\.mysite1\.ru$ [NC]
    RewriteRule ^(.*)$ http://www.mysite2.ru/$1 [R=301,L]

Do not forget to change “mysite1” and “mysite2” in the code to the old and new domains, respectively.


Ensure site security

The .htaccess file offers many ways to protect the site from malicious scripts, content theft, and DoS attacks. You can also restrict access to specific files and sections.

5. Prohibit hotlinking of images from your site

Third-party sites sometimes use your content, including images, by loading it straight from your hosting via hotlinks (direct links to files). This not only violates copyright but also puts unnecessary extra load on your server.

Stop the thieves with this code:

    Options +FollowSymLinks
    RewriteEngine On
    # Let through requests with an empty referer and requests from your own site
    RewriteCond %{HTTP_REFERER} !^$
    RewriteCond %{HTTP_REFERER} !^https://(www\.)?mysite\.com/ [NC]
    # Do not rewrite the replacement image itself, to avoid a redirect loop
    RewriteCond %{REQUEST_URI} !/img/goaway\.gif$
    RewriteRule \.(gif|jpg|png)$ https://mysite.com/img/goaway.gif [NC,L]

Replace “mysite.com” with the address of your site and place an image with any message saying that stealing other people's pictures is not good at https://mysite.com/img/goaway.gif. This image will be shown on the third-party resource.

6. Deny access

Whole groups of unwanted guests from specific IP addresses, subnets, and malicious bots can be denied access to your resource using the following directives in .htaccess.

For unwanted User Agents (bots)

    # Mark unwanted user agents with the bad_bot environment variable
    SetEnvIfNoCase User-Agent "^FrontPage" bad_bot
    SetEnvIfNoCase User-Agent "^Java.*" bad_bot
    SetEnvIfNoCase User-Agent "^Microsoft.URL" bad_bot
    SetEnvIfNoCase User-Agent "^MSFrontPage" bad_bot
    SetEnvIfNoCase User-Agent "^Offline.Explorer" bad_bot
    SetEnvIfNoCase User-Agent "^[Ww]eb[Bb]andit" bad_bot
    SetEnvIfNoCase User-Agent "^Zeus" bad_bot
    <Limit GET POST HEAD>
        Order Allow,Deny
        Allow from all
        Deny from env=bad_bot
    </Limit>

The list of user agents can be extended, shortened, or replaced with your own. Lists of good and bad bots are available online.

A special case of such a ban is a ban on search robots. If for some reason a rule in robots.txt is not enough, you can deny access to Googlebot, for example, with the following directives:

    RewriteEngine On
    RewriteCond %{HTTP_USER_AGENT} Googlebot
    RewriteRule .* - [F]

For all but the specified IP

    ErrorDocument 403 https://mysite.com
    Order Deny,Allow
    Deny from all
    Allow from IP1
    Allow from IP2
    # ...

Do not forget to replace "https://mysite.com" with the address of your site and enter IP addresses instead of IP1, IP2, etc.

For specific IP addresses

    # Order Allow,Deny is required here: with the default order, Allow would override Deny
    Order Allow,Deny
    Allow from all
    Deny from IP1
    Deny from IP2
    # ...

For a subnet

    Order Allow,Deny
    Allow from all
    Deny from 192.168.0.0/24

Enter the subnet with its mask after “Deny from”.
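The Order, Allow and Deny directives above come from Apache 2.2; Apache 2.4 keeps them only through the mod_access_compat module. On a 2.4 server, a roughly equivalent block can be sketched with Require. The address 203.0.113.7 is a documentation-range placeholder, not a real offender:

```apache
# Apache 2.4 syntax (mod_authz_core and mod_authz_host)
<RequireAll>
    # Everyone is allowed ...
    Require all granted
    # ... except this subnet and this single address
    Require not ip 192.168.0.0/24
    Require not ip 203.0.113.7
</RequireAll>
```

It is safer not to mix the old and new syntax in one file, since the combined result can be hard to predict.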

Spam IP addresses can be found in the server logs or with analytics services. The WordPress admin dashboard also shows the IP addresses of commenters.

To a specific file

    <Files "myfile.html">
        Order Allow,Deny
        Deny from all
    </Files>

Enter the name of your file instead of "myfile.html" in the example. The user will be shown a 403 error, "access denied".

It is also worth restricting access to the .htaccess file itself for security reasons, and we recommend setting the file permissions to 444 once all the rules are in place.

    <Files ".htaccess">
        Order Allow,Deny
        Deny from all
    </Files>

For WordPress sites, it is important to restrict access to the wp-config.php file, as it contains the database credentials:

    <Files "wp-config.php">
        Order Allow,Deny
        Deny from all
    </Files>

For users coming from a specific site

You can block visitors from unwanted resources (for example, with adult or shocking content).

    <IfModule mod_rewrite.c>
        RewriteEngine On
        RewriteCond %{HTTP_REFERER} bad-site\.com [NC,OR]
        # Second placeholder domain; the last condition must not carry the OR flag
        RewriteCond %{HTTP_REFERER} another-bad-site\.com [NC]
        RewriteRule .* - [F]
    </IfModule>

7. Protect access to a specific file or folder

To get started, create a .htpasswd file, write logins and passwords in it in user:password format, and place it in the root of the site or, better, in a directory outside the web root if your hosting allows it. For security, store the passwords hashed rather than in plain text. This can be done with online record generators or with the htpasswd utility that ships with Apache. The next step is to add directives for the protected files or directories to .htaccess:

Password-protect a file

    <Files "secure.php">
        AuthType Basic
        AuthName "Protected file"
        AuthUserFile /pub/home/.htpasswd
        Require valid-user
    </Files>

Password-protect a folder

    AuthType Basic
    AuthName "This directory is protected"
    AuthUserFile /pub/home/.htpasswd
    AuthGroupFile /dev/null
    Require valid-user

Instead of “/pub/home/.htpasswd”, specify the absolute path to your .htpasswd file on the server. We recommend checking access after adding the code.

8. Prohibit the execution of malicious scripts

The following group of directives protects the site from so-called script injection, a common tool in hacker attacks:

    Options +FollowSymLinks
    RewriteEngine On
    RewriteCond %{QUERY_STRING} (\<|%3C).*script.*(\>|%3E) [NC,OR]
    RewriteCond %{QUERY_STRING} GLOBALS(=|\[|\%[0-9A-Z]{0,2}) [OR]
    RewriteCond %{QUERY_STRING} _REQUEST(=|\[|\%[0-9A-Z]{0,2})
    RewriteRule ^(.*)$ index.php [F,L]

Every request that matches these patterns receives the 403 "access denied" error.

9. Protect the site from DoS attacks

One method of protection is to limit the maximum allowed size of the request body (in older Apache versions there is no limit by default).

To do this, set the maximum size of uploaded data in bytes in .htaccess:

LimitRequestBody 10240000

In the example, the limit is about 10 MB. If you want to prohibit file uploads, specify a value below 1 MB (1048576 bytes).

You can also explore the LimitRequestFields, LimitRequestFieldSize and LimitRequestLine directives in the official documentation; note that they are set in the main server configuration rather than in .htaccess.

Customize the display of the site

Let's see what can be done with the display of the entire resource or its sections in users' browsers using .htaccess.

10. Replace the index file

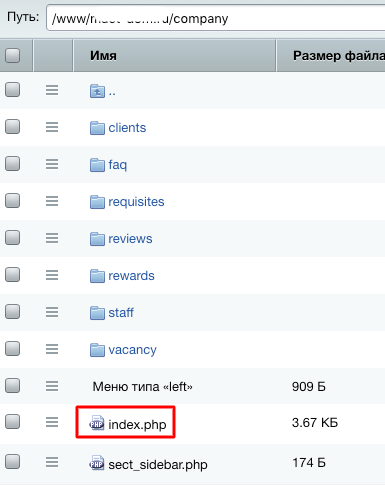

An index file is the one that opens by default when accessing a specific directory. Usually they are called: index.html, index.htm, index.php, index.phtml, index.shtml, default.htm, default.html.

Here's what it looks like in the directory structure:

To replace this file with any other, place the new file in the directory and add this command to .htaccess:

DirectoryIndex hello.html

Instead of “hello.html”, enter the name of the desired file.

You can list several files; the server tries them in the given order and serves the first one that exists:

DirectoryIndex hello.html hello.php hello.pl

11. Add or remove html at the end of the URL

Keeping or removing the file extension in the URL is a matter for every optimizer. There are no reliable studies of the effect of extensions in addresses on resource ranking, but each webmaster has an opinion on this.

To add .html:

    RewriteCond %{REQUEST_URI} (.*/[^/.]+)($|\?)
    RewriteRule .* %1.html [R=301,L]
    RewriteRule ^(.*)/$ /$1.html [R=301,L]

To remove .html:

    RewriteBase /
    RewriteRule (.*)\.html$ $1 [R=301,L]

The same directives can add / remove the php extension.

12. Set up the encoding

To avoid errors when the browser displays the site, you need to tell it which encoding the site uses. The most popular encodings:

- UTF-8 - universal

- Windows-1251 - Cyrillic

- Windows-1250 - for Central Europe

- Windows-1252 - for Western Europe

- KOI8-R - Cyrillic (KOI8-R)

Most often they use UTF-8 and Windows-1251.

If the encoding is not specified in a meta tag on each page, you can specify it via .htaccess.

An example of a directive that sets UTF-8 as the default encoding for served pages:

AddDefaultCharset UTF-8

The following directive tells the server that the files stored on it are in Windows-1251 (it relies on a charset conversion module such as mod_charset_lite being enabled):

CharsetSourceEnc WINDOWS-1251

The examples show different encodings, but within one site the encodings in these directives must match.

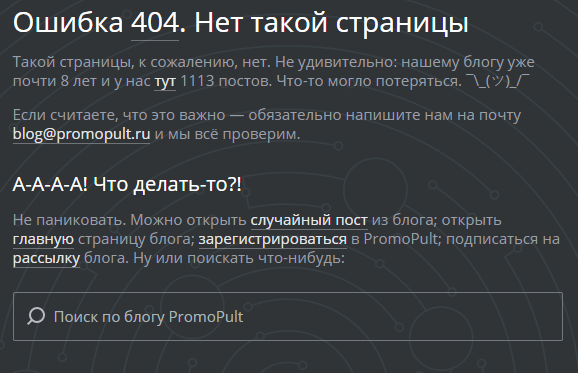

13. Create custom error pages

Using rules in .htaccess, you can configure the display of specially created pages for the most popular errors, for example:

    ErrorDocument 401 /error/401.php
    ErrorDocument 403 /error/403.php
    ErrorDocument 404 /error/404.php
    ErrorDocument 500 /error/500.php

Before adding the directives, create the error folder in the root of the site and place the corresponding error page files there.

Why is this needed? For example, so that a user who lands on a 404 page is not lost but can go on to other sections of the site.

Optimize the site

Website loading speed is one of the ranking factors in search engines. Directives in .htaccess are one way to improve it.

14. Compress site components using mod_gzip or mod_deflate

File compression speeds up site loading on the one hand, but puts more load on the server on the other. In .htaccess, you can enable compression with one of two modules, mod_gzip or mod_deflate. They are almost identical in compression quality. Note that mod_gzip is a third-party module for Apache 1.3, while mod_deflate ships with Apache 2.x.

The mod_gzip syntax is more flexible and can work with masks:

    <IfModule mod_gzip.c>
        mod_gzip_on Yes
        mod_gzip_dechunk Yes
        mod_gzip_item_include file \.(html?|txt|css|js|php|pl)$
        mod_gzip_item_include handler ^cgi-script$
        mod_gzip_item_include mime ^text/.*
        mod_gzip_item_include mime ^application/x-javascript.*
        mod_gzip_item_exclude mime ^image/.*
        mod_gzip_item_exclude rspheader ^Content-Encoding:.*gzip.*
    </IfModule>

In mod_deflate, you list the types of files you want to compress:

    <IfModule mod_deflate.c>
        AddOutputFilterByType DEFLATE text/html text/plain text/xml application/xml application/xhtml+xml text/css text/javascript application/javascript application/x-javascript
    </IfModule>

15. Strengthen caching

This set of directives helps the site load faster for returning visitors. The browser does not download images and scripts from the server again but uses the copies in its cache.

    FileETag MTime Size
    <IfModule mod_expires.c>
        <FilesMatch "\.(jpg|gif|png|css|js)$">
            ExpiresActive On
            ExpiresDefault "access plus 1 week"
        </FilesMatch>
    </IfModule>

In the example, the cache lifetime is limited to one week (“1 week”); you can specify your own period in months (month), years (year), hours (hours), and so on.

Another code option:

    <IfModule mod_expires.c>
        ExpiresActive On
        ExpiresByType application/javascript "access plus 7 days"
        ExpiresByType text/javascript "access plus 7 days"
        ExpiresByType text/css "access plus 7 days"
        ExpiresByType image/gif "access plus 7 days"
        ExpiresByType image/jpeg "access plus 7 days"
        ExpiresByType image/png "access plus 7 days"
    </IfModule>

The following MIME types can be cached this way:

- image/x-icon;

- image/jpeg;

- image/png;

- image/gif;

- application/x-shockwave-flash;

- text/css;

- text/javascript;

- application/javascript;

- application/x-javascript;

- text/html;

- application/xhtml+xml.
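Where mod_expires is not available, a similar one-week lifetime can usually be expressed with a Cache-Control header instead. This is a sketch that assumes the mod_headers module is enabled on the hosting:

```apache
<IfModule mod_headers.c>
    # Same file types as in the mod_expires example
    <FilesMatch "\.(jpg|gif|png|css|js)$">
        # 604800 seconds = 7 days
        Header set Cache-Control "max-age=604800, public"
    </FilesMatch>
</IfModule>
```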

A few more possibilities

16. Manage php settings

Programmers use these settings when there is no access to the php.ini file. We will focus on the php_value directives that control the amount of data uploaded to the site and the script execution time, because they directly affect performance.

    <IfModule mod_php.c>
        php_value upload_max_filesize 20M
        php_value post_max_size 125M
        php_value max_execution_time 60
    </IfModule>

"upload_max_filesize" sets the maximum size of an uploaded file in megabytes, "post_max_size" sets the maximum size of the whole POST request (it should be no smaller than upload_max_filesize, otherwise large uploads are rejected), and "max_execution_time" sets the time limit in seconds for script execution. The module name in <IfModule> depends on the PHP version (for example, mod_php7.c for PHP 7), and php_value works only when PHP runs as an Apache module.

17. Fighting spam comments on WordPress

In order to block spammers from accessing the wp-comments-post.php file, add these directives to .htaccess:

    RewriteEngine On
    RewriteCond %{REQUEST_METHOD} POST
    RewriteCond %{REQUEST_URI} .wp-comments-post\.php*
    RewriteCond %{HTTP_REFERER} !.*mysite.com.* [OR]
    RewriteCond %{HTTP_USER_AGENT} ^$
    # Send the spammer's request back to its own IP address
    RewriteRule (.*) http://%{REMOTE_ADDR}/ [R=301,L]

Instead of "mysite.com" enter the address of your site.

18. Set the server administrator's e-mail

This code in .htaccess is intended to set the administrator's default e-mail address. With ServerSignature EMail, the server adds an administrator contact link to the pages it generates itself, such as error messages.

    ServerSignature EMail
    SetEnv SERVER_ADMIN admin@mysite.com

19. Warn about the unavailability of the site

When the site is unavailable for technical reasons, you can redirect users to a page saying when it will be back. It is better to avoid such interruptions, but in force majeure circumstances add the following directives to .htaccess:

    RewriteEngine On
    RewriteCond %{REQUEST_URI} !/info.html$
    RewriteCond %{REMOTE_ADDR} !^12\.345\.678\.90
    RewriteRule $ https://mysite.ru/info.html [R=302,L]

In the example, replace the IP address (12\.345\.678\.90) with your own so that you can still reach the site, and in the last line specify the address of the page on your site that explains the nature of the work and when it will be finished.
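A 302 redirect tells robots that the page has temporarily moved. An alternative that some administrators prefer is to answer with status 503 (Service Unavailable), which signals that the site itself is temporarily down. A minimal sketch, assuming the notice page is /info.html on the same site and using 203.0.113.10 as a placeholder for your own IP address:

```apache
# Serve /info.html as the body of every 503 response
ErrorDocument 503 /info.html

RewriteEngine On
# Your own address still sees the real site
RewriteCond %{REMOTE_ADDR} !^203\.0\.113\.10$
# The notice page itself must stay reachable
RewriteCond %{REQUEST_URI} !^/info\.html$
# Everything else gets status 503; the "-" means no substitution
RewriteRule ^ - [R=503,L]
```

Remove these directives as soon as the work is finished, otherwise visitors and robots will keep seeing the notice.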

General .htaccess rules

- Always back up the file before making changes so that you can quickly roll them back.

- Make changes step by step, add one rule and evaluate how it worked.

- If you place several .htaccess files in different directories, write in the child files only the new directives that are relevant for that directory; the rest are inherited from the parent directory or from the file in the root folder.

- Clear your browser cache after changes: Ctrl + F5; in Safari, Ctrl + R; on macOS, Cmd + R.

- If a 500 error occurs, check the syntax of the rule for typos. Online .htaccess checkers can help. If no errors are found, this type of directive is probably prohibited in the main configuration file, and you will have to consult a programmer or the hosting provider.

- Cyrillic characters are not allowed in .htaccess directives. If you need to specify a Cyrillic domain (for example, one in the .рф zone), use any whois service to find its Punycode spelling. For example, the address "сайт.рф" would look like "xn--80aswg.xn--p1ai/$1" in a rewrite rule.

- Too many directives in .htaccess can reduce the site's performance. Try to use the file only if the problem cannot be solved in another way.

- If you don't have time to study the directives in detail, use an .htaccess generator.
