Computer graphics in cinema (video plus article)

A young Schwarzenegger in 2009, the landscape rushing past a car window, the roaring stands of a stadium, a running horde of zombies, a medieval castle against a peaceful landscape (and even the peaceful landscape itself), twins played by a single actor, a jock who has lost his muscles, and every kind of disaster that destroys long-suffering New York over and over again.

How is all this done? Right on the set or on a computer?

In other words, special effects or visual effects?

The two are often confused, but computer graphics in film belongs to visual effects.

That is what we will talk about today. Below the cut you will find the video and its text version adapted for the article. Lots of pictures!

Modeling

First, modeling. And, so as not to spread ourselves too thin, sculpting, preparation for animation, and shading go here too.

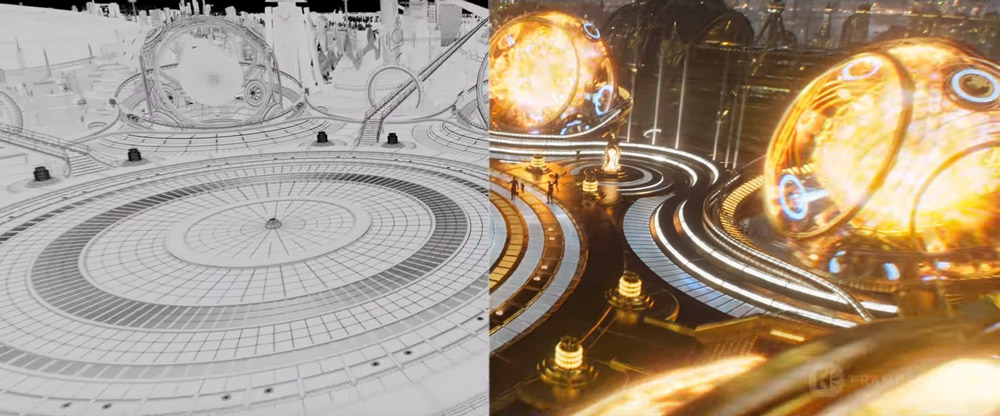

It's simple: to show the audience a scene that does not exist in reality, when building a set is too laborious or too expensive, there is no time, or the action takes place in a Pixar cartoon, you need to build that scene virtually.

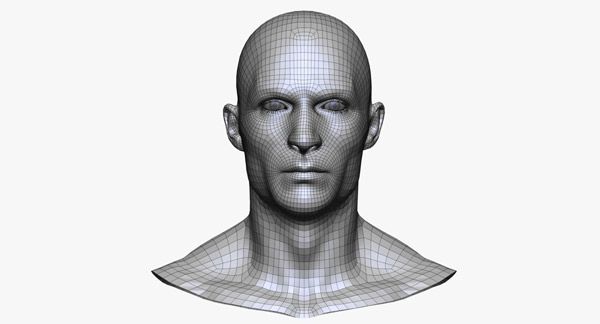

The most popular technology for describing objects in 3D graphics is the polygonal mesh. An object is described by points (vertices), edges connecting them, and faces. A face bounded by three or more vertices is called a polygon. This means that any computer character in a movie is, in fact, a polyhedron, just one with a great many faces.
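
To make the vertex, edge, and face description concrete, here is a minimal sketch in Python. The `Mesh` class and the cube data are illustrative assumptions, not the storage format of any real 3D package (which also keep normals, UVs, and adjacency data). Edges are derived from the face loops here rather than stored.

```python
from dataclasses import dataclass


@dataclass
class Mesh:
    """Hypothetical minimal mesh: vertex positions plus faces stored as loops of vertex indices."""
    vertices: list
    faces: list

    def edges(self):
        """Derive the unique, undirected edges from the face loops."""
        result = set()
        for face in self.faces:
            for i in range(len(face)):
                a, b = face[i], face[(i + 1) % len(face)]
                result.add((min(a, b), max(a, b)))
        return result


# A unit cube: vertex index = 4*x + 2*y + z, six quad faces (polygons with 4 vertices each).
cube = Mesh(
    vertices=[(x, y, z) for x in (0, 1) for y in (0, 1) for z in (0, 1)],
    faces=[(0, 1, 3, 2), (4, 6, 7, 5), (0, 4, 5, 1),
           (2, 3, 7, 6), (0, 2, 6, 4), (1, 5, 7, 3)],
)

print(len(cube.vertices), len(cube.edges()), len(cube.faces))  # 8 12 6
```

For a closed surface like this cube, vertices minus edges plus faces equals 2 (Euler's formula). This makes a handy sanity check on mesh data.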

How many times have movies shown an exploding helicopter? I don't know, but I doubt there are that many helicopters in the world.

How do you shoot such a scene? You model a 3D helicopter and paint a texture for it. You tune the material settings so the surface gleams like real metal in the sun, and set up the lighting to match the filmed frame. Then you animate its flight and synchronize the virtual camera that "films" the helicopter with the real camera that has already filmed everything else.

Sometimes no real footage is needed at all: there is a whole profession devoted to painting backgrounds.

Matte painting is the creation of all the beauty that lies far away and doesn't really reveal the volume of objects: starry skies, azure shores, the plains of the Wild West.

This task is handled efficiently by a technical designer or artist who paints some things, photographs others, and models others. They then assemble the result into a form suitable for later compositing. Vast expanses often just decorate the background behind the heroes rather than taking part in the action. Building an honest 3D scene of them is costly, time-consuming, and pointless.

What about modeling something more alive?

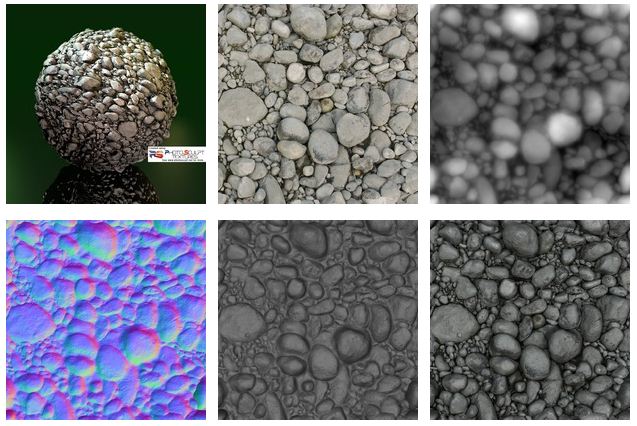

First, a rough blank with a minimum of detail is modeled, and then it is worked out in detail; this process is called sculpting. Then come texturing and animation, which may be preceded by retopology.

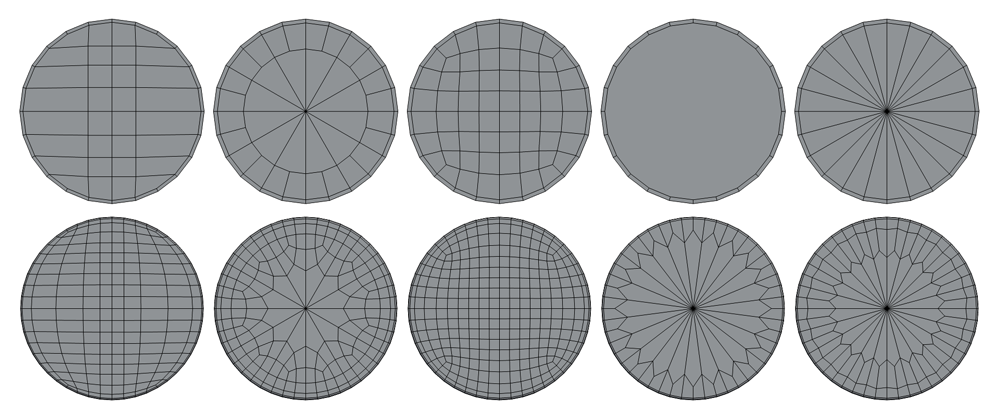

Topology is the way the mesh describes the object. Polygons can follow the form logically, repeating the natural lines of the subject.

Sculpting distorts the model heavily and in complex ways, so its topology can lose its original logic. Then retopology is done: the mesh is rebuilt, usually with far fewer polygons. Without going too deep, this makes the model easier to texture. It also lets polygons deform properly during animation without wrongly pulling on their neighbors. The fine details of the original high-poly model are then brought back with normal maps and displacement maps. These are textures that store information about the required distortions.
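
Here is a rough sketch of what a displacement map does. It assumes a tiny hypothetical height map and nearest-texel lookup; production renderers subdivide the mesh and filter the texture. A normal map, by contrast, only changes the normals used for shading and leaves the vertices where they are.

```python
def displace(vertices, normals, uvs, height_map, scale=1.0):
    """Push each vertex along its normal by the height read from a displacement map at its UV."""
    rows, cols = len(height_map), len(height_map[0])
    displaced = []
    for (px, py, pz), (nx, ny, nz), (u, v) in zip(vertices, normals, uvs):
        # Nearest-texel lookup (a simplification): map UV in [0, 1] to integer texel indices.
        col = min(int(u * cols), cols - 1)
        row = min(int(v * rows), rows - 1)
        h = height_map[row][col] * scale
        displaced.append((px + nx * h, py + ny * h, pz + nz * h))
    return displaced


# A flat low-poly quad facing +z, with a tiny hypothetical 2x2 height map.
quad = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
normals = [(0.0, 0.0, 1.0)] * 4
uvs = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
heights = [[0.0, 0.1],
           [0.2, 0.3]]
bumpy = displace(quad, normals, uvs, heights)
```
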

In a movie, computer characters usually have to do something for the plot, which means they need to be animated.

To make animators' lives easier, the model is rigged. Rigging binds the model to a conditional skeleton of bones and joints that can then be moved with convenient controls. This lets the model move believably, show emotions by moving virtual muscles, and even dance like Spider-Man in that famous GIF.
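
Under the hood, a common way to bind a mesh to a skeleton is linear blend skinning. Every vertex stores a weight per bone and ends up at the weighted average of where each bone would carry it. The 2D sketch below assumes one rotation per bone. The function names are hypothetical, and real rigs use full 3D transformation matrices.

```python
import math


def bone_transform(angle, pivot):
    """Return a function that rotates a 2D point by `angle` radians around a joint at `pivot`."""
    c, s = math.cos(angle), math.sin(angle)
    px, py = pivot

    def apply(point):
        x, y = point[0] - px, point[1] - py
        return (px + c * x - s * y, py + s * x + c * y)

    return apply


def skin(vertex, bones, weights):
    """Linear blend skinning: weighted sum of where each bone would move the vertex."""
    x = y = 0.0
    for transform, w in zip(bones, weights):
        tx, ty = transform(vertex)
        x += w * tx
        y += w * ty
    return (x, y)


# A hypothetical "arm" along +x: the upper arm stays put, the forearm bends 90 degrees at the elbow (1, 0).
arm = [bone_transform(0.0, (0.0, 0.0)), bone_transform(math.pi / 2, (1.0, 0.0))]
hand = skin((2.0, 0.0), arm, (0.0, 1.0))        # fully bound to the forearm
near_elbow = skin((1.5, 0.0), arm, (0.5, 0.5))  # shared between both bones
```

The vertex near the elbow is shared between two bones, so it lands between the two positions. That blending is what makes the bend look smooth rather than broken.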

In large projects, though, a creature is worked out from the inside. It is modeled together with a skeleton and muscles and carefully animated, so that the muscles roll under the skin and show the virtual effort.

Obviously, an artist creating a dragon has to spend many hours among real dragons for the result to look decent. Not a safe job =)

After that, the model can be worked with, but it looks uniform, like an antique statue: it is all one color and needs materials and textures.

Shading, and its important part, texturing, are done with the help of UV unwraps. Texturing means specifying how each part of the model should be colored and what optical properties it should have.
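
Texturing rests on a UV unwrap: each vertex of the model gets a (u, v) coordinate in the flat texture. The renderer then reads the color at that coordinate for every shaded point. Below is a minimal sketch of that read with bilinear filtering. It assumes a grayscale texture stored as a list of rows, and the function name is hypothetical.

```python
def sample_bilinear(texture, u, v):
    """Read a texture (list of rows of floats) at UV coordinates in [0, 1], blending 4 texels."""
    rows, cols = len(texture), len(texture[0])
    # Continuous texel coordinates; texel centers sit at integer + 0.5, edges are clamped.
    x = min(max(u * cols - 0.5, 0.0), cols - 1.0)
    y = min(max(v * rows - 0.5, 0.0), rows - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, cols - 1), min(y0 + 1, rows - 1)
    fx, fy = x - x0, y - y0
    top = texture[y0][x0] * (1 - fx) + texture[y0][x1] * fx
    bottom = texture[y1][x0] * (1 - fx) + texture[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


# A tiny hypothetical 2x2 grayscale texture: dark left column, bright right column.
tex = [[0.0, 1.0],
       [0.0, 1.0]]
middle = sample_bilinear(tex, 0.5, 0.5)
```
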

Texturing is also multi-stage work: artists paint directly on the model and also edit the unwrapped texture in Photoshop.

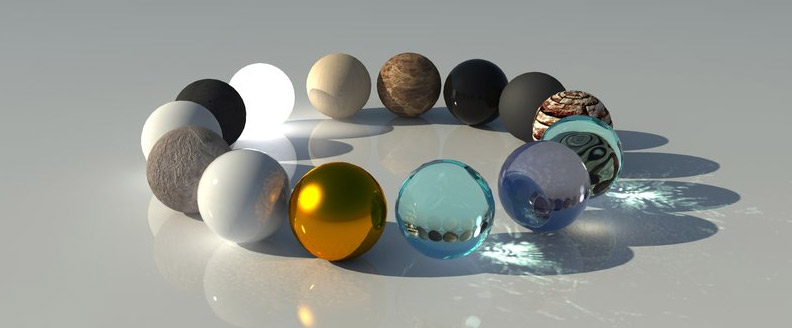

But a texture is not all the information a virtual material carries. An object may reflect other objects, be glossy, matte, translucent, or refract light. The skin of a face reflects light differently from the eye in that face, and even different areas of the skin can behave differently.

When working on an image, we are not interested in modeling physical reality down to the level of quarks; basic optics is quite enough.

In the real world, whether an object looks matte or glossy depends on its surface. If the surface is perfectly smooth, parallel rays of light are reflected in parallel, so the object looks glossy and reflections are sharp. If the surface is rough, rays bounce off small irregularities in different directions. The reflection then turns into a blurred highlight or spreads completely over the surface, and we call that matte.

Computing even such fairly high-level nuances honestly would be too expensive for modern computers. But computer imaging techniques simplify and optimize everything they can. There is no need to simulate virtual photons scattering off a rough matte surface in that much detail. You can simply set parameters that make the virtual object look dull in the light. The artist doesn't even have to program anything, because these basic functions are configured through the interface of any 3D package.
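
Here is roughly what "just set a parameter" means. The sketch uses the classic Lambert and Blinn-Phong formulas, which are a swapped-in simple model; modern renderers use physically based ones. The roughness-to-exponent conversion is an assumption for illustration.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def shade(normal, to_light, to_eye, albedo, roughness, specular=0.04):
    """Lambert diffuse plus a Blinn-Phong highlight whose tightness is driven by `roughness`."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_eye)
    n_dot_l = max(dot(n, l), 0.0)
    h = normalize(tuple(a + b for a, b in zip(l, v)))  # half vector between light and eye
    r = min(max(roughness, 1e-3), 1.0)
    # Assumed roughness -> exponent mapping: smooth surface = huge exponent = small, sharp highlight.
    shininess = 2.0 / r ** 4 - 2.0
    highlight = specular * max(dot(n, h), 0.0) ** shininess if n_dot_l > 0.0 else 0.0
    return albedo * n_dot_l + highlight


up = (0.0, 0.0, 1.0)
glossy = shade(up, (1.0, 0.0, 1.0), (0.0, 0.0, 1.0), albedo=0.5, roughness=0.2)
matte = shade(up, (1.0, 0.0, 1.0), (0.0, 0.0, 1.0), albedo=0.5, roughness=0.8)
```

Here the light and eye are placed away from the mirror direction. The rough setting therefore returns a brighter value than the glossy one: its highlight is spread wide, while the glossy highlight is concentrated around the mirror direction.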

But even with such a simplification, how do you account for skin properties changing from point to point? Preparing hundreds of similar materials for a single person is no solution. Maps are quite enough. These are textures that specify where a given parameter should act more strongly or more weakly.

There can be maps for almost everything: reflections and highlights, transparency and refraction, fake relief (bump) and true displacement, and much more. What I earlier called the skin texture is also one of the maps, namely the diffuse color map.

Textures can also be layered on top of each other with unlimited nesting and complex dependencies between them.

All this lets you build a model that, with high-quality rendering, is easy to mistake for a real object on screen. But more on rendering later; first we need to talk about the most fun part.

Simulations

Here, simulations are any scenes that are not created by hand frame by frame but develop on their own, following a set of rules.

Some movies are impossible without simulations.

2012 is a perfect example. The effects studio Uncharted Territory did a glorious job, simulating destruction in industrial quantities.

Frozen owes much of its charm to its excellent snow, which had to be developed specifically for the film.

A beautiful, huge wave like the one in Interstellar does not have to be fully simulated. It is enough to create a simple wave-like shape and simulate water flowing over its surface.

The epic sandstorm with lightning in Mad Max also took a lot of elements to create, and a great deal is going on in that scene.

Examples are countless. So what kinds of simulations are there?

Let's start with particle simulations. They are used when something needs to burn, smoke, pour, scatter, and so on.

Particles are simply points in virtual space. By themselves they have no visual representation, but one can be attached to them.

Particles become useful as a system, when they interact with other particles nearby. The rules of that interaction decide what the system imitates: water, fire, sand, jelly, snow, and much more. Throughout, the particles obey the forces set in the simulation and interact with objects in the scene.
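
Here is a minimal particle system. It assumes 2D points, with gravity as the only force and the floor as the only scene object. It steps with semi-implicit Euler, updating velocity first and then position. Neighbor interactions are left out; they are what turn a cloud of points into a specific material.

```python
from dataclasses import dataclass

GRAVITY = -9.81  # m/s^2, acting along -y (the only force in this sketch)


@dataclass
class Particle:
    x: float
    y: float
    vx: float
    vy: float


def step(particles, dt, restitution=0.5):
    """Advance every particle by dt (semi-implicit Euler) and bounce it off the floor y = 0."""
    for p in particles:
        p.vy += GRAVITY * dt          # external force set by the simulation
        p.x += p.vx * dt
        p.y += p.vy * dt
        if p.y < 0.0:                 # collision with a scene object: the floor
            p.y = 0.0
            p.vy = -p.vy * restitution


# Three particles thrown sideways from a height of 1 m, simulated for 1 s.
spray = [Particle(0.0, 1.0, 0.5 * i, 0.0) for i in range(3)]
for _ in range(100):
    step(spray, 0.01)
```
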

Once their behavior has been recorded (cached), you can attach geometry and effects that make the viewer see a material instead of a cloud of points.

Particle and fluid simulations are simplified models of how real substances behave; the main difference is a far smaller number of points. Even so, a high-quality imitation of water still requires a large number of particles. It also needs polishing afterward with effects such as foam and bubbles, which are simulated as separate processes.
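
Particle-based fluids often use smoothed particle hydrodynamics (SPH). This is one well-known method among several, and the article does not name it explicitly. Its first step is estimating density from nearby particles. Pressure forces derived from that density then push crowded particles apart. Below is a brute-force O(n²) sketch with the poly6 kernel; real solvers use spatial grids to find neighbors.

```python
import math


def poly6(r, h):
    """Poly6 smoothing kernel (3D), widely used in SPH; zero outside the support radius h."""
    if r >= h:
        return 0.0
    return 315.0 / (64.0 * math.pi * h ** 9) * (h * h - r * r) ** 3


def densities(positions, mass, h):
    """SPH density: each particle sums the kernel-weighted mass of particles within h (itself included)."""
    return [
        sum(mass * poly6(math.dist(pi, pj), h) for pj in positions)
        for pi in positions
    ]


# Two close particles and one loner, with a hypothetical smoothing radius of 0.1.
rho = densities([(0.0, 0.0, 0.0), (0.05, 0.0, 0.0), (5.0, 0.0, 0.0)], mass=1.0, h=0.1)
```

The two neighbors see each other and get a higher density than the isolated particle, which only counts itself.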

Fire, smoke, and explosions are all imitated well this way.

Granular and viscous materials that move under their own physics are made exactly this way.

Magical effects of all kinds almost always involve some blue sparks, and those are made with particles too.

And so on.

Object simulations are another big topic. Besides destroying cities, physics engines compute the behavior of cars, crowds, and fabric. In fact, they handle anything at all, as long as computing it gives a quality result faster than doing it by hand.

For example, even in a completely static architectural visualization, it is easier to drape a blanket over a bed or tie back curtains with a ribbon using a quick simulation.

Among the typical tasks, we should also mention hair and fur.

The major 3D packages have ready-made hair modules. However, they were not advanced enough for Rapunzel's hair, so Disney developed its own system.

In more mundane cases, CG hair is a system of short strands, each with several joints so that it can bend smoothly, with simple geometry attached on top.
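
One strand can be sketched as a chain of points simulated with Verlet integration and distance constraints (position-based dynamics). This is a common approach for ropes and hair, though not necessarily what any particular package uses. The parameters are illustrative assumptions, including the damping that stands in for air drag.

```python
import math


def simulate_strand(n_points=5, seg_len=0.1, steps=200, dt=1.0 / 60.0,
                    gravity=-9.81, damping=0.99, iterations=10):
    """One hair strand as a chain of points: Verlet integration plus length constraints.

    Point 0 is the root, pinned to the scalp at the origin; the strand starts horizontal.
    """
    pos = [(i * seg_len, 0.0) for i in range(n_points)]
    prev = list(pos)
    for _ in range(steps):
        # Verlet step: velocity is implied by (pos - prev); damping stands in for air drag.
        for i in range(1, n_points):
            x, y = pos[i]
            px, py = prev[i]
            prev[i] = (x, y)
            pos[i] = (x + (x - px) * damping,
                      y + (y - py) * damping + gravity * dt * dt)
        # Enforce segment lengths several times so the strand bends but does not stretch.
        for _ in range(iterations):
            for i in range(n_points - 1):
                (ax, ay), (bx, by) = pos[i], pos[i + 1]
                dx, dy = bx - ax, by - ay
                dist = math.hypot(dx, dy) or 1e-9
                corr = (dist - seg_len) / dist
                if i == 0:  # root is pinned: move only its child
                    pos[i + 1] = (bx - dx * corr, by - dy * corr)
                else:       # share the correction between both points
                    pos[i] = (ax + dx * corr / 2, ay + dy * corr / 2)
                    pos[i + 1] = (bx - dx * corr / 2, by - dy * corr / 2)
    return pos


strand = simulate_strand()
```

The root stays pinned while the rest of the strand falls and settles. Keeping segment lengths fixed is what lets it bend without stretching.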

Hair simulation is a time-consuming headache, because the hair belongs to a character, and a character can have a lot of screen time. Badly tuned hair tends to pass through itself and produce strange glitches. It generally makes you want to make films only about bald people.

Rendering hair well, without noticeable artifacts, also takes a long time: each hair is simple, but you rarely find just one.

Rendering

And so we come to rendering.

I once played a Shrek computer game, and I still remember being puzzled that its graphics were much worse than in the cartoon. Come on, it's computer animation, just take the graphics from it and make a game!

A bit later, I read an interview with the creators of the cartoon Cars. Among other things, they said each frame took several hours to render on a farm of many computers.

That is how I learned that producing a frame for a game and producing a frame of film graphics are different tasks. In the first case, a heavily optimized image has to be produced many times per second; in the second, a single frame can take hours.
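
The difference in scale is easy to put into numbers with a back-of-the-envelope sketch. 60 fps for the game and 24 fps for the film are standard rates. The two hours per frame and the 1000-machine farm are hypothetical figures, not numbers from the Cars interview.

```python
def realtime_budget_ms(fps):
    """Milliseconds available to draw one frame in a game running at `fps` frames per second."""
    return 1000.0 / fps


def offline_render_hours(minutes, hours_per_frame, machines, fps=24):
    """Wall-clock hours to render `minutes` of film on a farm, one frame per machine at a time."""
    frames = minutes * 60 * fps
    return frames * hours_per_frame / machines


game = realtime_budget_ms(60)                                      # about 16.7 ms per frame
film = offline_render_hours(90, hours_per_frame=2, machines=1000)  # hypothetical figures
```

A game gets about 16.7 ms per frame. A 90-minute film at these assumed rates needs 129,600 frames and about 259 hours of farm time, roughly eleven days.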

The program that turns a 3D scene into an image is called a renderer, and the process is called rendering.

The renderer is closely tied to material shading and lighting, because it provides their foundation. Base materials that can be tuned however you like, light sources, virtual cameras: all of this works in close conjunction with the renderer. 3D packages ship with their own standard renderers and almost always allow third-party ones to be plugged in.

The best-known combination is 3ds Max + V-Ray, the basic kit for architectural visualization. Yes, it is yesterday's combination, but the legacy of tutorials for it still keeps the entry barrier fairly low. And a 3D artist's résumé is usually expected to mention Max.

Over the past ten years, renderers have come a long way toward photorealism. Freely available materials and maps let you get a decent result quickly even at home, with some reservations. Still images can be done with almost no restrictions: a freelancer's render takes a few hours, so you start it for the night, and that's it. Video is much harder; you will most likely have to rent cloud computing power.

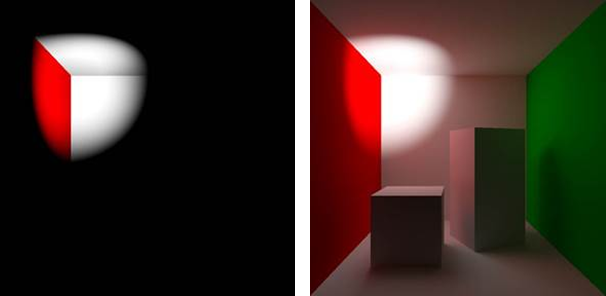

I have already covered models and materials, but there is one more important thing: light.

Nothing looks natural when it is lit by primitive light sources. Good lighting is very expensive in computing resources, so game developers imitate it in all sorts of ways just to keep a stable frame rate.

Games use three kinds of light. Point lights are the first kind. Directional (parallel) lights imitate effectively infinitely distant sources like the Sun. Ambient light is illumination without a specific source and therefore without shadows. Besides, a game scene contains few real light sources, because they are very expensive.
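
The three game light types differ mainly in how they compute brightness at a point. This minimal sketch assumes Lambert (cosine) diffuse shading, unit-length direction vectors, and no shadows; all names are hypothetical.

```python
import math


def lambert(normal, light_dir):
    """Cosine term: how directly light hits the surface (both vectors unit length)."""
    return max(sum(n * l for n, l in zip(normal, light_dir)), 0.0)


def point_light(point, normal, light_pos, intensity):
    """Point light: direction depends on position, brightness falls off with distance squared."""
    d = [lp - p for lp, p in zip(light_pos, point)]
    dist2 = sum(c * c for c in d)
    dist = math.sqrt(dist2)
    return intensity * lambert(normal, [c / dist for c in d]) / dist2


def directional_light(normal, direction_to_light, intensity):
    """Directional light (e.g. the Sun): same direction and strength everywhere, no falloff."""
    return intensity * lambert(normal, direction_to_light)


def ambient_light(intensity):
    """Ambient light: constant everywhere, no direction, therefore no shadows."""
    return intensity


floor_point, floor_normal = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)
total = (point_light(floor_point, floor_normal, (0.0, 0.0, 2.0), 8.0)
         + directional_light(floor_normal, (0.0, 0.0, 1.0), 1.0)
         + ambient_light(0.1))
```

The point light weakens with the square of distance, and the directional light does not. The ambient term ignores geometry entirely, which is why it casts no shadows.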

Imitating lighting without live light sources was mastered long ago. In 3D games, the standard approach for a long time was lightmaps baked into textures: the lighting pattern was computed in advance and then simply painted onto the scene's objects. The drawback is obvious: you cannot have dynamic light. A character running past a painted light source will not block the virtual rays, and no correct shadow appears.
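
The drawback of baked lighting shows up clearly in a toy 2D example. Lighting for a row of floor texels is computed once, with only static occluders, and at runtime the game merely reads the stored values. The scene setup is a simplified assumption: a point light, occluders as horizontal segments, the "character" as one such segment, and inverse-square falloff without a cosine term.

```python
def light_at(x, light, intensity, occluders):
    """Direct light from a point light reaching floor point (x, 0), with hard shadows."""
    lx, ly = light
    for oy, ox0, ox1 in occluders:      # horizontal blocker at height oy spanning [ox0, ox1]
        if 0.0 < oy < ly:
            x_cross = x + (lx - x) * (oy / ly)  # where the ray to the light passes height oy
            if ox0 <= x_cross <= ox1:
                return 0.0
    return intensity / ((lx - x) ** 2 + ly ** 2)  # inverse-square falloff, cosine ignored


def bake_lightmap(texel_xs, light, intensity, static_occluders):
    """Bake once, offline: store one brightness value per floor texel."""
    return [light_at(x, light, intensity, static_occluders) for x in texel_xs]


light = (0.0, 2.0)
lightmap = bake_lightmap([0.0, 1.0, 2.0, 3.0], light, 4.0, static_occluders=[])

# At runtime a character (crudely modeled as a blocker at height 1 over x in [0.4, 0.6]) walks in.
character = [(1.0, 0.4, 0.6)]
baked_value = lightmap[1]                             # the game only reads the texture
dynamic_value = light_at(1.0, light, 4.0, character)  # what honest lighting would give
```

Honest lighting puts the texel under the character in shadow, while the lightmap still says it is lit.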

But methods can be combined, and various other tricks can be applied. That is exactly what game developers do in the fight for frame rate.

In graphics where real-time rendering is not required, however, light is simulated far more rigorously and in much more detail.

Area and volumetric light sources are added to the above, and any object can be made emissive. Even simple sources use more physically honest algorithms that trace rays correctly. Even an HDR image of the environment, shot in your own backyard, can serve as a light source.

And the coolest thing is global illumination with multiple bounces of virtual photons. It dramatically increases a scene's realism in a dozen clicks, at the cost of a significant increase in render time.
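
A toy case shows why extra bounces add realism, and cost. Imagine a closed room whose walls all emit light `emission` and reflect a fraction `albedo` of what hits them. Each bounce adds another `albedo` times the previous contribution, so the radiance converges to emission / (1 - albedo). The sketch compares a truncated sum with a Monte Carlo random walk that uses Russian roulette, the kind of sampling path tracers rely on. The room itself is an idealized assumption.

```python
import random


def radiance_analytic(emission, albedo, bounces):
    """Radiance in an idealized closed room after a given number of bounces (geometric series)."""
    return emission * sum(albedo ** k for k in range(bounces + 1))


def radiance_monte_carlo(emission, albedo, samples, rng):
    """Random-walk estimate: each path survives a bounce with probability `albedo` (Russian roulette)."""
    total = 0.0
    for _ in range(samples):
        while True:
            total += emission              # light emitted toward us from this path vertex
            if rng.random() >= albedo:     # path is absorbed: stop following it
                break
    return total / samples


direct_only = radiance_analytic(1.0, 0.5, bounces=0)
one_bounce = radiance_analytic(1.0, 0.5, bounces=1)
estimate = radiance_monte_carlo(1.0, 0.5, samples=100_000, rng=random.Random(42))
```

With albedo 0.5, direct light alone gives 1.0, one bounce gives 1.5, and the full answer is 2.0. In this room, half the light comes from indirect bounces.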

In games, global illumination is imitated with simpler shading tricks, but even those are very hard on performance.

The more complex the scene, the harder it is to compute its lighting. Materials can reflect light or let it through, refracting and focusing it; the focused light patterns are called caustics. Caustics also noticeably stretch render time, so it is hard to make cut diamonds at home, even virtual ones.
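
Every refraction event bends a ray according to Snell's law. Caustics appear when many refracted rays converge on the same spot, and renderers need a lot of extra paths (or techniques such as photon mapping) to resolve them. Here is a sketch of the refraction step, following the same formula as GLSL's `refract`. The only physical constant used is diamond's refractive index of about 2.42.

```python
import math


def refract(incident, normal, eta):
    """Refracted direction for a unit `incident` ray hitting a surface with unit `normal`.

    `normal` points back toward the incident side; eta = n1 / n2 (e.g. 1.0 / 2.42 from air
    into diamond). Returns None on total internal reflection.
    """
    cos_i = -sum(i * n for i, n in zip(incident, normal))
    k = 1.0 - eta * eta * (1.0 - cos_i * cos_i)
    if k < 0.0:
        return None  # total internal reflection: no transmitted ray
    return tuple(eta * i + (eta * cos_i - math.sqrt(k)) * n for i, n in zip(incident, normal))


DIAMOND = 2.42
s = math.sqrt(0.5)
into_diamond = refract((s, 0.0, -s), (0.0, 0.0, 1.0), 1.0 / DIAMOND)  # 45 degrees, bends toward normal
out_of_diamond = refract((s, 0.0, -s), (0.0, 0.0, 1.0), DIAMOND)      # 45 degrees from inside
```

A ray inside a diamond that hits a facet at 45 degrees is totally reflected instead of leaving. This is a large part of why a cut stone sparkles, and why it is expensive to render.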

Subsurface scattering (SSS) deserves a separate mention.

Without it, imitations of human skin and other materials that are not fully opaque look rubbery and unnatural. You can easily see the effect by covering a light source with your hand: photons scattered many times inside the hand make it glow.
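
A crude way to see why backlit skin glows red: light passing through tissue is attenuated more strongly in blue and green than in red. The sketch applies Beer-Lambert falloff per color channel, and the coefficients are hypothetical. It ignores the multiple scattering that real SSS simulates, so treat it as an illustration, not an SSS model.

```python
import math

# Hypothetical extinction coefficients per color channel, in 1/mm (illustrative values only):
# tissue lets red light through more easily than green or blue.
SIGMA_PER_MM = {"r": 0.5, "g": 1.5, "b": 2.5}


def transmitted_color(light_rgb, thickness_mm):
    """Attenuate backlight passing straight through a slab: Beer-Lambert falloff per channel."""
    return tuple(
        intensity * math.exp(-SIGMA_PER_MM[ch] * thickness_mm)
        for ch, intensity in zip("rgb", light_rgb)
    )


# White light behind a hypothetical 2 mm thin edge comes out noticeably reddish.
glow = transmitted_color((1.0, 1.0, 1.0), 2.0)
```
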

When we simply look at a person, the effect is not very noticeable, but a computer face that lacks it hardly looks human.

That's why making a monster is much easier.

Compositing

Once the actors have been filmed, the graphics rendered, the backgrounds painted, and the footage prepared, it is time to put it all together. Additional effects bring the world to life, so that the result does not look like a set of pictures from different worlds.

Compositing is complex multi-layer assembly that combines all the material in the frame. Layers can be placed freely in virtual space, undergo complex processing, and affect each other. Any frame with computer graphics is the result of compositing.
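
The most basic compositing operation is placing one layer over another. Here is a sketch of the Porter-Duff "over" operator on premultiplied RGBA pixels, assuming values in [0, 1]. Compositing packages apply the same idea per pixel across whole images and many layers; the function names here are hypothetical.

```python
from functools import reduce


def over(fg, bg):
    """Porter-Duff 'over' for premultiplied RGBA pixels: layer fg placed on top of bg."""
    alpha_fg = fg[3]
    # Same formula for color and alpha: result = fg + (1 - alpha_fg) * bg
    return tuple(f + (1.0 - alpha_fg) * b for f, b in zip(fg, bg))


def composite(layers):
    """Flatten a stack of premultiplied RGBA pixels, listed from bottom to top."""
    return reduce(lambda below, above: over(above, below), layers)


background = (0.0, 0.0, 1.0, 1.0)   # opaque blue plate
element = (0.5, 0.0, 0.0, 0.5)      # half-transparent red element, premultiplied
pixel = composite([background, element])
```
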

Before the rendering section, I skipped one important step, and it is what makes combining footage possible in the first place.

The actors are filmed by a camera. The computer graphics are also "seen" by a virtual camera, which can be controlled, but it is not the same camera that filmed the actors. How do you synchronize the movements of the real and virtual cameras?

Maybe you don't have a fancy camera that records all of its movements and rotations, but you really want to attach a Terminator's red eye to yourself. Then you will have to do tracking.

Tracking is the magic that happens when a program analyzes motion in the frame and, based on that data, reconstructs the space and a moving camera. It can also reconstruct a moving space with a static camera, or even a case where everything moves relative to everything else. Tracking lets you bind a virtual camera to real footage and therefore add a virtual object to the scene correctly.
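
At its core, a camera solve looks for the camera pose whose projection of the scene best matches the features tracked in the footage. This toy sketch uses a pinhole camera that can only slide along x. The 3D points are assumed already known, and the focal length, image center, and point coordinates are hypothetical. Real trackers solve for full position and rotation, lens distortion, and the 3D points themselves, usually with bundle adjustment instead of a brute-force search.

```python
def project(point, cam_x, focal_px=1000.0, cx=960.0, cy=540.0):
    """Pinhole projection for a camera at (cam_x, 0, 0) looking down +z, with no rotation (assumed)."""
    x, y, z = point[0] - cam_x, point[1], point[2]
    return (focal_px * x / z + cx, focal_px * y / z + cy)


def reprojection_error(points, observed, cam_x):
    """Sum of squared pixel distances between projected 3D points and tracked 2D features."""
    err = 0.0
    for p, (u, v) in zip(points, observed):
        pu, pv = project(p, cam_x)
        err += (pu - u) ** 2 + (pv - v) ** 2
    return err


def solve_camera_x(points, observed, candidates):
    """Toy solver: pick the candidate camera position with the smallest reprojection error."""
    return min(candidates, key=lambda c: reprojection_error(points, observed, c))


scene = [(0.0, 0.0, 10.0), (1.0, 1.0, 12.0), (-2.0, 0.5, 8.0)]
tracked = [project(p, 0.3) for p in scene]          # features "tracked" from a camera at x = 0.3
solved = solve_camera_x(scene, tracked, [i * 0.1 for i in range(-10, 11)])
```
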

The final frame is assembled from a whole mosaic of flat layers and sometimes 3D objects placed in virtual space. After that it goes through color grading, but that belongs more to editing than to visual effects.

If you are interested, search YouTube for the original title of any blockbuster plus the word "breakdown". Effects studios always put together reels demonstrating their work.

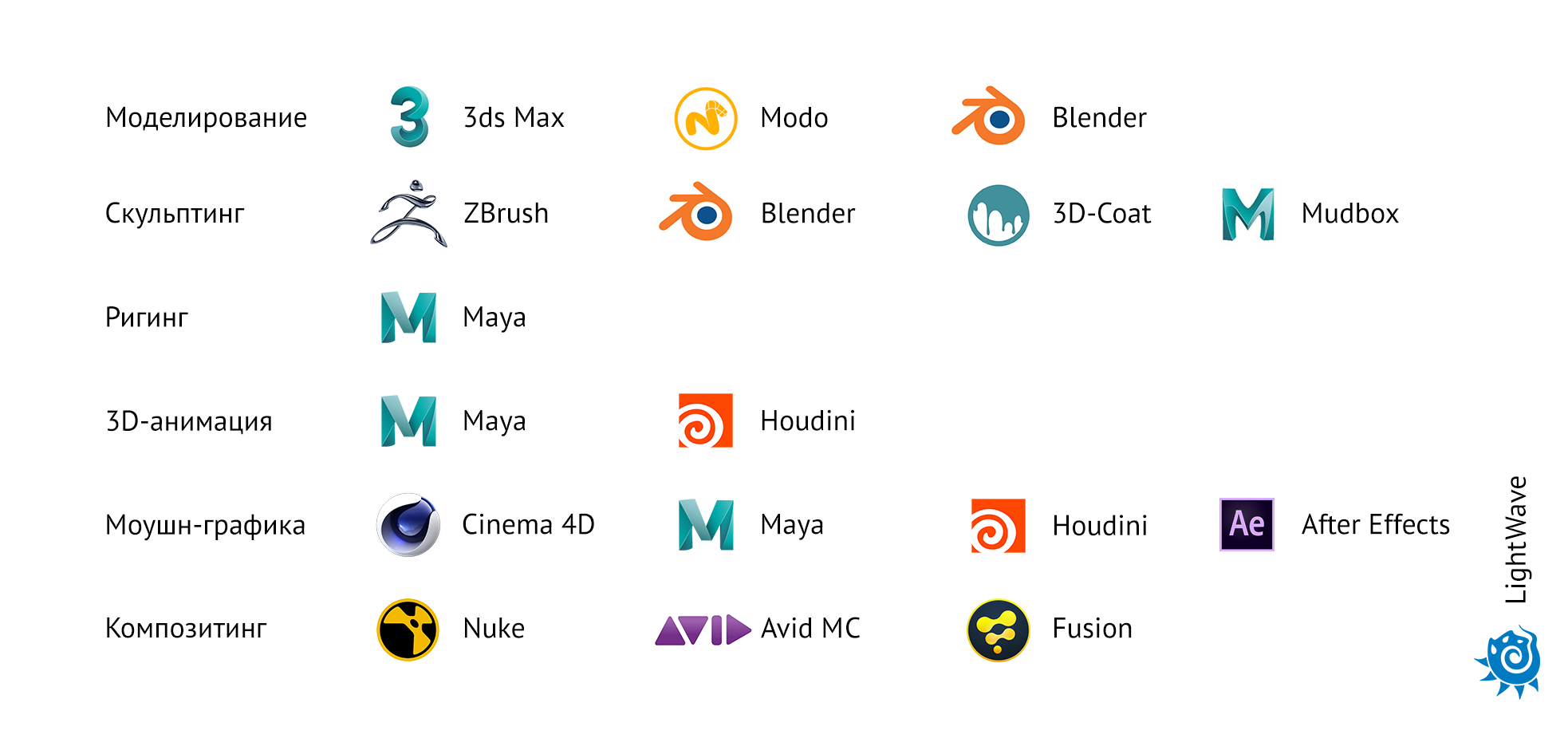

Software

What is all this made in? Some magical programs unavailable to mere mortals? Clearly not Windows Movie Maker, where I cranked out tuning videos for Need for Speed!

I may repeat products mentioned above here, so that all the software is in one place and easy to find if you suddenly need it.

Many have heard of 3ds Max from Autodesk. In practice, it is most often used for architectural visualization. It is also used for modeling. Over its long history, so many tutorials and plug-ins have been made for Max, for every occasion, that you can master everything fairly quickly. Modeling is also done in MODO and Blender.

Besides Max, Autodesk releases Maya, which is convenient for rigging and animation.

Sculpting is done mostly in ZBrush, but it can also be done in Blender, 3D-Coat (which is also handy for retopology), or Mudbox.

Motion graphics are made in Cinema 4D and, for some time now, in Maya, since it gained procedural animation tools.

But the king of complex procedural animation is Houdini. That is where simulations are done.

2D motion graphics and simple 3D are made in Adobe After Effects (a kind of Photoshop in motion with a simple 3D engine), which can also handle simple compositing.

Serious compositing is done in Nuke, Avid Media Composer, and Fusion.

There is also LightWave, but nobody knows what it is for =)

There are tons of products on the market, each for its own tasks, and often several for the same task. Studios often develop their own solutions for specific needs, but you don't need those at home. For most everyday tasks, or for learning how 3D works, you can take the free Blender and gain some experience.

A funny nuance: commenters on the video wrote that Blender is nice to start with, but learning Max afterward is hard and quite uncomfortable because of its unfriendly interface. And a résumé that doesn't specifically mention Max is considered much less attractive.

That's all. Happy New Year, everyone!
