How new interfaces will replace the keyboard

This article looks at the interfaces and technologies that will come after touchscreens and voice recognition.

Apple Watch, which, by the way, is far from the most powerful computer to date, can handle gigabytes of data every second. Our brain has tens of billions of neurons and more than four quadrillion connections, while the amount of information that it can process every second is so great that we even can’t estimate it. However, the bridge between the incredibly powerful human brain and the equally fast world of high-tech 0s and 1s is nothing more than how modest devices, the computer keyboard and mouse, have long become commonplace.

Apple Watch is 250 times more powerful than the computer with which the Apollo lunar module was able to land on the moon's surface. As computers developed, the field of their use became more and more wide: from computerization of entire buildings to computer nanometers. However, the keyboard is still the most reliable and widely used means of interaction between humans and computers.

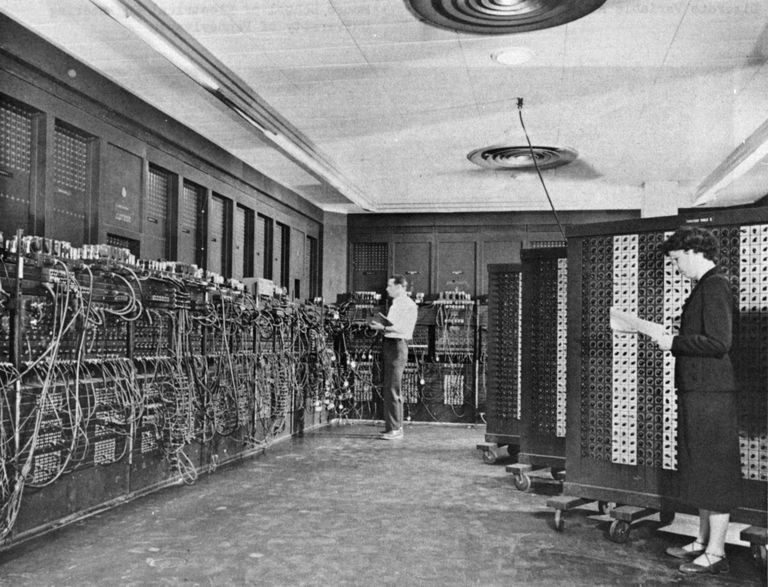

The development of the first computer keyboard began more than 50 years ago.

Already today we can observe the widespread introduction of computers into all sorts of objects around us, however, since it is not always convenient to connect a keyboard and mouse to such computerized objects, you need to find other ways to ensure interaction. At the moment, the most relevant solution is the interaction using smart objects or IoT - voice recognition devices. Well, let's take a closer look at the interaction methods that developers and research companies are working on today.

Advances in multi-touch technology and multi-touch gestures made the touch screen a favorite among interfaces. Researchers and owners of startups are conducting research aimed at improving the interaction with the help of touches: for example, devices will be able to determine how hard you tap the screen, what part of your finger you are doing, and who exactly touches the device.

iPhone's 3D Touch can determine the strength with which you touch the screen.

Qeexo is able to understand which part of your finger you touch the screen.

One of my favorites is the Swept Frequency Capacitive Sensing (SFCS) capacitive sweep frequency sensor, developed by Professor Chris Harrison of Carnegie Mellon University.

DARPA funded research in this area as far back as the 70s (!), But until recently such studies were not applied in any way. Nevertheless, thanks to the technology of deep learning, modern voice recognition devices are widely used. At the moment, the biggest problem with voice recognition is not deciphering them, but rather, with devices understanding and understanding of the meaning of the message being transmitted to them.

Hound copes with contextual speech recognition.

Eye tracking systems measure either the direction of the eye or the movement of the eye relative to the head. In view of the reduction in the cost of cameras and sensors, as well as the ever-increasing popularity of virtual reality glasses, the interaction of users and computers with the help of eye movement tracking systems becomes more relevant than ever.

The technology acquired by Google, Eyefluence, provides interaction with virtual reality through the movements of your eyes.

Tobii, an IPO company (Initial Public Offering) in 2015, and consumer electronics manufacturers are conducting joint research on eye tracking systems.

In my opinion, gesture tracking systems are the coolest among human-computer interaction systems. I personally conducted research on various methods of tracking gestures, and here are some of the technologies used today:

Data from the accelerometer, gyroscope and compass (all together or only some of them) are used to track gestures. The need for re-calibration and a fairly low coefficient of correspondence between incoming and received information are some of the problems inherent in this method.

The result of a study conducted by the Future Interfaces Group's CMU group was a bright classification using data with a high sampling rate.

Many of the cool gesture tracking systems presented above use a combination of high-resolution cameras, infrared illuminators and infrared cameras. Such systems work as follows: such a system projects thousands of small dots onto an object, and the distortion varies depending on how far such an object is located (there are many other similar methods, for example ToF, but I don’t go into their work I will). Various variants of this technology are used in the following platforms: Kinect, Intel's RealSense, Leap Motion, Google's Tango.

Leap Motion is a device for tracking gestures.

Apple has taken a step towards the introduction of such a system in the front camera iPhone X for FaceID.

In this method, a finger or other parts of the body are a conductive object that distorts the electromagnetic field that is created when the antennae of the transmitter touch the object.

AuraSense Smart Watch uses 1 transmitter and 4 antennas to track gestures.

Radar has long been used to track the movements of various objects - from airplanes to ships and cars. Google ATAP conducted literally jewelry work, creating a radar in the form of a microchip measuring 8 by 10 mm. This universal chipset can be embedded in smart watches, televisions and other devices in order to track movements.

Project Project Soli by Google ATAP.

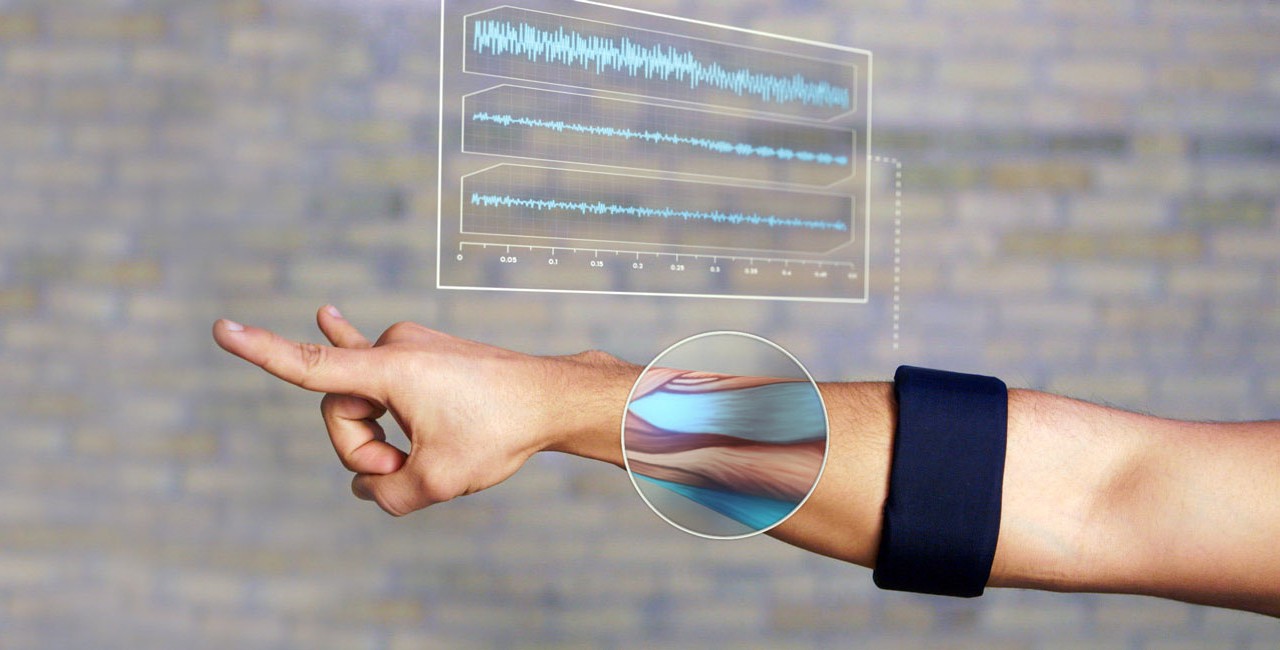

Muscular-Machine Interface from Thalmic Labs.

If these modern technologies have not yet plunged you into a light stupor, then let's not dwell on it. All of the above methods measure and detect the side effect of our gestures.

Processing the signals directly from the muscle nerves is another step towards improving the interaction between man and the computer.

Treatment of the surface electromyographic signal (sEMG) is provided by installing sensors on the skin of the biceps / triceps or forearm, while signals from different muscle groups are sent to the tracking device. Due to the fact that the sEMG signal is quite noisy, it is possible to determine certain movements.

Thalmic Labs was one of the first companies to develop a sEMG-based user device - the Myo bracelet.

By purchasing such a device, you, of course, want to wear it on your wrist, but your wrist muscles are deep enough, so it will be difficult for the device to get an accurate signal to track gestures.

CTRL Labs, which has been on the market for so long, has created a gesture tracking device using sEMG signals that you can wear on your wrist. Such a device from CTRL Labs measures the sEMG signal and detects a neural drive that comes from the brain following the movement. This method is another step towards effective interaction between the computer and the human brain. Thanks to the technology of this company, you can type a message on your phone with your hands in your pockets.

Over the past year, a lot has happened: DARPA invested $ 65 million in the development of neural interfaces; Ilon Musk raised $ 27 million for Neuralink; Kernel founder Brian Johnson invested $ 100 million in his project; and Facebook has begun work on the development of the neurocomputer interface (NCI). There are two types of NCIs:

A device for electroencephalography (ElectroEncephaloGraphy) receives signals from sensors mounted on the scalp.

Imagine a microphone mounted above a football stadium. You will not know what each person is saying, but by loud greetings and claps you can see if the goal has been achieved.

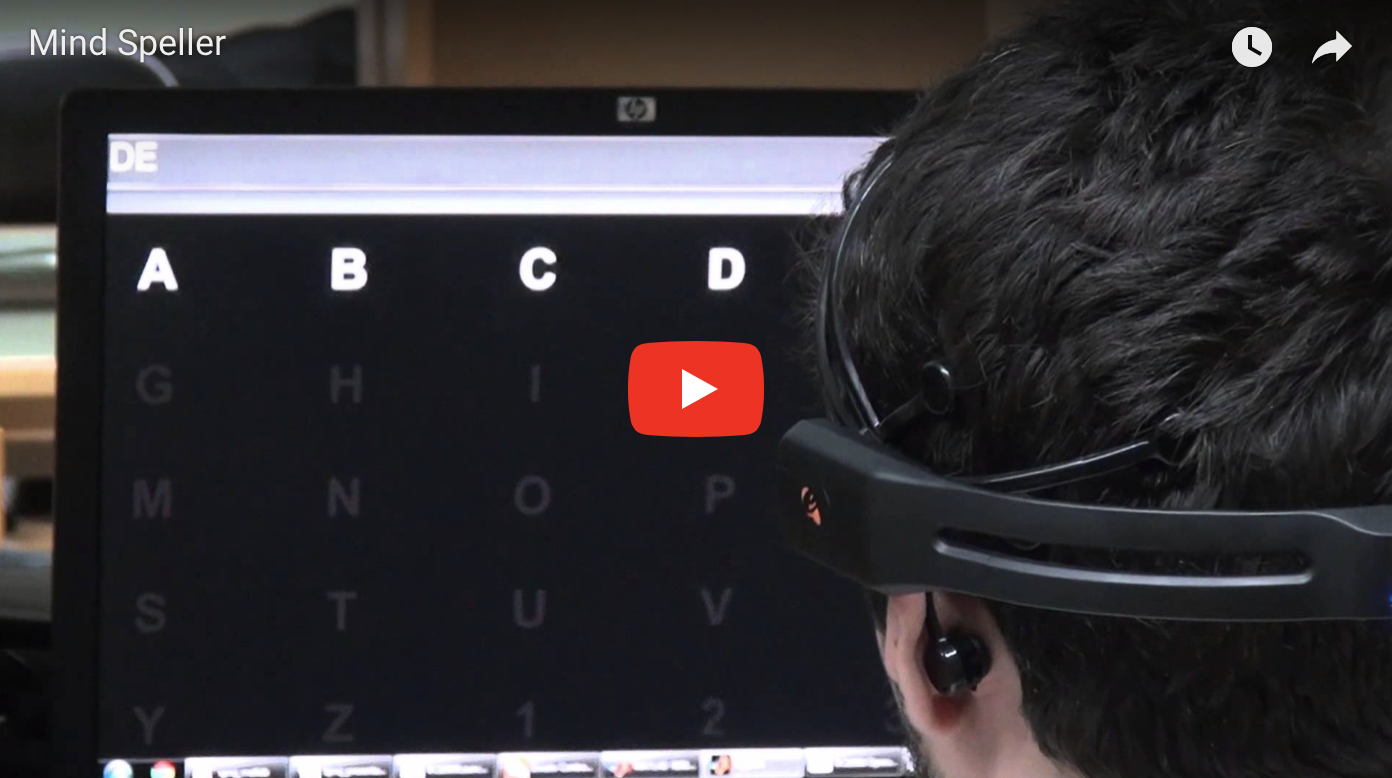

ElectroEncephaloGraphy (EEG) based interfaces really can't literally read your mind. The most commonly used NCI paradigm is the P300 Speller. For example, you want to type the letter "R"; computer randomly shows different characters; As soon as you see the “R” on the screen, your brain is surprised and gives a special signal. This is quite a resourceful way, but I would not say that the computer “reads your thoughts”, because you cannot determine what a person thinks about the letter “R”, and this is more like a magic trick that, however, does not work.

Companies such as Emotiv, NeuroSky, Neurable and several others have developed EEG headsets for a wide consumption market. Building 8 from Facebook announced the Brain Typing project, which uses another method to detect signals from the brain, called near-infrared functional spectroscopy (fNIRS), which aims to track 100 words per minute.

Neural interface Neurable.

At the moment, this is the highest level in the field of man-machine interface. The interaction of a person and a computer with the help of invasive NCI is provided by attaching electrodes directly to the human brain. However, it is worth noting that the supporters of this approach are faced with a number of unsolved problems that they still have to solve in the future.

Perhaps, reading this article, a thought occurred to you: they say, if all the above-mentioned technologies already exist, then why do we still use the keyboard and mouse. However, in order for a new technology that allows a person to interact with a computer, could become a consumer product, it must have some features.

Would you use the touchscreen as the main interface if it only responded to 7 out of 10 of your touches? It is very important that the interface, which will be fully responsible for the interaction between the device and the user, has the highest possible accuracy.

Waiting time

Just imagine: you type a message on the keyboard, and the words on the screen appear only 2 seconds after pressing the keys. Even a one second delay will have a very negative effect on the user's experience. A man-machine interface with a delay of even a few hundred milliseconds is simply useless.

The new man-machine interface should not imply the study of many special gestures by users. For example, imagine that you would have to learn a separate gesture for each letter of the alphabet!

Feedback

The sound of a keyboard click, the vibration of the phone, a small beep of the voice assistant - all this serves as a kind of notification of the user about the end of the feedback cycle (or the action caused by it). The feedback loop is one of the most important aspects of any interface that users often don’t even pay attention to. Our brain is arranged in such a way that it is waiting for confirmation that an action has been completed and we have received some result.

One of the reasons why it is very difficult to replace the keyboard with any other gesture tracking device is the lack of the ability of such devices to explicitly notify the user about the completed action.

At the moment, researchers are already working hard to create touchscreens that can provide tactile feedback in 3D, so that interaction with touch screens should reach a new level. In addition, Apple has done a truly tremendous job in this direction.

Because of all of the above, it may seem to you that we never see how something new will change the keyboard, at least in the near future. So, I want to share with you my thoughts about what features the interface of the future should have:

The Apple Watch, which is far from the most powerful computer around today, can process gigabytes of data every second. The human brain has tens of billions of neurons and more than four quadrillion connections, and the amount of information it processes every second is so vast that we cannot even estimate it. Yet the bridge between the enormously powerful human brain and the equally fast digital world of 0s and 1s is still a pair of modest devices that have long been commonplace: the computer keyboard and mouse.

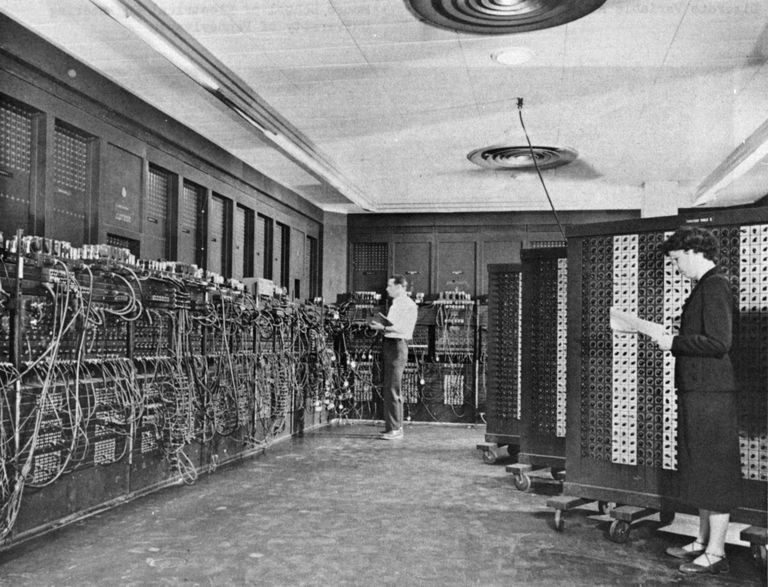

The Apple Watch is 250 times more powerful than the computer that guided the Apollo lunar module to its landing on the Moon. As computers developed, they found ever wider uses and shrank from machines that filled entire buildings to components measured in nanometers. Yet the keyboard remains the most reliable and widely used means of interaction between humans and computers.

The development of the first computer keyboard began more than 50 years ago.

What will replace the keyboard and mouse?

Computers are already being built into all sorts of everyday objects. Since it is not always convenient to attach a keyboard and mouse to such objects, other ways of interacting with them are needed. For smart objects and IoT devices, the most practical solution at the moment is voice interaction. Let's take a closer look at the interaction methods that developers and research companies are working on today.

Touch Interaction

Advances in multi-touch technology and multi-touch gestures have made the touchscreen everyone's favorite interface. Researchers and startups are working to make touch interaction richer: for example, devices will be able to determine how hard you press the screen, which part of your finger you are using, and who exactly is touching the device.

The iPhone's 3D Touch can detect how hard you press the screen.

Qeexo can tell which part of your finger is touching the screen.

One of my favorites is Swept Frequency Capacitive Sensing (SFCS), developed by Professor Chris Harrison of Carnegie Mellon University.
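The idea behind swept-frequency sensing is to measure the capacitive response not at a single frequency but across a whole range of them, which yields a profile whose shape changes with how the object is being touched. The sketch below illustrates only the recognition step, assuming the sweep has already been measured. The pose names, the six-step profiles and the nearest-template matching are hypothetical stand-ins chosen for brevity; a practical system would train a machine-learning classifier on many recorded sweeps.

```python
import math

# Hypothetical calibration data: the response amplitude recorded at each step
# of a frequency sweep, one reference profile per touch pose. Real profiles
# depend entirely on the sensor hardware and the object being touched.
TEMPLATES = {
    "no touch":    [0.90, 0.85, 0.80, 0.78, 0.75, 0.74],
    "one finger":  [0.80, 0.70, 0.62, 0.60, 0.58, 0.57],
    "two fingers": [0.75, 0.60, 0.50, 0.46, 0.44, 0.43],
    "full grasp":  [0.60, 0.45, 0.35, 0.30, 0.28, 0.27],
}


def distance(a, b):
    """Euclidean distance between two sweep profiles of equal length."""
    if len(a) != len(b):
        raise ValueError("profiles must cover the same frequency steps")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def classify_sweep(profile, templates=TEMPLATES):
    """Return the pose whose reference profile is closest to the measured one."""
    return min(templates, key=lambda pose: distance(profile, templates[pose]))


measured = [0.74, 0.58, 0.49, 0.47, 0.45, 0.42]
print(classify_sweep(measured))  # two fingers
```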

Voice interaction

DARPA funded research in this area as far back as the 1970s (!), but until recently that research found almost no practical use. Thanks to deep learning, voice recognition is now widespread. Today the biggest problem is no longer transcribing speech but getting devices to understand the meaning of what is said to them.

Hound handles context-aware speech recognition.

Eye interaction

Eye tracking systems measure either where the eye is looking (the point of gaze) or the movement of the eye relative to the head. As cameras and sensors get cheaper and virtual reality headsets become ever more popular, interacting with computers through eye tracking is more relevant than ever.

Eyefluence, a technology acquired by Google, lets you interact with virtual reality using the movements of your eyes.

Tobii, which went public in 2015, is researching eye tracking jointly with consumer electronics manufacturers.
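One widely used way to turn gaze into input is dwell-time selection: a target counts as clicked once the user has looked at it for long enough. The sketch below assumes the eye tracker already delivers timestamped gaze points in screen coordinates. The `Target` class, the 600 ms threshold and the sample data are illustrative assumptions, not part of any eye-tracking vendor's API.

```python
from dataclasses import dataclass


@dataclass
class Target:
    """A circular on-screen target (hypothetical UI element)."""
    name: str
    x: float
    y: float
    radius: float

    def contains(self, gx, gy):
        return (gx - self.x) ** 2 + (gy - self.y) ** 2 <= self.radius ** 2


def dwell_select(samples, targets, dwell_ms=600):
    """Return the name of the first target the gaze rests on for dwell_ms.

    samples: (timestamp_ms, x, y) gaze points in screen coordinates,
    in chronological order. Returns None if nothing was selected.
    """
    current, since = None, None
    for t, gx, gy in samples:
        hit = next((tg for tg in targets if tg.contains(gx, gy)), None)
        if hit is not current:
            # Gaze moved onto a different target (or off all targets): restart timer.
            current, since = hit, t
        elif hit is not None and t - since >= dwell_ms:
            return hit.name
    return None


buttons = [Target("Play", 100, 100, 40), Target("Stop", 300, 100, 40)]
gaze = [(0, 95, 102), (200, 101, 98), (400, 104, 97), (650, 99, 101)]
print(dwell_select(gaze, buttons))  # Play
```

The threshold is a trade-off: too short and every passing glance triggers an action, too long and selection starts to feel sluggish.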

Gesture Interaction

In my opinion, gesture tracking is the coolest form of human-computer interaction. I have personally researched various gesture tracking methods, and here are some of the technologies in use today:

Inertial Measurement Unit (IMU)

Data from an accelerometer, a gyroscope and a compass (all three or only some of them) is used to track gestures. Problems inherent in this method include the need for recalibration and a fairly loose correspondence between the actual movement and the measured data.

A study by the Future Interfaces Group at CMU achieved impressive classification results using data sampled at a high rate.
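Much of the recalibration problem comes from drift: a gyroscope reports angular rate, and integrating even a tiny constant bias produces an error that keeps growing. A common mitigation is sensor fusion. The sketch below is a single-axis complementary filter under stated assumptions (100 Hz sampling, a hypothetical 0.5 deg/s gyro bias, a noise-free accelerometer tilt reading); real trackers fuse all three axes, usually with more elaborate filters.

```python
def gyro_only(angle0, gyro_rates, dt):
    """Integrate the gyroscope's angular rate alone; any bias accumulates."""
    angle = angle0
    for rate in gyro_rates:
        angle += rate * dt
    return angle


def complementary(angle0, gyro_rates, accel_angles, dt, alpha=0.98):
    """Complementary filter: trust the gyro over short time scales and let the
    drift-free (but noisy) accelerometer tilt slowly pull the estimate back."""
    angle = angle0
    for rate, acc in zip(gyro_rates, accel_angles):
        angle = alpha * (angle + rate * dt) + (1 - alpha) * acc
    return angle


# Simulated 10 s of a device held still at a 10-degree tilt, sampled at 100 Hz.
dt = 0.01
steps = 1000
gyro = [0.5] * steps    # true rate is 0 deg/s, so this is pure (hypothetical) bias
accel = [10.0] * steps  # accelerometer tilt estimate; noise omitted for clarity

print(f"gyro only:     {gyro_only(10.0, gyro, dt):.2f} deg")
print(f"complementary: {complementary(10.0, gyro, accel, dt):.2f} deg")
```

Over the simulated 10 seconds the gyro-only estimate drifts by about 5 degrees, while the filtered estimate settles roughly a quarter of a degree above the true tilt. That small residual offset, proportional to the bias, is the price of such a simple filter.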

Infrared illuminators + cameras (depth sensor)

Many of the most impressive gesture tracking systems use a combination of high-resolution cameras, infrared illuminators and infrared cameras. Such a system works as follows: it projects thousands of small dots onto an object, and the way the dot pattern is distorted depends on how far away the object is (there are many other similar methods, for example time of flight (ToF), but I will not go into how they work). Variants of this technology are used in Kinect, Intel RealSense, Leap Motion and Google Tango.

Leap Motion is a device for tracking gestures.

Apple has taken a step in this direction with the front camera system that powers Face ID on the iPhone X.
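The dot-projection principle described above is triangulation: the projector and the camera sit a known distance apart, so the position of a dot in the camera image shifts depending on how far away the surface it lands on is. A minimal sketch under an idealized pinhole-camera model, with hypothetical focal length and baseline values, alongside the time-of-flight relation for comparison:

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Projected-pattern (structured light) triangulation, idealized pinhole model.

    focal_px:     camera focal length, in pixels
    baseline_m:   distance between the IR projector and the IR camera, in metres
    disparity_px: how far a projected dot appears shifted in the camera image
    The nearer the object, the larger the shift: Z = f * b / d.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_from_time_of_flight(round_trip_s):
    """Time of flight: the light goes to the object and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_s / 2


# Hypothetical sensor parameters: 580 px focal length, 7.5 cm baseline.
for d in (58, 29, 14.5):
    print(f"dot shifted {d:>4} px -> depth {depth_from_disparity(580, 0.075, d):.2f} m")
print(f"10 ns round trip -> depth {depth_from_time_of_flight(10e-9):.2f} m")
```

Because depth is inversely proportional to the dot's shift, a one-pixel error matters far more for distant objects than for near ones, which is why such sensors lose precision as objects move away.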

Electromagnetic field

In this method, a finger or another part of the body acts as a conductive object that distorts the electromagnetic field generated by a transmitting antenna, and the device detects that distortion with its receiving antennas.

The AuraSense smartwatch uses one transmitter and four antennas to track gestures.
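A simplified way to see how one transmitter and several antennas can locate a finger: in so-called shunt-mode sensing, a nearby grounded finger diverts part of the field, so the receiving antenna closest to the finger sees the largest drop in signal. The sketch below estimates a 2D position as a weighted centroid of those drops. The electrode layout, the signal values and the centroid method itself are assumptions for illustration; this is not a description of the AuraSense implementation.

```python
# Hypothetical layout: four receiving electrodes at the corners of a
# 40 x 40 mm area around a watch face, coordinates in millimetres.
RECEIVERS = {"SW": (0.0, 0.0), "SE": (40.0, 0.0), "NW": (0.0, 40.0), "NE": (40.0, 40.0)}


def estimate_position(baseline, reading):
    """Estimate where a finger hovers as a weighted centroid of the receivers.

    baseline: signal strength at each receiver with no hand nearby
    reading:  current signal strength at each receiver
    A grounded finger diverts part of the transmitted field, so the closer it
    is to a receiver, the more that receiver's signal drops below baseline.
    """
    drops = {k: max(baseline[k] - reading[k], 0.0) for k in RECEIVERS}
    total = sum(drops.values())
    if total == 0:
        return None  # no measurable disturbance, so no finger nearby
    x = sum(RECEIVERS[k][0] * d for k, d in drops.items()) / total
    y = sum(RECEIVERS[k][1] * d for k, d in drops.items()) / total
    return x, y


idle = {"SW": 1.0, "SE": 1.0, "NW": 1.0, "NE": 1.0}
now = {"SW": 0.9, "SE": 0.9, "NW": 0.9, "NE": 0.7}
print(estimate_position(idle, now))  # pulled toward the NE corner
```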

Radar

Radar has long been used to track the movement of all kinds of objects, from airplanes to ships and cars. Google ATAP has pulled off a remarkable feat of miniaturization, shrinking a radar onto a chip measuring 8 by 10 mm. This general-purpose chipset can be embedded in smartwatches, televisions and other devices to track movement.

Project Soli by Google ATAP.
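Two basic relationships let a radar sense motion: the round-trip delay of an echo gives the distance to the reflecting object, and the Doppler shift of the echo gives its speed toward or away from the antenna. The sketch below computes both. The 60 GHz carrier is an assumed value for a millimetre-wave gesture radar, and real gesture radars build considerably more signal processing and machine learning on top of these basics.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second
CARRIER_HZ = 60e9               # assumption: a carrier in the 60 GHz band


def range_from_delay(round_trip_s):
    """Distance to a reflector from the echo's round-trip time."""
    return SPEED_OF_LIGHT * round_trip_s / 2


def radial_velocity(doppler_shift_hz, carrier_hz=CARRIER_HZ):
    """Speed toward (+) or away from (-) the radar.

    For a monostatic radar the echo is shifted by f_d = 2 * v * f_c / c,
    so v = f_d * c / (2 * f_c).
    """
    return doppler_shift_hz * SPEED_OF_LIGHT / (2 * carrier_hz)


print(f"echo after 1 ns  -> hand at {range_from_delay(1e-9) * 100:.1f} cm")
print(f"+200 Hz Doppler  -> finger moving at {radial_velocity(200.0):.2f} m/s toward the chip")
```

At 60 GHz the wavelength is only about 5 mm, so even small finger movements produce measurable Doppler shifts.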

Muscular-Machine Interface from Thalmic Labs.

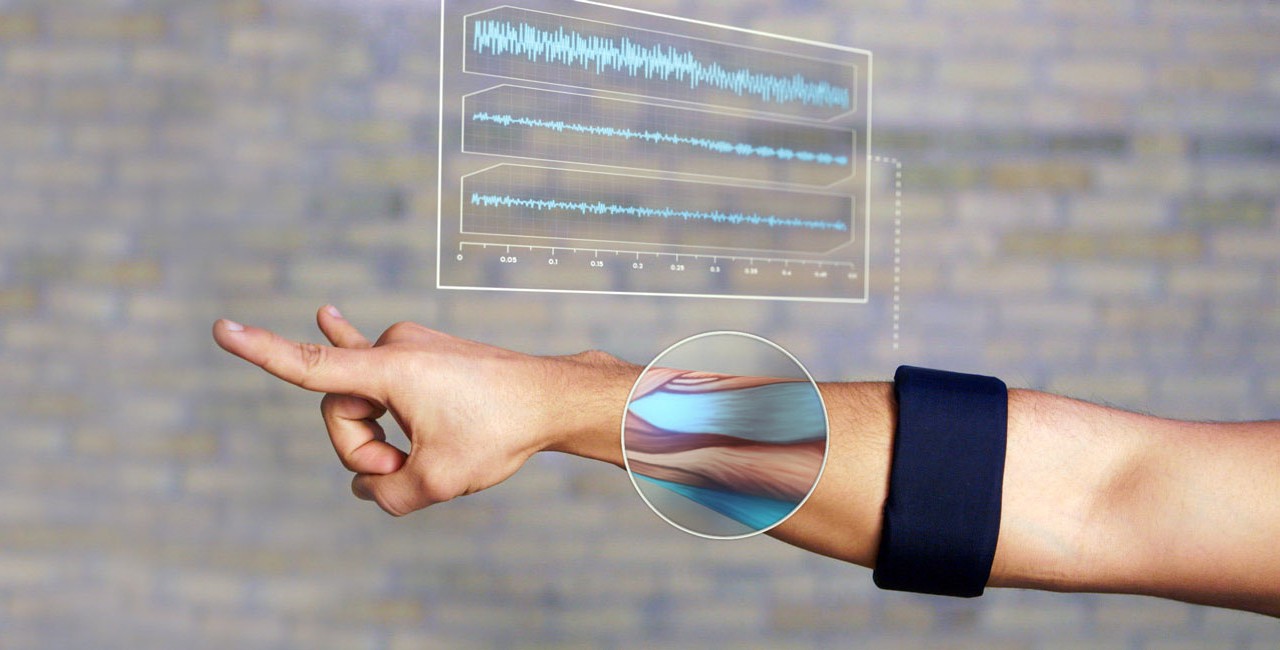

Biosignals

If these technologies have not left you slightly stunned yet, let's go further. All of the methods above measure and detect a side effect of our gestures.

Processing the signals that come directly from the nerves controlling our muscles is the next step toward better interaction between humans and computers.

Surface electromyography (sEMG) signals are recorded by sensors placed on the skin over the biceps and triceps or the forearm, and the signals from different muscle groups are sent to the tracking device. Although the sEMG signal is quite noisy, it is possible to detect certain movements from it.
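Although a raw sEMG trace is noisy and oscillates around zero, a standard first processing step makes muscle activity visible: compute a moving root-mean-square (RMS) envelope and look for where it rises above the resting level. The sketch below assumes a single channel, a hand-picked threshold and an idealized synthetic trace; recognizing specific gestures would additionally require features from several electrodes and a trained classifier.

```python
import math


def rms_envelope(signal, window):
    """Sliding-window root mean square of a raw, zero-mean sEMG trace."""
    return [
        math.sqrt(sum(s * s for s in signal[i:i + window]) / window)
        for i in range(len(signal) - window + 1)
    ]


def active_segments(envelope, threshold):
    """(start, end) index ranges where the envelope is at or above threshold."""
    segments, start = [], None
    for i, value in enumerate(envelope):
        if value >= threshold and start is None:
            start = i
        elif value < threshold and start is not None:
            segments.append((start, i))
            start = None
    if start is not None:
        segments.append((start, len(envelope)))
    return segments


# Idealized synthetic trace: low-level noise at rest, a burst of activity
# (samples 40-79), then rest again.
rest = [0.05, -0.05] * 20
burst = [1.0, -1.0] * 20
trace = rest + burst + rest
print(active_segments(rms_envelope(trace, window=10), threshold=0.5))
```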

Thalmic Labs was one of the first companies to develop a consumer sEMG device: the Myo armband.

Naturally, you would want to wear such a device on your wrist, but the muscles there lie deep beneath the skin, which makes it hard for the device to pick up a signal clean enough to track gestures.

CTRL Labs, which has been working in this field for a long time, has created a wrist-worn gesture tracking device based on sEMG signals. The device measures the sEMG signal and detects the neural drive that comes from the brain to produce the movement. This method is another step toward effective interaction between the computer and the human brain. With this company's technology, you can type a message on your phone with your hands in your pockets.

Neurocomputer interface

A lot has happened over the past year: DARPA invested $65 million in the development of neural interfaces; Elon Musk raised $27 million for Neuralink; Kernel founder Bryan Johnson invested $100 million in his project; and Facebook has started developing a neurocomputer interface (NCI). There are two types of NCI:

Non-invasive NCI

An electroencephalography (EEG) device picks up signals from sensors placed on the scalp.

Imagine a microphone mounted above a football stadium. You will not know what each person is saying, but by loud greetings and claps you can see if the goal has been achieved.

EEG-based interfaces cannot literally read your mind. The most widely used NCI paradigm is the P300 speller. Suppose you want to type the letter "R": the computer flashes different characters in random order, and as soon as you see "R" appear on the screen, your brain is "surprised" and produces a distinctive signal. It is a resourceful approach, but I would not say the computer "reads your thoughts": it cannot tell that you are thinking about the letter "R", only that your brain reacted when "R" appeared. It is more of a clever trick than mind reading.
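The P300 is a positive deflection in the EEG roughly 300 ms after a rare stimulus the person is waiting for. As the stadium analogy suggests, a single response is buried in background brain activity, so spellers average the recordings over many flashes. The sketch below assumes the EEG has already been cut into epochs time-locked to each flash; the 250-450 ms scoring window, the 100 Hz rate and the simulated signals are illustrative assumptions. Classic spellers flash whole rows and columns of a character grid and use a trained classifier rather than a fixed window.

```python
import random


def mean(values):
    return sum(values) / len(values)


def p300_score(epochs, rate_hz, window_ms=(250, 450)):
    """Average the epochs time-locked to one character's flashes, then take the
    mean amplitude in the window where a P300 is expected."""
    averaged = [mean(samples) for samples in zip(*epochs)]
    start = int(window_ms[0] * rate_hz / 1000)
    end = int(window_ms[1] * rate_hz / 1000)
    return mean(averaged[start:end])


def pick_character(epochs_by_char, rate_hz):
    """The character whose flashes evoked the strongest averaged response."""
    return max(epochs_by_char, key=lambda c: p300_score(epochs_by_char[c], rate_hz))


# Simulated data (all values hypothetical): 100 Hz sampling, 800 ms epochs,
# 20 flashes per character. Background noise (std 10 uV) swamps the 8 uV
# P300 bump in any single epoch; only flashes of "R" contain the bump.
rng = random.Random(42)
RATE_HZ = 100


def simulated_epoch(is_target):
    return [rng.gauss(0, 10) + (8 if is_target and 30 <= i < 45 else 0) for i in range(80)]


epochs = {c: [simulated_epoch(c == "R") for _ in range(20)] for c in "ABCR"}
print(pick_character(epochs, RATE_HZ))  # R
```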

Companies such as Emotiv, NeuroSky, Neurable and several others have developed EEG headsets for the consumer market. Facebook's Building 8 announced a brain typing project that uses a different method of detecting brain signals, functional near-infrared spectroscopy (fNIRS), with the goal of typing 100 words per minute.

Neurable's neural interface.

Invasive NCI

This is currently the most advanced form of human-machine interface. Invasive NCIs connect a person to a computer by attaching electrodes directly to the brain. However, proponents of this approach still face a number of problems they have yet to solve.

Challenges to be solved

While reading this article, you may have wondered: if all these technologies already exist, why do we still use the keyboard and mouse? The answer is that before a new human-computer interaction technology can become a consumer product, it has to meet several requirements.

Accuracy

Would you use a touchscreen as your main interface if it responded to only 7 out of 10 of your touches? An interface that is solely responsible for the interaction between the user and the device must be as accurate as possible.
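The 7-out-of-10 example actually understates the problem, because text entry chains many inputs together and errors compound. Assuming each input succeeds or fails independently (a simplification), the chance of entering a word with no miss is the per-input accuracy raised to the number of inputs:

```python
def word_success_rate(per_input_accuracy, word_length):
    """Chance that every input in a word registers correctly, assuming each
    input succeeds or fails independently of the others."""
    return per_input_accuracy ** word_length


for accuracy in (0.70, 0.90, 0.99):
    rate = word_success_rate(accuracy, 5)
    print(f"{accuracy:.0%} per touch -> {rate:.1%} of five-letter words typed without a miss")
```

At 70% per touch, only about 17% of five-letter words would come out right; even 99% leaves roughly one word in twenty with an error.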

Latency

Imagine typing a message on a keyboard and seeing the words appear on the screen only 2 seconds after you press the keys. Even a one-second delay badly hurts the user experience, and a human-machine interface with a delay of even a few hundred milliseconds is simply useless.

Training

A new human-machine interface should not require users to learn lots of special gestures. Imagine having to learn a separate gesture for every letter of the alphabet!

Feedback

The click of a key, the vibration of a phone, the short beep of a voice assistant: all of these tell the user that the feedback loop has closed (or that the requested action has been performed). The feedback loop is one of the most important aspects of any interface, yet users rarely even notice it. Our brain is wired to wait for confirmation that an action has been completed and has produced a result.

One reason it is so hard to replace the keyboard with a gesture tracking device is that such devices cannot explicitly confirm to the user that an action has been completed.

Researchers are already working hard on touchscreens that provide three-dimensional tactile feedback, which should take touch interaction to a new level. Apple, too, has done tremendous work in this direction.

What awaits us in the future

Given all of the above, it may seem that nothing new will replace the keyboard, at least in the near future. Still, I want to share my thoughts on the features the interface of the future should have:

- Multimodality. We will use different interfaces in different situations: the keyboard for entering text, touchscreens for drawing and design, voice recognition for talking to our digital personal assistants, radar-based gesture tracking while driving, muscle-machine interfaces for games and virtual reality, and neurocomputer interfaces for picking the music that best suits our mood.

- Context Recognition. For example, you read an article on your laptop about forest fires in northern California and then ask a voice assistant through your smart headphones: "How strong is the wind in this area right now?" The assistant must understand that you are asking about the area where the fires are burning.

- Automation. As AI develops, computers will get better at predicting what you intend to do, so you will not even have to give them commands. Your computer will know what music to play when you wake up, so you will no longer need an interface to find and play your favorite track.
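Resolving the question in the context-recognition example comes down to carrying one piece of context (the place the user was just reading about) from one device to another and substituting it for a reference like "this area". A deliberately naive sketch, assuming a shared context store that both the laptop and the headphones can access; the `ContextTracker` class, the toy place list and the regular expression are hypothetical, and a real assistant would rely on entity recognition and dialogue-state tracking rather than string matching.

```python
import re

# Toy gazetteer (hypothetical); a real assistant would use entity recognition.
KNOWN_PLACES = ["northern California", "southern California", "Nevada"]
# Phrases that point back at a place the user has just seen.
DEICTIC = re.compile(r"\b(this|that) (area|region)\b", re.IGNORECASE)


class ContextTracker:
    """Hypothetical shared context store that every device (laptop, headphones)
    writes to and reads from."""

    def __init__(self):
        self.last_place = None

    def observe(self, text):
        """Remember the place mentioned last in content the user is reading."""
        lowered = text.lower()
        hits = [(lowered.rfind(p.lower()), p) for p in KNOWN_PLACES if p.lower() in lowered]
        if hits:
            self.last_place = max(hits)[1]

    def resolve(self, query):
        """Replace "this area"/"that region" with the remembered place, if any."""
        if self.last_place is None:
            return query
        return DEICTIC.sub(lambda _match: self.last_place, query)


ctx = ContextTracker()
ctx.observe("Strong winds are spreading forest fires in northern California.")  # on the laptop
print(ctx.resolve("How strong is the wind in this area right now?"))           # via headphones
# How strong is the wind in northern California right now?
```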
