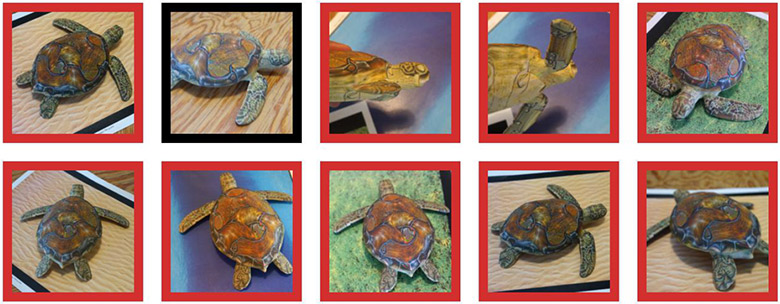

The first 3D adversarial examples for deceiving neural networks

A 3D-printed turtle is recognized by the neural network as a turtle (green outline), a rifle (red outline), or some other object (black outline)

It has long been known that small, targeted changes to an image can "break" a machine learning system so that it classifies the image as something completely different. Such "Trojan" images are called adversarial examples, and they are one of the known limitations of deep learning.

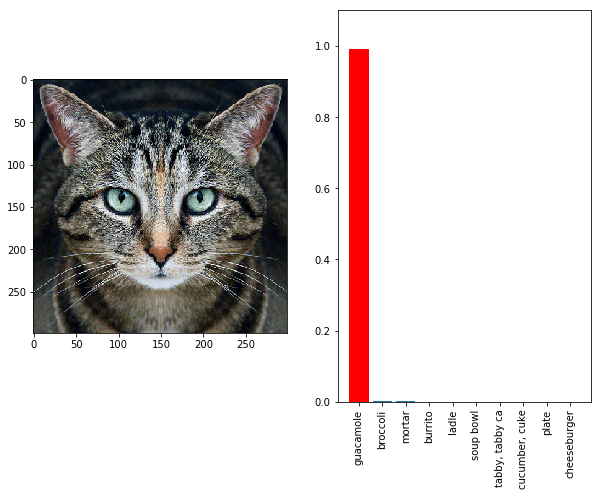

They work simply: you perform gradient ascent in the input space to generate a pattern that maximizes the classifier's predicted score for a chosen class. For example, if you take a photo of a panda and add a "gibbon" gradient to it, the neural network is forced to classify the panda as a gibbon. A turtle can be passed off as a rifle (see the illustration above). A cat can turn into guacamole (see below). The choice of classes does not matter: any object can become any other object in the eyes of machine intelligence, because AI has its own kind of "vision", different from that of a human.
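The gradient ascent described above can be sketched on a toy model. This is a minimal illustration, not the researchers' code: a random linear softmax classifier stands in for a real network such as InceptionV3, and the names `targeted_attack`, `eps`, and `step` are hypothetical. The input is nudged in the direction that raises the log-probability of the target class, while every pixel stays within `eps` of its original value.

```python
import numpy as np

def softmax(z):
    z = z - z.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def targeted_attack(W, b, x, target, eps=0.1, step=0.01, n_steps=50):
    """Signed gradient ascent on log p(target | x) for a linear softmax model."""
    x_adv = x.copy()
    for _ in range(n_steps):
        p = softmax(W @ x_adv + b)
        # Gradient of log p[target] with respect to the input
        grad = W[target] - p @ W
        x_adv = x_adv + step * np.sign(grad)
        # Keep the perturbation small and the pixels in the valid range
        x_adv = np.clip(x_adv, x - eps, x + eps)
        x_adv = np.clip(x_adv, 0.0, 1.0)
    return x_adv

# Toy stand-in for a trained classifier (assumption: random weights, 3 classes)
rng = np.random.default_rng(0)
n_classes, n_pixels = 3, 64 * 64
W = rng.normal(scale=0.05, size=(n_classes, n_pixels))
b = np.zeros(n_classes)
x = rng.uniform(0.0, 1.0, size=n_pixels)  # a fake "image"

original = int(np.argmax(W @ x + b))
target = (original + 1) % n_classes       # any class other than the original
x_adv = targeted_attack(W, b, x, target)

adv_class = int(np.argmax(W @ x_adv + b))
max_change = float(np.max(np.abs(x_adv - x)))
print("original:", original, "target:", target, "after attack:", adv_class)
print("largest per-pixel change:", max_change)
```

With a real network the gradient would come from backpropagation instead of the closed-form expression used here, but the loop has the same shape.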

Until now, such gradient-based perturbations worked only on 2D images and were very sensitive to any distortion.

A photo of a cat is classified as guacamole by the InceptionV3 classifier

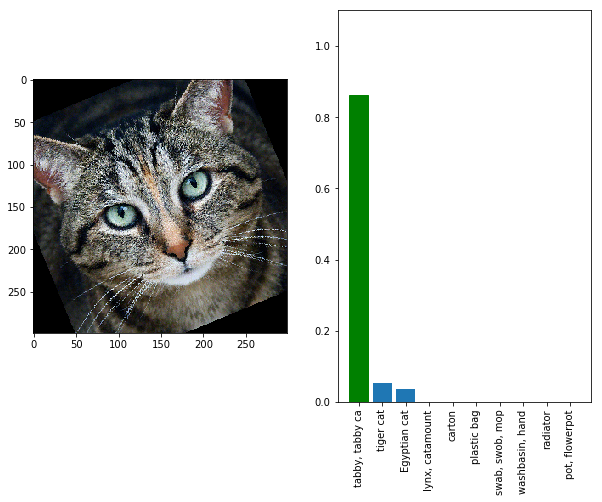

Look at the cat from a different angle or from a different distance, and the neural network sees a cat again rather than guacamole.

The photo of the cat is classified as a cat again by InceptionV3 if it is rotated slightly

In other words, such adversarial examples are not effective in the real world, because of zoom, digital camera noise, and the other distortions that inevitably arise there. That is unacceptable if the goal is to fool computer vision systems reliably and consistently in the physical world. But now there is hope that this can be done. Researchers at the Massachusetts Institute of Technology and the independent research group LabSix (made up of MIT students and alumni) have created the first algorithm in the world that generates adversarial examples in 3D. For example, their video shows a turtle that the Google InceptionV3 classifier consistently recognizes as a rifle from almost any angle.

The algorithm can generate not only turtles but arbitrary models. As another example, the researchers printed a baseball that is classified as an espresso from any angle, and they created a large number of other models as well: optical illusions for AI, in a sense.
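The article does not describe how the algorithm works. One way to make an adversarial example survive distortions, and the idea behind the Expectation Over Transformation approach named in the cited preprint, is to optimize the target-class score averaged over many random transformations instead of over a single fixed view. The sketch below is a toy illustration of that idea only. It assumes a random linear classifier and a hypothetical `sample_transform` that applies random contrast, a brightness shift, and sensor noise, not the 3D rendering used by the researchers.

```python
import numpy as np

def softmax(z):
    z = z - z.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def sample_transform(rng, n_pixels, noise_std=0.05):
    """Hypothetical distortion: the classifier sees gain * image + offset."""
    gain = rng.uniform(0.7, 1.3)                        # contrast change
    shift = rng.uniform(-0.1, 0.1)                      # brightness shift
    noise = rng.normal(scale=noise_std, size=n_pixels)  # sensor noise
    return gain, shift + noise

def robust_attack(W, b, x, target, rng, eps=0.1, step=0.01,
                  n_steps=50, n_samples=16):
    """Signed gradient ascent on the average log p(target) over transforms."""
    x_adv = x.copy()
    for _ in range(n_steps):
        grad = np.zeros_like(x)
        for _sample in range(n_samples):
            gain, offset = sample_transform(rng, x.size)
            p = softmax(W @ (gain * x_adv + offset) + b)
            # Chain rule: the transform scales the input gradient by `gain`
            grad += gain * (W[target] - p @ W)
        x_adv = np.clip(x_adv + step * np.sign(grad), x - eps, x + eps)
        x_adv = np.clip(x_adv, 0.0, 1.0)
    return x_adv

def success_rate(W, b, image, target, rng, n_trials=200):
    """Fraction of fresh random transforms under which `target` is predicted."""
    hits = 0
    for _ in range(n_trials):
        gain, offset = sample_transform(rng, image.size)
        hits += int(np.argmax(W @ (gain * image + offset) + b) == target)
    return hits / n_trials

# Toy stand-in for a trained classifier (assumption: random weights, 3 classes)
rng = np.random.default_rng(0)
n_classes, n_pixels = 3, 64 * 64
W = rng.normal(scale=0.05, size=(n_classes, n_pixels))
b = np.zeros(n_classes)
x = rng.uniform(0.0, 1.0, size=n_pixels)

original = int(np.argmax(W @ x + b))
target = (original + 1) % n_classes
x_adv = robust_attack(W, b, x, target, rng)

rate = success_rate(W, b, x_adv, target, rng)
max_change = float(np.max(np.abs(x_adv - x)))
print("share of distorted views classified as the target:", rate)
```

A linear toy model is far more forgiving than a deep network, so this shows only the structure of the optimization: sample transformations, average the gradients, and take a small constrained step.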

The deception works even when the object in the photograph appears in a semantically irrelevant context. Clearly, the neural network never saw a rifle under water or an espresso in a catcher's mitt during training.

Adversarial examples in 3D: a turtle that looks like a rifle to the InceptionV3 neural network, and a baseball that looks like an espresso

Although the method is tailored to a particular neural network, commenters on earlier papers on this topic have pointed to the researchers' remark that the attack will most likely affect many models trained on the same data set, including even linear classifiers. So to mount an attack, it is enough to have a hypothesis about which data the model could have been trained on.

“In concrete terms, this means it is likely possible to make a roadside house-for-sale sign that looks entirely ordinary to human drivers, but that a self-driving car would see as a pedestrian who has suddenly appeared on the sidewalk,” the researchers write. “Adversarial examples are a practical concern that must be considered as neural networks become more common (and dangerous).”

To protect against such attacks, future AI developers could keep the architecture of their neural networks secret and, most importantly, the data set used for training.

The paper was published on October 30, 2017 on the preprint server arXiv.org (arXiv:1707.07397v2).
