Details of creating a bot for Dota 2

Our bot's results show that self-play can dramatically improve the performance of machine learning systems, taking them from far below human level to superhuman level, given sufficient computing power. Within a month, our system went from barely matching a good player to beating the best professionals, and it has continued to improve since then. The quality of a supervised deep learning system is limited by its training data set, but for systems trained by self-play, the available data improves automatically as the agent gets better.

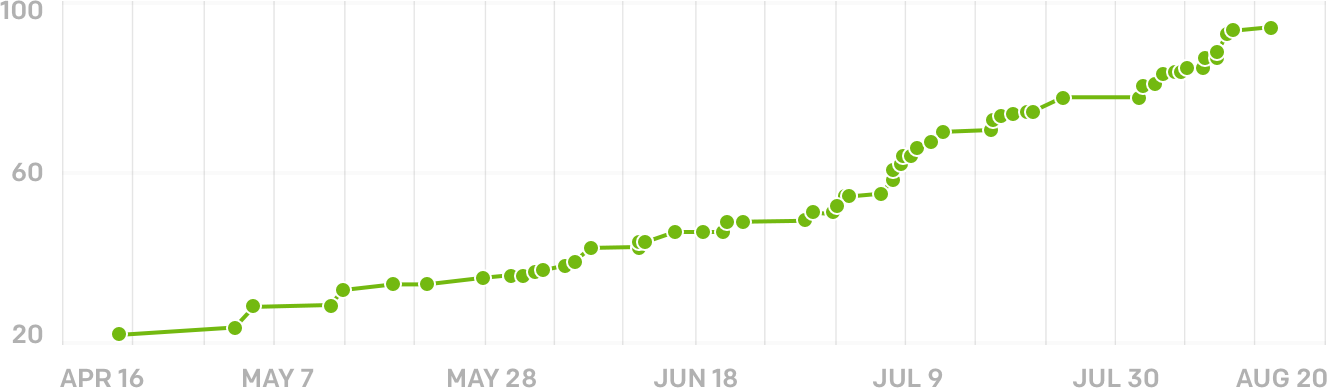

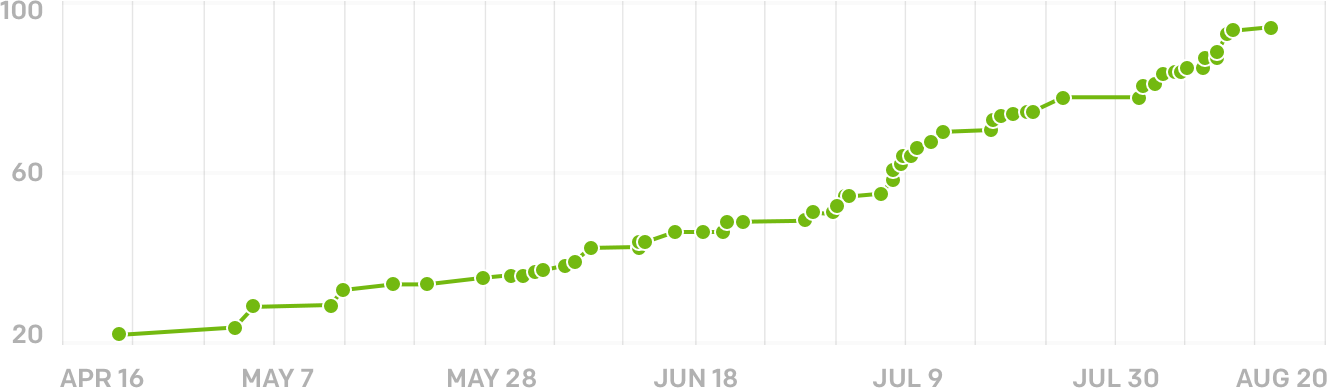

TrueSkill rating (similar to the Elo rating in chess) of our bot over time, computed by simulating games between bots.
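To illustrate how a rating can be computed from simulated games between bot versions, here is a minimal sketch. It uses the simpler Elo update rather than TrueSkill, and the hidden "true strength" values that decide each simulated game are hypothetical stand-ins for actually playing the bots against each other. The function names, the K-factor, and the starting rating of 1000 are all assumptions, not details from the project.

```python
import random


def expected_score(r_a, r_b):
    """Elo-model probability that a player rated r_a beats one rated r_b."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def update_elo(r_a, r_b, score_a, k=16.0):
    """Return both ratings after one game; score_a is 1.0 if A won, else 0.0."""
    delta = k * (score_a - expected_score(r_a, r_b))
    return r_a + delta, r_b - delta


def rate_by_simulation(true_strength, n_games=5000, seed=0):
    """Estimate ratings for bot versions from randomly paired simulated games.

    true_strength maps a version name to a hidden strength (hypothetical);
    it is used only to sample who wins each simulated game.
    """
    rng = random.Random(seed)
    names = list(true_strength)
    ratings = {name: 1000.0 for name in names}
    for _ in range(n_games):
        a, b = rng.sample(names, 2)
        p_a = expected_score(true_strength[a], true_strength[b])
        score_a = 1.0 if rng.random() < p_a else 0.0
        ratings[a], ratings[b] = update_elo(ratings[a], ratings[b], score_a)
    return ratings
```

Stronger versions end up with higher estimated ratings, which is the kind of curve the figure tracks over training time.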

The project developed as follows. For perspective, 15% of players are below 1.5K MMR; 58% are below 3K; 99.99% are below 7.5K.

• May 1: First reinforcement learning results in a simple Dota environment, where a Drow Ranger learns to fight a hard-coded Earthshaker.

• May 8: A 1.5K MMR tester says he is improving faster than the bot.

• Early June: Beat the 1.5K MMR tester.

• June 30: Winning most games against a 3K MMR tester.

• July 8: First win, by a narrow margin, against a 7.5K MMR semi-professional tester.

• August 7: Beat Blitz (6.2K, former professional) 3-0, Pajkatt (8.5K, professional) 2-1, and CC&C (8.9K, professional) 3-0. They all agreed that SumaiL would figure out how to beat it.

• August 9: Beat Arteezy (10K, professional, one of the best players) 10-0. He said that SumaiL could figure out this bot.

• August 10: Beat SumaiL (8.3K, professional, top 1v1 player) 6-0. He said that the bot is unbeatable. He then played the August 9 version of the bot and went 2-1.

• August 11: Beat Dendi (7.3K, professional, former world champion) 2-0. This version has a 60% win rate against the August 10 version.

Game vs SumaiL

Task

The full game is played 5v5, but 1v1 is also played in some tournaments. Our bot played under standard tournament rules; we did not add any AI-specific simplifications.

The bot worked with the following interfaces:

• Observations: bot API features, designed to expose the same information a human player can see, relating to heroes, other units in the game, and the terrain near the hero. The game is partially observable.

• Actions: available through the bot API and chosen at a frequency comparable to a human's, including moving to a location, attacking a unit, and using an item.

• Feedback: the bot receives a reward for winning, as well as for simple metrics such as health and last hits.
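As a rough illustration of the feedback signal described above, the sketch below combines a win bonus with changes in health and last hits between two consecutive steps. The `Snapshot` type, the function name, and all three weights are hypothetical; the actual reward terms and their scaling are not given here.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """Hypothetical per-step summary of the bot's state."""
    health: float       # hero health as a fraction of maximum, 0.0 to 1.0
    last_hits: int      # cumulative last hits so far
    won: bool = False   # True only on the final step of a won game


def shaped_reward(prev, curr, w_win=1.0, w_health=0.1, w_last_hit=0.05):
    """Reward for one step: win bonus plus weighted health and last-hit deltas.

    The weights are illustrative assumptions, not values from the project.
    """
    reward = w_health * (curr.health - prev.health)
    reward += w_last_hit * (curr.last_hits - prev.last_hits)
    if curr.won:
        reward += w_win
    return reward
```

Rewarding deltas rather than absolute values means the bot is paid once for each last hit and penalized once for each point of health lost, instead of being rewarded repeatedly for a state it already reached.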

We whitelisted a few dozen item builds that the bot could use and chose one for evaluation. We also trained the initial creep block separately, using traditional reinforcement learning techniques, because it happens before the opponent appears.

Bot plays against Arteezy

The International Tournament

Our approach, which combines self-play with learning from outside input, allowed us to improve the bot significantly between Monday and Thursday, while the tournament was under way. On Monday night, Pajkatt won using an unusual item build. We added this build to the list of builds available to the bot.

At around 1 pm on Wednesday, we tested the latest version of the bot. It lost a lot of health in the first wave. We thought we would need to roll back, but then we noticed that its play after that was excellent, and that the first-wave behavior was just bait for the other bot. Further self-play fixed the problem, as the bot learned to counter the baiting strategy. In the meantime, we stitched the new bot together with the Monday bot, using the Monday bot for the first wave only, and finished just 20 minutes before Arteezy arrived.
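The stitching described above can be pictured as a wrapper that hands control from one policy to another at a cut-off point. This is only a sketch under stated assumptions: the `game_time_s` observation key, the 60-second cut-off, and the idea of switching on game time at all are hypothetical, since the text does not say how the end of the first wave was detected.

```python
from typing import Callable, Dict

# A policy maps an observation to an action name (both simplified here).
Policy = Callable[[Dict], str]


def stitch(first_wave_policy: Policy, later_policy: Policy,
           first_wave_end_s: float = 60.0) -> Policy:
    """Use one policy during the first wave and another afterwards.

    first_wave_end_s is a hypothetical cut-off in seconds of game time.
    """
    def combined(obs: Dict) -> str:
        if obs["game_time_s"] < first_wave_end_s:
            return first_wave_policy(obs)
        return later_policy(obs)

    return combined


# Usage with two stand-in policies:
monday_bot = lambda obs: "monday_action"
wednesday_bot = lambda obs: "wednesday_action"
tournament_bot = stitch(monday_bot, wednesday_bot)
```

A hard switch like this keeps both policies untouched, which matters when there is no time to retrain before the next match.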

After the matches with Arteezy, we updated the creep-blocking model, which increased TrueSkill by one point. Further training before the match with SumaiL on Thursday increased TrueSkill by two more points. SumaiL pointed out that the bot had learned to cast razes (its damaging spells) out of the enemy's sight. This was due to a mechanic we had not known about: abilities cast outside the enemy's vision do not give the enemy a Magic Wand charge.

Arteezy also played a match against our 7.5K tester. Arteezy won the game, but our tester managed to surprise him with a strategy he had picked up from the bot. Arteezy later noted that Paparazi had once used this strategy against him, and that it is rarely used.

Pajkatt beats the Monday bot. He lures the bot in and then uses regeneration.

Bot vulnerabilities

Although SumaiL called the bot "unbeatable", it can still get confused in situations that differ too much from what it has seen. We set it up at one of the events held at the tournament, where players played over 1,000 games trying to beat the bot by any means possible.

The successful exploits fall into three categories:

• Creep pulling: you can repeatedly draw the lane creeps into chasing you as soon as they spawn. You end up with several dozen creeps chasing you around the map, and the bot's tower eventually falls to the opposing creeps.

• Orb of Venom + Wind Lace: these give you a movement speed advantage over the bot at level 1 and let you deal some quick damage.

• Level 1 raze: this requires skill, but several 6-7K MMR players were able to kill the bot at level 1 by landing 3-5 razes in a short time.

Fixing these problems for 1v1 matches would be similar to fixing the Pajkatt bug. But in 5v5 matches such situations are not exploits at all, and we will need a system that can cope with strange situations it has never seen before.

Infrastructure

We are not yet ready to discuss the bot's internals; the team is focused on solving 5v5 first.

The first step of the project was figuring out how to run Dota 2 in the cloud on a physical GPU. The game gave an obscure error message there. But when we started it on Greg's GPU desktop (the same desktop that was brought on stage during the show), we noticed that Dota booted with a monitor connected and gave the same error message without one. So we configured our virtual machines to pretend that a physical monitor was attached.

At that time, Dota did not support dedicated servers, which meant that running at scale without a GPU was possible only with a very slow software renderer. We therefore created a shim that stubs out most OpenGL calls, except those needed to boot.

At the same time, we wrote a scripted bot, both as a baseline for comparison (especially since the built-in bots do not work well in 1v1) and to understand the semantics of the bot API. The scripted bot reaches about 70 last hits in 10 minutes on an empty lane, but it still loses to reasonably good human players. Our best 1v1 bot reaches about 97 (it destroys the tower before the 10 minutes are up, so we can only extrapolate), and the theoretical maximum is 101.
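To put the last-hit figures above side by side, the sketch below expresses each as a fraction of the theoretical maximum of 101, and shows one simple way to extrapolate a count when the game ends before the 10-minute mark. The linear extrapolation is an assumption for illustration; the text does not say which method was actually used, and the function names are hypothetical.

```python
THEORETICAL_MAX = 101  # theoretical maximum last hits in 10 minutes (from the text)


def fraction_of_max(last_hits, maximum=THEORETICAL_MAX):
    """Share of the theoretical maximum that a last-hit count represents."""
    return last_hits / maximum


def extrapolate_last_hits(last_hits, elapsed_s, horizon_s=600.0):
    """Linearly scale a last-hit count to a 10-minute horizon.

    Assumes a constant last-hit rate, which is a simplification.
    """
    return last_hits * horizon_s / elapsed_s


scripted = fraction_of_max(70)   # scripted bot
learned = fraction_of_max(97)    # best 1v1 bot (extrapolated figure)
print(f"scripted bot: {scripted:.1%} of max, learned bot: {learned:.1%} of max")
```

On these figures the scripted bot captures roughly 69% of the available last hits and the learned bot roughly 96%.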

Bot plays against SirActionSlacks. The strategy of distracting the bot with a crowd of couriers did not work.

Five versus five

Playing 1v1 is a difficult task, but 5v5 is an ocean of complexity. We will need to push the limits of what AI can do in order to handle it.

A well-established place to start is behavioral cloning. Dota hosts about a million public games per day. Replays of these matches are stored on Valve's servers for two weeks. We have been downloading every expert-level replay since last November and have collected a data set of 5.8 million games (each game lasts about 45 minutes and has 10 players). We use OpenDota to find these replays and have donated $12,000 to the project (ten times what they hoped to raise in a year) to support it.
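Behavioral cloning treats recorded expert play as supervised data: given a state, predict the action the expert took. The sketch below shows the idea in its simplest tabular form, where the cloned policy picks the action experts chose most often in each state. The state and action labels are hypothetical, and a real system working from replays would use a learned model over rich observations rather than a lookup table.

```python
from collections import Counter, defaultdict


def clone_behavior(demonstrations):
    """Build a policy that imitates the most frequent expert action per state.

    demonstrations is an iterable of (state, action) pairs taken from
    recorded expert games; both are plain hashable labels in this sketch.
    """
    counts = defaultdict(Counter)
    for state, action in demonstrations:
        counts[state][action] += 1
    # For each state, keep the action the experts chose most often.
    return {state: c.most_common(1)[0][0] for state, c in counts.items()}


# Usage with a few made-up demonstration pairs:
demos = [
    ("low_health", "retreat"),
    ("low_health", "retreat"),
    ("low_health", "attack"),
    ("enemy_creep_low", "last_hit"),
]
policy = clone_behavior(demos)
```

Because the cloned policy only knows states that appear in the demonstrations, a large and varied replay set matters: states the experts never visited have no entry at all.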

We still have many more ideas, and we are hiring engineers (interested in machine learning, but not necessarily experts) and researchers to help us. We thank Microsoft Azure and Valve for their support in this work.
